Taking inspiration from OpenAI’s o1 model, Hugging Face researchers have demonstrated that intelligently scaling compute during inference can significantly improve the performance of open source language models. Their approach combines different search strategies and reward models.

Scaling compute during pre-training has been critical to the development of large language models (LLMs) in recent years, but the required resources have become increasingly expensive, and researchers are exploring alternative approaches. According to the Hugging Face researchers, scaling compute at inference time offers a promising solution: dynamic inference strategies let models spend more time working on complex tasks.

While “test-time compute scaling” is nothing new and has been a key factor in the success of AI systems like AlphaZero, OpenAI’s o1 was the first to clearly demonstrate how much language model performance can improve when the model is given more time to “think” about difficult tasks. However, there are several possible ways to implement this, and it remains unclear which one OpenAI uses.

From basic to complex search strategies

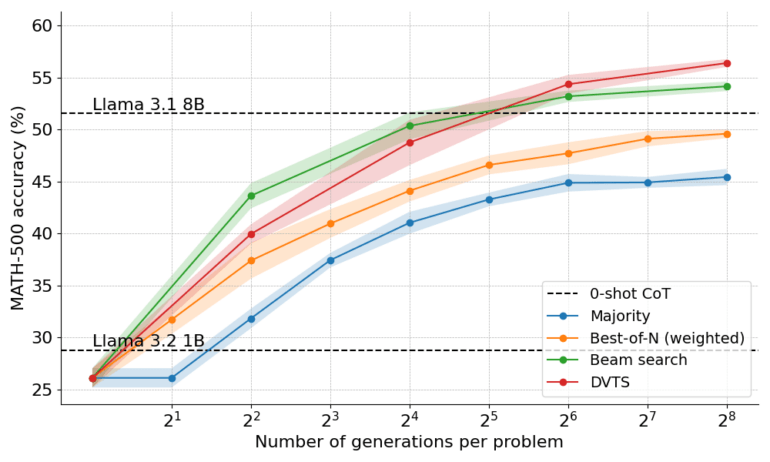

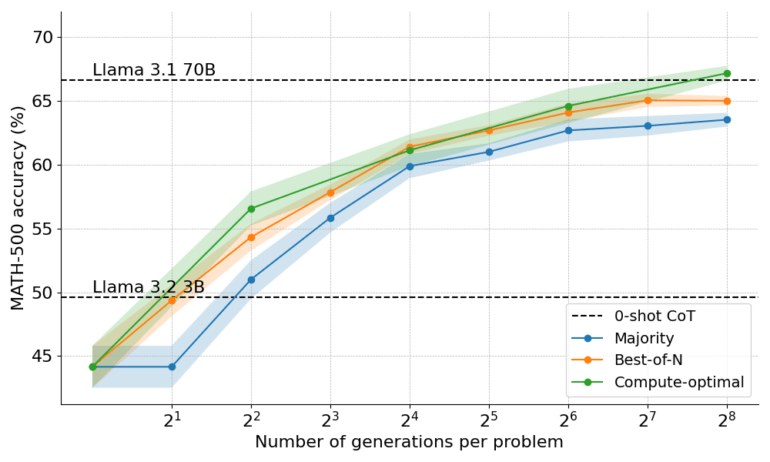

The researchers examined three main search-based approaches. The “Best-of-N” method generates multiple candidate solutions and selects the best one. Beam search uses a process reward model (PRM) to explore the solution space systematically, step by step. The newly developed “Diverse Verifier Tree Search” (DVTS) additionally increases the diversity of the solutions found.
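To make the three strategies more concrete, here is a minimal Python sketch on a toy task. It is not Hugging Face’s implementation: the “model” (`propose_steps`) and the “PRM” (`prm_score`) are hypothetical stand-ins, the task is to reproduce a hidden digit sequence, and the PRM simply scores a partial solution by the fraction of steps that are correct so far. Best-of-N here ranks complete solutions with the same toy scorer, and `n_beams`/`width` are illustrative parameter names for one common formulation of beam search.

```python
import random
from typing import List

# Hypothetical toy task: the "correct" reasoning chain is this digit sequence.
TARGET: List[int] = [3, 1, 4, 1, 5]


def propose_steps(prefix: List[int], k: int, rng: random.Random) -> List[int]:
    """Stand-in for an LLM: propose k distinct candidate next steps (digits 0-9).
    A real model would condition on `prefix`; this toy ignores it."""
    return rng.sample(range(10), min(k, 10))


def prm_score(steps: List[int]) -> float:
    """Stand-in PRM: fraction of the steps so far that are correct."""
    if not steps:
        return 0.0
    return sum(a == b for a, b in zip(steps, TARGET)) / len(steps)


def best_of_n(n: int, rng: random.Random) -> List[int]:
    """Sample n complete solutions independently and keep the highest-scoring one."""
    candidates = [[rng.randrange(10) for _ in TARGET] for _ in range(n)]
    return max(candidates, key=prm_score)


def beam_search(n_beams: int, width: int, rng: random.Random) -> List[int]:
    """Step-level search: expand every kept beam with `width` proposals,
    then keep the n_beams partial solutions the PRM rates highest."""
    beams: List[List[int]] = [[]]
    while len(beams[0]) < len(TARGET):
        children = [b + [s] for b in beams for s in propose_steps(b, width, rng)]
        children.sort(key=prm_score, reverse=True)
        beams = children[:n_beams]
    return beams[0]


def dvts(n_subtrees: int, width: int, rng: random.Random) -> List[int]:
    """Simplified DVTS-style sketch: split the budget into independent subtrees,
    each searched greedily with a single beam, so one dominant prefix cannot
    take over every beam; return the best leaf across subtrees."""
    leaves = [beam_search(1, width, rng) for _ in range(n_subtrees)]
    return max(leaves, key=prm_score)
```

The design difference is where the verifier is consulted: Best-of-N scores only finished solutions, while beam search and the DVTS-style variant prune after every step, and DVTS trades some pruning pressure for independent, more diverse search paths.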


The test results are impressive. A Llama model with just 1 billion parameters matched the performance of a model 8 times larger. On the math task, it reached almost 55% accuracy, which Hugging Face says is close to the average performance of computer science PhD students.

The 3-billion-parameter model even outperformed the 70-billion-parameter Llama 3.1, a model 22 times larger, thanks to the team’s proposed compute-optimal strategy, which selects the best search strategy for each compute budget.
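The idea of picking the best search strategy per compute budget can be sketched as a simple lookup built from offline measurements. This is a hedged illustration, not the team’s code: `compute_optimal_plan` and the `evaluate` callback are hypothetical names, and `evaluate(strategy, budget)` is assumed to return the accuracy a strategy achieves on a held-out problem set at that budget (for example, N generations).

```python
from typing import Callable, Dict, Iterable, Sequence


def compute_optimal_plan(
    strategies: Sequence[str],
    budgets: Iterable[int],
    evaluate: Callable[[str, int], float],
) -> Dict[int, str]:
    """For every compute budget, choose the strategy with the highest accuracy
    reported by `evaluate`; ties go to the strategy listed first."""
    plan: Dict[int, str] = {}
    for budget in budgets:
        scores = {s: evaluate(s, budget) for s in strategies}
        plan[budget] = max(scores, key=scores.get)
    return plan
```

At inference time, the plan is then used as a lookup: given the available budget, run whichever strategy performed best at that budget during evaluation.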

In both cases, the team compared a small model using these inference techniques against a larger model that did not use them.

Verifiers play an important role

Verifiers, or reward models, play a central role in all of these approaches. They evaluate the quality of the generated solutions and steer the search toward promising candidates. However, according to the team, benchmarks such as ProcessBench show that current verifiers still have weaknesses, particularly in robustness and versatility.
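How a verifier’s output turns into a final choice is not spelled out here, so the following is a hedged sketch under stated assumptions: a PRM is assumed to return one score per reasoning step, those step scores are collapsed into one solution score (last step, minimum, or product), and scores are summed over candidates that reach the same final answer, a weighted majority vote. The function names are hypothetical, and this is one plausible way to use verifier scores, not necessarily the exact setup Hugging Face used.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def solution_score(step_scores: Sequence[float], how: str = "last") -> float:
    """Collapse per-step PRM scores into a single score for the whole solution."""
    if how == "last":
        return step_scores[-1]
    if how == "min":
        return min(step_scores)
    if how == "prod":
        return math.prod(step_scores)
    raise ValueError(f"unknown aggregation: {how}")


def weighted_vote(candidates: List[Tuple[str, Sequence[float]]], how: str = "last") -> str:
    """Sum solution scores over candidates sharing a final answer and return
    the answer with the largest total (weighted majority vote)."""
    totals: Dict[str, float] = defaultdict(float)
    for answer, step_scores in candidates:
        totals[answer] += solution_score(step_scores, how)
    return max(totals, key=totals.get)


candidates = [("42", [0.9, 0.8]), ("41", [0.9, 0.95]), ("42", [0.7, 0.3])]
print(weighted_vote(candidates))  # prints 42
```

In this example the single highest-scoring candidate answers “41”, but two candidates agree on “42” and their combined score (0.8 + 0.3) outweighs it, which is why aggregating across candidates can be more robust to one overconfident verifier score.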

Improving verifiers is therefore an important starting point for future research. The ultimate goal, however, is a model that can autonomously verify its own output, something the team suggests OpenAI’s o1 already does.


More information and some of the tools used are available at Hugging Face.