California just passed landmark legislation to increase transparency and accountability in artificial intelligence (AI). From January 1, 2026, companies developing AI systems will have to follow new rules designed to protect consumers and ensure responsible AI development.

Governor Gavin Newsom signed two major pieces of legislation that change how AI companies operate in California: Assembly Bill 2013 (AB 2013) and Senate Bill 942 (SB 942).

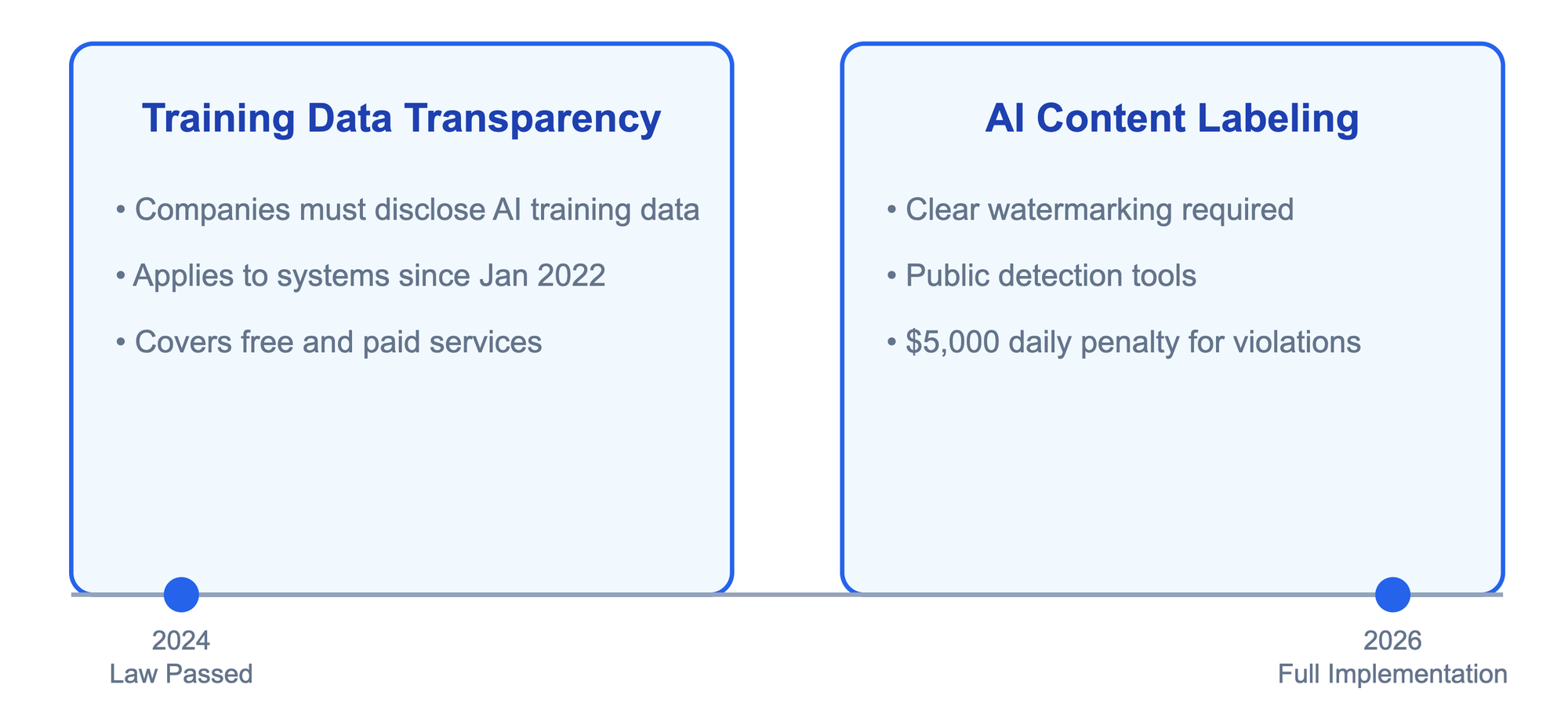

Assembly Bill 2013 (AB 2013): Generative AI Training Data Transparency Act

AB 2013 requires AI companies to be open about the data they use to train their AI systems. This means companies must explain what information they used to teach the AI to produce text, images, video, or audio.

Think of it like reading the ingredient list on a food package. Just as consumers want to know what’s in their food, they will now be able to know what data goes into the AI systems they use. This transparency requirement applies to both free and paid AI services, including those released or significantly modified on or after January 1, 2022.

Senate Bill 942 (SB 942): California AI Transparency Act

SB 942 focuses on making AI-generated content easy to identify. Companies must add clear labels or “watermarks” to AI-generated content so that it can be distinguished from human-generated content. This is similar to products being labeled “Made in the USA” or “Organic.” Under the law, AI-generated content will carry a marker indicating that it’s “Made by AI.”

To help people verify AI-generated content, the law requires the development of public tools that can detect AI-generated material. These tools will be freely available to anyone who wants to check whether what they are looking at was created by AI.
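As a rough illustration of the labeling and verification idea described above, here is a minimal Python sketch. It is not the format the law prescribes: the field names, the `[Made by AI]` prefix, the provider name `ExampleAI`, and both helper functions are hypothetical, chosen only to show how a visible label and a machine-readable disclosure might travel with a piece of content and later be checked.

```python
import hashlib
import json


def label_content(text: str, provider: str, created: str) -> dict:
    """Attach a visible label and a machine-readable disclosure to generated text.

    All field names here are hypothetical; the statute does not define this format.
    """
    disclosure = {
        "ai_generated": True,
        "provider": provider,
        "created": created,
        # Hash of the text, so a checker can notice if the text was altered later.
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
    }
    return {
        "display": f"[Made by AI] {text}",  # what a reader would see
        "text": text,
        "disclosure": disclosure,           # what a detection tool would read
    }


def is_labeled_ai_content(item: dict) -> bool:
    """Toy check: a disclosure is present, marks the item as AI-generated,
    and its hash still matches the text."""
    disclosure = item.get("disclosure")
    if not disclosure or not disclosure.get("ai_generated"):
        return False
    current_hash = hashlib.sha256(item.get("text", "").encode("utf-8")).hexdigest()
    return disclosure.get("sha256") == current_hash


labeled = label_content("A sunset over the bay.", "ExampleAI", "2026-01-01")
print(json.dumps(labeled["disclosure"], indent=2))
print(is_labeled_ai_content(labeled))
print(is_labeled_ai_content({"text": "Written by a person."}))
```

A check like this only recognizes content that still carries its disclosure. Real watermarking and detection tools would need to be far more robust, for example against the label simply being stripped out.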

The law carries significant enforcement measures. Businesses that fail to comply could be fined up to $5,000 per day. The California Attorney General and local governments have the authority to enforce these rules.

For everyday Californians, these laws mean more protection from misinformation and more control over their digital experiences. When you scroll through social media or browse a website, you will be able to tell more easily whether you’re looking at human-generated content or AI-generated content.

While these rules may make it difficult for small AI companies to compete, proponents argue that building trust in AI technology is critical to long-term success. The law aims to encourage innovation while ensuring accountability and transparency in AI development.

California’s approach could influence how other states and countries regulate AI. Because the state is home to many major technology companies, its standards often serve as unofficial national benchmarks. These laws could become a model for future AI regulation in the United States and elsewhere.

Businesses have until 2026 to prepare for these changes, giving them time to adjust their practices and implement necessary transparency measures. This timeline acknowledges that significant changes are required in the way AI companies operate, while ensuring that protecting consumer interests is not delayed indefinitely.

As artificial intelligence becomes more integrated into our daily lives, these laws are an important step toward ensuring that AI strengthens, rather than undermines, public trust. They set a clear direction for a future in which powerful AI technologies can develop while the public interest remains strongly protected.

*** This is a syndicated blog from the Security Bloggers Network, “Meeting Technology Entrepreneurs, Cybersecurity Authors, and Researchers” written by Deepak Gupta, technology entrepreneur and cybersecurity author. Read the original post: https://guptadeepak.com/californias-pioneering-ai-legislation-shaping-the-future-of-artificial-intelligence/