Three weeks ago, we showed how hard it is to correctly evaluate LLM performance on math problems, and introduced Math-Verify, a better solution for validating models on math (read more in the announcement)!

Today, we used Math-Verify to thoroughly re-evaluate all 3,751 models submitted to the Open LLM Leaderboard so far, for fairer and more robust model comparisons. We're excited to share the results!

Why math evaluation on the Open LLM Leaderboard was broken

The Open LLM Leaderboard is the most used leaderboard on the Hugging Face Hub: it compares the performance of open Large Language Models (LLMs) across a variety of tasks. One of these tasks, called Math-Hard, is specifically about math: it evaluates how well LLMs solve high-school and university-level math problems. It uses the highest difficulty level (Level 5) of the Hendrycks MATH dataset, spread across seven topics (precalculus, prealgebra, algebra, intermediate algebra, counting/probability, geometry and number theory), with a 5-shot approach: the model is given 5 examples in the prompt showing how it should answer.

A typical question looks like this:

For all real numbers $r$ and $s$, define the mathematical operation $\#$ such that the following conditions apply: $r \# 0 = r$, $r \# s = s \# r$, and $(r + 1) \# s = (r \# s) + s + 1$. What is the value of $11 \# 5$?

The answer is:

71
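As a quick sanity check on the expected answer, the three rules can be unrolled mechanically. The Python sketch below uses a function name of our own (`op`) and assumes non-negative integer arguments so that the recursion terminates; it applies only the stated conditions. Unrolling rule 3 by hand gives the same result in closed form, $r \# s = rs + r + s$, so $11 \# 5 = 55 + 11 + 5 = 71$.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def op(r: int, s: int) -> int:
    """Evaluate r # s for non-negative integers using only the three given rules."""
    if s == 0:
        return r                    # rule 1: r # 0 = r
    if r == 0:
        return op(s, 0)             # rule 2 (commutativity): 0 # s = s # 0
    return op(r - 1, s) + s + 1     # rule 3, applied with r - 1 in place of r


print(op(11, 5))  # 71
```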

On the leaderboard, the model must end its answer with a very specific string (following the Minerva-Math paper):

“The final answer is [ANSWER]. I hope it is correct.”

The leaderboard then parses [ANSWER] with SymPy, converts it into a symbolic representation (simplifying the values if needed), and finally compares it to the gold target.
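A minimal sketch of this strict pipeline, assuming a simple regular expression for the closing sentence. The pattern, the function names, and the use of Python's `fractions.Fraction` as a stand-in for the SymPy parse-and-simplify step are all illustrative assumptions, not the leaderboard's actual code. It shows why any deviation from the template yields no answer at all.

```python
import re
from fractions import Fraction
from typing import Optional

# Illustrative pattern for the required closing sentence (an assumption, not the leaderboard's regex).
FINAL_ANSWER_RE = re.compile(r"The final answer is (.+?)\. I hope it is correct\.")


def strict_extract(output: str) -> Optional[str]:
    """Return the text between the fixed phrases, or None if the template is not followed."""
    match = FINAL_ANSWER_RE.search(output)
    return match.group(1).strip() if match else None


def naive_equal(extracted: Optional[str], gold: str) -> bool:
    """Exact rational comparison, standing in for SymPy parsing and simplification."""
    if extracted is None:
        return False  # nothing extracted: scored as wrong, whatever the model actually said
    try:
        return Fraction(extracted) == Fraction(gold)
    except (ValueError, ZeroDivisionError):
        return False  # unparseable answers are also scored as wrong


print(strict_extract("The final answer is 71. I hope it is correct."))  # 71
print(strict_extract("So the answer is \\boxed{71}."))                 # None
print(naive_equal("14/2", "7"))                                        # True
```

With this design, an output such as `So the answer is \boxed{71}.` is scored as wrong even though 71 is correct, because `strict_extract` returns `None` before any comparison happens.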

However, users reported a number of issues with this approach.

First, a recurring issue was that some models were unable to follow the answer format expected from the examples: instead, they output other sentences to introduce their answers. Because the format was not followed, the answer was marked as wrong even when it was actually correct! (This is especially problematic if what you are interested in is "how good is the model at math".)

| 📄 Example | Issue | Math-Verify | Old leaderboard |
|---|---|---|---|
| … | Failed extraction | `7*sqrt(2) + 14` | None |
| `Therefore, the sum of the infinite geometric series is \(\frac{7}{9}\).` | Failed extraction | `7/9` | None |
| `\(p(n)\) and \(p(n+1)\) share a common factor greater than 1 is \(\boxed{41}\).` | Failed extraction | `41` | None |
| `\frac{1}{9}` | Failed extraction | `1/9` | None |
| `\boxed{5}` | Failed extraction | `5` | None |
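One way to make extraction tolerant of these formats is to try several patterns in order of reliability. The sketch below is a hypothetical illustration of that idea, not Math-Verify's implementation, and its function names are our own: it prefers the last `\boxed{...}` (matching nested braces, which a plain regular expression cannot do), then the template sentence, then the last inline math span.

```python
import re
from typing import Optional


def last_boxed(text: str) -> Optional[str]:
    """Return the content of the last \\boxed{...}, matching nested braces."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    i = start + len("\\boxed{")
    depth = 1
    for j in range(i, len(text)):
        if text[j] == "{":
            depth += 1
        elif text[j] == "}":
            depth -= 1
            if depth == 0:
                return text[i:j].strip()
    return None  # unbalanced braces


def lenient_extract(text: str) -> Optional[str]:
    # 1. Prefer an explicit \boxed{...} answer.
    boxed = last_boxed(text)
    if boxed is not None:
        return boxed
    # 2. Fall back to the template sentence.
    m = re.search(r"final answer is (.+?)\. I hope it is correct", text)
    if m:
        return m.group(1).strip()
    # 3. Fall back to the last inline math span: \( ... \) or $ ... $.
    spans = re.findall(r"\\\((.+?)\\\)|\$([^$]+)\$", text)
    if spans:
        inline, dollar = spans[-1]
        return (inline or dollar).strip()
    return None


print(lenient_extract("... greater than 1 is \\(\\boxed{41}\\)."))  # 41
print(lenient_extract("the series sums to \\(\\frac{7}{9}\\)."))   # \frac{7}{9}
```

The ordering is a design choice: a boxed answer is the most explicit signal, while "last inline math span" is a heuristic that can pick up intermediate expressions, so it is tried last.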

The next step, converting [ANSWER] into a symbolic expression, also presented some issues, this time related to SymPy parsing.

| 📄 Example | Issue | Math-Verify | Old leaderboard |
|---|---|---|---|
| … I hope it is correct. | Parametric equation | `Eq(2*x + 4*y + z - 19, 0)` | `0` |
| `\(23\)` | Failed extraction | `23` | None |
| `\((-\infty, -14)\cup(-3, \infty)\)` | Failed extraction of an interval union | `Union(Interval.open(-oo, -14), Interval.open(-3, oo))` | None |
| `100\%` | Failed extraction due to the percent symbol | `1` | None |
| `\begin{pmatrix}\frac{1}{50}&\frac{7}{50}\\\frac{7}{50}&\frac{49}{50}\end{pmatrix}` | Failed extraction of a matrix | `Matrix([[1/50, 7/50], [7/50, 49/50]])` | None |
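Several of these cases can be handled by normalizing the extracted string before building a value to compare. The helpers below are a hypothetical, stdlib-only sketch (names and supported syntax are assumptions; per the table above, Math-Verify itself produces SymPy objects such as `Interval` and `Matrix`): they strip LaTeX delimiters, convert percentages, and parse interval unions and `pmatrix` environments into plain Python values.

```python
import math
import re
from fractions import Fraction
from typing import List, Tuple

BOUNDS = {"-\\infty": -math.inf, "\\infty": math.inf, "+\\infty": math.inf}
INTERVAL_RE = re.compile(r"\s*([(\[])\s*([^,]+?)\s*,\s*([^)\]]+?)\s*([)\]])\s*")
FRAC_RE = re.compile(r"\\frac\s*\{([^{}]+)\}\s*\{([^{}]+)\}")
PMATRIX_RE = re.compile(r"\s*\\begin\{pmatrix\}(.*)\\end\{pmatrix\}\s*", re.DOTALL)


def strip_delimiters(s: str) -> str:
    """Remove one pair of surrounding \\( \\), \\[ \\] or $ $ math delimiters."""
    s = s.strip()
    for left, right in (("\\(", "\\)"), ("\\[", "\\]"), ("$", "$")):
        if len(s) >= len(left) + len(right) and s.startswith(left) and s.endswith(right):
            return s[len(left):-len(right)].strip()
    return s


def parse_percent(s: str) -> Fraction:
    """'100\\%' or '100%' -> Fraction(1, 1)."""
    s = s.replace("\\%", "%").strip()
    if not s.endswith("%"):
        raise ValueError(f"not a percentage: {s!r}")
    return Fraction(s[:-1]) / 100


def _bound(x: str) -> float:
    """Map an interval endpoint to a float, treating \\infty specially."""
    return BOUNDS[x] if x in BOUNDS else float(Fraction(x))


def parse_interval_union(s: str) -> List[Tuple[str, float, float, str]]:
    """'(-\\infty, -14)\\cup(-3, \\infty)' -> list of (left bracket, low, high, right bracket)."""
    intervals = []
    for piece in s.split("\\cup"):
        m = INTERVAL_RE.fullmatch(piece)
        if m is None:
            raise ValueError(f"not an interval: {piece!r}")
        left, lo, hi, right = m.groups()
        intervals.append((left, _bound(lo), _bound(hi), right))
    return intervals


def latex_number(s: str) -> Fraction:
    """'\\frac{7}{50}' -> Fraction(7, 50); plain numbers such as '3' or '0.5' also work."""
    m = FRAC_RE.fullmatch(s.strip())
    if m:
        return Fraction(m.group(1).strip()) / Fraction(m.group(2).strip())
    return Fraction(s.strip())


def parse_pmatrix(s: str) -> Tuple[Tuple[Fraction, ...], ...]:
    """Parse a LaTeX pmatrix into a tuple of rows; rows split on '\\\\', cells on '&'."""
    m = PMATRIX_RE.fullmatch(s)
    if m is None:
        raise ValueError("not a pmatrix")
    rows = [row for row in m.group(1).split("\\\\") if row.strip()]
    return tuple(tuple(latex_number(cell) for cell in row.split("&")) for row in rows)


print(strip_delimiters("\\(23\\)"))  # 23
print(parse_percent("100\\%"))       # 1
```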

Finally, comparing the extracted answer with the target expression also caused many problems.

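| 📄 Example | Issue | Math-Verify | Old leaderboard |
|---|---|---|---|
| `1` | No support for variable assignment | True | False |
| `Matrix.ones == Matrix.ones` | No support for matrix equivalence | True | False |
| `{1} \cup {1,4} == {1,4}` | No support for set equivalence | True | False |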
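The comparison step needs answer-type-aware equality rather than single-number matching. A minimal sketch under stated assumptions (hypothetical helper names; numbers and flat set literals only, so matrices are not covered): strip a leading variable assignment, evaluate set unions, and compare sets regardless of order and duplicates.

```python
from fractions import Fraction
from typing import FrozenSet


def strip_assignment(expr: str) -> str:
    """Turn 'x = 1' into '1'; anything without a 'name =' prefix is returned unchanged."""
    lhs, sep, rhs = expr.partition("=")
    if sep and lhs.strip().isidentifier():
        return rhs.strip()
    return expr.strip()


def parse_set_union(expr: str) -> FrozenSet[Fraction]:
    """Evaluate a union of flat set literals such as '{1} \\cup {1,4}'."""
    result: FrozenSet[Fraction] = frozenset()
    for part in expr.split("\\cup"):
        part = part.strip()
        if not (part.startswith("{") and part.endswith("}")):
            raise ValueError(f"not a set literal: {part!r}")
        items = [item.strip() for item in part[1:-1].split(",") if item.strip()]
        result |= frozenset(Fraction(item) for item in items)
    return result


def answers_match(gold: str, predicted: str) -> bool:
    """Compare two extracted answers as sets when either looks like a set, else as numbers."""
    gold, predicted = strip_assignment(gold), strip_assignment(predicted)
    if gold.startswith("{") or predicted.startswith("{"):
        # frozenset equality ignores element order and duplicates
        return parse_set_union(gold) == parse_set_union(predicted)
    return Fraction(gold) == Fraction(predicted)


print(answers_match("1", "x = 1"))                # True
print(answers_match("{1,4}", "{1} \\cup {1,4}"))  # True
print(answers_match("{1,4}", "{1,2}"))            # False
```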

All of these issues are now completely fixed by the new Math-Verify parser!

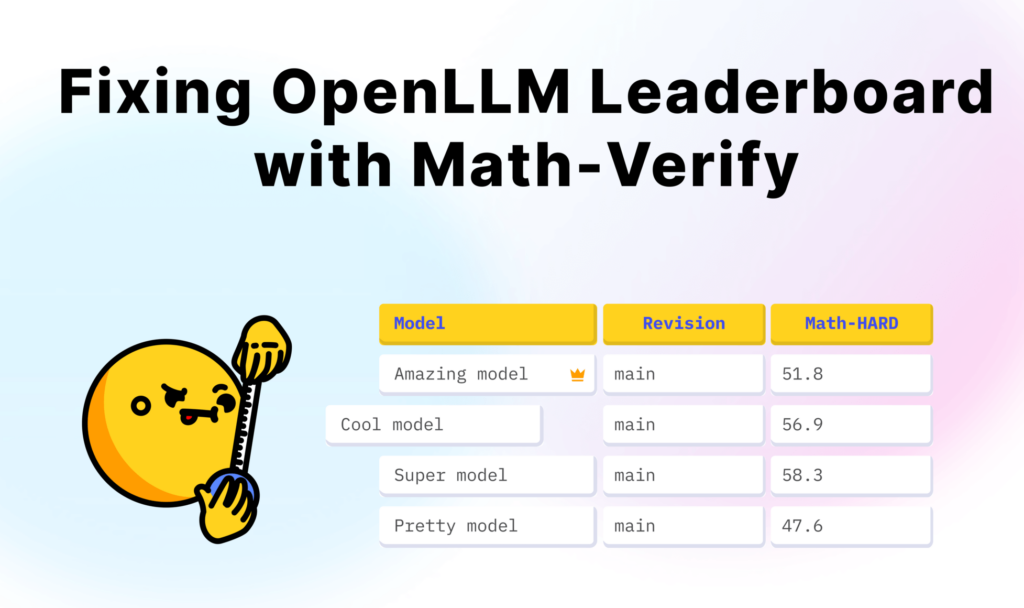

Which model is the best at math? A complete reshuffling of the cards thanks to fairer evaluations

Since all these issues tend to accumulate, some models suffered deeply from them, and their performance was strongly underestimated. Fixing this was as easy as removing the previous evaluator and changing only 3 lines of code to use Math-Verify. (You can even try it on your own math evals!)

This meant re-evaluating all models submitted since June, which completely overhauled the top 20 models in the math subset of the leaderboard.

The impact of the change

On average, models solved 61 more problems overall, an increase of 4.66 points!

The two subsets showing the most significant improvements were both algebra-related (Algebra and Prealgebra), with gains of 8.27 and 6.93 points respectively. In extreme cases, some models improved by nearly 90 points on these subsets. We believe these subsets benefited most because they frequently include answers presented as sets (for questions with multiple solutions) and as matrices. Math-Verify improves the handling of both answer types, which contributes to these notable gains.

Changes by model family

We first discovered the math evaluation issue when inspecting Qwen models, whose scores were unusually low compared to their self-reported performance. After the introduction of Math-Verify, their scores more than doubled, showing how severely their performance had previously been underestimated.

However, Qwen models are not alone. Another major family affected is DeepSeek. After switching to Math-Verify, DeepSeek models almost tripled their scores! This is because their answers are usually wrapped in boxed notation (\boxed{}), which the old evaluator could not extract.

Math-Hard leaderboard changes

As mentioned at the beginning, the top 20 rankings have changed significantly, with NVIDIA's AceMath models now dominating the Math-Hard leaderboard. The other major beneficiaries of this change are the Qwen derivatives, which most often rank just below AceMath. Below is the complete table comparing the leaderboard rankings of the old top 20.

Changes to the overall leaderboard

Finally, we looked into how results across the whole leaderboard have evolved. The top four remain unchanged, but the rest of the ranking has changed significantly. With the rise of multiple Qwen derivatives in the math subset, Qwen-based models now hold an even larger share of the top 20 in the overall results.

Many other models also jumped dramatically in the rankings, climbing more than 200 places! You can explore the detailed results on the Open LLM Leaderboard.

Wrapping up

The introduction of Math-Verify has significantly improved the accuracy and fairness of evaluations on the Open LLM Leaderboard. It has reshaped the leaderboard, with many models showing significant score improvements.

We encourage all developers and researchers to adopt Math-Verify for their own math evaluations: it lets you evaluate models with more reliable results. Also, explore the updated rankings and see how the performance of your favorite models has changed!