Emphasizing Purview’s key role as an enterprise platform for protecting corporate data, Microsoft is adding AI capabilities that help customers investigate and mitigate data security incidents by accelerating the investigation process after a breach. Last week Microsoft provided new insights into Purview Data Security Investigations, which is now in public preview.

Purview Data Security Investigations is a notable addition to the Microsoft security lineup and was demonstrated at last week’s online security event. As various company executives explained, Microsoft is enlisting AI to fight attackers who themselves use AI, in order to counter a growing attack surface and an expanding threat landscape. The enhancement comes shortly after Microsoft and its partners delivered a set of AI security agents.

Collectively, these moves reflect the company’s aim to strengthen its security product portfolio with AI capabilities, following major security challenges and failures in the recent past.

This report details Data Security Investigations as well as AI-centric enhancements for Entra identity management and Microsoft Defender.

Purview Power

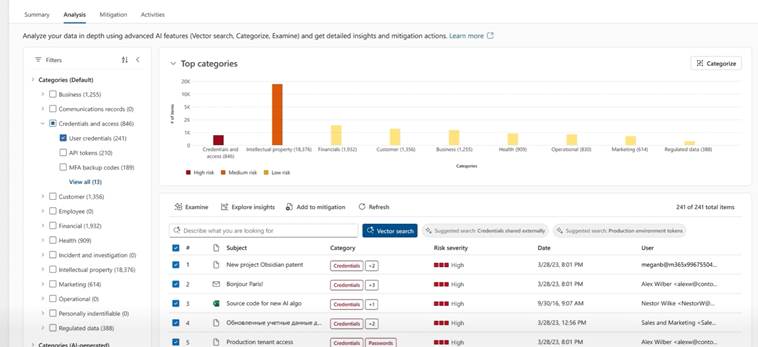

Data Security Investigations uses AI to analyze large volumes of data at scale so that teams can quickly launch and run data security investigations.

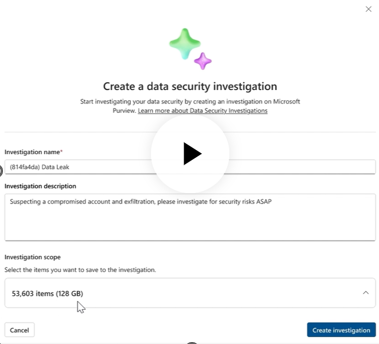

Investigations can be pre-scoped with the affected data, reducing the time security administrators need to process the data, identify where key risks reside, and begin to understand a security case, explained Rudra Mitra, corporate vice president, Microsoft Purview. In the demo, the company analyzed more than 50,000 items and showed how they can be sorted into individual categories, with examples including items related to credentials and access.

“All 53,603 items related to the activity of at-risk users will be brought directly into the investigation,” explained Mitra.

The automatically generated report gives administrators an overview, the remaining risks, mitigation steps, and the reasoning or methodology behind the assessments it produced.

Purview Data Security Investigations can visualize the correlation between affected users and their activities, allowing administrators to discover additional users and new content that need investigation. The feature also lets administrators proactively search other sources within Microsoft 365 or the corporate data estate for incident-related data.

By applying AI to security investigations, Microsoft is expanding the toolkit available to Purview’s installed base of customers, offloading critical manual processes and potentially freeing up resources to focus elsewhere.

The Shadow AI Intersection

Microsoft executives provided new data points on shadow AI and its troubling risk trends: 78% of users use AI tools, such as consumer GenAI apps, through a web browser.

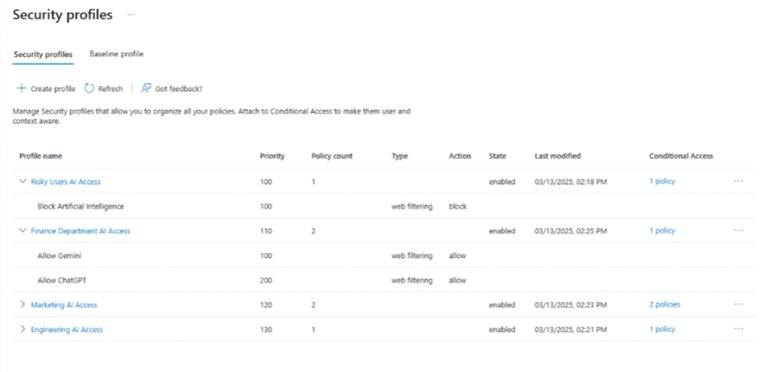

To address this issue, the company offers new features in Entra access management and in Purview to prevent risky access scenarios and data leaks. Microsoft Entra offers a new web filter that lets admins create AI app access policies based on user identity type and role. “This allows us to have one set of policies for the finance department and another set of policies for researchers,” said Herain Oberoi, general manager of data and AI security. These Entra access controls are now generally available.
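The idea of role-based AI app access policies can be sketched in a few lines of Python. This is only an illustration of the concept, not Microsoft Entra's API: the `AccessPolicy` class, the `POLICIES` table, the `is_allowed` function, and the app names are all hypothetical, and the default-deny behavior is an assumption.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical policy: which AI apps a given role may reach."""
    role: str
    allowed_apps: frozenset = field(default_factory=frozenset)


# Illustrative policy sets only; roles and app names are placeholders.
POLICIES = {
    "finance": AccessPolicy("finance", frozenset({"approved-copilot"})),
    "research": AccessPolicy(
        "research", frozenset({"approved-copilot", "research-llm"})
    ),
}


def is_allowed(role: str, app: str) -> bool:
    """Default-deny: unknown roles and unlisted apps are blocked."""
    policy = POLICIES.get(role)
    return policy is not None and app in policy.allowed_apps
```

Under these assumptions, `is_allowed("research", "research-llm")` is true while the same app is blocked for the finance role, which mirrors the "one set of policies per department" idea in the quote.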

For Purview, the company offers new data security controls (now available) for Microsoft Edge for Business. Sensitive information that is typed or submitted is detected and blocked in real time. For example, the new AI web filter allows “risky” users to access only the AI applications they need.

In a demonstration of this feature, the company showed how administrators can create new policies, which are set by default to detect the most common types of sensitive data. Custom classifiers can also be used to flag content. For example, administrators can flag merger- or acquisition-related keywords, block them, and prevent highly sensitive data from leaking to unauthorized AI apps.
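As a rough illustration of the keyword flagging described above, the following Python sketch checks text for merger- and acquisition-related terms before it reaches an unsanctioned app. It is not how Purview classifiers are implemented: the keyword list, `find_sensitive_terms`, and `should_block` are hypothetical names chosen for this example, and real classifiers are far more sophisticated than whole-word matching.

```python
import re

# Hypothetical keyword list standing in for a custom classifier.
MNA_KEYWORDS = ("merger", "acquisition", "due diligence")

# Whole-word, case-insensitive match on any of the keywords.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(k) for k in MNA_KEYWORDS) + r")\b",
    re.IGNORECASE,
)


def find_sensitive_terms(text: str) -> list[str]:
    """Return matched keywords in lowercase, in order of appearance."""
    return [m.group(1).lower() for m in _PATTERN.finditer(text)]


def should_block(text: str, app_is_sanctioned: bool) -> bool:
    """Block only when sensitive terms are headed to an unsanctioned app."""
    return (not app_is_sanctioned) and bool(find_sensitive_terms(text))
```

In this sketch the decision depends on both the content and the destination, so the same text is allowed into a sanctioned app but blocked when submitted to an unsanctioned one.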

Protecting AI Services

At the online event, Microsoft highlighted the importance of securing not only data within AI apps, but also AI services hosted on cloud infrastructure. A new Microsoft Defender feature is intended to lock down these services. It empowers security teams to identify, prioritize, and mitigate risks, and provides real-time cloud detection and response to protect AI applications, explained Rob Lefferts, vice president of threat protection.

To that end, Microsoft is expanding its AI security posture management capabilities in Microsoft Defender beyond current support for Azure OpenAI, Azure Machine Learning, and AWS Bedrock. With the latest enhancements, Defender adds support for Google Vertex AI models in May, and support for all models in the Azure AI Foundry model catalog, including Meta Llama, Mistral, DeepSeek, and custom models, is generally available. This expansion provides a unified approach to managing AI security risks across multi-cloud and multi-model environments.

Security Copilot Proof Points

Finally, Microsoft customer St. Luke’s University Health Network shared the core benefits it has achieved through the use of Microsoft Security Copilot. Security Copilot removes the need to collect data from multiple dashboards, identifies the most important data, and quickly gets team members up to speed on incidents.

“Security Copilot is integrated with Microsoft Defender and Microsoft Sentinel, allowing you to navigate data and get better context for a given alert.”

David Finkelstein, chief information security officer at St. Luke’s, added: “Security Copilot offers the ability to look at that information, build a roadmap, and build the strategies behind how to fill those gaps and make things easier and more efficient.”
