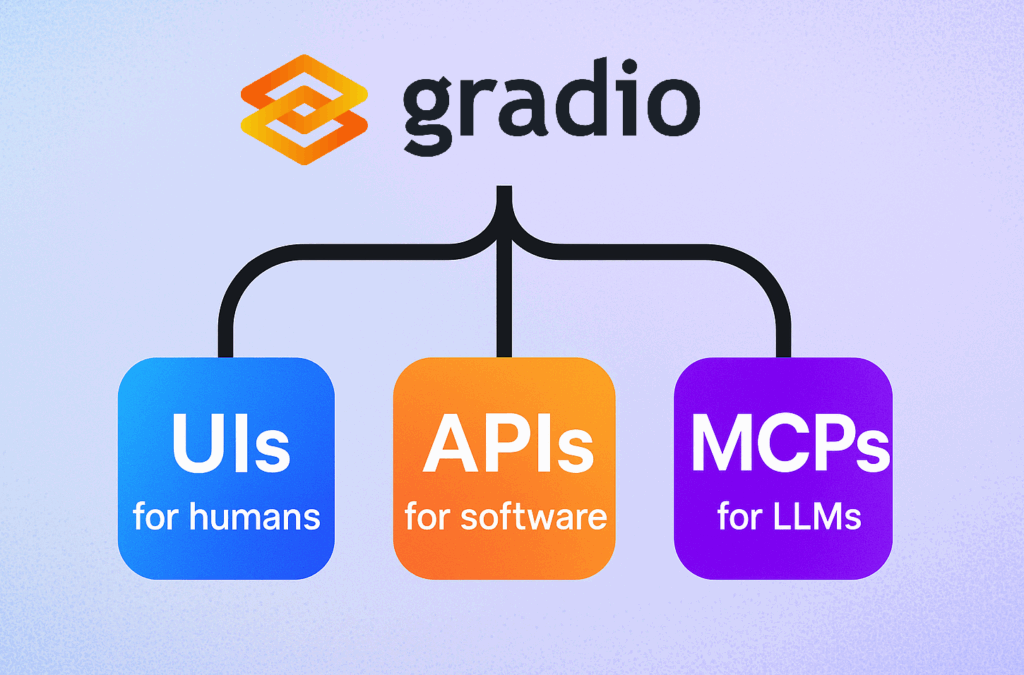

Gradio is a Python library used by over 1 million developers each month to build interfaces for machine learning models. In addition to creating UIs, Gradio also exposes API capabilities, and a Gradio app can now be launched as a Model Context Protocol (MCP) server for LLMs. This means that your Gradio app, whether it’s an image generator, a tax calculator, or something else entirely, can be called as a tool by an LLM.

This guide shows you how to build an MCP server in just a few lines of Python using Gradio.

Prerequisites

If you haven’t already, install Gradio with the MCP extra:

    pip install "gradio[mcp]"

This installs the required dependencies, including the mcp package. You also need an LLM application that supports tool calling over the MCP protocol, such as Claude Desktop, Cursor, or Cline (these are known as “MCP clients”).

Why build an MCP server?

An MCP server is a standardized way of exposing tools for use by LLMs. An MCP server can give LLMs all sorts of additional capabilities, such as generating or editing images, synthesizing audio, or performing specific calculations such as finding the prime factors of a number.

Gradio makes it easy to build these MCP servers by turning any Python function into a tool that LLMs can use.

Example: Counting letters in a word

LLMs are notoriously bad at counting the number of letters in a word (for example, the number of “r”s in “strawberry”). But what happens if you equip them with a tool to help? Start by creating a simple Gradio app that counts how many times a letter appears in a word or phrase:

    import gradio as gr

    def letter_counter(word, letter):
        """Count the occurrences of a specific letter in a word.

        Args:
            word: The word or phrase to analyze
            letter: The letter to count occurrences of

        Returns:
            The number of times the letter appears in the word
        """
        # Compare case-insensitively so "R" and "r" are counted together.
        return word.lower().count(letter.lower())

    demo = gr.Interface(
        fn=letter_counter,
        inputs=["text", "text"],
        outputs="number",
        title="Letter Counter",
        description="Count how many times a letter appears in a word",
    )

    # mcp_server=True also exposes the app as an MCP server.
    demo.launch(mcp_server=True)

Note that mcp_server=True is set in .launch(). This is all the Gradio app needs to act as an MCP server! Now, when you run this app, it will:

1. Start the regular Gradio web interface
2. Start the MCP server
3. Print the MCP server URL in the console

The MCP server will be accessible at:

    http://your-server:port/gradio_api/mcp/sse

Gradio automatically converts the letter_counter function into an MCP tool that LLMs can use. The function’s docstring is used to generate the description of the tool and its parameters.
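To get a feel for how much information a docstring and signature carry, here is a minimal sketch that inspects the letter counter with Python’s standard inspect module. It is an illustration only: the describe_tool helper is hypothetical, and this is not a description of how Gradio builds its MCP tool definitions internally.

```python
import inspect


def letter_counter(word, letter):
    """Count the occurrences of a specific letter in a word.

    Args:
        word: The word or phrase to analyze
        letter: The letter to count occurrences of

    Returns:
        The number of times the letter appears in the word
    """
    return word.lower().count(letter.lower())


def describe_tool(fn):
    """Hypothetical helper: collect a function's name, summary line, and parameter names."""
    doc = inspect.getdoc(fn) or ""
    # The first docstring line serves as a short description.
    summary = doc.splitlines()[0] if doc else ""
    params = list(inspect.signature(fn).parameters)
    return {"name": fn.__name__, "description": summary, "parameters": params}


tool = describe_tool(letter_counter)
print(tool)
print(letter_counter("strawberry", "r"))  # 3
```

The takeaway is that a clear summary line and documented arguments give a tool consumer something meaningful to read, so the docstring is worth writing carefully.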

All you need to do is add this URL endpoint to your MCP client (e.g., Claude Desktop, Cursor, Cline, or Tiny Agents). This usually means pasting the following configuration into the client’s settings:

    {"mcpServers": {"gradio": {"url": "http://

(By the way, you can copy and paste the exact configuration by going to the “View API” link in the footer of your Gradio app and clicking “MCP.”)
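If you prefer to generate the configuration rather than type it by hand, a minimal sketch follows. The build_mcp_config helper is hypothetical; the mcpServers / gradio / url layout mirrors the configuration shown in this guide, and the address is a placeholder you would replace with your own host and port. Where the resulting JSON needs to be saved depends on your MCP client.

```python
import json


def build_mcp_config(url, server_name="gradio"):
    """Hypothetical helper: build a client config dict pointing at one MCP server URL."""
    return {"mcpServers": {server_name: {"url": url}}}


# Placeholder address; replace with your own host and port.
config = build_mcp_config("http://your-server:port/gradio_api/mcp/sse")
print(json.dumps(config, indent=2))
```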

Key features of the Gradio <> MCP integration

Tool Conversion: Each API endpoint in your Gradio app is automatically converted to an MCP tool with a corresponding name, description, and input schema. To view the tools and schemas, go to http://your-server:port/gradio_api/mcp/schema, or go to the “View API” link in the footer of your Gradio app and click “MCP”.
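Both MCP paths mentioned in this guide hang off the app’s base URL, so they can be derived from it. The sketch below uses a hypothetical mcp_urls helper and assumes the app is running locally at http://localhost:7860; substitute your own address.

```python
from urllib.parse import urljoin


def mcp_urls(base_url):
    """Hypothetical helper: derive the SSE and schema URLs from an app's base URL."""
    # A trailing slash makes urljoin append the path instead of replacing the last segment.
    base = base_url.rstrip("/") + "/"
    return {
        "sse": urljoin(base, "gradio_api/mcp/sse"),
        "schema": urljoin(base, "gradio_api/mcp/schema"),
    }


# Assumed local address; replace with your own host and port.
urls = mcp_urls("http://localhost:7860")
print(urls["sse"])
print(urls["schema"])
```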

Gradio allows developers to create sophisticated interfaces using simple Python code that provides dynamic UI operations for immediate visual feedback.

Environment Variable Support: There are two ways to enable the MCP server functionality:

Using the mcp_server parameter, as shown above:

    demo.launch(mcp_server=True)

Using environment variables:

    export GRADIO_MCP_SERVER=True

File Handling: The server automatically handles file data conversions, including:

- Converting base64-encoded strings to file data
- Processing image files and returning them in the correct format
- Managing temporary file storage
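To make the first and last of these conversions concrete, here is a minimal standard-library sketch of decoding a base64 string into a temporary file. It only illustrates the general idea: the save_base64_to_temp_file helper is hypothetical and does not reflect Gradio’s internal implementation.

```python
import base64
import os
import tempfile


def save_base64_to_temp_file(b64_data, suffix=".bin"):
    """Hypothetical helper: decode a base64 string and write it to a temporary file."""
    raw = base64.b64decode(b64_data)
    # delete=False keeps the file on disk after the handle closes; the caller cleans up.
    with tempfile.NamedTemporaryFile(delete=False, suffix=suffix) as tmp:
        tmp.write(raw)
        return tmp.name


encoded = base64.b64encode(b"hello, MCP").decode("ascii")
path = save_base64_to_temp_file(encoded, suffix=".txt")
with open(path, "rb") as f:
    print(f.read())
os.remove(path)  # manual cleanup of the temporary file
```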

Recent Gradio updates have improved image processing capabilities with features like Photoshop-style zoom, pan, and full transparency control.

It is highly recommended to pass input images and files as full URLs (“http://…” or “https://…”), because MCP clients don’t always handle local files correctly.
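A tool function can guard against local paths with a small check before using its input. The sketch below relies only on the standard library; is_full_url is a hypothetical helper name, not part of Gradio.

```python
from urllib.parse import urlparse


def is_full_url(value):
    """Hypothetical helper: True if value is an absolute http(s) URL with a host."""
    parsed = urlparse(value)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


for candidate in ("https://example.com/cat.png", "/tmp/cat.png", "cat.png"):
    print(candidate, is_full_url(candidate))
```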

Hosted MCP Servers on Spaces: You can publish your Gradio application for free on Hugging Face Spaces, which gives you a free hosted MCP server. Gradio is part of a broader ecosystem that includes Python and JavaScript libraries for building or querying machine learning applications programmatically.

An example of such a Space is: https://huggingface.co/spaces/abidlabs/mcp-tools. You can add the following configuration to your MCP client and start using the tools from this Space immediately:

    {
      "mcpServers": {
        "gradio": {
          "url": "https://abidlabs-mcp-tools.hf.space/gradio_api/mcp/sse"
        }
      }
    }

And that’s it! By building an MCP server using Gradio, you can easily add different kinds of custom features to your LLM.

Read more

If you want to dive deeper, here are some articles we recommend: