New Delhi: Artificial intelligence (AI) is at the forefront of technology, shaping almost every aspect of life, from healthcare and entertainment to education, security and research. Now the technology is being applied to the threat of fake news, deepfakes and hate speech. Professor Dinesh Kumar Vishwakarma, Head of the IT Department at Delhi Technological University (DTU), is developing such AI models with his team.

The professor highlighted the growing need for AI-based fact-checking in today’s digital landscape. Traditional fact-checking relies on confirmation from reporters and experts, whereas his team says its models offer an automated alternative: users simply enter news content, and the AI evaluates its reliability.

Detecting fake news, hate speech, and deepfakes

Professor Vishwakarma explained that he is working on an AI model that can analyze online content and determine whether a news item is genuine or fake. Students on the team have developed an AI-based algorithm that lets users copy and paste content into the system. The system then assesses reliability by finding sources and cross-checking them against trusted news publications.
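As a rough illustration of the cross-checking idea, the sketch below compares pasted text against a few trusted article snippets using word-overlap (Jaccard) similarity. It is not the DTU team's algorithm, which the article does not describe in detail; the function names and the 0.5 threshold are assumptions made purely for illustration.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity: shared tokens divided by all distinct tokens."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def best_match(claim: str, trusted_snippets: list[str]) -> float:
    """Return the highest similarity between the claim and any trusted snippet."""
    claim_tokens = tokenize(claim)
    return max(
        (jaccard(claim_tokens, tokenize(s)) for s in trusted_snippets),
        default=0.0,  # no trusted snippets means nothing corroborates the claim
    )


def is_corroborated(
    claim: str, trusted_snippets: list[str], threshold: float = 0.5
) -> bool:
    """Hypothetical rule: corroborated if some trusted snippet overlaps enough."""
    return best_match(claim, trusted_snippets) >= threshold
```

A production system would more plausibly rely on retrieval and a trained language model than on raw word overlap; the sketch only shows the overall shape of comparing a claim against trusted sources.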

Similarly, the model is designed to analyze speech and identify statements that qualify as hate speech. By examining audio patterns, lip sync and facial expressions in a video, the AI can detect instances of hate speech.

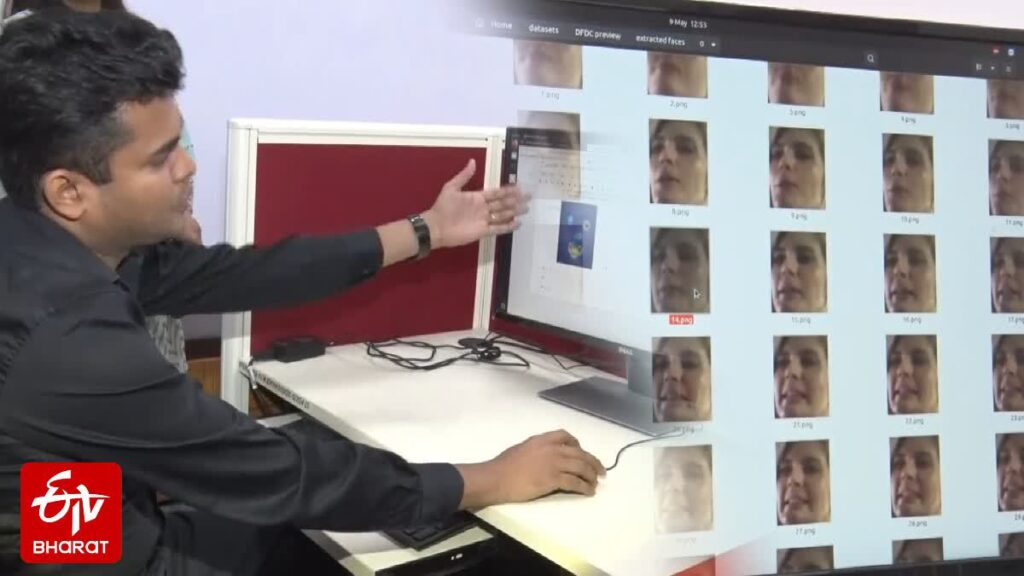

PhD researchers in DTU’s IT department are also working on deepfake detection. They have developed an AI-based model that analyzes images and videos for signs of manipulation. Users can take screenshots of suspected deepfake images and submit them to the AI system, which analyzes whether the image has been digitally modified. The researchers explained that video clips also work: the system needs multiple stills from the clip and analyzes them one by one.
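The frame-by-frame approach to video can be sketched roughly as follows. This is a minimal illustration rather than the researchers' implementation: `classify_clip`, the `score_frame` callback (standing in for whatever per-image detector is used) and the 0.5 threshold are all hypothetical, and averaging is only one of several possible ways to combine per-frame results.

```python
from statistics import mean
from typing import Callable, Sequence


def classify_clip(
    frames: Sequence[object],
    score_frame: Callable[[object], float],
    threshold: float = 0.5,
) -> tuple[bool, float]:
    """Score each still independently, then average the scores for the clip.

    score_frame is a hypothetical detector returning a manipulation score
    between 0.0 (looks authentic) and 1.0 (looks manipulated).
    """
    if not frames:
        raise ValueError("at least one frame is required")
    scores = [score_frame(frame) for frame in frames]  # one still at a time
    clip_score = mean(scores)
    return clip_score >= threshold, clip_score
```

Averaging smooths out a single misjudged still; a stricter design could instead flag the clip if any one frame scores above the threshold.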

Developing a public application

The AI-powered detection system for fake news, deepfakes and hate speech, developed in Python, is currently accessible only to DTU faculty and students. It will be made available to the public once a dedicated application or web app has been developed. Professor Vishwakarma said the team is working on such an app, which will then be released for wider access.

He emphasized the need for such technology, especially during elections, when misinformation and hate speech are widely circulated for political gain. Fake news often goes undetected until after the election, by which time it has already influenced public opinion and outcomes.

Additionally, he pointed to the rise of misleading YouTube content. Thumbnails often do not match the actual video content, leaving viewers feeling cheated. The AI model aims to prevent such instances and ensure that people receive accurate information without being misled. In December 2024, YouTube took steps in this direction, announcing action in India against videos with clickbait thumbnails or titles.