Retrieval-augmented generation (RAG) enhances text generation with a large language model by incorporating fresh domain knowledge stored in an external datastore. Separating your company data from the knowledge a language model learns during training is essential to balancing performance, accuracy, and security and privacy goals.

In this blog post, you will learn how Intel can help you develop and deploy RAG applications as part of OPEA, the Open Platform for Enterprise AI. You will also discover how Intel Gaudi 2 AI accelerators and Xeon CPUs can significantly improve enterprise performance through a real-world RAG use case.

Before diving into the details, let's get access to the hardware. Intel Gaudi 2 is purpose-built to accelerate deep learning training and inference in the data center and in the cloud. It is publicly available on the Intel Developer Cloud (IDC) and for on-premises implementations. IDC is the easiest way to get started with Gaudi 2. If you don't have an account yet, please register for one, subscribe to Premium, and then apply for access.

On the software side, you will build the application with LangChain, an open-source framework designed to simplify the creation of AI applications with LLMs. It provides template-based solutions that let developers build RAG applications with custom embeddings, vector databases, and LLMs. The LangChain documentation provides more information. Intel has been actively contributing optimizations to LangChain, enabling developers to deploy GenAI applications efficiently on Intel platforms.

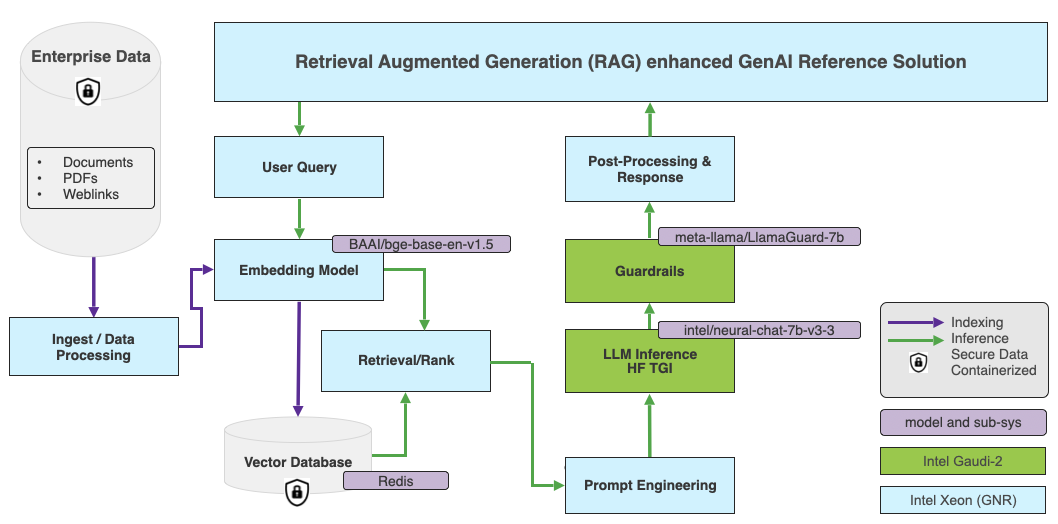

In LangChain, you use the rag-redis template to create the RAG application, with BAAI/bge-base-en-v1.5 as the embedding model and Redis as the default vector database. The diagram below illustrates the high-level architecture.

The embedding model runs on an Intel Granite Rapids CPU. The Intel Granite Rapids architecture is optimized to deliver the lowest total cost of ownership (TCO) for high-core-count, performance-sensitive workloads and general-purpose compute workloads. Granite Rapids (GNR) also supports the AMX-FP16 instruction set, which yields a 2-3x performance increase for mixed AI workloads.

The LLM runs on an Intel Gaudi 2 accelerator. For Hugging Face models, the Optimum Habana library is the interface between the Hugging Face Transformers and Diffusers libraries and Gaudi. It provides tools for easy model loading, training, and inference in single-card and multi-card configurations for various downstream tasks.

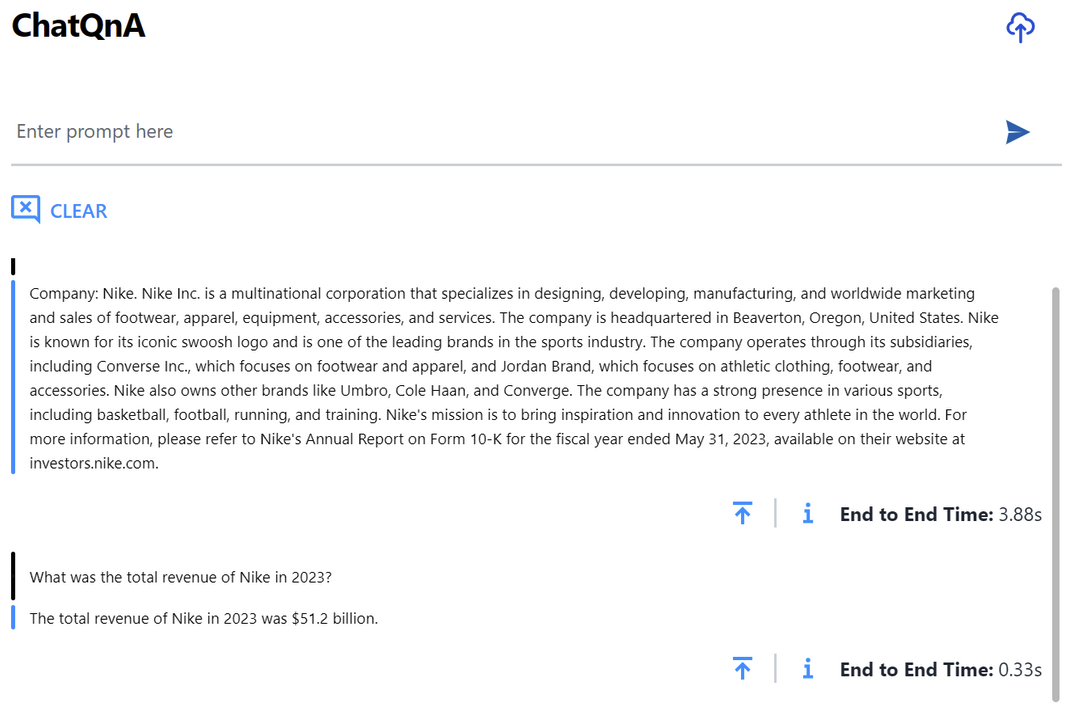

We provide a Dockerfile to streamline the setup of the LangChain development environment. Once you have started the Docker container, you can build the vector database, the RAG pipeline, and the LangChain application inside the Docker environment. For detailed step-by-step instructions, follow the ChatQnA example.

To populate the vector database, we use Nike's public financial documents. Here is the sample code.

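    import os

    from langchain_community.vectorstores import Redis

    # Ingest PDF files that contain EDGAR 10-K filing data for Nike.
    # pdf_loader, text_splitter, and embedder are defined elsewhere in the example;
    # INDEX_NAME, INDEX_SCHEMA, and REDIS_URL come from its configuration.
    company_name = "Nike"
    data_path = "data"
    doc_path = [os.path.join(data_path, file) for file in os.listdir(data_path)][0]
    content = pdf_loader(doc_path)
    chunks = text_splitter.split_text(content)

    # Embed each chunk and store it in the Redis vector database.
    _ = Redis.from_texts(
        texts=[f"Company: {company_name}. " + chunk for chunk in chunks],
        embedding=embedder,
        index_name=INDEX_NAME,
        index_schema=INDEX_SCHEMA,
        redis_url=REDIS_URL,
    )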
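
To make the ingestion step more concrete, here is a minimal, dependency-free sketch of what the splitting and prefixing might look like. The `split_text` and `prefix_chunks` functions are hypothetical stand-ins, not the example's actual `text_splitter` (which is likely more sophisticated), and the chunk size and overlap values are assumptions chosen purely for illustration.

```python
def split_text(text: str, chunk_size: int = 1500, overlap: int = 200) -> list[str]:
    """Split text into fixed-size character chunks that overlap slightly."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


def prefix_chunks(company_name: str, chunks: list[str]) -> list[str]:
    """Prepend the company name so each chunk carries its own context."""
    return [f"Company: {company_name}. " + chunk for chunk in chunks]


sample = "abcdefghij" * 3  # 30 characters of placeholder text
for chunk in prefix_chunks("Nike", split_text(sample, chunk_size=12, overlap=2)):
    print(chunk)
```

Prefixing every chunk with the company name means a retrieved chunk still identifies its subject even when it is read in isolation, which is presumably why the sample code does the same.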

In LangChain, the Chain API connects the prompt, the vector database, and the embedding model.

The complete code is available in the repository.

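    # Embedding model running on the Xeon CPU
    embedder = HuggingFaceEmbeddings(model_name=EMBED_MODEL)

    # Retriever using maximal marginal relevance (MMR) search;
    # vectorstore is the Redis vector store holding the ingested chunks.
    retriever = vectorstore.as_retriever(search_type="mmr")

    # RAG chain: retrieve context and pass the question through;
    # the remaining stages are in the repository.
    chain = RunnableParallel({"context": retriever, "question": RunnablePassthrough()}) | ...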
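
The retriever above is configured with MMR (maximal marginal relevance) search. As a rough illustration of the idea, and not LangChain's or Redis's actual implementation, the sketch below greedily picks documents that are relevant to the query while penalizing similarity to documents already picked. The function names, the toy 2-D vectors, and the `lambda_mult` trade-off parameter are assumptions made for this example.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr(query: list[float], candidates: list[list[float]],
        k: int = 2, lambda_mult: float = 0.5) -> list[int]:
    """Return the indices of up to k candidates chosen by maximal marginal relevance."""
    selected: list[int] = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        def score(i: int) -> float:
            relevance = cosine(query, candidates[i])
            # Redundancy is the highest similarity to any already selected document.
            redundancy = max(
                (cosine(candidates[i], candidates[j]) for j in selected),
                default=0.0,
            )
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy "embeddings": documents 0 and 1 are near-duplicates, document 2 is different.
query = [0.8, 0.6]
docs = [[1.0, 0.0], [0.99, 0.05], [0.0, 1.0]]
print("balanced:", mmr(query, docs, k=2, lambda_mult=0.5))
print("relevance only:", mmr(query, docs, k=2, lambda_mult=1.0))
```

With the balanced setting, the second pick is the dissimilar document rather than the near-duplicate, which is the behavior that makes MMR attractive for RAG: the context passed to the LLM covers more ground for the same number of chunks.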

The chat model runs on Gaudi 2 with the Hugging Face Text Generation Inference (TGI) server. This combination enables high-performance text generation on Gaudi 2 hardware for popular open-source LLMs such as MPT, Llama, and Mistral.

No setup is required. You can use a pre-built Docker image and pass the model name (for example, Intel NeuralChat).

    model=Intel/neural-chat-7b-v3-3
    volume=$PWD/data
    docker run -p 8080:80 -v $volume:/data --runtime=habana \
      -e HABANA_VISIBLE_DEVICES=all \
      -e OMPI_MCA_btl_vader_single_copy_mechanism=none \
      --cap-add=sys_nice --ipc=host \
      tgi_gaudi --model-id $model

The service uses a single Gaudi accelerator by default. Running a larger model (e.g., 70B) may require multiple accelerators. In that case, add the appropriate parameters, for example `--sharded true` and `--num-shard 8`. For gated models such as Llama or StarCoder, you also need to pass your Hugging Face token with `-e HUGGING_FACE_HUB_TOKEN=<token>`.
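
If you launch the container from a script, the single-card and multi-card variants can be assembled from the same base command. The helper below is a hypothetical sketch: the function name, its parameters, and the `tgi_gaudi` image tag are assumptions, and the `--cap-add=sys_nice --ipc=host` flags follow the TGI Gaudi documentation as best recalled, so check them against the README before relying on this.

```python
import shlex
from typing import Optional


def tgi_docker_args(model_id: str, volume: str, num_shard: int = 1,
                    hf_token: Optional[str] = None,
                    image: str = "tgi_gaudi") -> list[str]:
    """Build the argument list for launching TGI on Gaudi with docker run."""
    args = [
        "docker", "run", "-p", "8080:80", "-v", f"{volume}:/data",
        "--runtime=habana",
        "-e", "HABANA_VISIBLE_DEVICES=all",
        "-e", "OMPI_MCA_btl_vader_single_copy_mechanism=none",
        "--cap-add=sys_nice", "--ipc=host",
    ]
    if hf_token:
        # Needed only for gated models.
        args += ["-e", f"HUGGING_FACE_HUB_TOKEN={hf_token}"]
    args += [image, "--model-id", model_id]
    if num_shard > 1:
        # Spread the model across several accelerators.
        args += ["--sharded", "true", "--num-shard", str(num_shard)]
    return args


# Placeholder token; substitute your own when running a gated model.
print(shlex.join(tgi_docker_args(
    "meta-llama/Llama-2-70b-chat-hf", "/tmp/data",
    num_shard=8, hf_token="hf_placeholder",
)))
```

Returning a list rather than a string keeps the command safe to pass to `subprocess.run` without shell quoting issues.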

Once the container is running, verify that the service works by sending a request to the TGI endpoint.

    curl localhost:8080/generate -X POST \
      -d '{"inputs": "Which NFL team won the Super Bowl in the 2010 season?"}' \
      -H 'Content-Type: application/json'
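
The same check can be made from Python using only the standard library. This is a sketch rather than an official client: the helper names are made up for this example, the `max_new_tokens` value of 128 is an assumption, and the response is assumed to carry the text in a `generated_text` field, as TGI's `/generate` route normally does.

```python
import json
import urllib.request


def build_generate_request(prompt: str, base_url: str = "http://localhost:8080",
                           max_new_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a POST request for the TGI /generate route."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url=f"{base_url}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, **kwargs) -> str:
    """Send the request to a running TGI server and return the generated text."""
    request = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read())["generated_text"]


# Inspect the request without needing a server.
req = build_generate_request("Which NFL team won the Super Bowl in the 2010 season?")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

With the container running, calling `generate("Which NFL team won the Super Bowl in the 2010 season?")` should return the model's answer; without a server it raises a `URLError`.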

If you see a generated response, the LLM is running correctly and you can enjoy high-performance inference on Gaudi 2.

The TGI Gaudi container uses the bfloat16 data type by default. For higher throughput, you may want to enable FP8 quantization. According to our test results, FP8 quantization should yield a 1.8x throughput gain compared to BF16. FP8 instructions are available in the README file.

Finally, you can enable content moderation with the Meta Llama Guard model. The README file provides instructions for deploying Llama Guard on TGI Gaudi.

Use the following commands to start the RAG application backend service. The `server.py` script defines the service endpoints using FastAPI.

    docker exec -it qna-rag-redis-server bash
    nohup python app/server.py &

By default, the TGI Gaudi endpoint is expected to run on localhost at port 8080 (i.e., http://127.0.0.1:8080). If it is running at a different address or port, set the `TGI_ENDPOINT` environment variable accordingly.
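
As an illustration of this default-with-override behavior, the snippet below shows one way a service could resolve the endpoint. It is a sketch under assumptions: `resolve_tgi_endpoint` is a hypothetical helper, and the actual `server.py` may read the variable differently.

```python
import os
from typing import Mapping, Optional

DEFAULT_TGI_ENDPOINT = "http://127.0.0.1:8080"


def resolve_tgi_endpoint(environ: Optional[Mapping[str, str]] = None) -> str:
    """Return TGI_ENDPOINT from the environment, or the default if it is unset or empty."""
    env = os.environ if environ is None else environ
    endpoint = env.get("TGI_ENDPOINT") or DEFAULT_TGI_ENDPOINT
    return endpoint.rstrip("/")  # avoid double slashes when appending routes


print(resolve_tgi_endpoint({}))
print(resolve_tgi_endpoint({"TGI_ENDPOINT": "http://10.0.0.5:9000/"}))
```

In practice you would export the variable before launching the backend, for example `export TGI_ENDPOINT=http://<host>:<port>`, with the host and port of your own TGI server.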

Use the following commands to install the frontend GUI components:

    sudo apt-get install npm && \
        npm install -g n && \
        n stable && \
        hash -r && \
        npm install -g npm@latest

Next, update the `DOC_BASE_URL` environment variable in the `.env` file, replacing the localhost IP address (127.0.0.1) with the actual IP address of the server where the GUI runs.

Run the following command to install the required dependencies:

    npm install

Finally, start the GUI server with the following command:

    nohup npm run dev &

This will run the front-end service and launch the application.

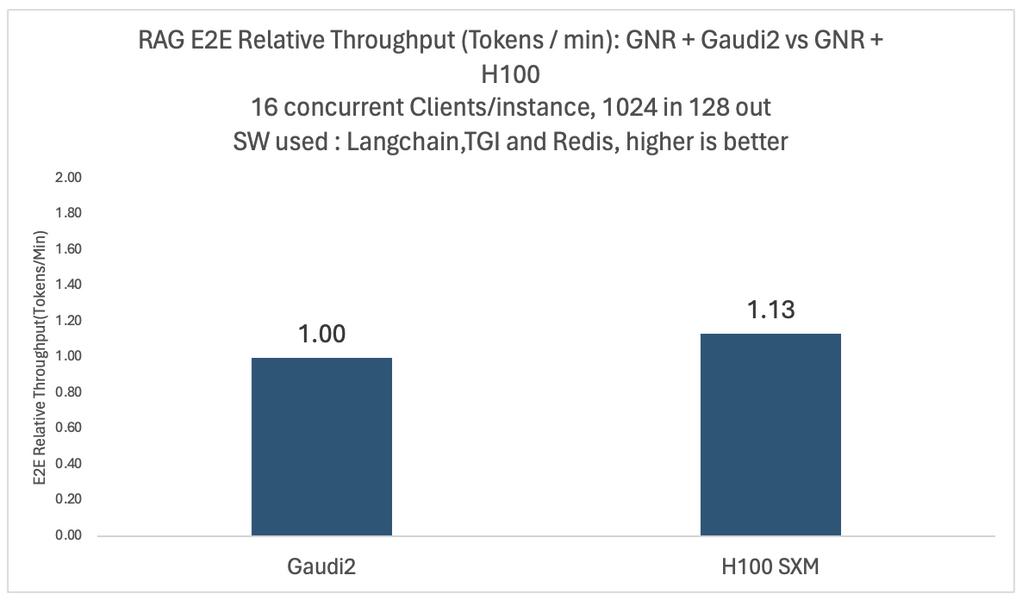

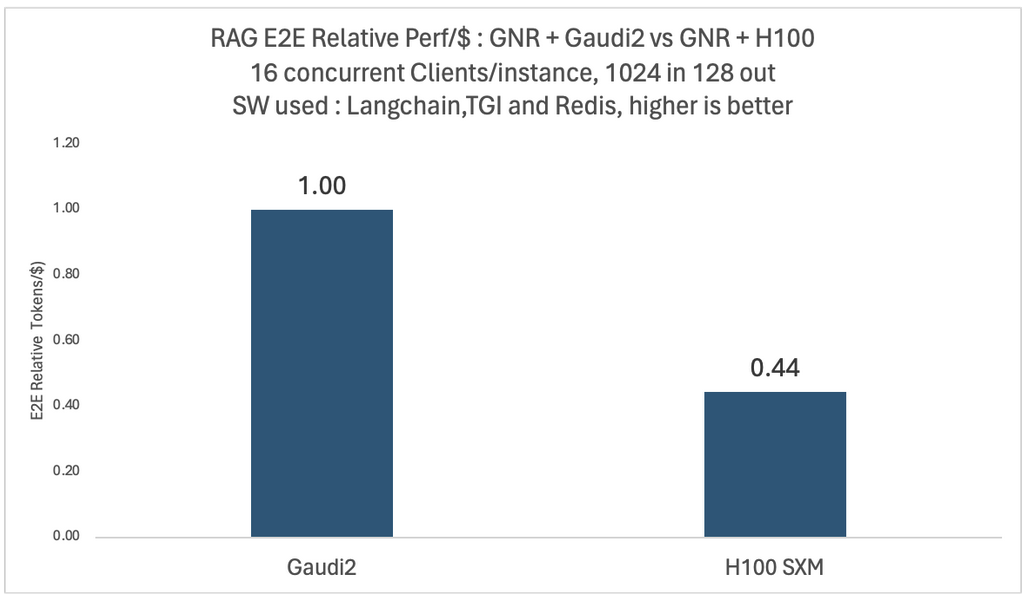

We ran intensive experiments with a variety of models and configurations. The two benchmark figures show the relative end-to-end throughput and the performance-per-dollar comparison for the Llama2-70B model with 16 concurrent users on four Intel Gaudi 2 and four NVIDIA H100 platforms.

In both cases, the same Intel Granite Rapids CPU platform is used for the vector database and the embedding model. For the performance-per-dollar comparison, we use publicly available pricing to compute an average training performance per dollar, similar to the one reported by the MosaicML team in January 2024.

As you can see, the H100-based system delivers 1.13x the throughput but only 0.44x the performance per dollar of Gaudi 2. These comparisons may vary with customer-specific discounts from different cloud providers. Detailed benchmark configurations are listed at the end of the post.
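
Taken together, the two ratios imply a relative price. The short calculation below assumes that performance per dollar is simply throughput divided by price; the post does not spell out the exact formula, so treat the result as a back-of-the-envelope reading rather than a reported figure.

```python
def implied_cost_ratio(throughput_ratio: float, perf_per_dollar_ratio: float) -> float:
    """Relative price implied by perf_per_dollar = throughput / price (an assumption)."""
    if perf_per_dollar_ratio <= 0:
        raise ValueError("perf_per_dollar_ratio must be positive")
    return throughput_ratio / perf_per_dollar_ratio


# H100 relative to Gaudi 2, using the two ratios quoted in the text.
ratio = implied_cost_ratio(throughput_ratio=1.13, perf_per_dollar_ratio=0.44)
print(f"Implied H100 / Gaudi 2 price ratio: {ratio:.2f}x")
```

Under that assumption, the H100-based system would cost roughly 2.6 times as much as the Gaudi 2 system for 13% more throughput, which is where the gap in performance per dollar comes from.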

The example deployment above demonstrates a RAG-based chatbot on Intel platforms. In addition, as Intel keeps releasing ready-to-use GenAI examples, developers benefit from validated tools that simplify creation and deployment. These examples offer versatility and ease of customization, making them well suited to a wide range of applications on Intel platforms.

When running enterprise AI applications, systems based on Intel Granite Rapids CPUs and Gaudi 2 accelerators offer a more favorable total cost of ownership. FP8 optimization can bring further improvements.

The following developer resources should help you kickstart your GenAI projects with confidence.

If you have any questions or feedback, we would be happy to answer them on the Hugging Face forum. Thank you for reading!

Acknowledgements: We would like to thank Chaitanya Khen, Suyue Chen, Mikolaj Zyczynski, Wenjiao Yue, Wenxin Zhang, Letong Han, Sihan Chen, Hanwen Cheng, Yuan Wu, and Yi Wang for their contributions to building enterprise-grade RAG systems on Intel Gaudi 2.

Benchmark configuration

Gaudi2 configuration: HLS-Gaudi2 with eight Habana Gaudi2 HL-225H Mezzanine cards, two Intel® Xeon® Platinum 8380 CPUs @ 2.30GHz, and 1TB of system memory. OS: Ubuntu 22.04.03, 5.15.0 kernel

H100 SXM configuration: Lambda Labs instance gpu_8x_h100_sxm5; 8x H100 SXM, two Intel® Xeon® Platinum 8480 CPUs @ 2GHz, and 1.8TB of system memory. OS: Ubuntu 20.04.6 LTS, 5.15.0 kernel

Intel Xeon: production Granite Rapids platform with 2S x 120C @ 1.9GHz and 8800 MCR DIMMs with 1.5TB of system memory. OS: CentOS 9, 6.2.0 kernel

Llama2 70B is deployed on four cards (queries normalized to eight cards), using BF16 on Gaudi2 and FP16 on H100.

The embedding model is BAAI/bge-base v1.5. Tested with: TGI-gaudi 1.2.1, TGI-GPU 1.4.5, Python 3.11.7, LangChain 0.1.11, sentence-transformers 2.5.1, LangChain Benchmarks 0.0.10, Redis 5.0.2, CUDA 12.2.r12.2/compiler.32965470_0, TEI 1.10

RAG queries have a maximum input length of 1024 and a maximum output length of 128. Test dataset: LangSmith Q&A. Number of concurrent clients: 16

TGI parameters for Gaudi2 (70B): batch_bucket_size=22, prefill_batch_bucket_size=4, max_batch_prefill_tokens=5102, max_batch_total_tokens=32256, max_waiting_tokens=5, streaming=false

TGI parameters for H100 (70B): batch_bucket_size=8, prefill_batch_bucket_size=4, max_batch_prefill_tokens=4096, max_batch_total_tokens=131072, max_waiting_tokens=20, max_batch_size=128, streaming=false

TCO Reference: https://www.databricks.com/blog/llm-training-and-inference-gaudi2-ai-aaccelerators
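
The benchmark configuration above lists 16 concurrent clients. For readers who want to try a similar load pattern against their own deployment, here is a minimal sketch of measuring queries per second with a fixed number of client threads. It is not the harness used for the results in this post: `measure_throughput` and `fake_query` are hypothetical names, and `fake_query` merely sleeps in place of a real RAG request.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


def measure_throughput(send_query: Callable[[str], str], queries: Sequence[str],
                       num_clients: int = 16) -> tuple[list[str], float]:
    """Run queries from num_clients worker threads; return (results, queries per second)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_clients) as pool:
        results = list(pool.map(send_query, queries))
    elapsed = time.perf_counter() - start
    qps = len(results) / elapsed if elapsed > 0 else float("inf")
    return results, qps


def fake_query(question: str) -> str:
    """Hypothetical stand-in for a real RAG request; sleeps instead of calling a server."""
    time.sleep(0.01)
    return f"answer to: {question}"


results, qps = measure_throughput(fake_query, [f"question {i}" for i in range(32)])
print(f"{len(results)} queries completed, {qps:.1f} queries/s")
```

To measure a real deployment, replace `fake_query` with a function that posts the question to your RAG service and returns the answer; threads are sufficient here because the clients spend their time waiting on the network.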