A few days before its Worldwide Developers Conference (WWDC), Apple published a study titled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity.” It examines how far AI models such as Anthropic’s Claude, OpenAI’s o-series models, DeepSeek R1, and Google’s thinking models can scale to replicate human reasoning. Spoiler alert: not as far as the AI marketing pitch would suggest. Could this hint at where Apple’s AI conversation is headed before the keynote?

The study questions the current standard evaluation of large reasoning models (LRMs), which relies on established mathematics and coding benchmarks. It argues that these benchmarks suffer from data contamination and reveal little about the structure and quality of reasoning traces. Instead, the researchers propose a controlled experimental testbed built on algorithmic puzzle environments. I have written before about the limitations of AI benchmarks and the need for them to evolve.

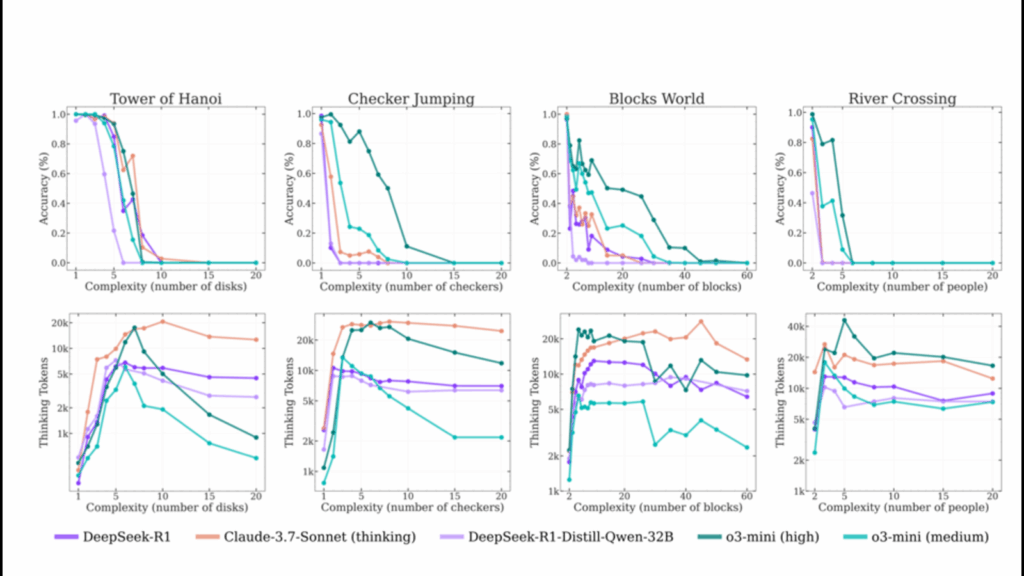

“We show that state-of-the-art LRMs (e.g., o3-mini, DeepSeek-R1, Claude-3.7-Sonnet-Thinking) still fail to develop generalizable problem-solving capabilities, with accuracy ultimately collapsing to zero beyond certain complexities across different environments,” the researchers’ paper states. These findings are a stark warning to the industry: today’s LLMs are far from general-purpose reasoners.

The emergence of large reasoning models (LRMs) such as OpenAI’s o1/o3, DeepSeek-R1, Claude 3.7 Sonnet Thinking, and Gemini Thinking has been hailed as an important advance that potentially marks a step towards more general artificial intelligence. These models characteristically generate a detailed “thinking process,” such as a long chain of thought, before providing the final answer. They have shown promising results on various reasoning benchmarks, but whether those benchmarks can properly judge such rapidly evolving models is itself questionable.

The researchers compare non-thinking LLMs with their “thinking” counterparts. “When complexity is low, non-thinking models are more accurate and token-efficient. As complexity increases, reasoning models require more tokens,” they note. The example of Claude 3.7 Sonnet and Claude 3.7 Sonnet Thinking shows both models maintaining accuracy up to complexity level 3, while the thinking model uses significantly more tokens.

The study set out to challenge the prevailing evaluation paradigm, which often relies on established mathematical and coding benchmarks that are susceptible to data contamination. Such benchmarks also focus primarily on the accuracy of the final answer, providing limited insight into the reasoning process itself, which is the key differentiator of a “thinking” model compared with a standard large language model. To address these gaps, the study uses four controllable puzzle environments: Tower of Hanoi, Checker Jumping, River Crossing, and Blocks World. These puzzles allow researchers to precisely manipulate the complexity of the problem while maintaining consistent logical structures and rules that must be explicitly followed. That structure, in theory, opens a window into how these models attempt to “think.”
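To make the idea of a controllable puzzle environment concrete, here is a minimal sketch of a Tower of Hanoi checker in Python. It is not the paper’s code: the function names (`initial_state`, `solve`, `check_solution`) and the move format (pairs of peg indices) are assumptions made for illustration. The sketch shows the two properties described above: complexity is a single knob (the number of disks), and every move in a proposed answer can be checked against the rules.

```python
from typing import List, Optional, Tuple

# A move is (source peg, destination peg); pegs are numbered 0, 1, 2.
# This encoding is an assumption for illustration only.
Move = Tuple[int, int]


def initial_state(n_disks: int) -> List[List[int]]:
    """All disks start on peg 0, largest at the bottom, smallest on top."""
    return [list(range(n_disks, 0, -1)), [], []]


def solve(n: int, src: int = 0, aux: int = 1, dst: int = 2) -> List[Move]:
    """Classic recursive reference solution; it uses 2**n - 1 moves."""
    if n == 0:
        return []
    # Move n-1 disks out of the way, move the largest, then move n-1 back on top.
    return solve(n - 1, src, dst, aux) + [(src, dst)] + solve(n - 1, aux, src, dst)


def check_solution(n_disks: int, moves: List[Move]) -> Tuple[bool, Optional[int]]:
    """Replay a proposed move list step by step.

    Returns (solved, index_of_first_illegal_move). The index is None
    when every move is legal, even if the puzzle ends up unsolved.
    """
    pegs = initial_state(n_disks)
    for i, (src, dst) in enumerate(moves):
        if src not in range(3) or dst not in range(3):
            return False, i  # peg index out of range
        if not pegs[src]:
            return False, i  # nothing to move from an empty peg
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return False, i  # a larger disk may not sit on a smaller one
        pegs[dst].append(pegs[src].pop())
    solved = pegs[2] == list(range(n_disks, 0, -1))
    return solved, None


if __name__ == "__main__":
    for n in range(1, 6):
        moves = solve(n)
        print(n, len(moves), check_solution(n, moves))
```

Raising `n_disks` makes the shortest solution grow exponentially while the rules stay the same, which is what lets an evaluator scale difficulty without changing the task. Because the checker reports the first illegal move, it can also be used to inspect where in a long answer a model goes wrong, not only whether the final state is correct.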

Findings from this controlled experimental setup reveal a major limitation of current frontier LRMs. One of the most prominent observations is the complete accuracy collapse that occurs beyond a specific complexity threshold in all of the reasoning models tested. This is not a gradual deterioration but a sudden drop to near-zero accuracy once the problem becomes difficult enough.

“State-of-the-art LRMs (e.g., o3-mini, DeepSeek-R1, Claude-3.7-Sonnet-Thinking) still fail to develop generalizable problem-solving capabilities, with accuracy ultimately collapsing to zero beyond certain complexities across different environments.”

These results inevitably challenge the notion that LRMs truly possess the generalizable problem-solving skills required for planning tasks or multi-step processes. The study also identifies a counterintuitive scaling limit in the models’ reasoning effort, measured by the number of tokens used during the “thinking” phase. The models initially spend more tokens as problems get harder, but their reasoning effort actually declines as they approach the point of accuracy collapse.

The researchers write, “Despite these claims and performance advancements, the fundamental benefits and limitations of LRMs remain insufficiently understood. Critical questions still persist: Are these models capable of generalizable reasoning, or are they leveraging different forms of pattern matching?” Further questions concern how performance scales as problem complexity increases, how the models compare with their non-thinking standard LLM counterparts when given the same inference token compute, what the inherent limitations of current reasoning approaches are, and what improvements are needed to advance towards more robust reasoning.

Where do we go from here?

The researchers acknowledge that their test methods have limitations as well. “While our puzzle environments enable controlled experimentation with fine-grained control over problem complexity, they represent a narrow slice of reasoning tasks and may not capture the diversity of real-world or knowledge-intensive reasoning problems,” they say. They add that “the use of deterministic puzzle simulators assumes that reasoning can be perfectly validated step by step.” That assumption, they say, limits how well the analysis transfers to more general reasoning.

There is little argument that LRMs represent progress, especially for the relevance of AI. However, this study highlights that reasoning models are not uniformly capable, particularly in the face of increased complexity. Coming from Apple researchers just ahead of WWDC 2025, these findings may suggest that any AI reasoning announcements are likely to be practical ones. The focus could be on specific use cases where current AI methods can be trusted (the research paper points to low-to-moderate complexity and less reliance on flawless execution of long sequences). We may be in the age of large reasoning models, but this study of “the illusion of thinking” suggests that AI with true reasoning remains a mirage.