Neural networks struggle to generalize to out-of-distribution (OOD) data that deviates from the in-distribution (ID) training data. This generalization gap causes serious reliability problems in real-world machine learning applications. Recent research has uncovered useful heuristics that describe model behavior across distribution-shift benchmarks, notably the “accuracy-on-the-line” (ACL) and “agreement-on-the-line” (AGL) phenomena. However, empirical evidence shows that these linear performance trends can break down catastrophically under some distribution shifts. For example, models with high in-distribution accuracy (92-95%) can see OOD accuracy fall anywhere in the 10-50% range, which makes traditional performance-forecasting methods unreliable.
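
To make the ACL idea concrete, here is a minimal sketch of how one might check whether a set of models lies "on the line". It assumes the common convention of comparing ID and OOD accuracies after a probit transform; the accuracy values and the helper names (`probit`, `pearson_r`, `acl_correlation`) are hypothetical and chosen only for illustration.

```python
from statistics import NormalDist


def probit(p):
    """Inverse standard-normal CDF; p must lie strictly between 0 and 1."""
    return NormalDist().inv_cdf(p)


def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def acl_correlation(id_acc, ood_acc):
    """Correlation between probit-scaled ID and OOD accuracies."""
    return pearson_r([probit(a) for a in id_acc], [probit(a) for a in ood_acc])


# Hypothetical accuracies for four models (not taken from the paper).
id_acc = [0.92, 0.93, 0.94, 0.95]
ood_scattered = [0.45, 0.12, 0.50, 0.20]  # trend broken: OOD anywhere in 10-50%
ood_aligned = [0.60, 0.64, 0.68, 0.72]    # trend holds: OOD rises with ID

r_scattered = acl_correlation(id_acc, ood_scattered)
r_aligned = acl_correlation(id_acc, ood_aligned)
print(f"scattered r = {r_scattered:.2f}, aligned r = {r_aligned:.2f}")
```

A correlation close to 1 suggests ID accuracy is a usable predictor of OOD accuracy for that shift; a weak correlation is the failure case described above.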

Existing research has explored various approaches to understanding and mitigating distribution shift in neural networks. Theoretical studies have investigated the conditions under which the accuracy and agreement linear trends hold or break. Researchers found that certain transformations of the data distribution, such as adding anisotropic Gaussian noise, can disrupt the linear correlation between in-distribution and out-of-distribution performance. Test-time adaptation techniques have emerged as a promising direction for improving model robustness, employing strategies such as self-supervised learning, updates to batch normalization parameters, and pseudo-label generation. These methods aim to produce more adaptive models that maintain performance across different data distributions.
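
As a rough illustration of the batch-normalization family of test-time adaptation, the sketch below re-estimates normalization statistics on an unlabeled test batch instead of reusing the training statistics. This is a simplified, one-dimensional toy and not any specific published method; the feature values and the `normalize` helper are assumptions made for the example.

```python
from statistics import fmean, pvariance


def normalize(batch, mean, var, eps=1e-5):
    """Batch-norm style normalization with the given mean and variance."""
    return [(x - mean) / (var + eps) ** 0.5 for x in batch]


# Hypothetical 1-D feature activations seen during training.
train_feats = [-1.0, 0.0, 1.0, 2.0]
# A shifted test batch: the same features, scaled by 3 and offset by 5.
test_feats = [3.0 * x + 5.0 for x in train_feats]

train_mean, train_var = fmean(train_feats), pvariance(train_feats)

# What downstream layers saw during training.
reference = normalize(train_feats, train_mean, train_var)
# No adaptation: stale training statistics applied to shifted test data.
stale = normalize(test_feats, train_mean, train_var)
# Adaptation: statistics re-estimated from the unlabeled test batch itself.
adapted = normalize(test_feats, fmean(test_feats), pvariance(test_feats))

print("stale:  ", [round(v, 2) for v in stale])
print("adapted:", [round(v, 2) for v in adapted])
```

In this toy case the shift is a pure scale-and-offset, so re-estimating the statistics brings the test features back to the range seen in training. Real shifts are messier, which is why the methods mentioned above also update parameters or use pseudo-labels.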

Researchers from Carnegie Mellon University and the Bosch Center for AI have proposed a new approach to address the challenge of distribution shifts in neural networks. Their key finding is that recent test-time adaptation (TTA) techniques not only improve OOD performance but also strengthen the ACL and agreement-on-the-line (AGL) trends across models. The researchers show that TTA can turn complex distribution shifts into more predictable transformations in the feature embedding space. In effect, the adaptation collapses intricate changes in the data distribution into a single “scaling” variable, which allows more accurate estimates of model performance across different distribution shifts. This in turn provides a systematic way to select hyperparameters and adaptation strategies without requiring labeled OOD data.
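
The label-free estimation idea can be sketched roughly as follows: fit a line between ID and OOD agreement of model pairs (neither requires OOD labels), then apply that line to each model's ID accuracy. This is a simplified sketch in the spirit of agreement-on-the-line estimation, not the authors' implementation; all function names and the toy predictions are hypothetical.

```python
from itertools import combinations
from statistics import NormalDist

_NORMAL = NormalDist()


def probit(p, eps=1e-6):
    """Inverse normal CDF, clipped so rates of exactly 0 or 1 stay finite."""
    return _NORMAL.inv_cdf(min(max(p, eps), 1.0 - eps))


def rate_equal(a, b):
    """Fraction of positions where two sequences match."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + bias."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx


def estimate_ood_accuracy(id_preds, ood_preds, id_labels):
    """Estimate each model's OOD accuracy without OOD labels.

    id_preds / ood_preds hold one prediction list per model.
    """
    pairs = list(combinations(range(len(id_preds)), 2))
    x = [probit(rate_equal(id_preds[i], id_preds[j])) for i, j in pairs]
    y = [probit(rate_equal(ood_preds[i], ood_preds[j])) for i, j in pairs]
    slope, bias = fit_line(x, y)  # line fitted on agreement only
    return [_NORMAL.cdf(slope * probit(rate_equal(p, id_labels)) + bias)
            for p in id_preds]


# Toy binary predictions from three hypothetical models.
id_labels = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
id_preds = [
    [1, 1, 0, 1, 0, 1, 0, 1, 0, 1],
    [0, 0, 1, 1, 0, 1, 0, 1, 0, 1],
    [1, 0, 0, 0, 0, 1, 0, 1, 0, 1],
]
ood_preds = [
    [0, 1, 0, 1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 1, 1, 0, 0, 0, 1],
    [1, 1, 0, 0, 0, 1, 1, 1, 0, 1],
]

estimates = estimate_ood_accuracy(id_preds, ood_preds, id_labels)
print([round(e, 3) for e in estimates])
```

Because nothing in the estimate touches OOD labels, the same procedure could in principle be repeated for each candidate hyperparameter setting to compare them. The estimate is only trustworthy when the agreement trend is strongly linear, which is the condition TTA is reported to improve.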

The study uses a comprehensive experimental framework to rigorously evaluate TTA techniques across different distribution shifts. The experimental setup includes 15 failure shifts across the CIFAR10-C, CIFAR100-C, and ImageNet-C datasets, focusing on scenarios with historically weak performance correlations. It covers an extensive collection of models spanning more than 30 architectures, including convolutional neural networks such as VGG, ResNet, DenseNet, and MobileNet, and vision transformers such as ViT, DeiT, and SwinT. Seven state-of-the-art test-time adaptation methods were investigated, covering diverse adaptation strategies such as self-supervision and different parameter-update schemes that target batch normalization layers, layer normalization layers, or the feature extractor.

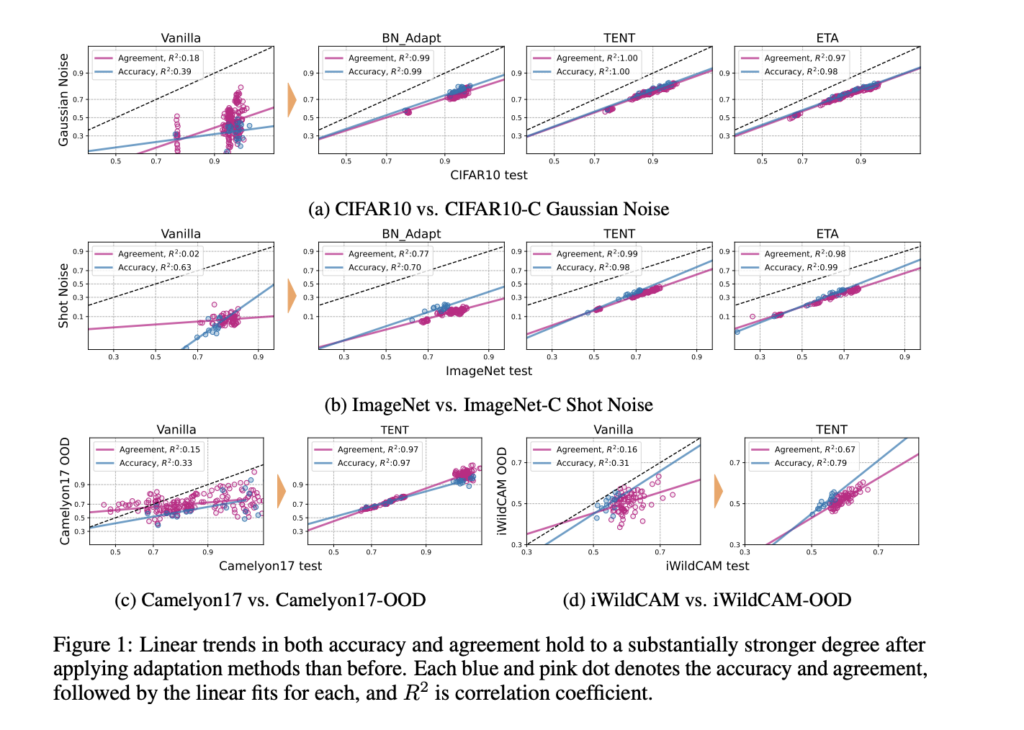

The experimental results show that model behavior changes substantially after applying TTA. Distribution shifts that previously exhibited weak correlation trends, such as CIFAR10-C Gaussian noise, ImageNet-C shot noise, Camelyon17-WILDS, and iWildCam-WILDS, show dramatically improved correlation coefficients. In particular, methods like TENT yield remarkable improvements, converting weak trends into highly consistent linear relationships between in-distribution and out-of-distribution accuracy and agreement. These observations hold across multiple distribution shifts and adaptation methods. Moreover, models adapted with the same method but different hyperparameters also show strong linear trends across distribution shifts.

In conclusion, the researchers present an important advance in understanding how TTA techniques behave under distribution shift. By demonstrating that recent TTA techniques can significantly strengthen AGL trends across different scenarios, the study shows how complex distribution shifts can be reduced to more predictable transformations. This observation enables more accurate OOD performance estimation without labeled OOD data. There are potential limitations, however, particularly the need for sufficient ID data to estimate agreement rates. Finally, the study opens promising avenues for future research on fully test-time methods for observing and exploiting AGL trends.

Check out the paper. All credit for this research goes to the researchers of this project.

Sajjad Ansari is a final-year undergraduate student at IIT Kharagpur. As a technology enthusiast, he focuses on understanding the real-world impact of AI technologies and delves into the practical applications of AI. He aims to explain complex AI concepts in a clear and accessible way.