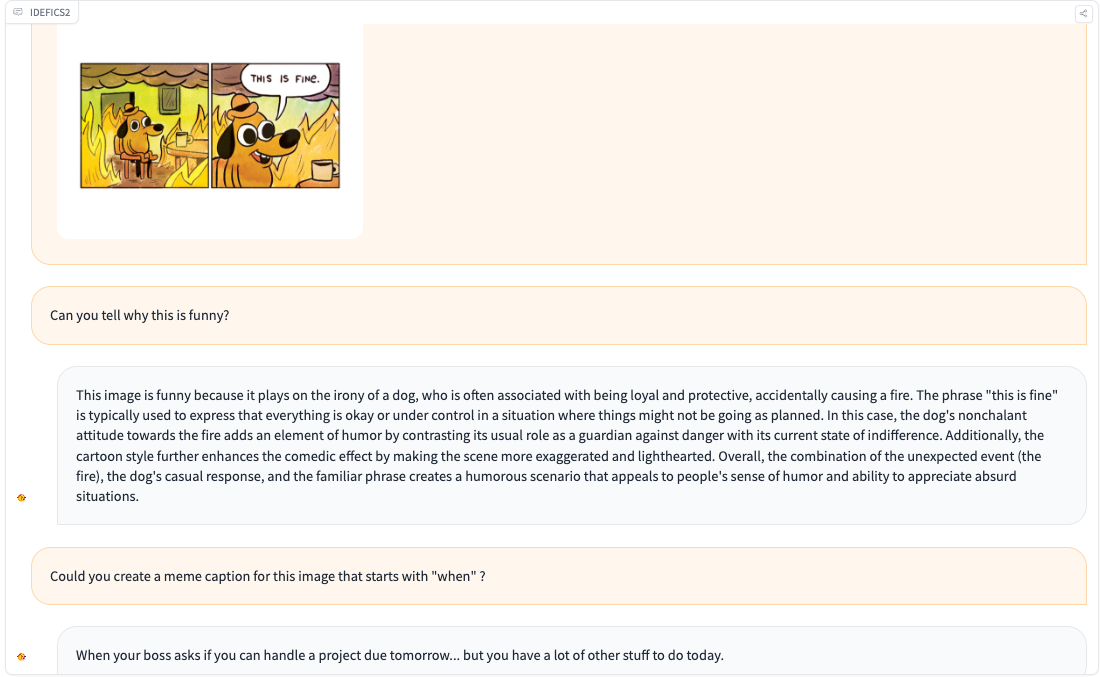

We are excited to release IDEFICS2, a general multimodal model that takes arbitrary sequences of text and images as input and generates text responses. It can answer questions about images, describe visual content, create stories grounded in multiple images, extract information from documents, and perform basic arithmetic operations.

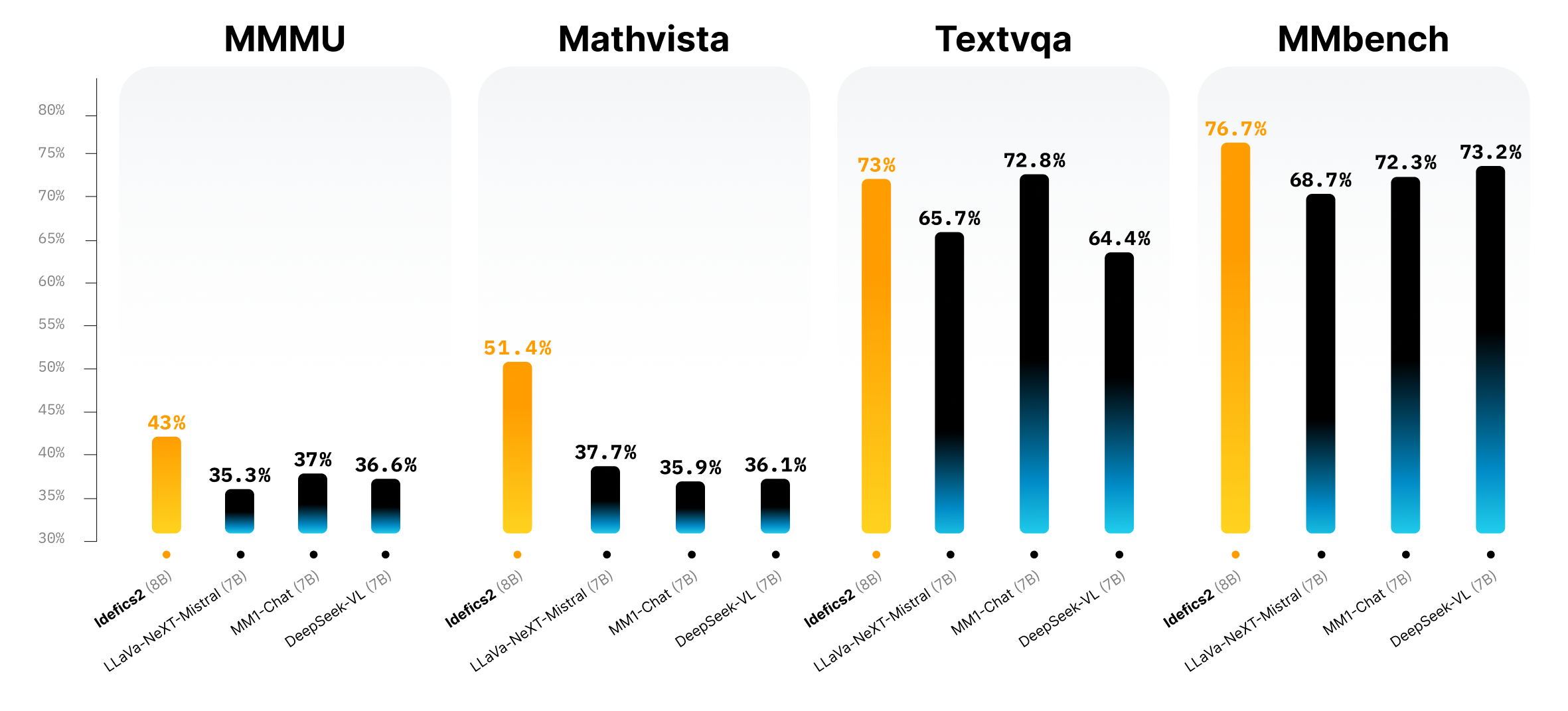

IDEFICS2 improves upon IDEFICS1: with 8B parameters, an open license (Apache 2.0), and enhanced OCR (optical character recognition) capabilities, IDEFICS2 is a strong foundation for the community working on multimodality. Its performance on visual question answering benchmarks is at the top of its size class, and it competes with much larger models such as LLaVA-NeXT-34B and MM1-30B-chat.

IDEFICS2 is also integrated into 🤗 Transformers from the get-go, which makes it easy to fine-tune for many multimodal applications. You can try out the models on the Hub right now!

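| Model | Open weights | Size | # tokens per image | MMMU (val/test) | MathVista (testmini) | TextVQA (val) | MMBench (test) | VQAv2 (test-dev) | DocVQA (test) |
|---|---|---|---|---|---|---|---|---|---|
| DeepSeek-VL | | | | | | | | | |
| LLaVA-NeXT-34B | ✅ | 34B | 2880 | 51.1/44.7 | 46.5 | 69.5 | 79.3 | 83.7 | - |
| MM1-Chat-7B | ❌ | 7B | 720 | 37.0/35.6 | 35.9 | | | | |
| Gemini 1.0 Pro | ❌ | 🤷‍♂️ | 🤷‍♂️ | 47.9/- | 45.2 | 74.6 | - | 71.2 | 88.1 |
| Gemini 1.5 Pro | | | | | | | | | |
| IDEFICS1 | | 80B | - | | | 39.3 | - | 68.8 | - |
| IDEFICS2 (w/o im. split)* | ✅ | 8B | 64 | 43.5/37.9 | 51.6 | 70.4 | 76.8 | 80.8 | 67.3 |
| IDEFICS2 (w/ im. split) | | | | | | | | | |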

* w/ im. split: Following the strategy from SPHINX and LLaVA-NeXT, we allow an optional splitting of each image into 4 sub-images.
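To make the cost of sub-image splitting concrete, here is a rough back-of-the-envelope sketch. It starts from the 64 tokens per image listed for IDEFICS2 without image splitting, and it assumes (this is our assumption, not something stated above) that with splitting the 4 sub-images and the original image are each encoded to the same number of tokens. The helper `visual_tokens` is hypothetical and not part of any library.

```python
# Hypothetical helper for estimating the visual token budget of a prompt.
TOKENS_PER_IMAGE = 64  # value listed for IDEFICS2 without image splitting


def visual_tokens(num_images: int, split: bool, num_crops: int = 4,
                  tokens_per_image: int = TOKENS_PER_IMAGE) -> int:
    """Estimate how many visual tokens a prompt with `num_images` images uses.

    Assumption: when splitting is enabled, every image contributes its
    `num_crops` sub-images plus the original image, and each of those is
    encoded to `tokens_per_image` tokens.
    """
    if num_images < 0:
        raise ValueError("num_images must be non-negative")
    encoded_per_image = num_crops + 1 if split else 1
    return num_images * encoded_per_image * tokens_per_image


print(visual_tokens(1, split=False))  # one image, no splitting
print(visual_tokens(1, split=True))   # one image, 4 sub-images + original
```

Under these assumptions, splitting multiplies the visual sequence length by five, which is worth keeping in mind for prompts that contain many images.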

Training data

IDEFICS2 was pretrained on a mixture of openly available datasets: interleaved web documents (Wikipedia, OBELICS), image-caption pairs (Public Multimodal Dataset, LAION-COCO), OCR data (PDFA (en), IDL and Rendered-text), and image-to-code data (WebSight).

An interactive visualization lets you explore the OBELICS dataset.

Following common practice in the foundation model community, we further train the base model on task-oriented data. However, these data are often in disparate formats and scattered across different places. Gathering them is a barrier for the community. To address that problem, we are releasing the multimodal instruction fine-tuning dataset that we have been cooking: The Cauldron is an open compilation of 50 manually curated datasets formatted for multi-turn conversations. We instruction fine-tuned IDEFICS2 on the concatenation of The Cauldron and various text-only instruction fine-tuning datasets.
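To illustrate what "formatted for multi-turn conversations" can look like in practice, here is a minimal sketch that turns several question-answer pairs about a single image into a list of role/content messages. The input layout (a list of `(question, answer)` tuples), the helper name `to_chat_messages`, and the `"image"`/`"text"` content types are assumptions made for illustration; the actual schema of The Cauldron may differ.

```python
# Hypothetical converter: several QA pairs about one image -> chat messages.
def to_chat_messages(qa_pairs):
    """Build a multi-turn conversation from (question, answer) pairs.

    The image placeholder is attached to the first user turn only, so the
    whole conversation refers to a single image.
    """
    messages = []
    for turn_index, (question, answer) in enumerate(qa_pairs):
        user_content = []
        if turn_index == 0:
            # Placeholder for the image; the pixels are passed separately.
            user_content.append({"type": "image"})
        user_content.append({"type": "text", "text": question})
        messages.append({"role": "user", "content": user_content})
        messages.append({
            "role": "assistant",
            "content": [{"type": "text", "text": answer}],
        })
    return messages


example = to_chat_messages([
    ("What is shown in the chart?", "Monthly sales for 2023."),
    ("Which month is highest?", "December."),
])
for message in example:
    print(message["role"], message["content"])
```

Putting single-turn datasets into one conversational shape like this is what allows many heterogeneous sources to be concatenated into a single fine-tuning mixture.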

Improvements over IDEFICS1

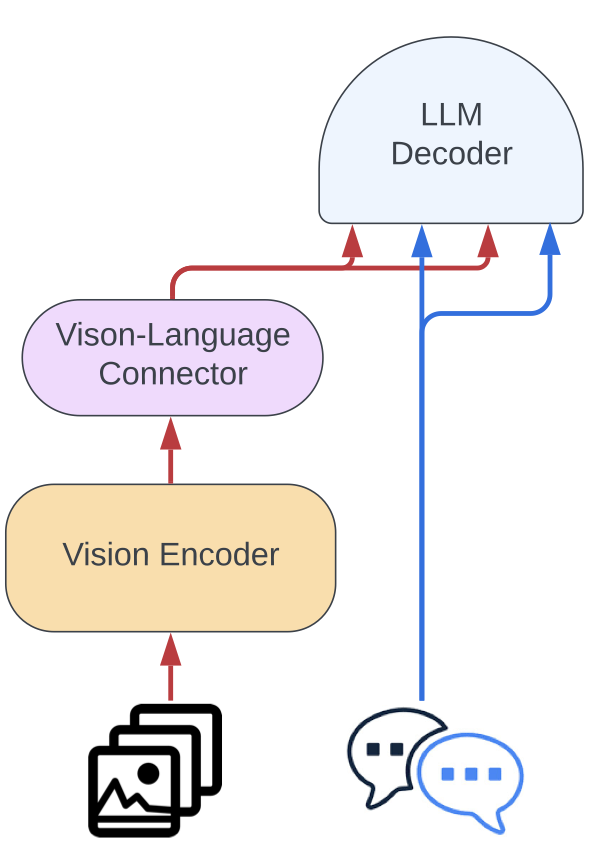

- We handle images at their native resolution (up to 980 x 980) and native aspect ratio by following the NaViT strategy. This avoids the need to resize images to fixed-size squares, as has historically been done in the computer vision community. Additionally, following the strategy from SPHINX, we (optionally) allow sub-image splitting and the passing of very high-resolution images.
- We significantly enhanced OCR capabilities by integrating data that requires the model to transcribe text in an image or a document. We also improved the ability to answer questions about charts, figures, and documents with appropriate training data.
- We departed from the architecture of IDEFICS1 (gated cross-attentions) and simplified the integration of visual features into the language backbone. Images are fed to a vision encoder, followed by a learned Perceiver pooling and an MLP modality projection. The pooled sequence is then concatenated with the text embeddings to obtain an (interleaved) sequence of images and text.
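The image handling described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: `fit_within` and `quadrant_boxes` are hypothetical helpers, the 2x2 grid is one simple way to obtain four sub-images, and the real preprocessing in the model's image processor may differ. The boxes use the `(left, upper, right, lower)` convention accepted by Pillow's `Image.crop`.

```python
MAX_SIDE = 980  # maximum native resolution quoted in the text


def fit_within(width, height, max_side=MAX_SIDE):
    """Shrink (width, height) so the longer side is at most `max_side`.

    The aspect ratio is preserved and images that already fit are left
    untouched (no upscaling, no squashing to a square).
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


def quadrant_boxes(width, height):
    """Return four (left, upper, right, lower) boxes tiling a 2x2 grid."""
    mid_x, mid_y = width // 2, height // 2
    return [
        (0, 0, mid_x, mid_y),           # top-left
        (mid_x, 0, width, mid_y),       # top-right
        (0, mid_y, mid_x, height),      # bottom-left
        (mid_x, mid_y, width, height),  # bottom-right
    ]


print(fit_within(1960, 980))    # landscape image, scaled down by half
print(fit_within(640, 480))     # already small enough, unchanged
print(quadrant_boxes(100, 60))  # crop boxes for the four sub-images
```

Splitting lets each sub-image be encoded at a higher effective resolution than the full image would get on its own, at the price of a longer visual sequence.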

All of these improvements, along with better pre-trained backbones, yield a significant jump in performance over IDEFICS1 for a model that is 10x smaller.

Get started with IDEFICS2

IDEFICS2 is available on the Hugging Face Hub and is supported in the latest Transformers version. Here is a code sample to try it out:

```python
import requests
import torch
from PIL import Image

from transformers import AutoProcessor, AutoModelForVision2Seq
from transformers.image_utils import load_image

device = "cuda:0"

# Download the example images
image1 = load_image("https://cdn.britannica.com/61/93061-050-99147dce/statue-of-liberty-island-new-york-bay.jpg")
image2 = load_image("https://cdn.britannica.com/59/94459-050-dba42467/skyline-chicago.jpg")
image3 = load_image("https://cdn.britannica.com/68/170868-050-8DDE8263/GOLDEN-GATE-BRIDGE-SAN-FRANCISCO.JPG")

# Load the processor and the model, then move the model to the GPU
processor = AutoProcessor.from_pretrained("HuggingFaceM4/idefics2-8b")
model = AutoModelForVision2Seq.from_pretrained(
    "HuggingFaceM4/idefics2-8b",
).to(device)

# Build a multi-turn conversation; each {"type": "image"} entry is a
# placeholder that is matched, in order, with one of the images passed below
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image"},
            {"type": "text", "text": "What do you see in this image?"},
        ],
    },
    {
        "role": "assistant",
        "content": [
            {"type": "text", "text": "In this image we can see New York City, and more specifically the Statue of Liberty."},
        ],
    },
    {
        "role": "user",
        "content": [
            {"type": "image"},
            {"type": "text", "text": "And how about this picture?"},
        ],
    },
]
prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
inputs = processor(text=prompt, images=[image1, image2], return_tensors="pt")
inputs = {k: v.to(device) for k, v in inputs.items()}

# Generate and decode the response
generated_ids = model.generate(**inputs, max_new_tokens=500)
generated_texts = processor.batch_decode(generated_ids, skip_special_tokens=True)

print(generated_texts)
```
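One easy mistake with the sample above is passing a different number of images than there are image placeholders in the conversation (two placeholders and two images in the sample). The sketch below is a small, hypothetical pre-flight check in plain Python; `count_image_placeholders` and `check_images_match` are illustrative helpers, not part of Transformers, and they assume the role/content message layout with `"type": "image"` entries.

```python
# Hypothetical sanity check to run before calling the processor.
def count_image_placeholders(messages):
    """Count the {"type": "image"} entries across all conversation turns."""
    return sum(
        1
        for message in messages
        for part in message["content"]
        if part.get("type") == "image"
    )


def check_images_match(messages, images):
    """Raise if the number of images differs from the number of placeholders."""
    expected = count_image_placeholders(messages)
    if expected != len(images):
        raise ValueError(
            f"Conversation has {expected} image placeholder(s) "
            f"but {len(images)} image(s) were provided."
        )
    return expected


conversation = [
    {"role": "user", "content": [
        {"type": "image"},
        {"type": "text", "text": "What do you see in this image?"},
    ]},
    {"role": "assistant", "content": [
        {"type": "text", "text": "The Statue of Liberty."},
    ]},
    {"role": "user", "content": [
        {"type": "image"},
        {"type": "text", "text": "And how about this picture?"},
    ]},
]

# Stand-ins for the two loaded images; only the count matters here.
print(check_images_match(conversation, ["image1", "image2"]))
```

Running a check like this before tokenization gives a clearer error message than a shape mismatch surfacing later in the pipeline.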

We also provide a fine-tuning colab, which should come in handy for anyone looking to improve IDEFICS2 on a specific use case.

Resources

If you want to dive deeper, here is a compilation of all the resources for IDEFICS2:

License

The model is built on top of two pre-trained models: Mistral-7B-v0.1 and siglip-so400m-patch14-384. Both were released under the Apache-2.0 license. We release the IDEFICS2 weights under the Apache-2.0 license as well.

Acknowledgments

Thank you to the Google Team and Mistral AI for releasing their models and making them available to the open-source AI community!

Thank you to Chun Te Lee for the barplot, and to Merve Noyan for the review and suggestions on the blog post.