While everyone is talking about AI agents and automation, AMD and Johns Hopkins University have been working to improve the way humans and AI collaborate in research. Their new open source framework, Agent Laboratory, is a complete reimagining of how scientific research can be accelerated through human-AI teamwork.

After reviewing numerous AI research frameworks, I found that Agent Laboratory stands out for its hands-on approach. Rather than trying to replace human researchers (as many existing solutions do), it focuses on enhancing their abilities by handling the time-consuming aspects of research while keeping humans in the driver’s seat.

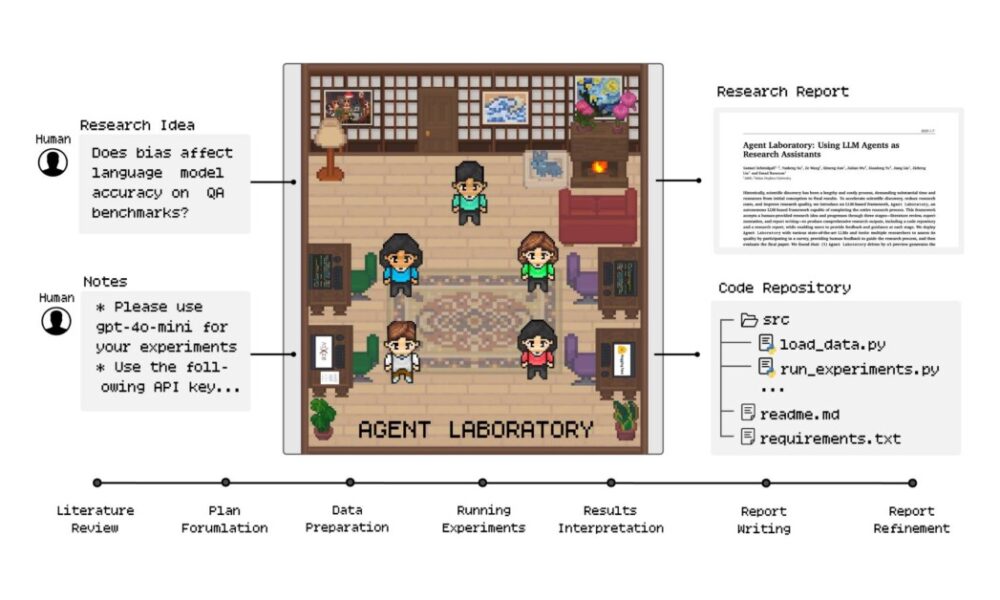

The core innovation here is simple but powerful. Rather than pursuing fully autonomous research (which often leads to questionable results), Agent Laboratory creates a virtual lab in which multiple specialized AI agents work together, each handling a different aspect of the research process while staying anchored to human guidance.

Virtual Lab Details

Think of Agent Laboratory as a well-organized research team in which AI agents play specialized roles. Just like in a real laboratory, each agent has specific responsibilities and expertise.

- The PhD agent works on literature reviews and research planning.
- The Postdoc agent helps refine the experimental approach.
- The ML Engineer agent handles the technical implementation.
- The Professor agent evaluates and scores the research results.

What makes this system particularly interesting is its workflow. Unlike traditional AI tools that work alone, Agent Laboratory creates a collaborative environment where these agents interact and build on each other’s work.

This process follows the natural progression of research.

1. Literature review: The PhD agent uses the arXiv API to search academic papers, collecting and organizing relevant research.
2. Planning: The PhD and Postdoc agents team up to create detailed research plans.
3. Implementation: The ML Engineer agent writes and tests code.
4. Analysis and documentation: The team collaborates to interpret results and generate comprehensive reports.
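To make the hand-off between phases concrete, here is a minimal sketch of the workflow as an ordered pipeline. It is only an illustration: `PHASES`, `ResearchState`, `run_phase`, and `run_pipeline` are hypothetical names, not Agent Laboratory’s actual API, and treating the final phase as involving every agent is an assumption based on the article’s wording.

```python
from dataclasses import dataclass, field
from typing import List

# Assumption: the final phase involves every agent; the article does not list them.
TEAM = ["PhD", "Postdoc", "ML Engineer", "Professor"]

# Ordered phases and the agents responsible for each, as described in the article.
PHASES = [
    ("literature review", ["PhD"]),
    ("planning", ["PhD", "Postdoc"]),
    ("implementation", ["ML Engineer"]),
    ("analysis and documentation", TEAM),
]


@dataclass
class ResearchState:
    """Shared state that each phase reads from and appends to."""
    topic: str
    notes: List[str] = field(default_factory=list)


def run_phase(name: str, agents: List[str], state: ResearchState) -> ResearchState:
    # Placeholder for the real work (LLM calls, arXiv searches, code execution).
    state.notes.append(f"{name}: {', '.join(agents)}")
    return state


def run_pipeline(topic: str) -> ResearchState:
    """Run every phase in order, so later phases build on earlier output."""
    state = ResearchState(topic)
    for name, agents in PHASES:
        state = run_phase(name, agents, state)
    return state


if __name__ == "__main__":
    for note in run_pipeline("example topic").notes:
        print(note)
```

The point of the sketch is the ordering: each phase receives the state left by the previous one, which mirrors how the agents build on each other’s work.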

But here’s where it gets really practical. The framework is computationally flexible, allowing researchers to allocate resources based on access to computing power and budget constraints. This makes it a tool designed for real research environments.

Schmidgall et al.

Human Factors: Where AI and Expertise Meet

Agent Laboratory is packed with great automation features, but the real magic happens in something called “co-pilot mode.” In this setting, researchers can provide feedback at each step of the process, creating true collaboration between human expertise and AI assistance.
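One way to picture the difference between the two modes is a checkpoint after each phase where a reviewer can either accept the draft or send back a note. The sketch below is an assumption about how such a loop could look, not the framework’s implementation; `run_with_checkpoints`, `autonomous`, and `make_reviewer` are hypothetical names, and the drafts are placeholder strings.

```python
from typing import Callable, List, Optional

# A feedback function sees (phase, draft) and returns a note, or None to accept.
Feedback = Callable[[str, str], Optional[str]]


def autonomous(phase: str, draft: str) -> Optional[str]:
    """Autonomous mode: no human in the loop, every draft is accepted."""
    return None


def make_reviewer() -> Feedback:
    """Stand-in for a human who asks for one revision of the plan."""
    seen = set()

    def reviewer(phase: str, draft: str) -> Optional[str]:
        if phase == "planning" and phase not in seen:
            seen.add(phase)
            return "narrow the scope"
        return None

    return reviewer


def run_with_checkpoints(
    phases: List[str], feedback: Feedback, max_revisions: int = 2
) -> List[str]:
    results = []
    for phase in phases:
        draft = f"draft of {phase}"  # placeholder for real agent output
        for _ in range(max_revisions):
            note = feedback(phase, draft)
            if note is None:
                break  # reviewer accepted the draft
            draft = f"{draft} (revised: {note})"
        results.append(draft)
    return results


if __name__ == "__main__":
    phases = ["literature review", "planning", "implementation"]
    print(run_with_checkpoints(phases, autonomous))
    print(run_with_checkpoints(phases, make_reviewer()))
```

In this framing, autonomous mode is just co-pilot mode with a reviewer who never objects, which is why the same pipeline can serve both.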

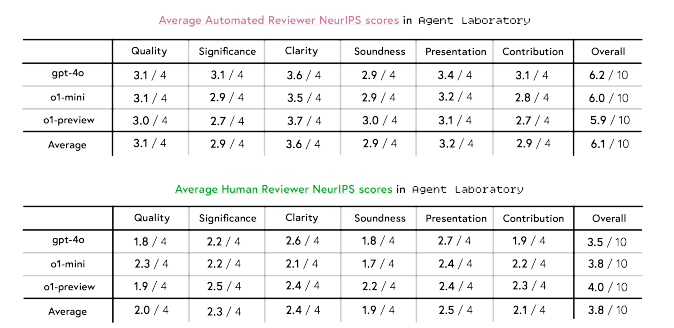

The co-pilot feedback data reveals some compelling insights. In autonomous mode, papers produced by Agent Laboratory received an average score of 3.8/10 in human evaluation. When researchers engaged co-pilot mode, the score rose to 4.38/10. Where these improvements appeared is of particular interest: papers scored notably higher on clarity (+0.23) and presentation (+0.33).

But here’s a reality check: Even with human involvement, the scores for these papers were approximately 1.45 points lower than the average for accepted NeurIPS papers (5.85 points). This is not a failure, but an important learning about how AI and human expertise should complement each other.
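As a quick check on these figures, the arithmetic can be worked through directly. The snippet uses only the rounded scores quoted above, so the computed gap (about 1.47) differs slightly from the “approximately 1.45” cited; the variable names are just for illustration.

```python
# Average human-evaluation scores quoted in the article (out of 10).
autonomous_score = 3.8
copilot_score = 4.38
neurips_accepted_avg = 5.85

# Improvement from adding human feedback in co-pilot mode.
copilot_gain = round(copilot_score - autonomous_score, 2)

# Remaining distance to the average accepted NeurIPS paper.
remaining_gap = round(neurips_accepted_avg - copilot_score, 2)

print(f"co-pilot gain: +{copilot_gain}")
print(f"gap to accepted NeurIPS average: {remaining_gap}")
```

Human feedback closes roughly a quarter of the distance between the autonomous score and the NeurIPS average, which puts the size of the remaining gap in perspective.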

The evaluation revealed something else interesting. AI reviewers consistently rated papers approximately 2.3 points higher than human reviewers. This gap highlights why human oversight remains important in research evaluation.

Schmidgall et al.

Breaking Down the Numbers

What really matters in a research environment? Cost and performance. Agent Laboratory’s model comparison approach reveals surprising efficiency gains in this regard.

GPT-4o emerges as the speed champion, completing the entire workflow in just 1,165.4 seconds (about 19 minutes). That is 3.2x faster than o1-mini and 5.3x faster than o1-preview. More importantly, the cost per paper is only $2.33, an 84% cost reduction compared to previous autonomous research methods, which cost approximately $15.
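The 84% figure and the implied runtimes of the other models follow from simple arithmetic on the numbers above. Note that the o1-mini and o1-preview runtimes below are estimates derived from the rounded speed ratios, not measured values reported here.

```python
gpt4o_seconds = 1165.4   # full workflow runtime quoted in the article
gpt4o_cost = 2.33        # USD per paper
prior_cost = 15.0        # approximate cost of earlier autonomous methods

# Relative cost reduction versus earlier methods.
cost_reduction = (prior_cost - gpt4o_cost) / prior_cost

# Runtimes implied by the quoted speed ratios (estimates only).
o1_mini_seconds_est = gpt4o_seconds * 3.2
o1_preview_seconds_est = gpt4o_seconds * 5.3

print(f"cost reduction: {cost_reduction:.1%}")
print(f"GPT-4o: {gpt4o_seconds / 60:.1f} min")
print(f"o1-mini (est.): {o1_mini_seconds_est / 60:.1f} min")
print(f"o1-preview (est.): {o1_preview_seconds_est / 60:.1f} min")
```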

Looking at the model’s performance, we see the following:

- o1-preview achieved the highest scores for usefulness and clarity.
- o1-mini achieved the highest experimental quality score.
- GPT-4o lagged behind on these metrics but came out ahead on cost efficiency.

The real-world implications here are significant.

Researchers can now choose an approach based on their specific needs.

- Do you need rapid prototyping? GPT-4o offers speed and cost efficiency.
- Do you prioritize the quality of your experiments? o1-mini may be right for you.
- Looking for the most sophisticated output? o1-preview shows promise.

This flexibility means that research teams can adapt the framework to their resources and requirements, rather than being tied to a one-size-fits-all solution.

A New Chapter in Research

After examining the features and results of Agent Laboratory, we believe that a major shift is occurring in the way research is conducted. But it is not the story of displacement that often dominates the headlines. It’s something much more subtle and powerful.

Although papers produced by Agent Laboratory do not yet meet top conference standards on their own, the framework creates a new paradigm for accelerating research. Think of it as having a team of AI research assistants who never sleep, each specializing in a different aspect of the scientific process.

The implications for researchers are profound.

- Time spent on literature reviews and basic coding can be freed up for generating creative ideas.
- Research ideas that might otherwise have been shelved due to resource constraints become viable.
- Hypotheses can be rapidly prototyped and tested, potentially leading to faster breakthroughs.

Current limitations, such as the gap between AI and human review scores, are opportunities. Each iteration of these systems brings us closer to more advanced research collaborations between humans and AI.

Looking ahead, we see three important developments that have the potential to reshape scientific discovery.

- As researchers learn how to effectively leverage these tools, more sophisticated human-AI collaboration patterns will emerge.
- Cost and time savings will democratize research, allowing smaller labs and institutions to pursue more ambitious projects.
- Rapid prototyping capabilities can enable a more experimental approach to research.

The key to maximizing this potential? Understand that Agent Laboratory and similar frameworks are tools for amplification, not automation. The future of research is not about choosing between human expertise and AI capabilities, but about finding innovative ways to combine them.