Over the past few months, I’ve seen multiple news articles that contain “deepfakes” or AI-generated content. From images of Taylor Swift to videos of Tom Hanks and recordings of US President Joe Biden. Deepfakes are increasingly shared on social media platforms, such as by manipulating images of people without their consent, supporting the phishing of personal information, or creating misinformation materials aimed at misleading voters. This allows them to propagate quickly and have a wider reach, which can lead to long-term damage.

This blog post explains the approach to running AI-generated content watermarking, discusses the pros and cons, and presents some of the tools available in the hub.

What is a watermark? How does it work?

Watermarks are methods designed to mark content to convey additional information such as reliability. The watermarks of content generated by AI range from perfectly visible (Figure 1) to invisible (Figure 2). Specifically, watermarks include adding patterns to digital content (such as images) and providing information about the source of the content. These patterns can be recognized either by humans or by algorithms.

There are two main ways to watermark content generated by AI: The first occurs while creating content. This can be more robust considering that it requires access to the model itself, but is automatically embedded as part of the generation process. The second method implemented after content is created can also be applied to content from closed sources or in your own model, if there is a warning that it may not be applicable to all types of content (such as text).

Data addiction and signature technology

In addition to watermarks, several related techniques play a role in limiting nonconsensual image manipulation. Some will perceptually modify images that are shared online so that AI algorithms don’t handle them well. People can see images normally, but AI algorithms have no access to comparable content, and as a result, they cannot create new images. Some tools to change images immediately include Glaze and Photo Guard. Other tools work with “poison” images, breaking assumptions specific to AI algorithm training, making it impossible for AI systems to learn how people look based on images shared online. This makes it difficult for these systems to generate fake images of people. These tools include night shades and forks.

Maintaining content reliability and reliability can also be achieved through the use of “signature” techniques that link content to metadata, such as the task of embedding metadata following the C2PA standard. Image signatures help you understand where the image comes from. Metadata can be edited, but systems like Truepic can help bypass this limitation to 1) verify the validity of the metadata and 2) ensure that it can be integrated with watermarking techniques to make it difficult to delete information.

Open closed watermarks

For the public, there are advantages and disadvantages of providing different levels of access to both watermarks and detectors. Openness helps inspire innovation, as developers can iterate through key ideas and create better systems. However, this should be balanced with malicious use. When the AI pipeline open code calls watermarks, it’s easy to remove the watermark step. Even if that aspect of the pipeline is closed, if the watermark is known and the watermark expression code is open, malicious actors can read the code and understand how to edit the generated content in a way that the watermark doesn’t work. If access to the detector is also available, you can continue to composite editing until the detector returns low confidence, reverting what the watermark has to offer. There is a hybrid open crop approach that directly addresses these issues. For example, the Truepic Waterming code is closed but provides a public JavaScript library that allows you to validate content credentials. The IMATAG code that calls the watermark during generation is open, but the actual watermark and detector are private.

Translate and pass through various types of data

Watermarks are important tools across modalities (audio, images, text, etc.), but each modality brings its own challenges and considerations. Therefore, there is also the intention of watermarking. Either prevent the use of training data from the training model, mark the output of the model to prevent the content from being manipulated, or detect data generated by AI. The current section explores the modalities of different data, the challenges presented for watermarks, and open source tools that exist in facehubs where you can hug them to perform different types of watermarks.

Image watermark

Perhaps the best-known type of watermark (both human-created or AI-created content) is performed on the image. Tagged training data and proposed tagging to affect the output of trained models. The best known method of this type of “image cloaking” approach is “night shades.” The hub has a similar image cloaking tool. For example, Fawkes, developed by the same lab that developed Night Shades, targets images of people whose goal is to block facial recognition systems. Similarly, there is Photoguard. This is intended to protect images against operations using the Generate AI tool, for example, to create deepfakes based on them.

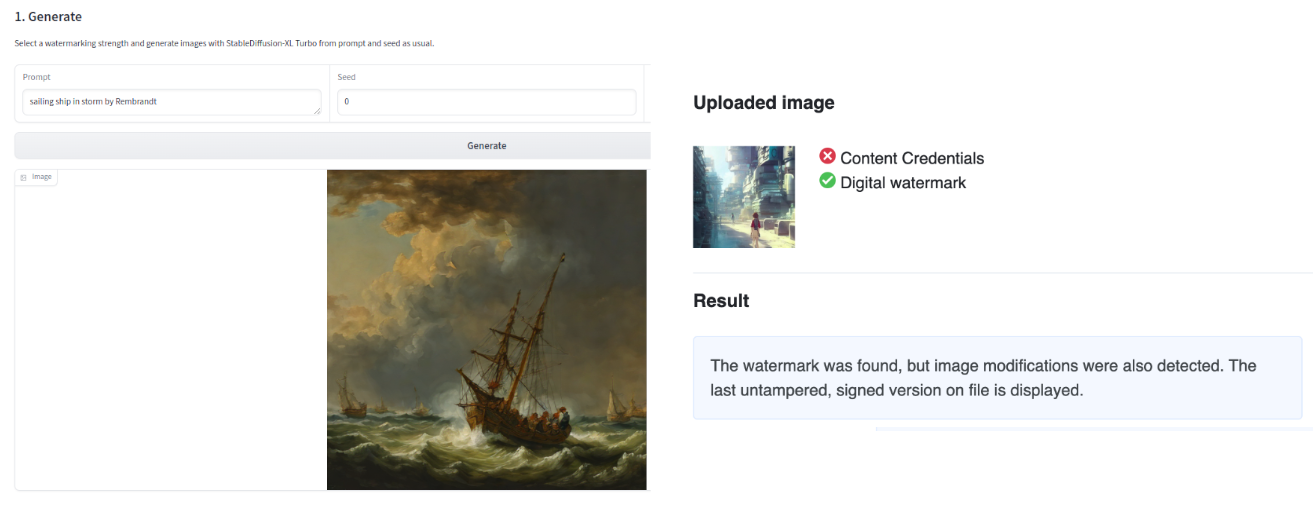

When it comes to the output image of the watermark, the hub has two complementary approaches. IMATAG (see Figure 2) performs watermarks during content generation by taking advantage of modified versions of popular models such as the stable diffused XL turbo.

Truepic also embeds C2PA content credentials in images, allowing you to store metadata about the origin and generation of the image of the image itself. Both Imatag and Truepic spaces can also be watered down by the system to detect images. Both of these detection tools work with their own approaches (i.e. approach specific). Although the hub has a typical existing deep-fark detection space, our experience has shown that these solutions have variable performance depending on the quality of the image and the model used.

Text watermark

Taking the watermarks of images generated by AI, and giving the strong visual nature of this content, the text is a completely different story. Now, the current approach for watermarking relies on the promotion of subpojomaria based on previous texts. Let’s dive into what this will look like with LLM-generated text.

During the generation process, LLM outputs a list of logits for the next token before performing sampling or greedy decoding. Based on previous generated text, most approaches divide all candidate tokens into two groups. They are called “red” and “green.” “Red” tokens are restricted and “Green” groups are advertised. This can occur by allowing red group tokens to be completely resolved (hard watermark) or by increasing the probability of green groups (soft watermark). The more you change the original probability, the more transparent the strength. Waterbench has created a benchmark dataset that facilitates comparison of performance across watermark algorithms while controlling watermark strength in apple and app comparisons.

Detection works by determining the “color” of each token and calculating the probability that the input text will come from the model in question. It is noteworthy that shorter texts have much lower confidence, as they have fewer tokens to look up.

There are several easy ways to implement LLM watermarks on your hugging facehub. LLMS space watermarks (see Figure 3) demonstrate this using the LLM watermark approach in models such as OPT and Flan-T5. Production-level workloads can be used with the Text Generation Inference Toolkit to implement the same watermark algorithm, set corresponding parameters, and use it in modern models.

It has not yet been proven whether universally transparent text is possible, as is the universal watermark of AI-generated images. Approaches such as GLTR are intended to be robust to accessible language models (considering that they rely on comparing the logits of generated text to logits of different models). Detects whether a particular text was generated using the language model without accessing that model (as it is closed source or not knowing which model was used to generate the text) is currently not possible.

As explained above, the method of detecting generated text requires a reliable amount of text. Even so, detectors can have a high false positive rate and are mislabeled in text written as a composite by people. In fact, Openai removed its in-house detection tool in 2023, taking into account low accuracy. This resulted in unintended consequences when teachers used to measure whether student submission assignments were generated using ChatGPT.

Watermark audio

Data extracted from individual voice (VoicePrint) is often used as a biometric security authentication mechanism for identifying individuals. Although it is generally paired with other security factors such as PINs and passwords, this biometric data violation still presents a risk and can be used to access a bank account given that many banks use voice recognition technology to validate clients on the phone. As audio is easier to replicate with AI, methods for verifying the reliability of voice audio should also be improved. Watermark audio content is similar to watermark images in the sense that there is a multidimensional output space that can be used to inject metadata about the source. For audio, watermarks are usually performed at frequencies that the human ear is unaware (below 20,000 Hz) and can be detected using an AI-driven approach.

Given the high stakes nature of audio output, audio content watermarking is an active area of research, with multiple approaches proposed in the past few years (such as wave fuzz, Venomave, etc.).

Audioseal is an audio localized watermark method with state-of-the-art detector speeds without compromising the robustness of the watermark. Co-train generators that embed watermarks in audio and detectors that detect watermark fragments on longer audio, even if editing is present. Audioseal achieves cutting-edge detection performance for both natural and synthetic speech at the sample level (second resolution of 1/16k), creating limited changes in signal quality, and is robust for many types of audio editing.

Audioseal was also used to release seamless registration and seamless streaming demos with safety mechanisms.

Conclusion

Disinformation is accused of producing synthetic content in real life, and cases of inappropriate expression by people without consent can be difficult and time-consuming. Much of the damage is done before corrections and explanations. Therefore, as part of our mission to democratize superior machine learning, we believe it is important to have a mechanism at Face to quickly and systematically identify AI-generated content. AI watermarks are not innocent, but they can be a powerful tool in the fight against the malicious and misleading use of AI.

Related Press Stories