A new study by Dartmouth Health researchers highlights the potential risks of artificial intelligence in medical imaging research, showing that algorithms can be taught to give the correct answer for illogical reasons.

The study, published in Nature’s Scientific Reports, used a cache of 5,000 X-rays of human knee joints and also took into account dietary surveys completed by patients.

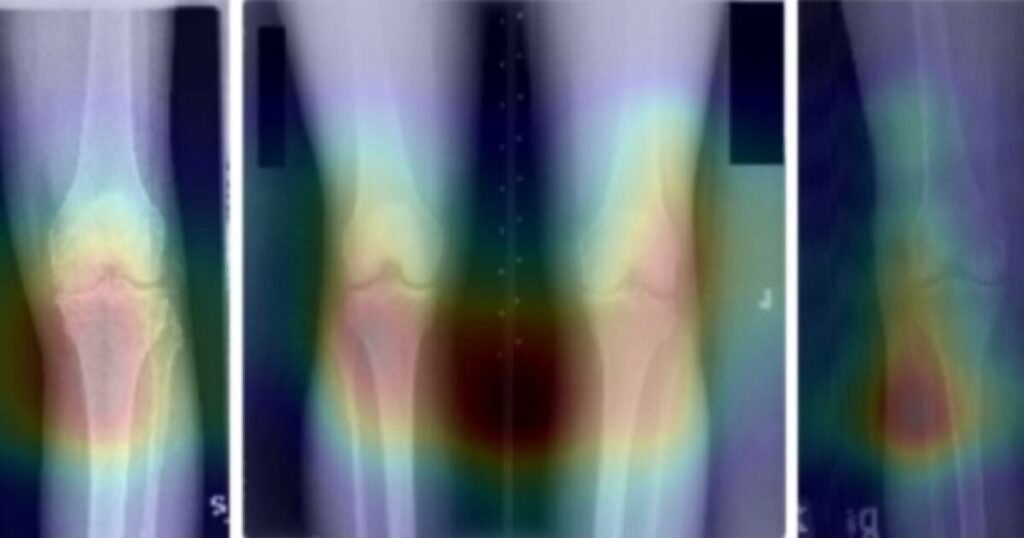

Artificial intelligence software was then asked to identify which patients were more likely to drink beer or eat refried beans based on the X-ray scans, even though the knees showed no visual evidence of either habit.

“We like to assume that it’s seeing what humans see, or what humans would see if they had better vision,” said co-author Brandon Hill, a machine learning researcher at Dartmouth Hitchcock. “And that’s the crux of the issue: when it makes these associations, we infer that it must be from something in the physiology or the medical image. That’s not necessarily the case.”

In fact, the machine learning tools were often accurate in determining which knees, and therefore which of the X-rayed patients, belonged to people more likely to drink beer or eat beans. They did this by also making assumptions about the patients’ race and gender and about the city where the medical image was taken. The algorithm could even identify the model of X-ray machine that took the original image and associate the location of the scan with the likelihood of a particular eating habit.

Ultimately, it was these variables that the AI used to determine who drank beer and ate refried beans, taking what the researchers call “shortcuts.” The images themselves contained nothing related to food or drink consumption.
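The shortcut mechanism described above, in which a model keys on where or how an image was taken rather than on anything in the anatomy, can be illustrated with a toy simulation. The Python sketch below is hypothetical and does not use the study’s data or models: the two sites, their scanner “brightness offsets,” and the beer-drinking rates are invented for illustration, and a simple nearest-centroid rule stands in for the study’s deep learning models.

```python
import random
import statistics

# Hypothetical setup, NOT the study's data: two imaging sites whose scanners
# leave a different brightness "fingerprint", and whose patients differ in how
# often they drink beer. The image feature never depends on beer drinking.
SITES = {
    # site: (scanner brightness offset, fraction of patients who drink beer)
    "site_a": (1.0, 0.7),
    "site_b": (0.0, 0.3),
}


def make_patients(n, rng, scanner_offsets=True):
    """Simulate n patients as (image_brightness, drinks_beer) pairs."""
    patients = []
    for _ in range(n):
        site = rng.choice(list(SITES))
        offset, beer_rate = SITES[site]
        drinks_beer = rng.random() < beer_rate
        # Brightness = scanner fingerprint + noise; no term involves drinks_beer.
        brightness = (offset if scanner_offsets else 0.0) + rng.gauss(0.0, 0.3)
        patients.append((brightness, drinks_beer))
    return patients


def fit_centroids(train):
    """Nearest-centroid 'model': the mean brightness for each label."""
    beer = [b for b, y in train if y]
    no_beer = [b for b, y in train if not y]
    return statistics.mean(beer), statistics.mean(no_beer)


def accuracy(centroids, test):
    """Fraction of test patients whose label matches the nearer centroid."""
    beer_c, no_beer_c = centroids
    correct = 0
    for brightness, drinks_beer in test:
        predicted = abs(brightness - beer_c) < abs(brightness - no_beer_c)
        correct += predicted == drinks_beer
    return correct / len(test)


def run(scanner_offsets, seed=0):
    """Train on one simulated cohort and score on a fresh one."""
    rng = random.Random(seed)
    train = make_patients(5000, rng, scanner_offsets)
    test = make_patients(5000, rng, scanner_offsets)
    return accuracy(fit_centroids(train), test)


if __name__ == "__main__":
    print(f"with scanner fingerprint:    {run(True):.2f}")
    print(f"without scanner fingerprint: {run(False):.2f}")
```

Under these assumed numbers, the model beats chance only when the scanner fingerprint is present; removing the fingerprint drops accuracy to roughly a coin flip, even though the simulated “anatomy” (here, pure noise) is the same in both runs.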

“Part of what we’re showing is that it’s a double-edged sword. It can see things that humans can’t see,” Hill said. “But it can also see patterns that humans can’t see, and that can make it very easy for us to be deceived.”

The study’s authors said the paper highlights what medical researchers should be careful about when deploying machine learning tools.

“If we have an AI that detects whether a credit card transaction appears fraudulent, who cares why it thinks so? Let’s just make sure they can’t charge your credit card,” said Dr. Peter Schilling, an orthopedic surgeon and the paper’s lead author.

In medicine, however, Schilling advises clinicians to proceed cautiously when using these tools to treat patients in order to “really optimize the care provided.”