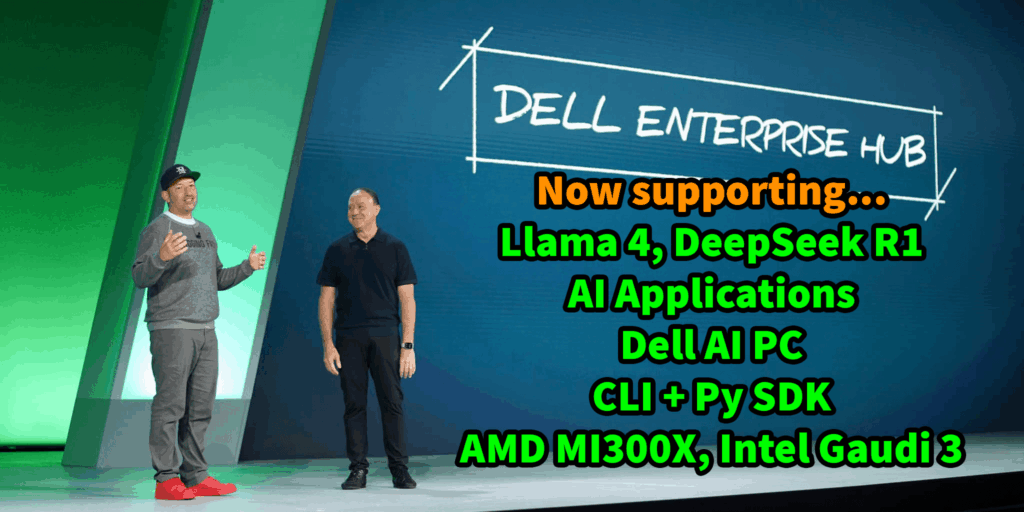

This week at Dell Tech World, we announced a new version of Dell Enterprise Hub, with a complete suite of models and applications that make it easy to run AI on-premises with Dell AI servers and AI PCs.

Models ready for action

Visit Dell Enterprise Hub today and you’ll find some of the most popular models, including Meta Llama 4 Maverick, DeepSeek R1, and Google Gemma 3.

But what you get is more than a model: it’s a fully tested container optimized for a specific Dell AI server platform, with simple instructions for deploying on-premises using Docker and Kubernetes.
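Once a container is running, applications talk to it over HTTP. The post does not say which serving API the containers expose, so the sketch below assumes an OpenAI-compatible Chat Completions endpoint (Text Generation Inference, for example, serves one at `/v1/chat/completions`). `BASE_URL`, the port, and the `"tgi"` model name are hypothetical placeholders; check your container’s deployment instructions for the real values.

```python
import json
import urllib.request

# Hypothetical address of an on-premises container started with Docker or Kubernetes.
BASE_URL = "http://localhost:8080"


def build_chat_request(prompt: str, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style Chat Completions payload (assumed API shape)."""
    return {
        "model": "tgi",  # placeholder; single-model servers typically ignore this field
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of a Chat Completions response."""
    return response["choices"][0]["message"]["content"]


def chat(prompt: str) -> str:
    """POST the prompt to the local server and return the reply text."""
    data = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return extract_reply(json.load(resp))
```

With a container listening at `BASE_URL`, `chat("Hello")` returns the model’s reply. The payload builder and response parser are kept separate from the network call so they can be checked without a live server.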

Meta Llama 4 Maverick can be deployed on NVIDIA H200 or AMD MI300X Dell PowerEdge servers

We work continuously with the Dell CTIO and our engineering team to prepare, test, and optimize the latest and largest models for Dell AI server platforms. The Llama 4 models were available on Dell Enterprise Hub within an hour of their public release by Meta.

Deploying AI Applications

Dell Enterprise Hub features AI applications that you can deploy right away!

If models are the engines, applications are the cars that make them useful, so you can actually get somewhere. The new application catalog lets you build powerful applications that run entirely on-premises for your employees and make use of your internal data and services.

The catalog makes it easy to deploy leading open-source applications, such as OpenWebUI and AnythingLLM, within your private network.

With OpenWebUI, you can easily deploy on-premises chatbot assistants that connect to internal data and services via MCP, and build agentic experiences that can search the web and retrieve internal data from vector databases and storage for RAG use cases.
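To make the RAG step concrete, here is a greatly simplified sketch of what retrieval does: rank stored passages by cosine similarity to the query’s embedding, then prepend the best matches to the prompt. The documents, ids, and 3-dimensional vectors are hypothetical stand-ins; in a real deployment an embedding model produces the vectors and a vector database performs the search.

```python
import math

# Hypothetical toy "vector store": document id -> (text, embedding).
# The 3-dimensional vectors are hand-made stand-ins for real embeddings.
DOCS = {
    "vpn-policy": ("Remote staff must connect through the corporate VPN.", [1.0, 0.0, 0.0]),
    "pto-policy": ("Employees accrue 1.5 days of PTO per month.", [0.0, 1.0, 0.0]),
    "it-helpdesk": ("The IT helpdesk resets VPN tokens on request.", [0.9, 0.1, 0.0]),
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec, docs, k=2):
    """Return the ids of the k documents whose embeddings are closest to the query."""
    ranked = sorted(docs, key=lambda doc_id: cosine(query_vec, docs[doc_id][1]), reverse=True)
    return ranked[:k]


def build_prompt(question, docs, doc_ids):
    """Prepend the retrieved passages so the model answers from internal data."""
    context = "\n".join(f"- {docs[d][0]}" for d in doc_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

For a query embedding of `[1.0, 0.0, 0.0]`, `retrieve` ranks the VPN policy first and the helpdesk note second, so only those two passages reach the model.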

AnythingLLM makes it easy to build powerful agentic assistants that connect to multiple MCP servers, so you can connect internal systems and external services. It includes features to enable multiple models, work with images and documents, and configure role-based access controls for internal users.

These applications are easy to deploy using the provided customizable Helm charts, with MCP servers registered from the get-go.
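MCP messages are JSON-RPC 2.0. A minimal sketch of the `tools/call` request an assistant such as OpenWebUI or AnythingLLM might send to a registered MCP server, and of reading the text content out of the reply. The tool name `search_internal_docs` and its `query` argument are hypothetical, and the transport (stdio or HTTP) is left out.

```python
import itertools
import json

# Monotonic JSON-RPC request ids for this client session.
_ids = itertools.count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def text_from_result(message: str) -> str:
    """Join the text items from an MCP `tools/call` response, raising on errors."""
    reply = json.loads(message)
    if "error" in reply:  # protocol-level JSON-RPC error
        raise RuntimeError(reply["error"].get("message", "JSON-RPC error"))
    result = reply["result"]
    if result.get("isError"):  # the tool itself reported a failure
        raise RuntimeError("tool reported an error")
    return "\n".join(
        item["text"] for item in result["content"] if item.get("type") == "text"
    )
```

Usage, with a hypothetical tool: `make_tool_call("search_internal_docs", {"query": "VPN policy"})` produces the request string to send over whichever transport the server uses.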

Powered by NVIDIA, AMD and Intel

Dell Enterprise Hub is the only platform in the world that provides ready-to-use model deployment solutions for the latest AI accelerator hardware:

- Dell platforms with NVIDIA H100 and H200 GPUs
- Dell platforms with AMD MI300X
- Dell platforms with Intel Gaudi 3

We work directly with Dell, NVIDIA, AMD, and Intel, so when you deploy a container on your system, it is already configured, fully tested, and benchmarked to run with top performance on your Dell AI server platform.

On-device models for Dell AI PCs

In addition to AI servers, the new Dell Enterprise Hub supports models that run on-device on Dell AI PCs.

These models enable on-device speech transcription (OpenAI Whisper), chat assistants (Microsoft Phi and Qwen 2.5), image upscaling, and embedding generation.

To deploy a model, use the new Dell Pro AI Studio and follow the instructions specific to your Dell AI PC with an Intel or Qualcomm NPU. Coupled with a PC fleet management system such as Microsoft Intune, this gives IT organizations a complete solution for equipping employees with on-device AI capabilities.

Now with a CLI and Python SDK

Dell Enterprise Hub offers an online portal to AI capabilities for Dell AI server platforms and AI PCs. But what if you want to work directly from your development environment?

We are introducing the new dell-ai open-source library, with a Python SDK and CLI, so you can use Dell Enterprise Hub directly from the terminal or from code within your environment. Install it with `pip install dell-ai`.

To summarize

With models and applications for AI servers and AI PCs that are easy to install using Docker, Kubernetes, and Dell Pro AI Studio, Dell Enterprise Hub is a complete toolkit for deploying Gen AI applications in the enterprise, fully secure and on-premises.

As a Dell customer, this means you can, very quickly (within an hour rather than weeks):

- Roll out an in-network chat assistant powered by the latest open LLMs and connect it to your internal storage systems (e.g., Dell PowerScale) using MCP, all in an air-gapped environment.
- Give access to complex agentic systems in a managed way.

If you are using Dell Enterprise Hub today, we look forward to hearing from you in the comments.