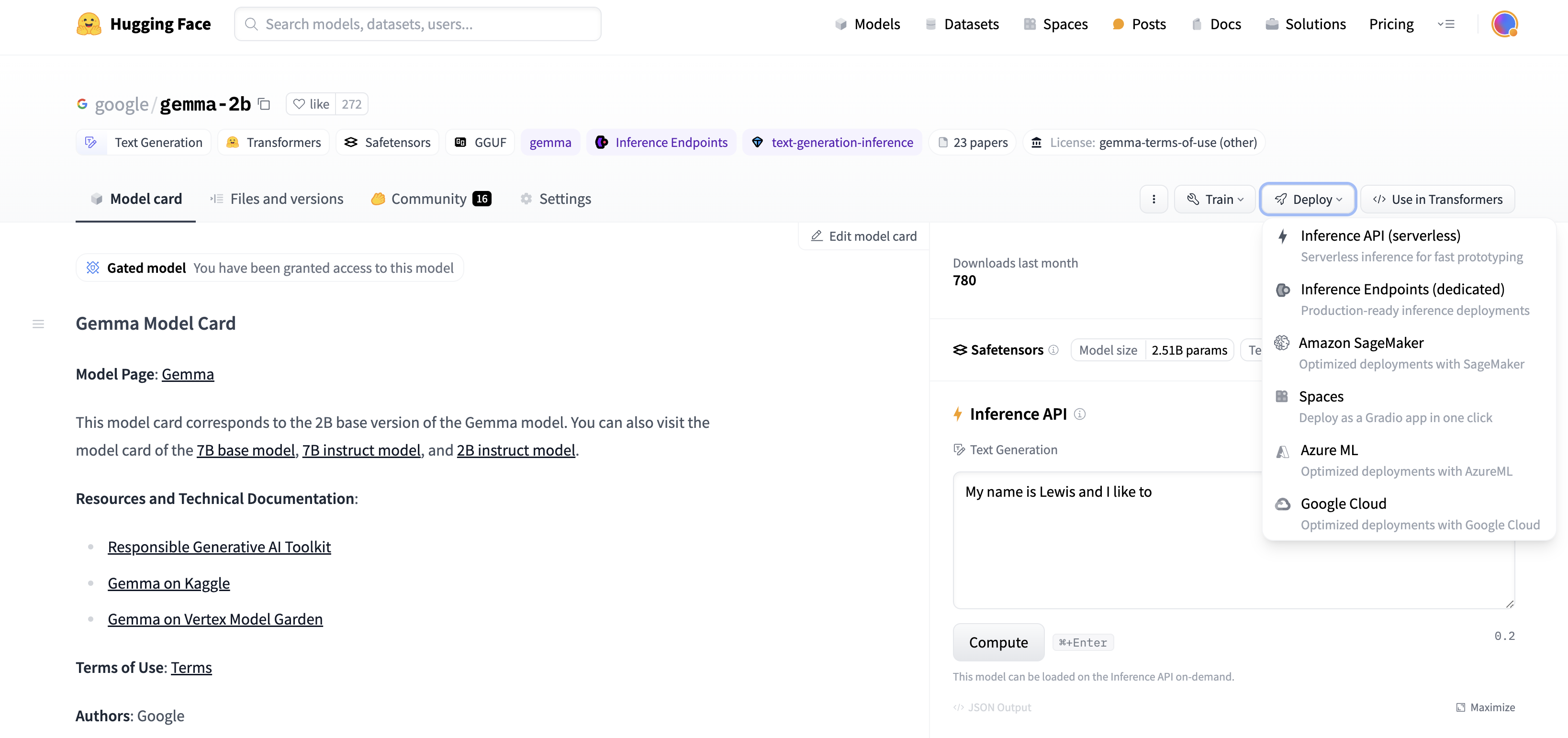

Recently, Gemma, Google DeepMind's family of open-weights language models, was made available to the wider open-source community through Hugging Face. It comes in 2-billion- and 7-billion-parameter sizes, each in pretrained and instruction-tuned variants. It is available on the Hugging Face Hub, supported in TGI (Text Generation Inference), and easily accessible for deployment and fine-tuning in Vertex Model Garden and Google Kubernetes Engine.

The Gemma family of models is also well suited for prototyping and experimentation using the free GPU resources available via Colab. In this post, we briefly review how to perform parameter-efficient fine-tuning (PEFT) of Gemma models on GPUs and Cloud TPUs with the Hugging Face Transformers and PEFT libraries, for anyone who wants to fine-tune Gemma on their own dataset.

Why PEFT?

The default (full-weight) training of language models, even at modest sizes, tends to be memory- and compute-intensive. On one hand, this can be prohibitive for users who rely on openly available compute platforms for learning and experimentation, such as Colab or Kaggle. On the other hand, for enterprise users, the cost of adapting these models to different domains is an important metric to optimize. PEFT, or parameter-efficient fine-tuning, is a popular technique to accomplish this at low cost.

PyTorch on GPU and TPU

Gemma models in Hugging Face Transformers are optimized for both PyTorch and PyTorch/XLA, which lets both TPU and GPU users access and experiment with Gemma models as needed. Together with the Gemma release, the FSDP experience for PyTorch/XLA in Hugging Face has also been improved. This FSDP via SPMD integration also lets other Hugging Face models take advantage of TPU acceleration through PyTorch/XLA. In this post, we focus on PEFT, and more specifically on Low-Rank Adaptation (LoRA), for Gemma models. For a more comprehensive set of LoRA techniques, we encourage readers to review Lialin et al. and this excellent post by Belkada et al.

Low-rank adaptation of large-scale language models

Low-Rank Adaptation (LoRA) is one of the parameter-efficient fine-tuning techniques for large language models (LLMs). It fine-tunes only a small fraction of the model's total parameters: the original model is frozen, and only adapter layers, decomposed into low-rank matrices, are trained. The PEFT library provides an easy abstraction that lets users select the model layers to which the adapter weights should be applied.
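The low-rank idea can be sketched numerically. The NumPy snippet below is a minimal illustration, not PEFT's implementation: it assumes the formulation from the LoRA paper, in which a frozen weight W is augmented with a trainable product B A scaled by alpha / r, with B initialized to zero. The sizes are arbitrary and do not correspond to Gemma.

```python
import numpy as np


def lora_forward(x, W, A, B, alpha):
    """Frozen projection plus the low-rank update: W @ x + (alpha / r) * B @ (A @ x)."""
    r = A.shape[0]  # adapter rank
    return W @ x + (alpha / r) * (B @ (A @ x))


rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 64, 64, 8, 16  # illustrative sizes, not Gemma's real dimensions

W = rng.standard_normal((d_out, d_in))     # frozen pretrained weight (never updated)
A = rng.standard_normal((r, d_in)) * 0.01  # trainable down-projection
B = np.zeros((d_out, r))                   # trainable up-projection, zero-initialized

x = rng.standard_normal(d_in)
# Because B starts at zero, the adapted layer initially matches the frozen layer exactly.
assert np.allclose(lora_forward(x, W, A, B, alpha), W @ x)

# Whatever values training gives B, the weight update B @ A has rank at most r.
B_trained = rng.standard_normal((d_out, r))
assert np.linalg.matrix_rank(B_trained @ A) <= r

full_params = d_out * d_in        # entries a full fine-tune would update: 4096
lora_params = r * (d_in + d_out)  # entries LoRA trains for this layer: 1024
print(full_params, lora_params)
```

Even at this toy size the adapter trains a quarter of the entries; for wide layers with a small r, the ratio becomes far smaller.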

from peft import LoraConfig
lora_config = LoraConfig(
    r=8,  # rank of the low-rank adapter matrices
    target_modules=["q_proj", "o_proj", "k_proj", "v_proj", "gate_proj", "up_proj", "down_proj"],
    task_type="CAUSAL_LM",
)

In this snippet, we target all the nn.Linear layers of the attention and MLP blocks as the layers to be adapted.
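To get a feel for how much the LoraConfig above actually trains, the sketch below counts LoRA parameters, r × (in_features + out_features) per adapted layer. The layer shapes and the 18-layer depth are assumptions meant to resemble Gemma-2B (hidden size 2048, MLP size 16384, 256-wide key/value projections); check them against model.config before relying on the numbers. matches_target is a hypothetical, simplified stand-in for PEFT's module-name matching.

```python
# ASSUMED Gemma-2B-like shapes (in_features, out_features); verify with model.config.
HIDDEN, INTERMEDIATE, KV_DIM, NUM_LAYERS = 2048, 16384, 256, 18
LINEAR_SHAPES = {
    "self_attn.q_proj": (HIDDEN, HIDDEN),
    "self_attn.k_proj": (HIDDEN, KV_DIM),
    "self_attn.v_proj": (HIDDEN, KV_DIM),
    "self_attn.o_proj": (HIDDEN, HIDDEN),
    "mlp.gate_proj": (HIDDEN, INTERMEDIATE),
    "mlp.up_proj": (HIDDEN, INTERMEDIATE),
    "mlp.down_proj": (INTERMEDIATE, HIDDEN),
}
TARGETS = ["q_proj", "o_proj", "k_proj", "v_proj", "gate_proj", "up_proj", "down_proj"]


def matches_target(module_name, targets):
    """Simplified name matching: the last dotted component must equal one of the targets."""
    return module_name.split(".")[-1] in targets


def lora_param_count(num_layers, r, targets):
    """Count LoRA parameters: A is (r x in_features) and B is (out_features x r) per adapted layer."""
    total = 0
    for layer in range(num_layers):
        for suffix, (d_in, d_out) in LINEAR_SHAPES.items():
            name = f"model.layers.{layer}.{suffix}"  # hypothetical module path
            if matches_target(name, targets):
                total += r * (d_in + d_out)
    return total


print(lora_param_count(NUM_LAYERS, r=8, targets=TARGETS))               # 9805824
print(lora_param_count(NUM_LAYERS, r=8, targets=["q_proj", "v_proj"]))  # 921600
```

Under those assumed shapes, targeting all seven projections trains about 9.8 million parameters versus about 0.9 million for q_proj and v_proj alone; most of the adapter budget goes to the wide MLP projections.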

In the following example, we additionally use QLoRA from Dettmers et al., which quantizes the base model to 4-bit precision for a more memory-efficient fine-tuning protocol. The model can be loaded with QLoRA by first installing the bitsandbytes library in your environment and then passing a BitsAndBytesConfig object to from_pretrained when loading the model.
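A rough back-of-the-envelope calculation shows why 4-bit loading matters on a free Colab GPU. The function below is a hypothetical helper that only counts weight storage; it deliberately ignores the per-block quantization constants bitsandbytes keeps, as well as activations, gradients, and optimizer state. The 2.5 billion parameter figure is an assumed round number for a "2B"-class model, not an official count.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate weight storage in GiB.

    Ignores quantization constants, activations, gradients and optimizer state.
    """
    return num_params * bits_per_param / 8 / 2**30


NUM_PARAMS = 2.5e9  # ASSUMED rough parameter count for a "2B"-class model

for label, bits in [("fp32", 32), ("bf16", 16), ("4-bit", 4)]:
    print(f"{label:>5}: {weight_memory_gib(NUM_PARAMS, bits):.2f} GiB")
```

With the assumed parameter count this prints about 9.31, 4.66, and 1.16 GiB, i.e. 4-bit weights take a quarter of the bf16 footprint.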

Before you begin

To access Gemma model artifacts, users are required to accept the consent form. Now let's get started with the implementation.

Learning to quote

Assuming you have submitted the consent form, you can access the model artifacts from the Hugging Face Hub.
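The loading code in this post reads the access token from the HF_TOKEN environment variable. If that variable is missing, the failure can be confusing, so a small guard may help. resolve_hf_token is a hypothetical helper written for this post, not part of any Hugging Face library; it assumes you have created an access token for the account that accepted the consent form.

```python
import os


def resolve_hf_token(env=None):
    """Hypothetical helper: return the Hub access token stored in HF_TOKEN, or fail with a hint."""
    env = os.environ if env is None else env
    token = env.get("HF_TOKEN")
    if not token:
        raise RuntimeError(
            "HF_TOKEN is not set: accept the Gemma consent form on the Hub, "
            "create an access token, and export it as HF_TOKEN."
        )
    return token
```

You could call token = resolve_hf_token() once and pass token=token to both from_pretrained calls.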

We start by downloading the model and the tokenizer. We also include a BitsAndBytesConfig for weight-only quantization.

import os
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig
model_id = "google/gemma-2b"
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,  # store the weights in 4 bits
    bnb_4bit_quant_type="nf4",  # NormalFloat4 quantization
    bnb_4bit_compute_dtype=torch.bfloat16,  # run the matmuls in bfloat16
)
tokenizer = AutoTokenizer.from_pretrained(model_id, token=os.environ["HF_TOKEN"])
model = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=bnb_config, device_map={"": 0}, token=os.environ["HF_TOKEN"])  # place the whole model on GPU 0

Now, we test the model on a well-known quote before starting the fine-tuning.

text = "Quote: Imagination is more"
device = "cuda:0"
inputs = tokenizer(text, return_tensors="pt").to(device)
outputs = model.generate(**inputs, max_new_tokens=20)  # generate at most 20 new tokens
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

The model produces a reasonable completion, with some extra tokens:

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world. -Albert Einstein I
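Two details of this output follow from how generation is bounded. The decoded text starts with the prompt, because for decoder-only models generate returns the prompt tokens followed by the new ones, and it stops abruptly once max_new_tokens=20 new tokens have been produced, which is why a stray "I" trails the quote. The toy loop below illustrates this with greedy decoding over a hypothetical 5-token vocabulary; the strategy model.generate actually uses depends on the model's generation config.

```python
def greedy_generate(prompt_ids, next_token_scores, max_new_tokens, eos_id=None):
    """Toy greedy decoding: append the highest-scoring token until the budget or EOS is hit."""
    ids = list(prompt_ids)  # the output keeps the prompt, like generate() for decoder-only models
    for _ in range(max_new_tokens):
        scores = next_token_scores(ids)  # one score per vocabulary entry
        token = max(range(len(scores)), key=scores.__getitem__)
        ids.append(token)
        if token == eos_id:
            break
    return ids


def toy_scores(ids):
    """Hypothetical 'model' over a 5-token vocabulary that always prefers (last token + 1) mod 5."""
    scores = [0.0] * 5
    scores[(ids[-1] + 1) % 5] = 1.0
    return scores


print(greedy_generate([0], toy_scores, max_new_tokens=3))             # [0, 1, 2, 3]
print(greedy_generate([0], toy_scores, max_new_tokens=10, eos_id=2))  # [0, 1, 2]
```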

But this is not exactly the format we want the answer in. Let's see if fine-tuning can teach the model to generate the answer in the following format:

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world.
Author: Albert Einstein
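To check this format mechanically rather than by eye, a small parser can help when comparing outputs before and after fine-tuning. parse_quote is a hypothetical helper, not part of any library: it accepts text with a "Quote:" line followed by an "Author:" line and returns None for anything else.

```python
import re

# Hypothetical pattern for the target layout: "Quote: ..." then a new line with "Author: ...".
TARGET_FORMAT = re.compile(r"^Quote: (?P<quote>.+?)\s*\nAuthor: (?P<author>.+?)\s*$", re.DOTALL)


def parse_quote(completion):
    """Return (quote, author) if the completion follows the target format, else None."""
    match = TARGET_FORMAT.match(completion.strip())
    if match is None:
        return None
    return match.group("quote"), match.group("author")
```

For example, parse_quote("Quote: Imagination is more important than knowledge.\nAuthor: Albert Einstein") returns the quote and author as a tuple, while the un-fine-tuned output ending in "-Albert Einstein" returns None.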

To begin with, let's select an English quotes dataset, Abirate/english_quotes.

from datasets import load_dataset
data = load_dataset("Abirate/english_quotes")
data = data.map(lambda samples: tokenizer(samples["quote"]), batched=True)  # tokenize the "quote" column in batches
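With batched=True, the mapped function receives a dictionary that maps each column name to a list of values (a batch of rows) and returns new columns that are added to the dataset. The pure-Python sketch below mimics that behavior to show why the lambda tokenizes samples["quote"] as a whole column. batched_map and toy_tokenize are hypothetical stand-ins for Dataset.map and the Gemma tokenizer (the real tokenizer also returns an attention_mask), and the rows are placeholder data.

```python
def batched_map(rows, fn, batch_size=1000):
    """Toy stand-in for Dataset.map(fn, batched=True): fn receives a dict of column lists
    and returns new columns, which are merged back into the corresponding rows."""
    out = []
    for start in range(0, len(rows), batch_size):
        chunk = rows[start:start + batch_size]
        batch = {key: [row[key] for row in chunk] for key in chunk[0]}  # column name -> list of values
        new_columns = fn(batch)
        for i, row in enumerate(chunk):
            merged = dict(row)
            merged.update({key: values[i] for key, values in new_columns.items()})
            out.append(merged)
    return out


def toy_tokenize(texts):
    """Character-level stand-in for a tokenizer: one id (the code point) per character."""
    return {"input_ids": [[ord(ch) for ch in text] for text in texts]}


rows = [
    {"quote": "Be yourself.", "author": "Author A"},
    {"quote": "So it goes.", "author": "Author B"},
]
mapped = batched_map(rows, lambda batch: toy_tokenize(batch["quote"]), batch_size=2)
print(mapped[0]["input_ids"][:2])  # [66, 101]
```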

Now let's fine-tune this model using the LoRA config defined earlier.

import transformers
from trl import SFTTrainer
def formatting_func(example):
    text = f"Quote: {example['quote'][0]}\nAuthor: {example['author'][0]}"  # formats only the first row it receives
    return [text]
trainer = SFTTrainer(
    model=model,
    train_dataset=data["train"],
    args=transformers.TrainingArguments(
        per_device_train_batch_size=1,
        gradient_accumulation_steps=4,
        warmup_steps=2,
        max_steps=10,
        learning_rate=2e-4,
        fp16=True,
        logging_steps=1,
        output_dir="output",
        optim="paged_adamw_8bit",  # paged 8-bit AdamW from bitsandbytes
    ),
    peft_config=lora_config,
    formatting_func=formatting_func,
)
trainer.train()
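Note that formatting_func reads only index [0] of each column and returns a one-element list, so if your TRL version passes it a batch of several rows, every row after the first is ignored. format_examples below is a hypothetical variant that formats each row of a batch and also accepts a single example; which input shape you receive depends on the TRL version, so treat it as a sketch. The same block shows the arithmetic behind the training arguments: each optimizer step accumulates gradients over per_device_train_batch_size × gradient_accumulation_steps examples.

```python
def format_examples(example):
    """Hypothetical helper: format one example (string fields) or a batch (list fields)."""
    quotes, authors = example["quote"], example["author"]
    if isinstance(quotes, str):  # a single example
        return f"Quote: {quotes}\nAuthor: {authors}"
    return [f"Quote: {q}\nAuthor: {a}" for q, a in zip(quotes, authors)]  # a batch


def effective_batch_size(per_device, grad_accum, num_devices=1):
    """Number of examples contributing to each optimizer step."""
    return per_device * grad_accum * num_devices


batch = {"quote": ["Imagination is more important than knowledge."], "author": ["Albert Einstein"]}
print(format_examples(batch))
step_batch = effective_batch_size(per_device=1, grad_accum=4)
print(step_batch, step_batch * 10)  # 4 examples per step, 40 over max_steps=10
```

With the settings above, the 10 training steps see only about 40 examples in total, which is enough for this short demonstration of format learning.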

Finally, we're ready to test the model again with a quote prompt like the one used earlier.

text = "Quote: Imagination is"
device = "cuda:0"
inputs = tokenizer(text, return_tensors="pt").to(device)
outputs = model.generate(**inputs, max_new_tokens=20)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

This time, we get the response in the format we wanted:

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world.
Author: Albert Einstein

Accelerate with FSDP via SPMD on TPU

As mentioned earlier, Hugging Face Transformers now supports PyTorch/XLA's latest FSDP implementation, which can significantly speed up fine-tuning. To enable it, you only need to add an FSDP configuration to the transformers.Trainer.
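Conceptually, full sharding (fsdp="full_shard") means each device keeps only a slice of every wrapped module's parameters, along with the matching gradients and optimizer state, and the full weights of a layer are gathered just before that layer is computed; in the configuration used here, each GemmaDecoderLayer is a wrapping unit. The toy below mimics only that bookkeeping on a plain Python list, with hypothetical shard and all_gather helpers. It is not how PyTorch/XLA implements it: roughly speaking, with SPMD the tensors are annotated with shardings and the XLA compiler inserts the communication.

```python
def shard(params, num_devices):
    """Split a flat parameter list into equal contiguous shards, padding the tail with zeros."""
    shard_len = -(-len(params) // num_devices)  # ceiling division
    padded = params + [0.0] * (shard_len * num_devices - len(params))
    return [padded[i * shard_len:(i + 1) * shard_len] for i in range(num_devices)]


def all_gather(shards, original_len):
    """Reassemble the full parameter list, as needed right before a wrapped layer runs."""
    flat = [p for s in shards for p in s]
    return flat[:original_len]  # drop the padding


layer_params = [float(i) for i in range(10)]  # a toy "decoder layer" with 10 parameters
shards = shard(layer_params, num_devices=4)   # each device stores only 3 values
print([len(s) for s in shards])               # [3, 3, 3, 3]
assert all_gather(shards, len(layer_params)) == layer_params
```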

from transformers import DataCollatorForLanguageModeling, Trainer, TrainingArguments
fsdp_config = {
    "fsdp_transformer_layer_cls_to_wrap": ["GemmaDecoderLayer"],  # shard at decoder-layer granularity
    "xla": True,
    "xla_fsdp_v2": True,  # enables FSDP via SPMD
    "xla_fsdp_grad_ckpt": True,  # gradient checkpointing on the wrapped layers
}
trainer = Trainer(
    model=model,
    train_dataset=data["train"],
    args=TrainingArguments(
        per_device_train_batch_size=64,  # under SPMD this acts as the global batch size
        num_train_epochs=100,
        max_steps=-1,
        output_dir="./output",
        optim="adafactor",
        logging_steps=1,
        dataloader_drop_last=True,  # required for SPMD
        fsdp="full_shard",
        fsdp_config=fsdp_config,
    ),
    data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
)
trainer.train()
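Two settings in this configuration interact with the dataset size. Under SPMD, the value passed as per_device_train_batch_size acts as the global batch size, which is split across the TPU devices, and dataloader_drop_last=True discards a final partial batch, so every step keeps the same batch shape. The helpers below are hypothetical and only do that arithmetic; the 8-device count and the 2,500-example dataset in the usage lines are made-up numbers.

```python
import math


def steps_per_epoch(num_examples, global_batch_size, drop_last=True):
    """Optimizer steps per epoch when each step consumes one global batch (no grad accumulation)."""
    if drop_last:
        return num_examples // global_batch_size  # the final partial batch is discarded
    return math.ceil(num_examples / global_batch_size)


def per_device_batch(global_batch_size, num_devices):
    """Examples each device processes per step when the global batch is split evenly."""
    if global_batch_size % num_devices:
        raise ValueError("global batch size must be divisible by the number of devices")
    return global_batch_size // num_devices


print(steps_per_epoch(2500, 64))   # 39 steps; the last 4 examples are dropped
print(per_device_batch(64, 8))     # 8 examples per device per step
```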

Next Steps

We walked through this simple example, adapted from the source notebook, to illustrate the LoRA fine-tuning method applied to Gemma models. The full Colab for GPU can be found here, and the full script for TPU can be found here. We are excited about the endless possibilities for research and learning that this recent addition to our open-source ecosystem opens up. For more examples of training, fine-tuning, and deploying Gemma models, we encourage you to also visit the Gemma documentation and the launch blog.