Research

Published December 1, 2022

Authors: Julien Perolat, Bart De Vylder, Daniel Hennes, Eugene Tarassov, Florian Strub, Karl Tuyls

DeepNash learns to play Stratego from scratch by combining game theory and model-free deep RL.

Game-playing artificial intelligence (AI) systems have advanced to a new frontier. Stratego, the classic board game that is more complex than chess and Go, and craftier than poker, has now been mastered. Published in Science, DeepNash is an AI agent that learned the game from scratch to a human expert level by playing against itself.

DeepNash uses a novel approach based on game theory and model-free deep reinforcement learning. Its play style converges to a Nash equilibrium, which means its play is very hard for an opponent to exploit. So hard, in fact, that DeepNash has reached a top-three ranking among human experts on Gravon, the world’s biggest online Stratego platform.

Board games have historically been a measure of progress in the field of AI, allowing us to study how humans and machines develop and execute strategies in a controlled environment. Unlike chess and Go, Stratego is a game of imperfect information: players cannot directly observe the identities of their opponent’s pieces.

This complexity has meant that other AI-based Stratego systems have struggled to get beyond the amateur level. It also means that a very successful AI technique called “game tree search”, previously used to master many games of perfect information, is not sufficient for Stratego. For this reason, DeepNash goes beyond game tree search altogether.

The value of mastering Stratego goes beyond gaming. In pursuit of our mission of solving intelligence to benefit humanity, we need to build advanced AI systems that can operate in complex, real-world situations with limited information about other agents and people. Our paper shows how DeepNash can be applied in situations of uncertainty and successfully balance outcomes to help solve complex problems.

Getting to know Stratego

Stratego is a turn-based, capture-the-flag game. It is a game of bluff and tactics, of information gathering and subtle maneuvering. And it is a zero-sum game, so any gain by one player represents a loss of the same magnitude for their opponent.

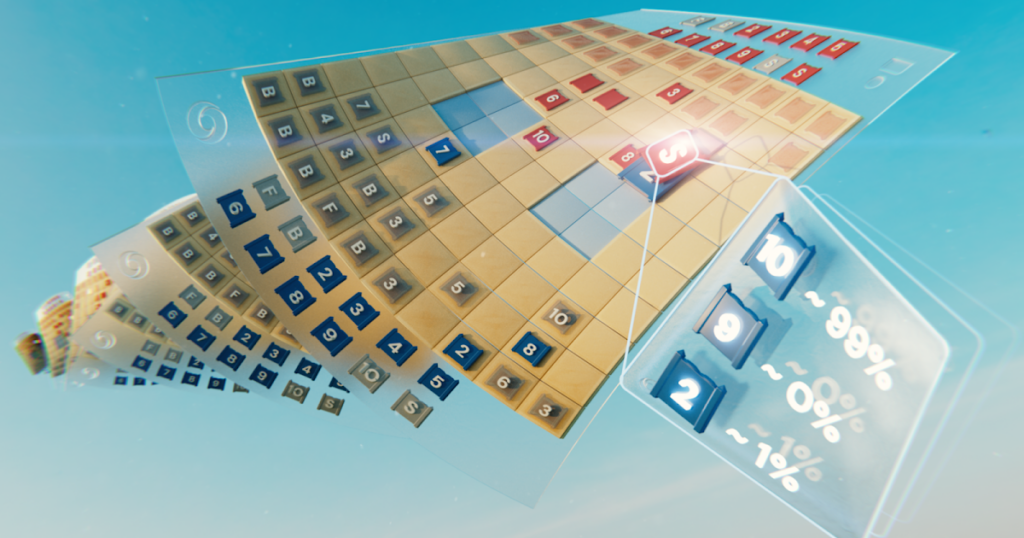

Stratego is challenging for AI, in part, because it is a game of imperfect information. Both players start by arranging their 40 playing pieces in whatever starting formation they like, hidden from one another as the game begins. Since the two players do not have access to the same knowledge, they need to balance all possible outcomes when making a decision, which provides a challenging benchmark for studying strategic interactions. The types of pieces and their rankings are shown below.

Left: The piece rankings. In battles, higher-ranking pieces win, except that the 10 (Marshal) loses when attacked by a Spy, and Bombs always win except when captured by a Miner.

Middle: A possible starting formation. Note how the Flag is tucked away safely at the back, flanked by protective Bombs. The two pale blue areas are “lakes” and are never entered.

Right: A game in play, showing Blue’s Spy capturing Red’s 10.
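The battle rules in the caption above can be written down as a small function. This is a minimal sketch, not code from DeepNash. The rank numbering (Spy = 1, Miner = 3, Marshal = 10), the `"B"` and `"F"` markers, the tie rule (equal ranks remove both pieces), and the names `resolve_battle` and `Outcome` are all assumptions made for illustration.

```python
from enum import Enum

# Assumed rank numbering (not spelled out in the text): Spy=1, Miner=3, Marshal=10.
SPY, MINER, MARSHAL = 1, 3, 10
BOMB, FLAG = "B", "F"  # hypothetical markers for the two immovable piece types


class Outcome(Enum):
    ATTACKER_WINS = "attacker wins"
    DEFENDER_WINS = "defender wins"
    BOTH_REMOVED = "both removed"


def resolve_battle(attacker, defender):
    """Return the outcome when `attacker` moves onto `defender`."""
    if attacker in (BOMB, FLAG):
        raise ValueError("Bombs and Flags cannot move, so they never attack")
    if defender == FLAG:
        return Outcome.ATTACKER_WINS  # capturing the Flag
    if defender == BOMB:
        # Bombs always win, except when captured by a Miner.
        return Outcome.ATTACKER_WINS if attacker == MINER else Outcome.DEFENDER_WINS
    if attacker == SPY and defender == MARSHAL:
        # The 10 loses only when the Spy is the attacker.
        return Outcome.ATTACKER_WINS
    if attacker == defender:
        return Outcome.BOTH_REMOVED  # assumption: standard tie rule
    # Otherwise the higher-ranking piece wins.
    return Outcome.ATTACKER_WINS if attacker > defender else Outcome.DEFENDER_WINS
```

For instance, `resolve_battle(SPY, MARSHAL)` gives `Outcome.ATTACKER_WINS`, while `resolve_battle(MARSHAL, SPY)` also gives `Outcome.ATTACKER_WINS`, because the Spy only beats the 10 when the Spy initiates the attack.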

Information is hard won in Stratego. The identity of an opponent’s piece is typically revealed only when it meets the other player’s piece on the battlefield. This is in stark contrast to games of perfect information such as chess or Go, in which the location and identity of every piece is known to both players.

The machine learning approaches that work so well on perfect information games, such as DeepMind’s AlphaZero, are not easily transferred to Stratego. The need to make decisions with imperfect information, and the potential to bluff, makes Stratego more akin to Texas hold’em poker and requires a human-like capacity once noted by the American writer Jack London: “Life is not always a matter of holding good cards, but sometimes, playing a poor hand well.”

However, the AI techniques that work so well in games like Texas hold’em do not transfer to Stratego because of the sheer length of the game: often hundreds of moves pass before a player wins. Reasoning in Stratego must be done over a large number of sequential actions with no obvious insight into how each action contributes to the final outcome.

Finally, the number of possible game states (expressed as “game tree complexity”) is off the chart compared with chess, Go, and poker, making the game incredibly difficult to solve. This is what excited us about Stratego, and why it has represented a decades-long challenge to the AI community.

The scale of the differences between chess, poker, Go, and Stratego.

Seeking an equilibrium

DeepNash employs a novel approach based on a combination of game theory and model-free deep reinforcement learning. “Model-free” means DeepNash is not attempting to explicitly model its opponent’s private game state during the game. In the early stages of the game in particular, when DeepNash knows little about its opponent’s pieces, such modeling would be ineffective.

And because the game tree complexity of Stratego is so vast, DeepNash cannot employ a stalwart approach of AI-based gaming: Monte Carlo tree search. Tree search has been a key ingredient of many landmark achievements in AI for less complex board games and for poker.

Instead, DeepNash is powered by a new game-theoretic algorithmic idea that we call Regularized Nash Dynamics (R-NaD). Working at an unparalleled scale, R-NaD steers DeepNash’s learning behavior toward what is known as a Nash equilibrium (dive into the technical details in our paper).
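To give a feel for how regularized dynamics can steer play toward a Nash equilibrium, here is a toy sketch on rock-paper-scissors. It is not DeepNash’s implementation, which trains deep networks at scale; it only illustrates one common reading of the idea: penalize each player for drifting from a reference policy, run replicator-style updates on that regularized game until they settle, then reset the reference to the result and repeat. The game, the function names `regularized_step` and `toy_rnad`, and the parameters `eta`, `dt`, `outer`, and `inner` are all assumptions chosen for illustration.

```python
import math

# Row player's payoff in rock-paper-scissors; the column player receives the negative.
A = [[0, -1, 1],
     [1, 0, -1],
     [-1, 1, 0]]


def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]


def regularized_step(x, y, x_ref, y_ref, eta, dt):
    """One discretized replicator-style update on the regularized game."""
    n = len(x)
    # Expected payoff of each pure action against the opponent's current mix.
    gain_x = [sum(A[a][b] * y[b] for b in range(n)) for a in range(n)]
    gain_y = [-sum(A[a][b] * x[a] for a in range(n)) for b in range(n)]
    # The eta * log(policy / reference) term penalizes drifting from the reference.
    new_x = [x[a] * math.exp(dt * (gain_x[a] - eta * math.log(x[a] / x_ref[a])))
             for a in range(n)]
    new_y = [y[b] * math.exp(dt * (gain_y[b] - eta * math.log(y[b] / y_ref[b])))
             for b in range(n)]
    return normalize(new_x), normalize(new_y)


def toy_rnad(x0, y0, eta=0.2, dt=0.05, outer=20, inner=1000):
    """Alternate between solving a regularized game and resetting the reference."""
    x, y = list(x0), list(y0)
    for _ in range(outer):
        x_ref, y_ref = list(x), list(y)  # reference = latest settled policies
        for _ in range(inner):
            x, y = regularized_step(x, y, x_ref, y_ref, eta, dt)
    return x, y


if __name__ == "__main__":
    x, y = toy_rnad([0.8, 0.1, 0.1], [0.2, 0.2, 0.6])
    print([round(p, 3) for p in x], [round(p, 3) for p in y])
```

Starting from lopsided policies, both players should end up close to the uniform mix, which is the Nash equilibrium of rock-paper-scissors. Without the regularization term, plain replicator-style updates tend to cycle around the equilibrium in this game instead of settling on it.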

Game-playing behavior that results in a Nash equilibrium is unexploitable over time. If a person or machine played perfectly unexploitable Stratego, the worst win rate they could achieve would be 50%, and only if facing a similarly perfect opponent.
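The worst-case guarantee can be illustrated on a much smaller zero-sum game. The sketch below uses rock-paper-scissors as a stand-in (an assumption for illustration; it is not part of the Stratego work), where an expected payoff of 0 plays the role of the 50% win rate. The helper name `worst_case_payoff` is hypothetical.

```python
# Payoff to the row player in rock-paper-scissors: +1 win, 0 draw, -1 loss.
PAYOFF = [
    [0, -1, 1],   # rock     vs rock, paper, scissors
    [1, 0, -1],   # paper    vs rock, paper, scissors
    [-1, 1, 0],   # scissors vs rock, paper, scissors
]


def worst_case_payoff(strategy):
    """Expected payoff of a mixed `strategy` against the opponent's best pure reply."""
    replies = range(len(PAYOFF[0]))
    return min(
        sum(p * PAYOFF[a][b] for a, p in enumerate(strategy))
        for b in replies
    )


uniform = [1 / 3, 1 / 3, 1 / 3]   # the Nash equilibrium of this game
rock_heavy = [0.5, 0.25, 0.25]    # a predictable player who favors rock

print(worst_case_payoff(uniform))     # 0, up to floating-point rounding
print(worst_case_payoff(rock_heavy))  # -0.25: an opponent who always plays paper profits
```

The equilibrium strategy cannot be pushed below a payoff of 0 no matter what the opponent does, whereas any predictable bias hands the opponent an edge.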

In matches against the best Stratego bots, including several winners of the Computer Stratego World Championship, DeepNash’s win rate topped 97% and was frequently 100%. Against the top expert human players on the Gravon games platform, DeepNash achieved a win rate of 84%, earning it a top-three ranking.

Expect the unexpected

To achieve these results, DeepNash demonstrated some remarkable behaviors both during its initial piece-deployment phase and in the gameplay phase. To become hard to exploit, DeepNash developed an unpredictable strategy. This means creating initial deployments varied enough to prevent its opponent from spotting patterns over a series of games. And during the game phase, DeepNash randomizes between seemingly equivalent actions to prevent exploitable tendencies.

Stratego players strive to be unpredictable, so there is value in keeping information hidden. DeepNash demonstrates how it values information in quite striking ways. In the example below, against a human player, DeepNash (blue) sacrificed, among other pieces, a 7 (Major) and an 8 (Colonel) early in the game and as a result was able to locate the opponent’s 10 (Marshal), 9 (General), an 8, and two 7s.

In this early game situation, DeepNash (blue) has already located many of its opponent’s most powerful pieces, while keeping its own key pieces secret.

These efforts left DeepNash at a significant material disadvantage: it lost a 7 and an 8, while its human opponent preserved all their pieces ranked 7 and above. Nevertheless, having solid intel on its opponent’s top brass, DeepNash evaluated its winning chances at 70%, and it won.

The art of the bluff

As in poker, a good Stratego player must sometimes represent strength, even when weak. DeepNash learned a variety of such bluffing tactics. In the example below, DeepNash uses a 2 (a weak Scout, unknown to its opponent) as if it were a high-ranking piece, pursuing its opponent’s known 8. The human opponent decides the pursuer is most likely a 10, and so tries to lure it into an ambush by their Spy. This tactic by DeepNash, risking only a minor piece, succeeds in flushing out and eliminating its opponent’s Spy, a critical piece.

The human player (red) is convinced that the unknown piece chasing their 8 must be DeepNash’s 10 (note: DeepNash had already lost its only 9).

For more, see these four full-length games played by DeepNash against (anonymized) human experts: Game 1, Game 2, Game 3, Game 4.

“The level of play of DeepNash surprised me. I had never heard of an artificial Stratego player that came close to the level needed to win a match against an experienced human player. But after playing against DeepNash myself, I was not surprised by the top-three ranking it later achieved on the Gravon platform. I expect it would do very well if allowed to participate in the human World Championships.”

Vincent de Boer, paper co-author and former Stratego World Champion

Future directions

While we developed DeepNash for the highly defined world of Stratego, our novel R-NaD method can be directly applied to other two-player zero-sum games of both perfect and imperfect information. R-NaD has the potential to generalize far beyond two-player game settings to address large-scale real-world problems.

We also hope R-NaD can help unlock new applications of AI in domains that feature a large number of human or AI participants with different goals, who might not have information about the intentions of others or about what is occurring in their environment. One example is the large-scale optimization of traffic management to reduce driver journey times and the associated vehicle emissions.

In creating an AI system that is robust in the face of uncertainty, we hope to bring the problem-solving capabilities of AI further into our inherently unpredictable world.

Learn more about DeepNash by reading our paper in Science.

For researchers interested in giving R-NaD a try or working with our newly proposed method, we have open-sourced our code.

Paper authors

Julien Perolat, Bart De Vylder, Daniel Hennes, Eugene Tarassov, Florian Strub, Vincent de Boer, Paul Muller, Jerome T Connor, Neil Burch, Thomas Anthony, Stephen McAleer, Romuald Elie, Sarah H Cen, Zhe Wang, Audrunas Gruslys, Aleksandra Malysheva, Mina Khan, Sherjil Ozair, Finbarr Timbers, Toby Pohlen, Tom Eccles, Mark Rowland, Marc Lanctot, Jean-Baptiste Lespiau, Bilal Piot, Shayegan Omidshafiei, Edward Lockhart, Laurent Sifre, Nathalie Beauguerlange, Remi Munos, David Silver, Satinder Singh, Demis Hassabis, Karl Tuyls.