This is a guest blog post by the Zama team. Zama is an open source encryption company that builds cutting-edge solutions for blockchain and AI.

Eighteen months ago, Zama launched Concrete ML, a privacy-preserving ML framework with bindings to traditional ML frameworks such as scikit-learn, ONNX, PyTorch, and TensorFlow. To ensure the privacy of users' data, Zama uses Fully Homomorphic Encryption (FHE), a cryptographic tool that allows computations to be performed directly on encrypted data, without ever knowing the private key.

From the start, we wanted to pre-compile some FHE-friendly networks and make them available somewhere on the internet, so that users could use them trivially. We are ready today! And not in a random place on the internet, but directly on Hugging Face.

More precisely, we use Hugging Face Endpoints and custom inference handlers to store our Concrete ML models and let users deploy them on HF machines in one click. By the end of this blog post, you will understand how to use pre-compiled models and how to prepare your own. This blog can also be read as another tutorial on custom inference handlers.

Deploying a precompiled model

Let's start by deploying an FHE-friendly model (prepared by Zama or by third parties; see the Preparing a Precompiled Model section below to learn how to prepare your own).

First, look for the model you want to deploy. We have pre-compiled a number of models on Zama's HF page (you can also find them by tag). Let's say you choose concrete-ml-encrypted-decisiontree. As explained in its description, this pre-compiled model detects whether a message is spam without ever seeing the message content in the clear.

As with any other model on the Hugging Face platform, select Deploy and then Inference Endpoint (dedicated):

Inference Endpoint (dedicated)

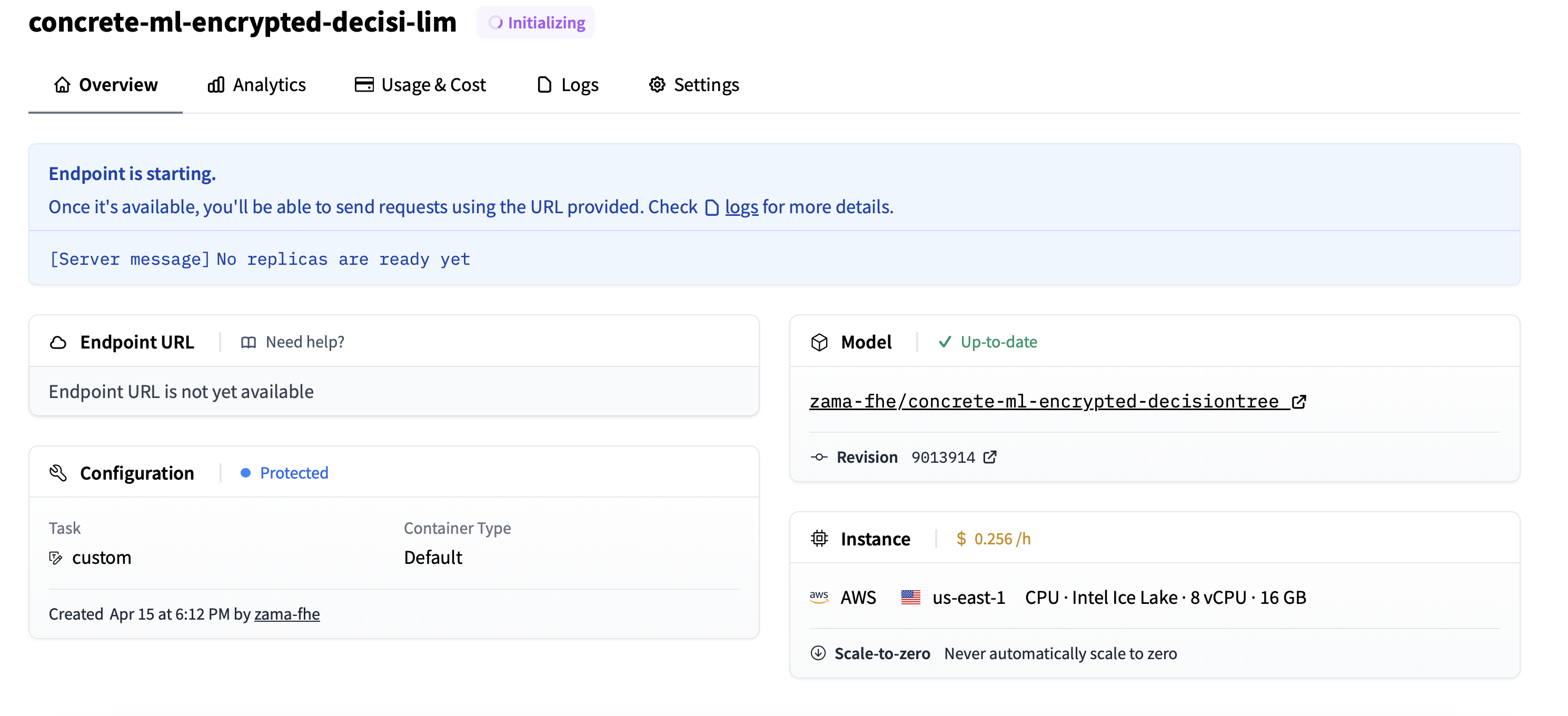

Next, choose the Endpoint name and region and, most importantly, the CPU (Concrete ML models do not use GPUs for now; we are working on it) along with the best machine available. In the example below, we chose eight vCPUs. Then click Create Endpoint and wait for the initialization to finish.

Create an endpoint

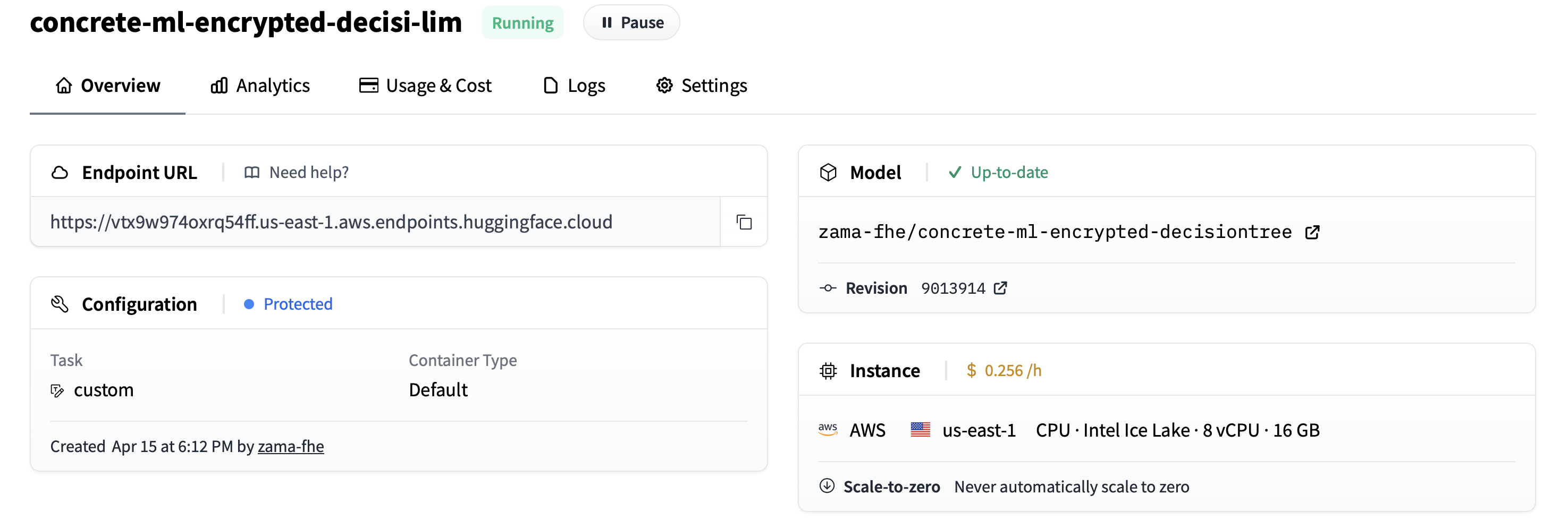

After a few seconds, the Endpoint is deployed and your privacy-preserving model is ready to operate.

The endpoint is created

Note: don't forget to delete the Endpoint (or at least pause it) when you are no longer using it; otherwise, it will cost more than you expect.

Use the endpoint

Client-side installation

The goal is not only to deploy your Endpoint but also to let your users play with it. To do so, they need to clone the repository on their computer, by selecting Clone Repository in the dropdown menu:

Clone repository

They are given a short command to run in their terminal:

```shell
git clone https://huggingface.co/zama-fhe/concrete-ml-encrypted-decisiontree
```

Once the command has finished, go to the concrete-ml-encrypted-decisiontree folder and open play_with_endpoint.py in your editor. Find the line with API_URL = … and replace the URL with that of the Endpoint you created in the previous section:

```python
API_URL = "https://vtx9w974oxrq54ff.us-east-1.aws.endpoints.huggingface.cloud"
```

Of course, fill it in with your own Endpoint's URL. Also, define an access token and store it in an environment variable:

```shell
export HF_TOKEN=hf_xx..xx  # replace with your own access token
```
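The script uses API_URL and the token whenever it calls the Endpoint. Calling a dedicated Endpoint boils down to an authenticated JSON POST request, so the script's query helper could look roughly like the following standard-library sketch. It assumes the token is read from HF_TOKEN and that the Endpoint answers with JSON; build_request is a hypothetical helper, and the actual script may be implemented differently.

```python
import json
import os
import urllib.request
from typing import Any, Dict

# Replace with your own Endpoint URL
API_URL = "https://vtx9w974oxrq54ff.us-east-1.aws.endpoints.huggingface.cloud"


def build_request(payload: Dict[str, Any], token: str) -> urllib.request.Request:
    """Build a JSON POST request authenticated with a Bearer token (hypothetical helper)."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(payload: Dict[str, Any]) -> Any:
    """Send the payload to the Endpoint and decode its JSON answer."""
    # Assumption: the access token is stored in the HF_TOKEN environment variable
    request = build_request(payload, os.environ["HF_TOKEN"])
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

Keeping request construction separate from sending makes it easy to inspect a payload without touching the network.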

Finally, the user's machine needs Concrete ML installed locally: create a virtual environment, activate it, and install the necessary dependencies:

```shell
python3.10 -m venv .venv
source .venv/bin/activate
pip install -U setuptools pip wheel
pip install -r requirements.txt
```

Note that we currently enforce the use of Python 3.10 (which is also the default Python version used on Hugging Face Endpoints). This is because our development files currently depend on the Python version. We are working on making them version-independent, which should be available in a future version.
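Because a version mismatch only surfaces later, when the development files are loaded, a client script could fail early with a guard like the hypothetical one below. The 3.10 value comes from the note above; adjust REQUIRED_PYTHON if the requirement changes.

```python
import sys
from typing import Sequence

# Python version assumed by the development files (3.10 at the time of writing)
REQUIRED_PYTHON = (3, 10)


def python_version_matches(version_info: Sequence[int] = sys.version_info[:2]) -> bool:
    """Return True if the major.minor part of version_info equals REQUIRED_PYTHON."""
    return tuple(version_info[:2]) == REQUIRED_PYTHON


if not python_version_matches():
    print(
        f"Warning: running Python {sys.version_info.major}.{sys.version_info.minor}, "
        f"but the pre-compiled files expect {REQUIRED_PYTHON[0]}.{REQUIRED_PYTHON[1]}",
        file=sys.stderr,
    )
```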

Perform inference

Now, your users can run inference on the Endpoint by launching the script:

```shell
python play_with_endpoint.py
```

This should generate logs similar to the following:

```text
Sending 0-th piece of the key (remaining size is 71984.14 kbytes)
Storing key in database with uid=3307376977
Sending 1-th piece of the key (remaining size is 0.02 kbytes)
Size of the payload: 0.23 kilobytes
for 0-th input, prediction=0 with expected 0 in 3.242 seconds
for 1-th input, prediction=0 with expected 0 in 3.612 seconds
for 2-th input, prediction=0 with expected 0 in 4.765 seconds
(...)
for 688-th input, prediction=0 with expected 1 in 3.176 seconds
for 689-th input, prediction=1 with expected 1 in 4.027 seconds
for 690-th input, prediction=0 with expected 0 in 4.329 seconds
Accuracy on 691 samples is 0.8958031837916064
Total time: 2873.860 seconds
Duration per inference: 4.123 seconds
```
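The first log lines show the evaluation key (over 70 MB here) being uploaded in pieces and stored under a uid before any inference is sent. A minimal sketch of how a client might split a serialized key into request payloads follows. It assumes base64 encoding, and the method names save_key and append_key and the key field are hypothetical; the actual script may use different names and chunk sizes.

```python
import base64
from typing import Any, Dict, Iterator


def key_payloads(serialized_key: bytes, uid: int, chunk_size: int) -> Iterator[Dict[str, Any]]:
    """Yield one JSON-serializable payload per piece of a serialized evaluation key.

    Hypothetical protocol: the first piece uses method "save_key", later pieces use
    "append_key", and the server concatenates them under the same uid.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, len(serialized_key), chunk_size):
        piece = serialized_key[start:start + chunk_size]
        yield {
            "inputs": "fake",  # placeholder field, as in the inference payload
            "method": "save_key" if start == 0 else "append_key",
            "uid": uid,
            # Raw bytes are not JSON-serializable, so encode them as base64 text
            "key": base64.b64encode(piece).decode("ascii"),
        }
```

Larger chunks mean fewer round trips but bigger individual requests; the right size depends on the request limits of the serving stack.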

Adapt to your application or needs

If you open play_with_endpoint.py, you will see that it iterates over samples of the test dataset and runs encrypted inferences directly on the Endpoint:

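```python
# Excerpt from play_with_endpoint.py: fhemodel_client, query, to_json, from_json,
# x_test, y_test, uid and the counters (duration, nb_good, is_first) are set up earlier
for i in range(nb_samples):
    # Quantize, encrypt and serialize one input on the client side
    encrypted_inputs = fhemodel_client.quantize_encrypt_serialize(x_test[i].reshape(1, -1))

    # Build the request payload
    payload = {
        "inputs": "fake",
        "encrypted_inputs": to_json(encrypted_inputs),
        "method": "inference",
        "uid": uid,
    }

    if is_first:
        print(f"Size of the payload: {sys.getsizeof(payload) / 1024:.2f} kilobytes")
        is_first = False

    # Run the encrypted inference on the Endpoint and time it
    duration -= time.time()
    duration_inference = -time.time()
    encrypted_prediction = query(payload)
    duration += time.time()
    duration_inference += time.time()

    # Decrypt and dequantize the result on the client side
    encrypted_prediction = from_json(encrypted_prediction)
    prediction_proba = fhemodel_client.deserialize_decrypt_dequantize(encrypted_prediction)[0]
    prediction = np.argmax(prediction_proba)

    if verbose:
        print(
            f"for {i}-th input, {prediction=} with expected {y_test[i]} in {duration_inference:.3f} seconds"
        )

    # Count correct predictions to compute the accuracy
    nb_good += y_test[i] == prediction
```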
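The loop relies on to_json and from_json to carry encrypted bytes inside a JSON payload. Their implementation is not shown here; a plausible minimal version, assuming base64 encoding (a hypothetical sketch, not necessarily what the script does), is:

```python
import base64


def to_json(data: bytes) -> str:
    """Encode raw bytes (e.g. a serialized ciphertext) as a JSON-safe ASCII string."""
    return base64.b64encode(data).decode("ascii")


def from_json(text: str) -> bytes:
    """Decode a string produced by to_json back into the original bytes."""
    return base64.b64decode(text)
```

Base64 grows data by about a third, which is negligible for a 0.23-kilobyte inference payload but noticeable for an evaluation key of tens of megabytes.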

Of course, this is just one example of how to use the Endpoint. Developers are encouraged to adapt it to their own use case or application.

Under the hood

All of this is made possible by the flexibility of custom handlers, and we are grateful to the Hugging Face developers for offering it. The mechanism is defined in handler.py. As explained in the Hugging Face documentation, you can define the __call__ method of EndpointHandler however you need. In our case, we defined a method parameter that selects the operation to run, for example storing the evaluation key or running an inference (the "method": "inference" field in the payload of play_with_endpoint.py). These methods are used to set the evaluation key once and then run all the inferences one by one, as seen in play_with_endpoint.py.
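To make the dispatch concrete, here is a minimal sketch of such a handler; it is not the repository's handler.py. It follows the custom-handler convention of an EndpointHandler class with `__init__(self, path="")` and `__call__(self, data)`, but the method names save_key and append_key, the base64 encoding, the key and encrypted_output fields, and the injected run_fn (standing in for the Concrete ML server so the sketch runs without it) are all hypothetical.

```python
import base64
from typing import Any, Callable, Dict, Optional


def _identity_run(encrypted_input: bytes, evaluation_key: bytes) -> bytes:
    # Placeholder computation so the sketch runs without Concrete ML installed
    return encrypted_input


class EndpointHandler:
    def __init__(self, path: str = "", run_fn: Optional[Callable[[bytes, bytes], bytes]] = None):
        # `path` points to the repository files on the Endpoint (unused in this sketch)
        self.run_fn = run_fn or _identity_run
        # Evaluation keys live in RAM, indexed by uid: they are lost on restart
        self.keys: Dict[str, bytes] = {}

    def __call__(self, data: Dict[str, Any]) -> Dict[str, Any]:
        method = data.get("method")
        uid = str(data["uid"])
        if method == "save_key":
            # First (or only) piece of the evaluation key
            self.keys[uid] = base64.b64decode(data["key"])
            return {"uid": uid}
        if method == "append_key":
            # Later pieces, for keys too large to send in a single request
            self.keys[uid] += base64.b64decode(data["key"])
            return {"uid": uid}
        if method == "inference":
            # Evaluate on encrypted data with the stored evaluation key
            encrypted_input = base64.b64decode(data["encrypted_inputs"])
            encrypted_output = self.run_fn(encrypted_input, self.keys[uid])
            return {"encrypted_output": base64.b64encode(encrypted_output).decode("ascii")}
        raise ValueError(f"unknown method: {method!r}")
```

The handler never sees a private key: it only stores evaluation keys and returns ciphertexts, which the client decrypts.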

Limits

However, keep in mind that the keys are stored in the Endpoint's RAM, which is not convenient for a production environment. At each restart, the keys are lost and need to be re-sent. Moreover, when several machines handle heavy traffic, this RAM is not shared between them. Finally, the available CPU machines only provide up to eight vCPUs for Endpoints, which can be a limitation for high-load applications.

Preparing a Precompiled Model

Now that you know how easy it is to deploy a pre-compiled model, you may want to prepare your own. To do so, you can fork one of the repositories we have prepared. All the model categories supported by Concrete ML (linear models, tree-based models, built-in MLPs, PyTorch models) can be used as templates for new pre-compiled models.

Next, edit creating_models.py and change the ML task to the one your pre-compiled model should tackle. For example, if you started from concrete-ml-encrypted-decisiontree, change the dataset and the model type.

As mentioned before, you must have Concrete ML installed to prepare a pre-compiled model. You may need to use the same Python version that Hugging Face uses by default (3.10 at the time of writing); otherwise, users of your model may need to run a container with your Python version during deployment.

You can now run python creating_models.py. This trains the model and creates the necessary development files (client.zip, server.zip, and versions.json) in the compiled_model directory. As explained in the documentation, these files contain your pre-compiled model. If you run into issues, you can get support on the FHE.org Discord.
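Before uploading, a quick sanity check can catch an incomplete compilation. The hypothetical helper below (not part of the template repositories) lists which of the three development files named in this section are missing from the output directory:

```python
from pathlib import Path
from typing import List, Union

# Development files expected in the compiled_model directory
REQUIRED_FILES = ("client.zip", "server.zip", "versions.json")


def missing_deployment_files(directory: Union[str, Path] = "compiled_model") -> List[str]:
    """Return the required development files that are absent from `directory`."""
    path = Path(directory)
    return [name for name in REQUIRED_FILES if not (path / name).is_file()]
```

For example, running `missing_deployment_files()` right after `python creating_models.py` should return an empty list.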

The final step is to modify play_with_endpoint.py so that it handles the same ML task as creating_models.py, setting the dataset accordingly.

You can now upload this directory, including the compiled_model directory and its files as well as your modifications to creating_models.py and play_with_endpoint.py, as a Hugging Face model. You will certainly need to run some tests and make slight adjustments for it to work. Don't forget to add the concrete-ml and FHE tags, so that your pre-compiled model is easy to find in searches.

Available pre-compiled models

For now, we have prepared a few pre-compiled models as examples, and we hope the community will extend this list soon. Pre-compiled models can be found by searching for the concrete-ml or FHE tags.

Keep in mind that Hugging Face offers a limited set of configuration options for CPU-backed Endpoints (up to 8 vCPUs with 16 GB of RAM today). Depending on your production requirements and model characteristics, execution times could be faster on more powerful cloud instances. Hopefully, more powerful machines will soon be available on Hugging Face Endpoints to improve these timings.


Conclusion and next steps

In this blog post, we have shown that custom Endpoints are easy to use yet powerful. What we do in Concrete ML is quite different from the usual workflow of ML practitioners, but we were still able to adapt custom Endpoints to cover most of our needs. Kudos to the Hugging Face engineers for building such a flexible solution.

We explained how:

- developers can create their own pre-compiled models and make them available on Hugging Face models;
- companies can deploy developers' pre-compiled models and make them available to their users via HF Endpoints;
- end users can use these Endpoints to run ML tasks on encrypted data.

To go further, it would be useful to have more powerful machines available on Hugging Face Endpoints to make inference faster. We could also imagine Concrete ML being integrated more deeply into the Hugging Face interface, with a Privacy-Preserving Inference Endpoint button, which would simplify developers' lives even further. Finally, for deployments across several server machines, it would help to have a way to share state between machines and keep that state non-volatile (this is where the FHE inference keys would be stored).