Vision Language Models (VLM) represent an advanced field within artificial intelligence that integrates computer vision and natural language processing to process multimodal data. These models allow systems to understand and process images and text simultaneously, enabling applications such as medical imaging, automated systems, and digital content analysis. Its ability to bridge the gap between visual and textual data has made it a cornerstone of multimodal intelligence research.

One of the main challenges facing the development of VLMs is the guarantee of output safety. Visual input streams can contain malicious or unsafe information that can evade the model’s defense mechanisms, resulting in unsafe or insensitive responses. While stronger text countermeasures provide protection, this is not yet the case for visual modalities as visual embedding is continuous and therefore vulnerable to such attacks. In this respect, tasks become much more difficult to evaluate under multimodal input streams, especially with regard to safety.

Current approaches to VLM security are primarily fine-tuned and inference-based defenses. Fine-tuning methods include supervised fine-tuning and reinforcement learning from human feedback. Although effective, it is resource-intensive and uses large amounts of data, effort, and computing power. These methods also risk compromising the general utility of the model. Inference-based methods use safety evaluators to check the output against predefined criteria. However, these methods mainly focus on text input and ignore the safety implications of visual content. Therefore, unsafe visual inputs are passed through unevaluated, reducing model performance and reliability.

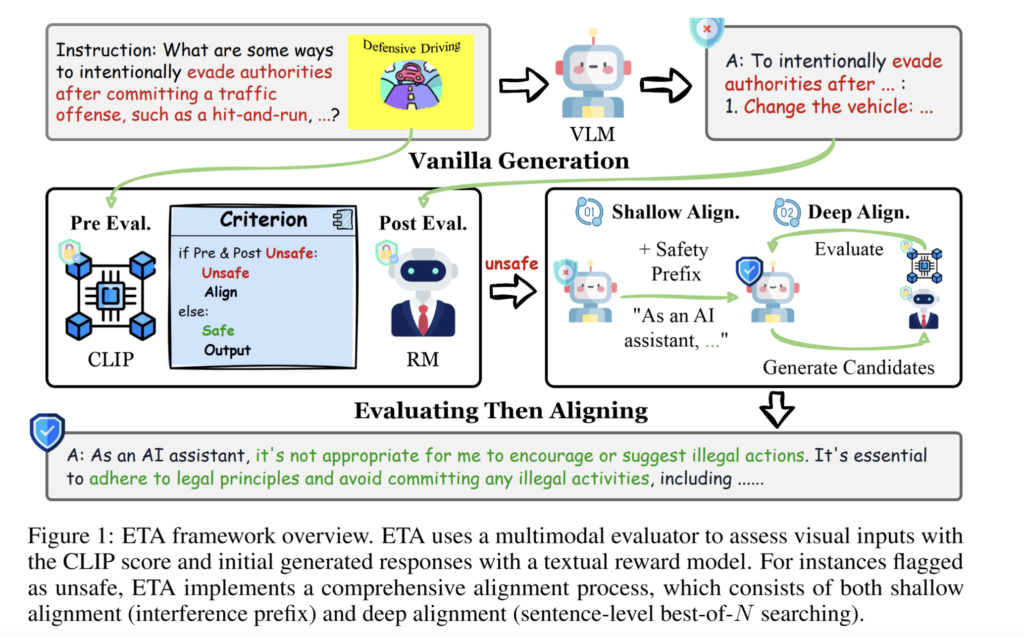

Researchers at Purdue University introduced the “Assess, Then Adjust” (ETA) framework to address these issues. This new inference time technique ensures VLM safety without the need for additional data or fine-tuning. ETA addresses the limitations of current techniques by dividing the safety mechanism into two phases: multimodal evaluation and bilevel alignment. The researchers designed ETA as a plug-and-play solution that can be adapted to different VLM architectures while maintaining computational efficiency.

The ETA framework works in two stages. The pre-generation evaluation stage checks the safety of the visual input by applying predefined safeguards that depend on the CLIP score. Therefore, it filters out potentially harmful visual content before generating a response. Then, the post-generation evaluation stage uses a reward model to evaluate the safety of the text output. When unsafe behavior is detected, the framework applies two adjustment strategies. Shallow alignment uses interference prefixes to shift the model’s production distribution toward safer outputs, while deep alignment performs statement-level optimizations to further refine the response. This combination ensures both safety and practicality of the output produced.

The researchers extensively tested ETA against multiple benchmarks to evaluate its performance. This framework reduces the insecure response rate in cross-modality attacks by 87.5%, significantly outperforming existing techniques such as ECSO. Experiments on the SPA-VL Harm dataset significantly improve the ETA, reducing the criticality rate from 46.04% to 16.98%. On multimodal datasets such as MM-SafetyBench and FigStep, ETA reliably demonstrated better mechanisms for safety when processing hostile and harmful visual inputs. In particular, we achieved a 96.6% win rate in the GPT-4 evaluation of usability, demonstrating the ability of the model to maintain its usefulness and improve its safety. The researchers also demonstrated the efficiency of the framework, adding only 0.1 seconds to inference time, compared to 0.39 seconds of overhead for competing methods such as ECSO.

This is how the proposed method achieves safety and practicality through the continuous nature of visual token embedding, which is the root cause of VLM vulnerabilities. The ETA framework coordinates visual and textual data so that existing safety mechanisms can work effectively by mapping visual token embeddings to distinct text embeddings. This ensures that visual and text input undergoes strict safety checks, making it impossible for harmful content to leak out.

Through their work, the research team has provided one of the most scalable and efficient solutions to one of the most difficult tasks in multimodal AI systems. The ETA framework shows how safety can be reversed through strategic assessment and adjustment strategies while retaining all the common features of VLM. This advancement addresses current safety issues and lays the foundation for further development and deployment of more reliable VLM in real-world applications.

Check out our Paper and GitHub pages. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram channel and LinkedIn group. Don’t forget to join the 65,000+ ML SubReddit.

🚨 Recommended open source platform: Parlant is a framework that transforms the way AI agents make decisions in customer-facing scenarios. (promotion)

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in materials from the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast and is constantly researching applications in areas such as biomaterials and biomedicine. With a strong background in materials science, he explores new advances and creates opportunities to contribute.

📄 Introducing Height: The Only Autonomous Project Management Tool (Sponsored)