In recent years, the rapid scaling of large language models (LLMs) has led to extraordinary improvements in natural language understanding and reasoning. However, this progress comes with a significant caveat: inference is computationally bottlenecked because tokens are generated one at a time. As LLMs grow in size and complexity, the latency and energy demands of sequential token generation become substantial. These challenges are especially acute in real-world deployments, where cost, speed, and scalability are critical. Traditional decoding approaches such as greedy decoding and beam search often require repeated evaluations of large models, leading to high computational overhead. Moreover, even with parallel decoding techniques, maintaining both the efficiency and the quality of the generated output can be elusive. These challenges have spurred the search for techniques that reduce inference costs without sacrificing accuracy. Researchers have therefore been exploring hybrid approaches that combine lightweight models with more powerful counterparts, aiming for an optimal balance between speed and performance. This balance is essential for real-time applications, interactive systems, and large-scale deployment in cloud environments.

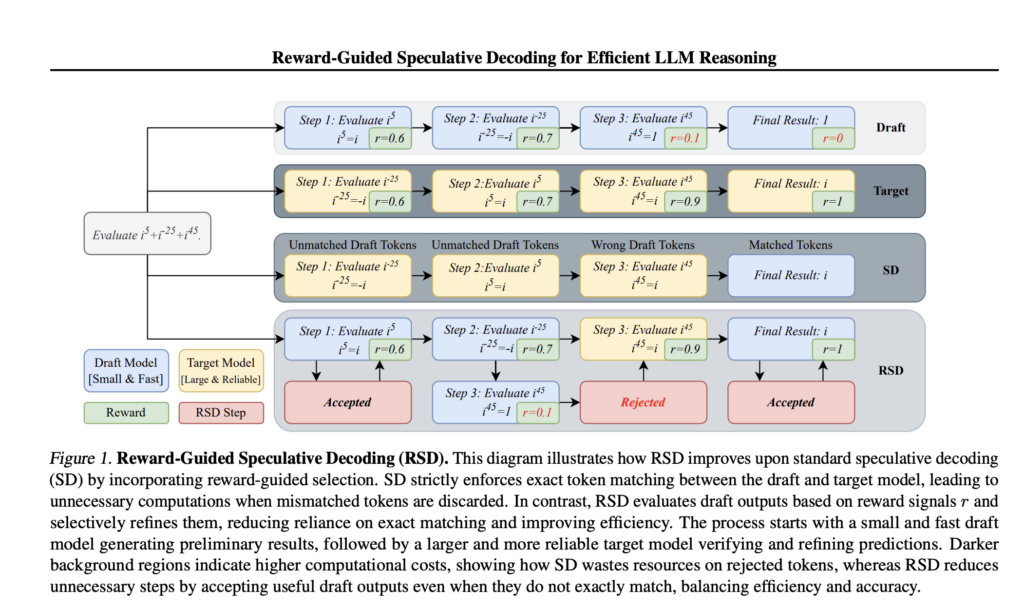

Salesforce AI Research introduces Reward-Guided Speculative Decoding (RSD), a new framework aimed at improving the efficiency of inference in large language models (LLMs). At its core, RSD uses a dual-model strategy: a fast, lightweight “draft” model works in tandem with a more powerful “target” model. The draft model quickly generates preliminary candidate outputs, while a Process Reward Model (PRM) evaluates the quality of these outputs in real time. Unlike traditional speculative decoding, which requires strict unbiasedness so that accepted draft tokens follow the target model's distribution exactly, RSD introduces a controlled bias. This bias is deliberately designed to favor high-reward outputs, which significantly reduces unnecessary computation. The approach rests on a mathematically derived threshold strategy that determines when the target model should intervene. By dynamically mixing the outputs of both models according to a reward function, RSD not only accelerates inference but also improves the overall quality of the generated responses. This methodology, detailed in the accompanying paper, represents a major step toward addressing the inherent inefficiency of sequential token generation in LLMs.
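As a rough sketch of the reward-based mixing described above (the notation is ours, chosen for illustration): let $P_m$ be the draft model, $P_M$ the target model, $r(y \mid z)$ the PRM reward for a candidate step $y$ given the prefix $z$, and $\omega$ a weighting function that maps rewards to $[0, 1]$, with $\delta$ a hypothetical threshold. A mixture of the two models that favors high-reward draft outputs can then be written as:

```latex
P_{\mathrm{RSD}}(y \mid z) = \omega\big(r(y \mid z)\big)\, P_m(y \mid z) + \nu(z)\, P_M(y \mid z),
\qquad
\nu(z) = 1 - \mathbb{E}_{y' \sim P_m(\cdot \mid z)}\Big[\omega\big(r(y' \mid z)\big)\Big],
\qquad
\omega(r) = \mathbf{1}[r \ge \delta].
```

Summing over $y$ confirms this is a valid distribution: the first term contributes $\mathbb{E}[\omega]$ and the second contributes $1 - \mathbb{E}[\omega]$. Operationally, it corresponds to sampling $y$ from the draft model, keeping it with probability $\omega(r(y \mid z))$, and otherwise sampling a replacement from the target model; with the binary step choice of $\omega$, "keep" simply means "reward at least $\delta$".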

Technical details and benefits of RSD

Technically, RSD integrates the two models in a sequential yet collaborative way. First, the draft model generates candidate tokens or reasoning steps at low computational cost. Each candidate is then evaluated with a reward function that acts as a quality gate. If a candidate's reward exceeds a given threshold, the output is accepted; otherwise, the system invokes the more computationally intensive target model to generate a refined output. This process is governed by a weighting function (such as a binary step function) that adjusts the degree of reliance on the draft and target models. The dynamic quality control provided by the PRM ensures that only the most promising outputs bypass the target model, thereby saving computation. One of the standout benefits of this approach is “biased acceleration”: the controlled bias is not a drawback but a strategic choice to prioritize high-reward outcomes. This yields two key benefits. First, the overall inference process can be up to 4.4 times faster than running the target model alone. Second, it often delivers an average accuracy improvement of +3.5 over conventional parallel decoding baselines. In essence, RSD harmonizes efficiency with accuracy, substantially reducing the number of floating-point operations (FLOPs) while delivering outputs that match or exceed the target model's performance. The theoretical foundations and algorithmic details, such as the mixture distribution P_RSD and its adaptive acceptance criteria, provide a robust framework for practical deployment across a variety of reasoning tasks.
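The accept-or-fall-back loop described above can be sketched in Python. This is a minimal illustration, not the authors' implementation: `draft`, `target`, and `prm` are hypothetical callables standing in for real models, the toy models are invented for demonstration, and the threshold is arbitrary. The weighting function is passed in as a parameter, so the binary step function is just one possible choice.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical interfaces (not from the paper's code): a "step" is a chunk of
# text such as one reasoning step.
StepModel = Callable[[str], str]            # prefix -> next step
RewardModel = Callable[[str, str], float]   # (prefix, step) -> reward in [0, 1]
WeightFn = Callable[[float], float]         # reward -> acceptance weight in [0, 1]


def binary_step_weight(reward: float, threshold: float) -> float:
    """Binary step weighting: fully trust the draft step iff reward >= threshold."""
    return 1.0 if reward >= threshold else 0.0


def rsd_decode(prompt: str, draft: StepModel, target: StepModel,
               prm: RewardModel, weight: WeightFn,
               max_steps: int = 8, stop: str = "<eos>") -> Tuple[List[str], int]:
    """Reward-gated decoding loop; returns (steps, number of target calls)."""
    prefix, steps, target_calls = prompt, [], 0
    for _ in range(max_steps):
        candidate = draft(prefix)            # cheap draft proposal
        reward = prm(prefix, candidate)      # PRM scores the proposed step
        # Accept with probability weight(reward): a binary step weight makes
        # this deterministic, while a soft weight in [0, 1] makes it stochastic.
        if random.random() < weight(reward):
            step = candidate
        else:
            step = target(prefix)            # expensive fallback to the target
            target_calls += 1
        steps.append(step)
        prefix += step
        if step.endswith(stop):
            break
    return steps, target_calls


# Toy stand-ins for real models, for illustration only.
def toy_draft(prefix: str) -> str:
    return "d"


def toy_target(prefix: str) -> str:
    return "T"


def toy_prm(prefix: str, step: str) -> float:
    # Pretend the draft is good on even-length prefixes and poor otherwise.
    return 0.9 if len(prefix) % 2 == 0 else 0.3
```

With `weight=lambda r: binary_step_weight(r, 0.7)`, an empty prompt, and `max_steps=4`, the toy run alternates between accepted draft steps and target fallbacks, producing `['d', 'T', 'd', 'T']` with two target calls. Raising the threshold routes more steps to the target model; lowering it trades quality control for speed.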

Insights

The empirical validation of RSD is compelling. Experiments detailed in the paper show that RSD consistently delivers strong performance on challenging benchmarks such as GSM8K, MATH500, OlympiadBench, and GPQA. For example, on MATH500 (a dataset designed to test mathematical reasoning), RSD configured with a 72B target model and a 7B PRM achieves an accuracy of 88.0, compared with 85.6 for the target model running alone. This configuration not only reduces the computational load, requiring nearly 4.4 times fewer FLOPs, but also improves reasoning accuracy. The results highlight RSD's potential to outperform traditional methods such as speculative decoding (SD), as well as advanced search-based techniques such as beam search and Best-of-N strategies.
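To see where compute savings of this size can come from, here is a back-of-the-envelope cost model in Python. It is our own simplification, not the paper's FLOPs accounting: it assumes the draft model and the PRM run on every step, the target model runs only on rejected steps, and all costs are expressed relative to one target-model step. The draft and PRM costs used below are hypothetical values chosen for illustration; only the accuracy figures and the 4.4× factor come from the text.

```python
def rsd_relative_cost(accept_rate: float, draft_cost: float, prm_cost: float) -> float:
    """Expected per-step cost of RSD, in units of one target-model step.

    Simplifying assumption: the draft model and the PRM run on every step,
    and the target model runs once more only when the draft step is rejected.
    """
    if not 0.0 <= accept_rate <= 1.0:
        raise ValueError("accept_rate must lie in [0, 1]")
    return draft_cost + prm_cost + (1.0 - accept_rate)


def speedup(accept_rate: float, draft_cost: float, prm_cost: float) -> float:
    """Compute-reduction factor versus running only the target model."""
    cost = rsd_relative_cost(accept_rate, draft_cost, prm_cost)
    if cost <= 0.0:
        raise ValueError("total cost must be positive")
    return 1.0 / cost


def required_accept_rate(target_speedup: float, draft_cost: float, prm_cost: float) -> float:
    """Acceptance rate needed to reach target_speedup under this cost model.

    A result outside [0, 1] means the speedup is unreachable with these costs.
    """
    return 1.0 - (1.0 / target_speedup - draft_cost - prm_cost)


# Figures quoted in the text for the 72B-target / 7B-PRM configuration.
RSD_ACCURACY, TARGET_ONLY_ACCURACY = 88.0, 85.6
accuracy_gain = round(RSD_ACCURACY - TARGET_ONLY_ACCURACY, 1)  # 2.4 points
flops_fraction = 1.0 / 4.4  # about 0.227 of the target-only FLOPs

# Hypothetical per-step costs, relative to one target-model step.
DRAFT_COST, PRM_COST = 0.02, 0.10
needed = required_accept_rate(4.4, DRAFT_COST, PRM_COST)  # about 0.893
```

Under these made-up costs, the draft would need to be accepted on roughly 89% of steps for a 4.4× reduction; heavier draft or PRM costs push that requirement higher, which is why a cheap draft model and a selective reward gate both matter.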

Conclusion: A new paradigm for efficient LLM inference

In conclusion, Reward-Guided Speculative Decoding (RSD) marks an important milestone in the quest for more efficient LLM inference. By intelligently combining a lightweight draft model with a powerful target model and introducing a reward-based acceptance criterion, RSD effectively addresses the twin challenges of computational cost and output quality. Its biased-acceleration approach lets the system selectively bypass expensive computation for high-reward outputs, streamlining the inference process. A dynamic quality-control mechanism anchored in the process reward model ensures that computational resources are allocated judiciously and that the target model is engaged only when necessary. With empirical results showing up to 4.4× faster inference and an average accuracy improvement of +3.5 over traditional methods, RSD not only paves the way for more scalable LLM deployments but also sets a new standard for the design of hybrid decoding frameworks.

Check out the paper and the GitHub page. All credit for this research goes to the researchers of this project.

Recommended open-source AI platform: “Intelagent is an open-source multi-agent framework for evaluating complex conversational AI systems” (promoted)

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of MarkTechPost, an artificial intelligence media platform distinguished by its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among readers.
