BigCode is releasing StarCoder2, the next generation of transparently trained open code LLMs. All StarCoder2 variants were trained on The Stack v2, a new large and high-quality code dataset. We are releasing all models and datasets, along with the processing and training code. For more information, see the paper.

What is StarCoder2?

StarCoder2 is a family of open LLMs for code that comes in three sizes: 3B, 7B, and 15B parameters. The flagship StarCoder2-15B model was trained on more than 4 trillion tokens covering over 600 programming languages from The Stack v2. All models use Grouped Query Attention and a context window of 16,384 tokens with sliding window attention of 4,096 tokens, and were trained with the Fill-in-the-Middle objective.
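To make the attention setting concrete, here is a minimal sketch of a causal sliding-window mask in plain Python. It is an illustration only, not the StarCoder2 implementation: the function names are hypothetical, and it assumes each token can see itself plus at most `window - 1` preceding tokens (real implementations may draw the boundary one position differently).

```python
def visible_key_range(query_pos, window):
    """Return (first, last) key positions visible to `query_pos`, inclusive.

    Assumption: causal attention in which each token sees itself and at most
    `window - 1` preceding tokens. Real implementations may differ by one.
    """
    first = max(0, query_pos - window + 1)
    return first, query_pos


def sliding_window_causal_mask(seq_len, window):
    """Build a dense boolean mask; mask[i][j] is True if i may attend to j."""
    mask = []
    for i in range(seq_len):
        first, last = visible_key_range(i, window)
        mask.append([first <= j <= last for j in range(seq_len)])
    return mask


# Toy example that is small enough to print row by row.
toy = sliding_window_causal_mask(seq_len=6, window=3)
for row in toy:
    print("".join("x" if ok else "." for ok in row))

# Figures from the post: 16,384-token context, 4,096-token window.
CONTEXT, WINDOW = 16_384, 4_096
first, last = visible_key_range(CONTEXT - 1, WINDOW)
print(first, last, last - first + 1)
```

Under these assumptions, the final position of a full 16,384-token context attends directly only to the most recent 4,096 positions. Earlier tokens can still influence it indirectly, because information propagates across the window from layer to layer.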

StarCoder2 offers three model sizes: a 3 billion parameter model trained by ServiceNow, a 7 billion parameter model trained by Hugging Face, and a 15 billion parameter model trained by NVIDIA using NVIDIA NeMo on NVIDIA accelerated infrastructure.

StarCoder2-3B was trained on 17 programming languages from The Stack v2, using more than 3 trillion tokens. StarCoder2-7B was trained on 17 programming languages from The Stack v2, using more than 3.5 trillion tokens. StarCoder2-15B was trained on over 600 programming languages from The Stack v2, using more than 4 trillion tokens.

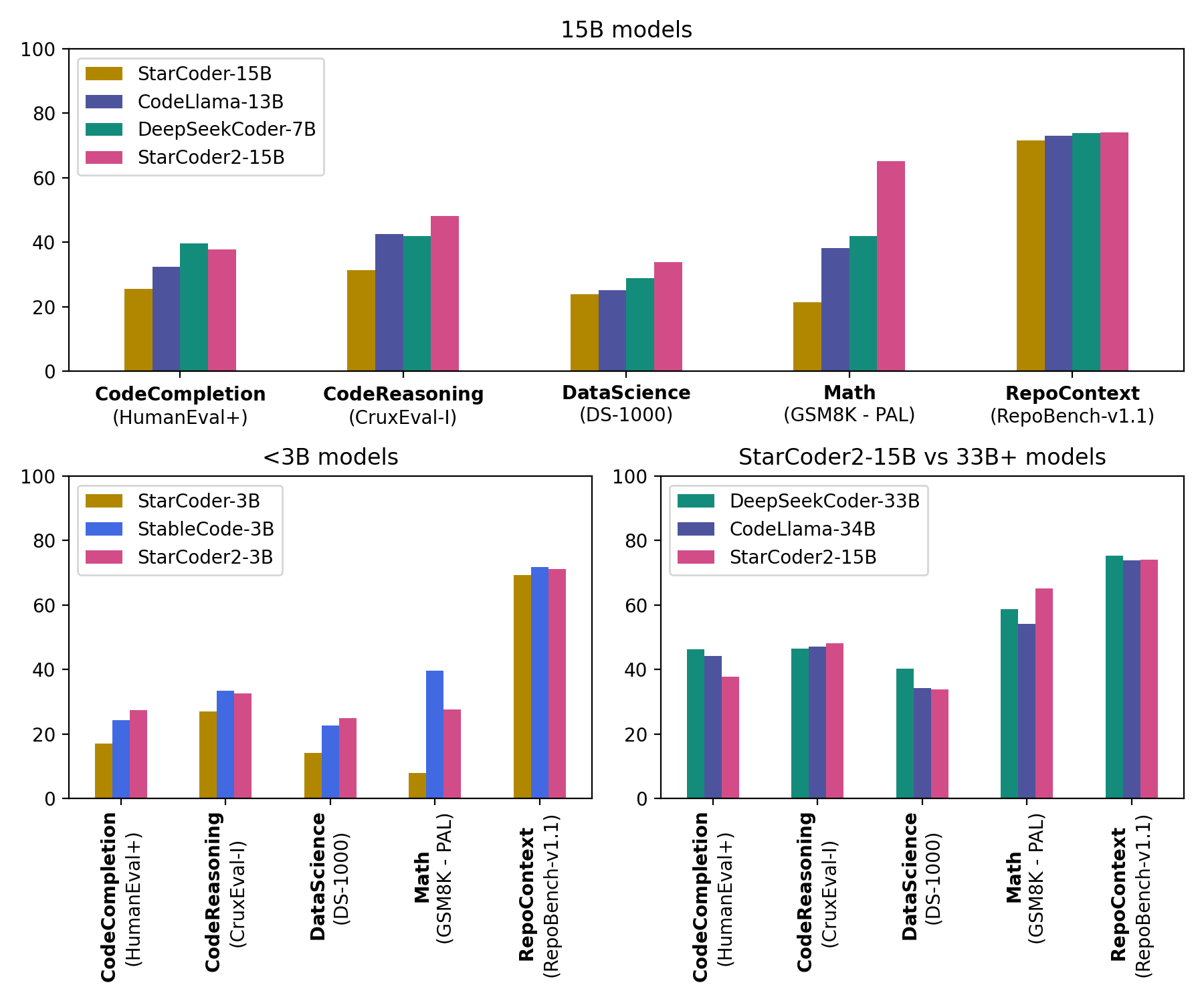

StarCoder2-15B is the best in its size class and matches 33B+ models on many evaluations. StarCoder2-3B matches the performance of StarCoder1-15B.

What is The Stack v2?

The Stack v2 is the largest open code dataset suitable for LLM pretraining. It is larger than The Stack v1, uses improved language and license detection procedures, and applies better filtering heuristics. In addition, the training dataset is grouped by repository, which allows models to be trained with repository context.
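As a rough illustration of what grouping by repository means for training data, the sketch below concatenates the files of each repository into a single document. It does not reflect the actual processing pipeline: the record keys (`repo`, `path`, `content`), the `# file:` header line, and the ordering by path are all hypothetical choices made for this example.

```python
from collections import defaultdict


def group_files_by_repo(files, file_sep="\n"):
    """Concatenate file records into one training document per repository.

    `files` is an iterable of dicts with hypothetical keys "repo", "path"
    and "content". Files are ordered by path so the output is deterministic.
    """
    by_repo = defaultdict(list)
    for record in files:
        by_repo[record["repo"]].append(record)

    documents = {}
    for repo, records in by_repo.items():
        records.sort(key=lambda r: r["path"])
        # Prefix each file with its path so the model sees the file boundaries.
        parts = [f"# file: {r['path']}\n{r['content']}" for r in records]
        documents[repo] = file_sep.join(parts)
    return documents


files = [
    {"repo": "org/app", "path": "utils.py", "content": "def helper(): ..."},
    {"repo": "org/lib", "path": "lib.py", "content": "X = 1"},
    {"repo": "org/app", "path": "main.py", "content": "from utils import helper"},
]
docs = group_files_by_repo(files)
print(docs["org/app"])
```

With files from the same repository placed in one document, a model trained on it can see, for example, both the definition of `helper` and the module that imports it within a single context window.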

This dataset is derived from the Software Heritage archive, the largest public archive of software source code and accompanying development history. Software Heritage, launched by Inria in partnership with UNESCO, is an open, non-profit initiative to collect, preserve, and share the source code of all publicly available software. We are grateful to Software Heritage for providing access to this invaluable resource. For more information, please visit the Software Heritage website.

The Stack v2 is accessible through the Hugging Face Hub.

About BigCode

BigCode is an open scientific collaboration, jointly led by Hugging Face and ServiceNow, that works on the responsible development of large language models for code.

Links

Models

Data and Governance

Others

You can find all the resources and links at huggingface.co/bigcode!