When I joined Hugging Face nearly three years ago, the Transformers documentation was very different from its current form. It focused on text models and how to train them or use them for inference on natural language tasks (text classification, summarization, language modeling, etc.).

The main version of the Transformers documentation today, compared to version 4.10.0 from nearly three years ago.

As transformer models increasingly became the default way to approach AI, the documentation expanded significantly to include new models and new usage patterns. But new content was added incrementally, without really considering how the audience and the Transformers library have evolved.

I think that's why the documentation experience (DocX) feels pulled in different directions, difficult to navigate, and outdated. Basically, it's a mess.

This is why a redesign of the Transformers documentation is necessary to make sense of this mess. The goals are to:

- Write for developers interested in building products with AI.
- Allow for an organic documentation structure and growth that scales naturally, instead of rigidly adhering to a predefined structure.
- Create a more unified documentation experience by integrating content rather than amending it onto the existing documentation.

New Audience

IMO, companies that understand the new paradigm of not only integrating AI but building all their technology with it, and that develop this muscle internally, can become 100x better than others, differentiating themselves and creating long-term value

– clem (@ClementDelangue) March 3, 2023

The Transformers documentation was originally written for model tinkerers: machine learning engineers and researchers.

Now that AI is more mainstream and mature, and not just a trend, developers are increasingly interested in learning how to build AI into their products. This means developers interact with the documentation differently than machine learning engineers and researchers do.

The important differences between the two are:

- Developers typically start with code examples and search for a solution to the problem they're trying to solve.
- Developers who aren't familiar with AI can be overwhelmed by Transformers. Code examples lose much of their value, or are even useless, if you don't understand the context in which they're used.

With the redesign, the Transformers documentation becomes more code-first and solution-oriented. Code and explanations of beginner machine learning concepts are tightly coupled to provide a more complete, beginner-friendly onboarding experience.

Once developers have a basic understanding, they can progressively level up their Transformers knowledge.

Towards a more organic structure

One of my first projects at Hugging Face was to align the Transformers documentation with Diátaxis, a documentation approach based on user needs (learning, solving, understanding, reference).

New name, new content, new look, new address. https://t.co/plmtsmqdnx

It's probably the best documentation authoring system in the world! pic.twitter.com/ltcnizmrwj

– April 8, 2021

But somewhere along the way, I started using Diátaxis as a plan instead of a guide. I tried to force content to fit neatly into one of the four prescribed categories.

This rigidity prevented naturally occurring content structures from emerging and kept the documentation from adapting and scaling. Documentation about a single topic soon spanned several sections, because that was what the structure dictated, not because it made sense.

It's okay if the structure is complex, but it's not okay if it's complex and hard to find your way around.

The redesign replaces rigidity with flexibility so the documentation can grow and evolve.

Integration vs amendment

Tree rings provide a climatological record of the past (droughts, floods, wildfires, etc.). In a way, the Transformers documentation also has its own tree rings, or eras, that capture its evolution:

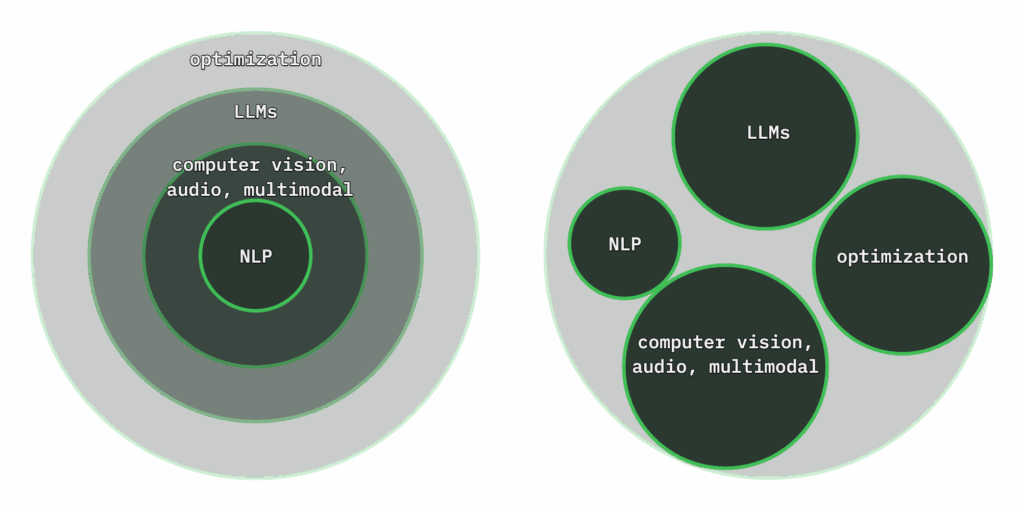

- Not just text era: Transformer models are used across other modalities such as computer vision, audio, and multimodal, not only text.
- Large language model (LLM) era: Transformer models are scaled to billions of parameters, leading to new ways of interacting with them, such as prompting and chat. More documentation starts to appear about how to efficiently train LLMs, including parameter-efficient fine-tuning (PEFT) methods, distributed training, data parallelism, and more.
- Optimization era: Unless you're GPU-rich, running LLMs for inference or training can be a challenge, so there is a lot of interest in ways to democratize LLMs for the GPU-poor. There is more documentation about methods such as quantization, FlashAttention, key-value cache optimization, and Low-Rank Adaptation (LoRA).

Each era incrementally added new content to the documentation, unbalancing and obscuring its earlier parts. Content sprawls over a larger surface, and navigation becomes more complex.

In the tree ring model, new content is layered progressively on top of previous content. In the integrated model, by contrast, content coexists as part of the overall documentation.

The redesign helps rebalance the overall documentation experience. Content feels native and integrated rather than tacked on.

Next Steps

In this post, we explored the reasons and motivations behind the effort to redesign the Transformers documentation.

Stay tuned for the next post, which identifies the mess in more detail and answers important questions such as who the intended users and stakeholders are, what the current state of the content is, and how it's being interpreted.

Shoutout to [@evilpingwin](https://x.com/evilpingwin) for the feedback and motivation to redesign the docs.