This is a guest blog post by the Pollen Robotics team. We are the creators of Reachy, an open-source humanoid robot designed to operate in the real world.

In the context of autonomous behaviors, the essence of a robot's usability lies in its ability to understand and interact with its environment. This understanding comes primarily from visual perception, which enables robots to identify objects, recognize people, navigate spaces, and much more.

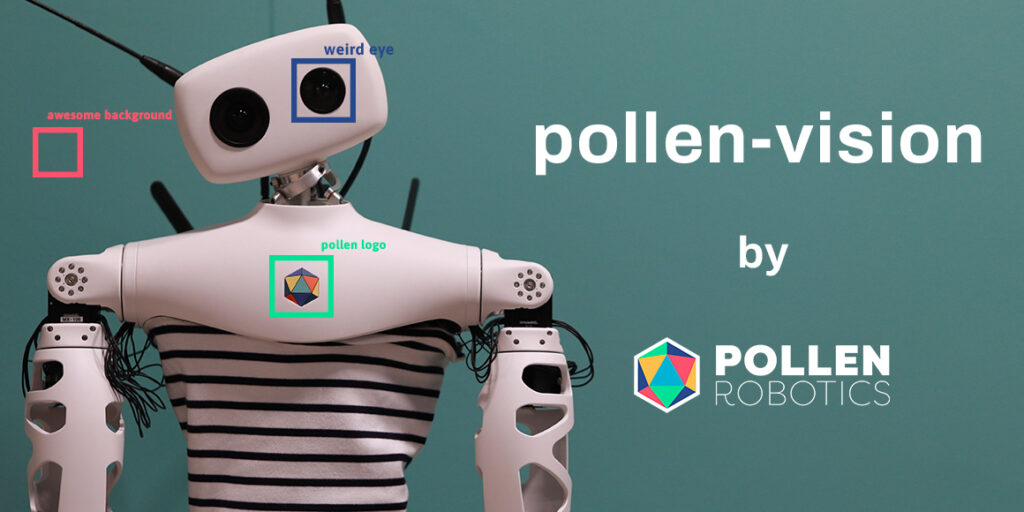

We're excited to share the initial release of our open-source pollen-vision library, a first step towards giving our robots the autonomy to grasp unknown objects. This library is a carefully curated collection of vision models chosen for their direct applicability to robotics. Pollen-vision is designed to be easy to install and use. It is composed of independent modules that can be combined to create a 3D object detection pipeline, which retrieves the position of objects in 3D space (x, y, z).

We focused on selecting zero-shot models, which eliminates the need for any training and makes these tools usable right out of the box.

Our first release focuses on 3D object detection, which lays the groundwork for tasks such as robotic grasping by providing a reliable estimate of objects' spatial coordinates. Although it is currently limited to positioning in 3D space (it does not extend to full 6D pose estimation), this functionality establishes a solid foundation for basic robotic manipulation tasks.

Pollen-Vision Core Models

The library encapsulates several key models. We want the models we use to be zero-shot and versatile, so that a wide range of objects can be detected without retraining. The models also have to be "real-time capable", meaning they should run at least at a few fps on a consumer GPU. The first models we chose are:

- OWL-ViT (Open World Localization - Vision Transformer, by Google Research): This model performs text-conditioned, zero-shot 2D object localization in RGB images. It outputs bounding boxes (like YOLO).
- MobileSAM: A lightweight version of the Segment Anything Model (SAM) by Meta AI. SAM is a zero-shot image segmentation model that can be prompted with bounding boxes or points.
- RAM (Recognize Anything Model, by OPPO Research Institute): Designed for zero-shot image tagging, RAM determines whether objects are present in an image based on textual descriptions, laying the groundwork for further analysis.

Let's get started with very few lines of code!

Below is an example of how to use pollen-vision to build a simple object detection and segmentation pipeline, taking only an image and text as input.

```python
from pollen_vision.vision_models.object_detection import OwlVitWrapper
from pollen_vision.vision_models.object_segmentation import MobileSamWrapper
from pollen_vision.vision_models.utils import Annotator, get_bboxes

owl = OwlVitWrapper()
sam = MobileSamWrapper()
annotator = Annotator()

im = ...  # your RGB image
predictions = owl.infer(im, ["paper cup"])  # zero-shot object detection
bboxes = get_bboxes(predictions)

masks = sam.infer(im, bboxes=bboxes)  # zero-shot object segmentation
annotated_im = annotator.annotate(im, predictions, masks=masks)
```

OWL-ViT's inference time depends on the number of prompts provided (i.e., the number of objects to detect). On a laptop with an RTX 3070 GPU:

- 1 prompt: ~75 ms per frame
- 2 prompts: ~130 ms per frame
- 3 prompts: ~180 ms per frame
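As a rough sanity check against the "a few fps" requirement stated earlier, the short sketch below converts the per-frame latencies quoted above into frame rates and estimates the average cost of each extra prompt. It uses only the three timings from this post; the variable and function names are illustrative.

```python
# Per-frame OWL-ViT latencies quoted above (laptop RTX 3070), in milliseconds.
latency_ms = {1: 75.0, 2: 130.0, 3: 180.0}


def fps(ms: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    return 1000.0 / ms


for n_prompts, ms in latency_ms.items():
    print(f"{n_prompts} prompt(s): {ms:.0f} ms/frame -> {fps(ms):.1f} fps")

# Average extra cost per additional prompt, using the 1- and 3-prompt timings.
marginal_ms = (latency_ms[3] - latency_ms[1]) / (3 - 1)
print(f"~{marginal_ms:.1f} ms per additional prompt")
```

With these numbers, even three prompts stay above 5 fps, but each extra prompt adds roughly 50 ms per frame.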

So, performance-wise, it is worth prompting OWL-ViT only with objects that are known to be in the image. This is where RAM comes in handy: it is fast and provides exactly this information.
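The prompt-pruning idea can be sketched as a plain filtering step: keep only the candidate prompts that the tagger reports as present, then pass the survivors to OWL-ViT. This is a hypothetical helper (`select_prompts` is not part of pollen-vision), and it assumes the tags arrive as a list of strings; how RAM is actually called is not shown. Matching is exact after lowercasing, which is a simplification, since a tagger's vocabulary will not always match your prompt wording.

```python
from typing import Iterable, List


def select_prompts(candidates: Iterable[str], detected_tags: Iterable[str]) -> List[str]:
    """Keep the candidate prompts that also appear in the image tags.

    `detected_tags` is assumed to be a list of strings from an image tagger
    such as RAM. Matching is exact after trimming and lowercasing.
    """
    tags = {t.strip().lower() for t in detected_tags}
    seen = set()
    selected = []
    for c in candidates:
        key = c.strip().lower()
        if key in tags and key not in seen:  # keep order, drop duplicates
            seen.add(key)
            selected.append(c)
    return selected


candidates = ["paper cup", "mug", "banana", "screwdriver"]
tags = ["table", "Paper Cup", "banana"]  # hypothetical tagger output
print(select_prompts(candidates, tags))  # ['paper cup', 'banana']
```

Here only two prompts survive instead of four, which, going by the timings above, roughly halves OWL-ViT's per-frame cost.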

A robotics use case: grasping unknown objects in unconstrained environments

With the object's segmentation mask, we can estimate its (u, v) position in pixel space by computing the centroid of the binary mask. Having a segmentation mask is very convenient here, because it lets us average the depth values inside the mask rather than inside the full bounding box, which also contains background pixels that would skew the average.

One way to do that is to average the (u, v) coordinates of the non-zero pixels in the mask:

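```python
import numpy as np


def get_centroid(mask):
    # np.argwhere returns the (row, col), i.e. (v, u), indices of the non-zero
    # pixels; averaging them gives the centroid of the mask in pixel space.
    v_center, u_center = np.argwhere(mask > 0).mean(axis=0)
    return int(u_center), int(v_center)
```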
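To illustrate why averaging depth inside the mask is preferable to averaging inside the bounding box, here is a toy example on a synthetic depth image (the values are invented for illustration, not measurements): a thin, plus-shaped object at 0.5 m in front of a wall at 2.0 m. The helper names are hypothetical.

```python
import numpy as np

# Synthetic 6x6 depth image (meters): a wall at 2.0 m everywhere...
depth = np.full((6, 6), 2.0)
# ...and a thin plus-shaped object that fills only part of its bounding box.
mask = np.zeros((6, 6), dtype=np.uint8)
mask[1:4, 2] = 1
mask[2, 1:4] = 1
depth[mask > 0] = 0.5  # the object is 0.5 m from the camera


def mean_depth_in_mask(depth: np.ndarray, mask: np.ndarray) -> float:
    """Average depth over the pixels where the mask is non-zero."""
    return float(depth[mask > 0].mean())


def mean_depth_in_bbox(depth: np.ndarray, mask: np.ndarray) -> float:
    """Average depth over the axis-aligned bounding box of the mask."""
    rows, cols = np.nonzero(mask)
    box = depth[rows.min():rows.max() + 1, cols.min():cols.max() + 1]
    return float(box.mean())


print(f"mask average: {mean_depth_in_mask(depth, mask):.2f} m")  # 0.50 m
print(f"bbox average: {mean_depth_in_bbox(depth, mask):.2f} m")  # 1.17 m
```

The mask average recovers the object's 0.5 m, while the bounding-box average (about 1.17 m) is pulled towards the wall by the four background pixels inside the box.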

We can now bring in depth information to estimate the z coordinate of the object. The depth values are already in meters, but the (u, v) coordinates are expressed in pixels. We can get the (x, y, z) position of the object's centroid in meters using the camera's intrinsic matrix (K):

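```python
import numpy as np


def uv_to_xyz(z, u, v, K):
    # Principal point and focal lengths (in pixels) from the intrinsic matrix.
    cx = K[0, 2]
    cy = K[1, 2]
    fx = K[0, 0]
    fy = K[1, 1]
    # Pinhole back-projection: the pixel offset from the principal point,
    # scaled by depth over focal length, gives metric x and y.
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.array([x, y, z])
```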

This estimates the 3D position of the object in the camera’s reference frame.
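Putting these pieces together, the sketch below goes from a binary mask and an aligned depth image to an (x, y, z) estimate in the camera frame. It is a minimal sketch under a few assumptions: the depth image is registered to the RGB image and expressed in meters, a depth of zero means "no measurement" and is ignored, and `mask_to_xyz` is a hypothetical name, not a pollen-vision function. Unlike `get_centroid`, it keeps the centroid as floats rather than rounding to integer pixels.

```python
import numpy as np


def mask_to_xyz(mask: np.ndarray, depth: np.ndarray, K: np.ndarray) -> np.ndarray:
    """Estimate an object's (x, y, z) position in the camera frame, in meters.

    mask:  HxW array, non-zero on the object's pixels.
    depth: HxW depth image in meters, aligned with the RGB image (assumed).
    K:     3x3 camera intrinsic matrix.
    """
    on = mask > 0
    if not on.any():
        raise ValueError("empty mask")

    # Centroid in pixel space; np.nonzero returns (rows, cols), i.e. (v, u).
    v_idx, u_idx = np.nonzero(on)
    u, v = u_idx.mean(), v_idx.mean()

    # Average depth over the object's pixels only, skipping invalid zeros.
    d = depth[on]
    d = d[d > 0]
    if d.size == 0:
        raise ValueError("no valid depth inside the mask")
    z = float(d.mean())

    # Pinhole back-projection using the intrinsics.
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.array([x, y, z])


# Usage with made-up intrinsics and a synthetic 640x480 frame.
K = np.array([[600.0, 0.0, 320.0],
              [0.0, 600.0, 240.0],
              [0.0, 0.0, 1.0]])
mask = np.zeros((480, 640), dtype=np.uint8)
mask[230:251, 410:431] = 1          # 21x21 blob centered at (u=420, v=240)
depth = np.full((480, 640), 0.8)    # flat scene 0.8 m away
xyz = mask_to_xyz(mask, depth, K)
print(xyz)  # x ≈ 0.133 m, y = 0.0 m, z = 0.8 m
```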

If we know where the camera is positioned relative to the robot's origin frame, a simple transformation gives us the 3D position of the object in the robot's frame. This means we can move the robot's end effector to where the object is and grasp it! 🥳
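The frame change described above is a single rigid-body transform. Here is a minimal sketch, assuming the camera pose in the robot frame (rotation R and translation t) is already known, for example from calibration or the robot's kinematics. The numbers and frame conventions below (a ROS-style camera optical frame, and a robot frame with x forward, y left, z up) are assumptions for illustration, and the helper names are hypothetical.

```python
import numpy as np


def make_transform(R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def camera_to_robot(p_cam: np.ndarray, T_robot_cam: np.ndarray) -> np.ndarray:
    """Express a point given in the camera frame in the robot's origin frame."""
    p_h = np.append(p_cam, 1.0)  # homogeneous coordinates (x, y, z, 1)
    return (T_robot_cam @ p_h)[:3]


# Made-up extrinsics: camera 0.1 m forward of and 0.5 m above the robot origin,
# looking straight ahead. Camera optical frame: x right, y down, z forward.
# Robot frame: x forward, y left, z up. The columns of R are the camera axes
# expressed in the robot frame.
R = np.array([[0.0, 0.0, 1.0],
              [-1.0, 0.0, 0.0],
              [0.0, -1.0, 0.0]])
t = np.array([0.1, 0.0, 0.5])
T_robot_cam = make_transform(R, t)

p_cam = np.array([0.1, 0.0, 0.8])   # object 0.8 m ahead, 0.1 m to the right
p_robot = camera_to_robot(p_cam, T_robot_cam)
print(p_robot)  # [0.9, -0.1, 0.5] up to floating-point rounding
```

On a real robot the camera pose usually comes from the head's joint angles through forward kinematics, so T_robot_cam would be recomputed whenever the head moves.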

What’s next?

What we presented in this post is a first step towards our goal: autonomous grasping of unknown objects in the wild. There are still a few issues to address:

- OWL-ViT does not detect everything every time and can be inconsistent. We are looking for a better option.
- There is no temporal or spatial consistency so far: everything is recomputed at every frame. We are currently working on integrating a point tracking solution to improve the consistency of the detections.
- The grasping technique (only frontal grasps for now) was not the focus of this work. We will be working on different approaches to strengthen our grasping capabilities, in terms of both perception (6D detection) and grasping pose generation.
- Overall speed could be improved.

Try pollen-vision

Would you like to try pollen-vision? Check out our GitHub repository!