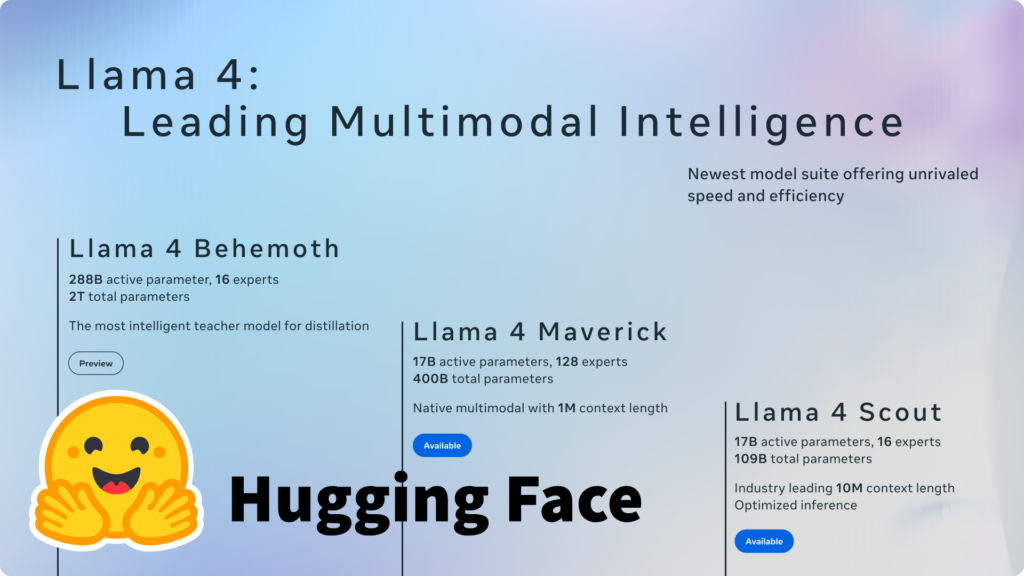

We are extremely excited to welcome the next generation of large language models from Meta to the Hugging Face Hub: Llama 4 Maverick (~400B) and Llama 4 Scout (~109B)! Both are Mixture-of-Experts (MoE) models with 17B active parameters.

Released today, these powerful, natively multimodal models represent a significant leap forward. We worked closely with Meta to ensure seamless integration into the Hugging Face ecosystem, including both transformers and TGI, from day one.

This is just the beginning of our journey with Llama 4. Over the coming days, we will continue to work with the community to build amazing models, datasets, and applications with Maverick and Scout! 🔥

What is Llama 4?

Llama 4, developed by Meta, introduces a new auto-regressive Mixture-of-Experts (MoE) architecture. This generation includes two models.

The highly capable Llama 4 Maverick has 17B active parameters out of ~400B total, with 128 experts. The efficient Llama 4 Scout also has 17B active parameters, out of ~109B total, using only 16 experts.
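To make "17B active out of ~400B total" concrete, here is a toy sketch of top-k expert routing in plain Python. It is a generic illustration, not Meta's implementation: the scalar "experts", the router scores, and the top_k values are hypothetical. A router scores every expert for each token, but only the selected experts actually run, so only their weights are "active" for that token. In a real MoE transformer the active count also includes dense components such as attention, so the ratio printed at the end is only a rough guide.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, experts, gate_logits, top_k=1):
    """Route one token through only the top_k highest-scoring experts."""
    # Rank experts by router score and keep the best top_k
    chosen = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:top_k]
    # Renormalize the router scores over the chosen experts only
    weights = softmax([gate_logits[i] for i in chosen])
    # Only the chosen experts are evaluated; the rest are skipped for this token
    out = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return out, chosen

# 16 hypothetical scalar "experts" standing in for feed-forward blocks
experts = [lambda x, k=k: (k + 1) * x for k in range(16)]
gate_logits = [0.1 * k for k in range(16)]   # hypothetical router scores for one token

y, used = moe_forward(2.0, experts, gate_logits, top_k=1)
print(y, used)   # -> 32.0 [15]

# Share of total weights that are active per token, using the post's figures
for name, active, total in [("Maverick", 17, 400), ("Scout", 17, 109)]:
    print(f"{name}: {active}B / {total}B = {active / total:.1%} active per token")
```

With more experts (128 vs 16), Maverick stores far more parameters while keeping the same per-token compute budget as Scout.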

Both models leverage early fusion for native multimodality, which lets them process text and image inputs. Maverick and Scout are both trained on up to 40 trillion tokens of data covering 200 languages (with specific fine-tuning support for 12 languages, including Arabic, Spanish, German, and Hindi).

For deployment, Llama 4 Scout is designed for accessibility: it fits on a single server-grade GPU via on-the-fly 4-bit or 8-bit quantization, while Maverick is available in BF16 and FP8 formats. These models are released under a custom Llama 4 Community License Agreement, available in the model repositories.
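A rough way to see why quantization matters for single-GPU deployment is to count weight bytes. The sketch below is a generic illustration, not the on-the-fly int4 code provided for Scout: it shows per-tensor symmetric int4 quantization (each weight becomes an integer in [-8, 7] sharing one float scale) and a weights-only memory estimate for the approximate parameter counts quoted above. It ignores the KV cache, activations, and scale storage, so real requirements are higher; the example weights are hypothetical.

```python
def quantize_int4_symmetric(weights):
    """Map floats to integers in [-8, 7] that share one float scale (illustrative only)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0   # largest magnitude maps to +/-7
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats; with this scale the error per weight is at most scale / 2."""
    return [v * scale for v in q]

# Bytes needed to store one parameter in each format
BYTES_PER_PARAM = {"bf16": 2.0, "fp8/int8": 1.0, "int4": 0.5}

def weight_memory_gb(params_billion, fmt):
    """Weights only: 1e9 params * bytes per param / 1e9 bytes per GB."""
    return params_billion * BYTES_PER_PARAM[fmt]

w = [0.12, -0.7, 0.33, 0.05, -0.21]   # hypothetical weights
q, scale = quantize_int4_symmetric(w)
print(q, [round(v, 3) for v in dequantize(q, scale)])

for name, params in [("Scout", 109), ("Maverick", 400)]:   # approximate totals from the post
    sizes = ", ".join(f"{fmt}: ~{weight_memory_gb(params, fmt):.1f} GB" for fmt in BYTES_PER_PARAM)
    print(f"{name}: {sizes}")
```

Compare these weights-only figures against the memory of the GPU you plan to use, leaving headroom for the KV cache.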

We are excited to announce the following integrations to help the community take advantage of these state-of-the-art models right away:

- Model checkpoints on the Hub: The weights of both Llama 4 Maverick and Llama 4 Scout are available directly on the Hugging Face Hub under the meta-llama organization. This includes both base and instruction-tuned variants, making them easy to access, explore, and download. You must accept the license terms on the model card before you can access the weights.
- Hugging Face transformers integration: Build now! Llama 4 models are fully integrated with transformers (version v4.51.0). This allows for easy loading, inference, and fine-tuning using familiar APIs, including support for their native multimodal capabilities and downstream libraries such as TRL.
- Automatic support for tensor parallelism and automatic device mapping in transformers.
- Text Generation Inference (TGI) support: Both models are supported in TGI for optimized and scalable deployment. This enables high-throughput text generation and makes it easier to integrate Llama 4 into production applications.
- Quantization support: Code for on-the-fly int4 quantization is provided for Scout, enabling deployment on smaller hardware footprints while minimizing performance degradation. Maverick includes FP8-quantized weights for efficient deployment on compatible hardware.
- Xet storage: To improve uploads and downloads and to support faster iteration on community fine-tuned models, we launched all Llama 4 models using the Xet storage backend. This storage system is designed for faster uploads and downloads, and it achieves around 25% deduplication on Llama 4. All derivative models (fine-tunes, quantizations, etc.) should see higher deduplication (~40%), saving the community even more time and bandwidth.
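Deduplication works by splitting files into chunks, hashing each chunk, and storing only chunks that have not been seen before, so a derivative model that leaves some bytes unchanged can reuse the chunks it shares with the base. The toy sketch below computes a deduplication ratio with fixed-size chunks and SHA-256 hashes. This is a simplification for illustration (Xet's production chunking is content-defined and more involved), and the byte strings are hypothetical stand-ins for model files.

```python
import hashlib

def chunk(data: bytes, size: int):
    """Fixed-size chunking (a simplification of real content-defined chunking)."""
    return [data[i:i + size] for i in range(0, len(data), size)]

def dedup_ratio(files, chunk_size=4):
    """Fraction of bytes that need no new storage because an identical chunk already exists."""
    seen = set()
    total = stored = 0
    for data in files:
        for c in chunk(data, chunk_size):
            total += len(c)
            digest = hashlib.sha256(c).digest()
            if digest not in seen:   # first time this chunk appears: store it
                seen.add(digest)
                stored += len(c)
    return 1 - stored / total if total else 0.0

base = b"AAAABBBBCCCCDDDD"       # hypothetical base model file
derived = b"AAAABBBBCCCCEEEE"    # hypothetical derivative: only the last chunk differs
print(f"{dedup_ratio([base, derived]):.1%} of bytes deduplicated")   # -> 37.5% of bytes deduplicated
```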

How to use with transformers

Getting started with Llama 4 using transformers is straightforward. Make sure you have transformers v4.51.0 or later installed (pip install -U transformers huggingface_hub[hf_xet]). Here is a quick example that uses the instruction-tuned Maverick model to answer a question about two images, using tensor parallelism for maximum speed. You need to run this script on an instance with 8 GPUs, using a command like the following:

```bash
torchrun --nproc_per_node=8 script.py
```

```python
import torch
from transformers import AutoProcessor, Llama4ForConditionalGeneration

model_id = "meta-llama/Llama-4-Maverick-17B-128E-Instruct"

processor = AutoProcessor.from_pretrained(model_id)
model = Llama4ForConditionalGeneration.from_pretrained(
    model_id,
    attn_implementation="flex_attention",
    device_map="auto",  # let transformers place the weights across the available GPUs
    torch_dtype=torch.bfloat16,
)

url1 = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/0052a70beed5bf71b92610a43a52df6d286cd5f3/diffusers/rabbit.jpg"
url2 = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/cat_style_layout.png"
# One user turn containing two images followed by a text question
messages = [
    {"role": "user", "content": [
        {"type": "image", "url": url1},
        {"type": "image", "url": url2},
        {"type": "text", "text": "Can you explain how these two images look similar and how they differ?"},
    ]},
]

# Apply the chat template and tokenize, then move the tensors to the model's device
inputs = processor.apply_chat_template(
    messages, add_generation_prompt=True, tokenize=True, return_dict=True, return_tensors="pt",
).to(model.device)

outputs = model.generate(**inputs, max_new_tokens=256)
# Decode only the newly generated tokens, skipping the prompt
response = processor.batch_decode(outputs[:, inputs["input_ids"].shape[-1]:])[0]
print(response)
print(outputs[0])  # raw token IDs of the full sequence
```
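Tensor parallelism splits individual weight matrices across GPUs so that each device stores and multiplies only a slice. The plain-Python sketch below shows the two basic splits for a single matrix-vector product: sharding the output rows (each shard computes part of the output, joined by an all-gather) and sharding the input dimension (each shard computes a partial sum, combined by an all-reduce). It illustrates the idea under toy assumptions and is not how transformers lays out Llama 4 across the 8 GPUs in the example above; Python lists stand in for devices, and the collectives are simulated.

```python
def matvec(W, x):
    """Reference y = W @ x for a matrix stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def output_sharded_matvec(W, x, num_shards):
    """Each shard owns a block of output rows and computes that slice of y."""
    per = len(W) // num_shards
    assert per * num_shards == len(W), "rows must split evenly across shards"
    slices = [matvec(W[i * per:(i + 1) * per], x) for i in range(num_shards)]
    # Simulated all-gather: concatenate the per-shard output slices
    return [v for part in slices for v in part]

def input_sharded_matvec(W, x, num_shards):
    """Each shard owns a block of input columns (and of x) and computes a partial sum."""
    per = len(x) // num_shards
    assert per * num_shards == len(x), "columns must split evenly across shards"
    partials = [
        matvec([row[i * per:(i + 1) * per] for row in W], x[i * per:(i + 1) * per])
        for i in range(num_shards)
    ]
    # Simulated all-reduce: sum the partial outputs elementwise
    return [sum(vals) for vals in zip(*partials)]

W = [[4 * r + c for c in range(4)] for r in range(8)]   # toy 8x4 weight matrix
x = [1, 0, 2, 1]
print(output_sharded_matvec(W, x, num_shards=8))         # -> [7, 23, 39, 55, 71, 87, 103, 119]
print(input_sharded_matvec(W, x, num_shards=2) == matvec(W, x))   # -> True
```

Both splits reproduce the unsharded result; they differ in which communication step is needed afterwards.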

Check out the model cards in the repositories (Llama 4 Maverick (~400B) and Llama 4 Scout (~109B)) for detailed usage instructions, including multimodal examples, specific prompt formats (such as system prompts), quantization details, and advanced configuration options.

Evaluation results confirm the strength of these models, showing state-of-the-art performance that significantly outperforms predecessors such as Llama 3.1 405B. For example, on reasoning and knowledge tasks, the instruction-tuned Maverick scores 80.5% on MMLU Pro and 69.8% on GPQA Diamond, while Scout scores 74.3% and 57.2%, respectively.

Pre-trained models

| Category | Benchmark | # Shots | Metric | Llama 3.1 70B | Llama 3.1 405B | Llama 4 Scout | Llama 4 Maverick |
|---|---|---|---|---|---|---|---|
| Reasoning & Knowledge | MMLU | 5 | macro_avg/acc_char | 79.3 | 85.2 | 79.6 | 85.5 |
| | MMLU-Pro | 5 | macro_avg/em | 53.8 | 61.6 | 58.2 | 62.9 |
| | MATH | 4 | em_maj1 | | 53.5 | 50.3 | 61.2 |
| Code | MBPP | 3 | pass@1 | 66.4 | 74.4 | 67.8 | 77.6 |
| Multilingual | TydiQA | 1 | average/f1 | 29.9 | 34.3 | 31.5 | 31.7 |
| Image | ChartQA | | | | | | |

Instruction-tuned models

| Category | Benchmark | # Shots | Metric | Llama 3.3 70B | Llama 3.1 405B | Llama 4 Scout | Llama 4 Maverick |
|---|---|---|---|---|---|---|---|
| Image Understanding | DocVQA (test) | 0 | anls | | | 94.4 | 94.4 |
| Coding | LiveCodeBench (10/01/2024-02/01/2025) | 0 | pass@1 | 33.3 | 27.7 | 32.8 | 43.4 |
| Multilingual | MGSM | 0 | average/em | 91.1 | 91.6 | 90.6 | 92.3 |
| Long Context | MTOB (half book) eng->kgv/kgv->eng | - | chrF | Context window is 128K | Context window is 128K | 42.2/36.6 | 54.0/46.4 |
| | MTOB (full book) eng->kgv/kgv->eng | - | chrF | Context window is 128K | Context window is 128K | 39.7/36.3 | 50.8/36.3 |

Acknowledgments

Releasing a giant like Llama 4 takes an enormous effort across teams, geographies, and a lot of VMs. In no particular order, we would like to thank Arthur, Lysandre, Cyril, Pablo, Marc, and Mohammed from the transformers team. We are grateful to the full vLLM team for rich discussions, insights, shared testing, and debugging throughout this challenging integration. Given the large optimization needs, we would like to thank Mohit for single-handedly adding Llama 4 support to TGI. These chonky models require some serious engineering at the storage level, which took a lot of effort from Ajit, Rajat, Jared, Di, Yucheng, and the rest of the Xet team.

Many people were involved in this effort. Thank you to the Hugging Face, vLLM, and Meta Llama teams for the amazing synergy!
