Training large language models (LLMs) has been central to progress in artificial intelligence, but it is not without challenges. As model sizes and datasets continue to grow, traditional optimization methods, AdamW in particular, begin to show their limitations. One of the main challenges is managing computational cost while keeping training stable over long runs. Issues such as vanishing or exploding gradients, inconsistent update magnitudes across parameter matrices, and heavy resource demands in distributed environments complicate the process. As researchers push toward models with billions of parameters trained on trillions of tokens, there is a pressing need for more refined optimization techniques that can handle this complexity with greater efficiency and stability.

To address these challenges, Moonshot AI, in collaboration with UCLA, developed Moonlight, a Mixture-of-Experts (MoE) model optimized with the Muon optimizer. Moonlight has 3 billion activated parameters out of 16 billion total parameters and was trained on 5.7 trillion tokens. The work builds on the Muon optimizer, originally designed for smaller models, and scales its principles to meet the demands of larger training regimes. Muon’s core innovation is matrix orthogonalization via Newton-Schulz iterations, which helps ensure that gradient updates are applied more uniformly across the model’s parameter space. By addressing common pitfalls associated with AdamW, Muon offers a promising alternative that improves both training efficiency and stability.
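To make the orthogonalization idea concrete, here is a minimal NumPy sketch of a Newton-Schulz iteration. It uses the textbook cubic form of the iteration, which is an assumption made for clarity; production Muon implementations typically use a tuned higher-order polynomial and far fewer steps. The function name, step count, and example matrix are illustrative and do not come from the report.

```python
import numpy as np

def newton_schulz_orthogonalize(G, steps=15, eps=1e-7):
    """Approximate U @ V.T (from the SVD G = U @ diag(s) @ V.T) without an SVD.

    Textbook cubic Newton-Schulz iteration; an illustrative stand-in,
    not the exact polynomial used in the Moonlight report.
    """
    # Dividing by the Frobenius norm puts every singular value in (0, 1],
    # which is inside the convergence region of the cubic iteration.
    X = G / (np.linalg.norm(G) + eps)
    for _ in range(steps):
        # Each step pushes every singular value of X toward 1
        # while leaving the singular vectors unchanged.
        X = 1.5 * X - 0.5 * (X @ X.T) @ X
    return X

# Hypothetical 2x3 "gradient" matrix with singular values sqrt(5) and 1.
G = np.array([[2.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
O = newton_schulz_orthogonalize(G)
print(np.round(O @ O.T, 4))  # rows of O are (approximately) orthonormal
```

Because the result has all singular values close to 1, no single direction of the gradient dominates the update, which is the sense in which updates are spread more uniformly.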

Technical details

A closer look at the technical innovations behind Moonlight reveals the careful adjustments made to the Muon optimizer. Two modifications were key to making Muon suitable for large-scale training. First, integrating weight decay (a technique commonly used with AdamW) helps control the growth of weight magnitudes, particularly when training large models over large token counts. Without weight decay, weights and layer outputs can grow excessively, potentially degrading model performance over time.
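As a rough illustration of how weight decay keeps weight magnitudes in check, the sketch below applies an AdamW-style decoupled decay term alongside an arbitrary update direction. The function name and the `lr` and `weight_decay` values are hypothetical and chosen only for the demonstration.

```python
import numpy as np

def step_with_weight_decay(W, update, lr=0.02, weight_decay=0.1):
    """One step of W <- W - lr * (update + weight_decay * W).

    `update` stands for whatever direction the optimizer produced;
    lr and weight_decay are illustrative values, not the report's settings.
    """
    return W - lr * (update + weight_decay * W)

W = np.ones((2, 3))
no_signal = np.zeros_like(W)  # pretend the optimizer proposes no change

# With no update signal, each step shrinks W by the factor (1 - lr * weight_decay).
for _ in range(100):
    W = step_with_weight_decay(W, no_signal)

print(W[0, 0])
```

The decay term pulls weights toward zero in proportion to their size, so magnitudes cannot drift upward indefinitely over a long run.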

The second adjustment calibrates the update scale for each parameter matrix. In practice, the magnitude of a Muon update depends on the shape of the weight matrix. To harmonize these updates, the method scales each one by a factor proportional to the square root of the larger dimension of the matrix. This brings Muon’s update magnitude closer to that of AdamW, whose behavior is well understood, and ensures that all parameters are updated consistently.
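The shape dependence can be checked numerically. A matrix with orthonormal columns and shape (rows, cols) has a root-mean-square entry of 1 / sqrt(max(rows, cols)), so multiplying by sqrt(max(rows, cols)) equalizes the update size across shapes. In the sketch below, the helper names and the target value `base_rms=0.2` are assumptions for illustration; the text above only states that the factor is proportional to the square root of the larger dimension.

```python
import numpy as np

def rms(M):
    """Root-mean-square of the entries of M."""
    return float(np.sqrt(np.mean(M ** 2)))

def scale_update(O, base_rms=0.2):
    """Rescale an orthogonalized update so its RMS is shape-independent.

    base_rms is an assumed target magnitude, not a value taken from the text.
    """
    rows, cols = O.shape
    return base_rms * np.sqrt(max(rows, cols)) * O

rng = np.random.default_rng(0)
for rows, cols in [(64, 64), (256, 64), (1024, 64)]:
    # QR of a random matrix yields Q with orthonormal columns, used here
    # as a stand-in for an orthogonalized Muon update.
    Q, _ = np.linalg.qr(rng.standard_normal((rows, cols)))
    print((rows, cols), round(rms(Q), 4), round(rms(scale_update(Q)), 4))
```

The unscaled RMS shrinks as the larger dimension grows, while the scaled RMS is the same for every shape.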

Furthermore, the distributed implementation of Muon builds on ZeRO-1, partitioning the optimizer state across data-parallel groups. This approach reduces memory overhead and limits the communication cost typically associated with distributed training. Some additional steps are required, such as gathering gradients and performing the Newton-Schulz iterations, but these are optimized so that their impact on overall training time is minimal. The result is an optimizer that maintains competitive performance while requiring fewer computational resources.
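The gather-then-orthogonalize pattern can be sketched as a toy, single-process simulation. Everything here is a simplifying assumption: the "ranks" are list entries rather than real processes, each rank owns a row-slice of one matrix, and an exact SVD stands in for the Newton-Schulz step. A real implementation would use collective communication primitives instead of `np.concatenate`.

```python
import numpy as np

def orthogonalize(M):
    # Stand-in for the Newton-Schulz step: exact U @ V.T via SVD.
    U, _, Vt = np.linalg.svd(M, full_matrices=False)
    return U @ Vt

def sharded_update(local_shards):
    """Simulate one update where each rank owns some rows of one matrix.

    Orthogonalization needs the whole matrix, so the shards are gathered
    first; afterwards each rank keeps only the rows it owns.
    """
    full = np.concatenate(local_shards, axis=0)  # simulated gather
    new_shards, start = [], 0
    for shard in local_shards:
        update = orthogonalize(full)             # computed on the full matrix
        new_shards.append(update[start:start + shard.shape[0]])
        start += shard.shape[0]
    return new_shards

rng = np.random.default_rng(0)
shards = [rng.standard_normal((2, 6)) for _ in range(4)]  # 4 simulated ranks
updates = sharded_update(shards)
print([u.shape for u in updates])
```

Only the local slice has to persist between steps on each rank, which is where the memory saving of a partitioned optimizer state comes from.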

Empirical results and insights from data analysis

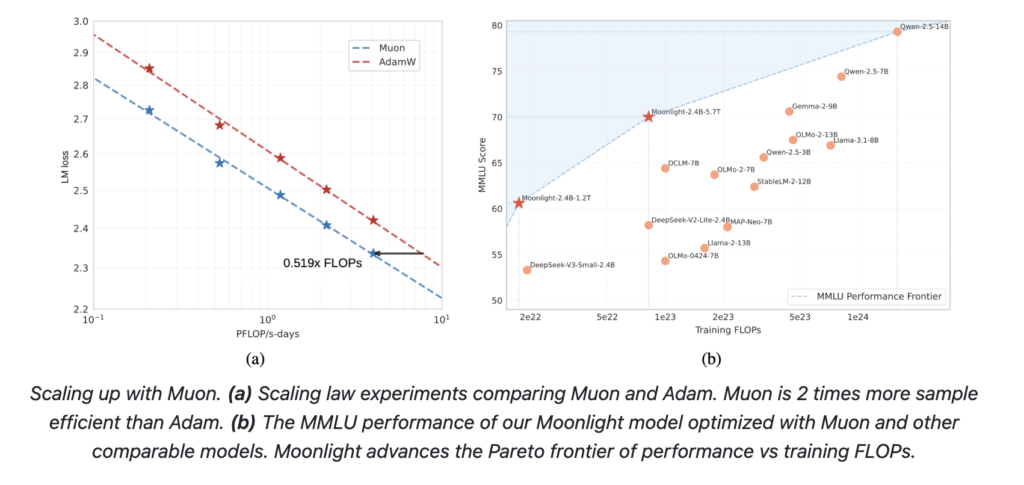

The empirical evaluation of Moonlight highlights the practical benefits of these technical improvements. At an intermediate checkpoint of 1.2 trillion tokens, Moonlight showed modest improvements over its counterpart trained with AdamW (referred to as Moonlight-A) and over other comparable MoE models. For example, on language-understanding tasks, Moonlight achieved slightly higher scores on benchmarks such as MMLU. On code generation tasks the gains were more pronounced, suggesting that Muon’s refined update mechanism contributes to better overall task performance.

Scaling experiments further demonstrate the advantages of Muon. These experiments show that Muon can match the performance of AdamW-trained models while using only about half of the training compute. This efficiency is an important consideration for researchers balancing resource constraints against the desire to push model capabilities. Furthermore, spectral analysis of the weight matrices shows that training Moonlight with Muon leads to a more diverse range of singular values. This diversity in update directions may help the model generalize better across a variety of tasks.
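One possible way to quantify how diverse a weight matrix's singular values are is a normalized entropy of the squared singular values. The metric and the function name below are assumptions chosen for illustration, and the example matrices are synthetic rather than taken from any model.

```python
import numpy as np

def svd_entropy(W):
    """Normalized entropy of the squared singular values of W.

    Close to 1.0 when energy is spread evenly over all singular directions,
    close to 0.0 when a single direction dominates.
    """
    s = np.linalg.svd(W, compute_uv=False)
    p = s ** 2 / np.sum(s ** 2)  # share of energy per singular direction
    p = p[p > 0]                 # skip exact zeros to avoid log(0)
    return float(-np.sum(p * np.log(p)) / np.log(len(s)))

evenly_spread = np.eye(4)                                       # all singular values equal
one_direction = np.outer([1.0, 2.0, 3.0], [1.0, 1.0, 1.0])      # rank-one matrix

print(svd_entropy(evenly_spread))
print(svd_entropy(one_direction))
```

Under this kind of metric, a matrix whose singular values cover a broader range of directions scores higher than one dominated by a few directions.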

Additional investigation at the supervised fine-tuning stage shows that when both pretraining and fine-tuning are performed with Muon, the optimizer’s advantages persist across the training pipeline. When the optimizer is switched between pretraining and fine-tuning, the differences are less pronounced, suggesting that consistency in the optimization method is beneficial.

Conclusion

In summary, the development of Moonlight represents a thoughtful advance in training large language models. By adopting the Muon optimizer, the teams at Moonshot AI and UCLA offer a viable alternative to traditional methods such as AdamW, demonstrating improved training efficiency and model stability. The key enhancements are the integration of weight decay and the adjustment of per-parameter update scales, both of which help harmonize updates across different types of weight matrices. The distributed implementation further underlines the practical benefits of the approach, particularly in reducing memory and communication overhead in large training environments.

The insights gained from the Moonlight project are clearly laid out in the technical report, “Muon is Scalable for LLM Training.” The work shows that, under compute-optimal conditions, Muon can achieve comparable or superior performance to AdamW while significantly reducing computational cost. The report also highlights that the transition from AdamW to Muon does not require extensive hyperparameter tuning, which simplifies integration for researchers.

Looking ahead, the open-sourcing of the Muon implementation, together with pretrained models and intermediate checkpoints, is expected to encourage further research into scalable optimization techniques. Future work may explore extending Muon to other norm constraints or integrating its benefits into a unified optimization framework that covers all model parameters. Such efforts could lead to more robust and efficient training strategies, gradually shaping a new standard for LLM development.

Check out the paper, the models on Hugging Face, and the GitHub page. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.
