LLM-based multi-agent (LLM-MA) systems let multiple language-model agents collaborate on complex tasks by dividing responsibilities among them. These systems are used in robotics, finance, and coding, but they face challenges in communication and refinement. Text-based communication produces long, unstructured exchanges, making it difficult to track tasks, maintain structure, and recall past interactions. Refinement methods such as discussion and feedback-based revision also struggle, because the order in which inputs are processed can cause important feedback to be ignored or biased. These issues limit how efficiently LLM-MA systems handle multi-step problems.

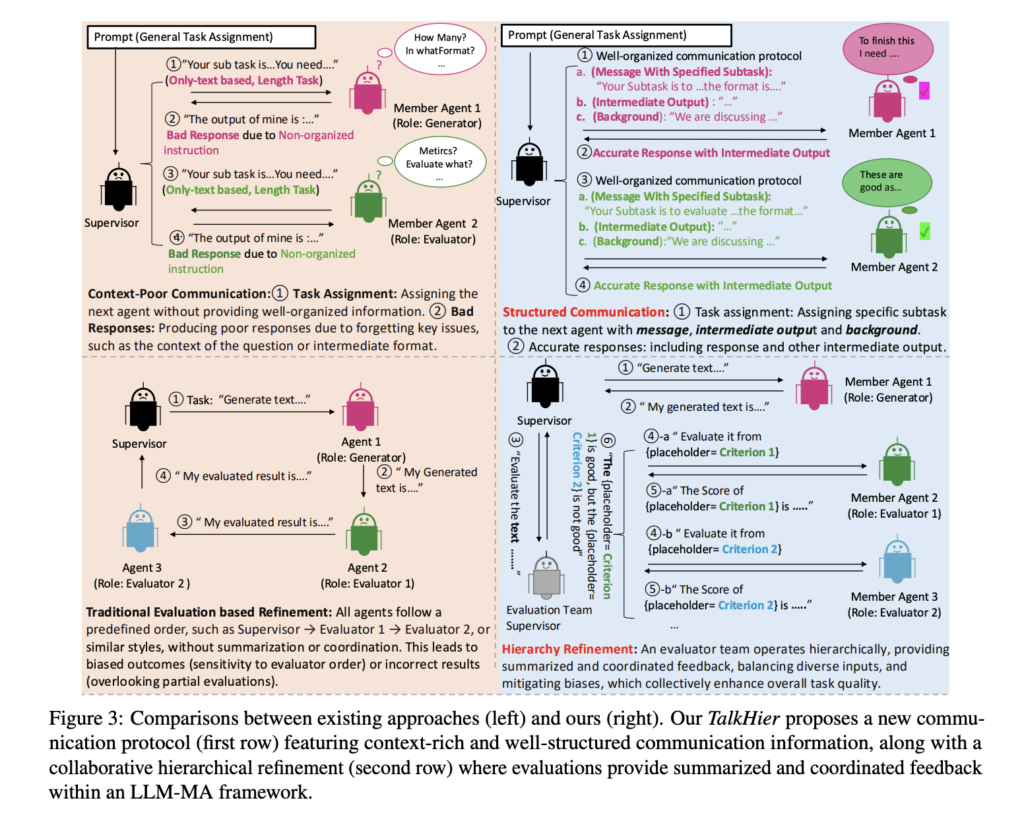

Currently, LLM-based multi-agent systems use discussion, self-refinement, and multi-agent feedback to handle complex tasks. Because these techniques rely on unstructured, text-based interactions, they are difficult to control: agents struggle to follow subtasks, recall previous interactions, and provide consistent responses. Various communication structures, including chain- and tree-based models, attempt to improve efficiency, but none defines an explicit protocol for structuring information. Feedback-and-refinement techniques try to improve accuracy, but biased and overlapping inputs make evaluations unreliable. Without structured communication and scalable feedback, such systems remain inefficient and error-prone.

To mitigate these issues, researchers at Sony Group Corporation in Japan have introduced TalkHier, a framework that uses structured communication protocols and hierarchical refinement to improve communication and task coordination in multi-agent systems. Unlike standard approaches, TalkHier explicitly defines agent interactions and task formulations, reducing errors and improving efficiency. Agents take on formalized roles, and the refinement process adapts automatically to different problems, resulting in better decision-making and coordination.

The framework organizes agents as a graph in which each node is an agent and each edge represents a communication path. Each agent has its own independent memory, which lets it retain relevant information and make decisions based on its own inputs without relying on shared memory. Communication follows a formal protocol: each message includes the content, background information, and intermediate outputs. Agents are organized into teams overseen by supervisors, and some agents act as both members and supervisors, producing a nested hierarchy. Work is assigned, evaluated, and refined over a series of iterations until it passes a quality threshold, with the goal of maximizing accuracy and minimizing errors.
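The following Python sketch illustrates the mechanics described above: agents as graph nodes with private memory, messages carrying content, background, and intermediate-output fields, and a supervisor loop that repeats assign, evaluate, and revise until a quality threshold is met. All names (`Message`, `Agent`, `send`, `refine`), the rule-based stand-in policies, and the 0.75 threshold are hypothetical assumptions for illustration. This is not TalkHier's implementation or API, and it models a single team rather than the full nested hierarchy.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Message:
    """A structured message with the three parts the article lists."""
    content: str              # the instruction or request itself
    background: str           # context the receiver needs to act on it
    intermediate_output: str  # the latest partial result, if any


@dataclass
class Agent:
    """A graph node; each instance keeps its own private memory (hypothetical)."""
    name: str
    policy: Callable[[Message, List[Message]], str]  # stand-in for an LLM call
    memory: List[Message] = field(default_factory=list)

    def receive(self, msg: Message) -> str:
        self.memory.append(msg)  # stored only in this agent's memory
        return self.policy(msg, self.memory)


# Directed communication graph: sender name -> names it may message.
Graph = Dict[str, List[str]]


def send(graph: Graph, agents: Dict[str, Agent], sender: str,
         receiver: str, msg: Message) -> str:
    """Deliver a message along an edge; reject paths not in the graph."""
    if receiver not in graph.get(sender, []):
        raise ValueError(f"no edge {sender} -> {receiver}")
    return agents[receiver].receive(msg)


def refine(graph: Graph, agents: Dict[str, Agent], task: str,
           generator: str, evaluator: str, threshold: float,
           max_iters: int = 5) -> Tuple[str, float, int]:
    """Supervisor loop (run on the supervisor node's behalf):
    assign, evaluate, and revise until the score passes the threshold."""
    draft, score = "", 0.0
    for iteration in range(1, max_iters + 1):
        # The supervisor asks the member to produce or revise a draft.
        draft = send(graph, agents, "supervisor", generator,
                     Message(task, "revise the previous draft", draft))
        # The supervisor asks the evaluator to score the new draft.
        score = float(send(graph, agents, "supervisor", evaluator,
                           Message("score this draft", task, draft)))
        if score >= threshold:
            return draft, score, iteration
    return draft, score, max_iters


def demo() -> Tuple[Tuple[str, float, int], Dict[str, Agent]]:
    """Toy run with hypothetical rule-based policies instead of LLMs."""
    agents = {
        # Each call extends the draft by one character.
        "generator": Agent("generator",
                           lambda m, mem: m.intermediate_output + "x"),
        # Score grows with draft length, capped at 1.0.
        "evaluator": Agent("evaluator",
                           lambda m, mem: str(min(1.0, len(m.intermediate_output) / 4))),
    }
    graph: Graph = {"supervisor": ["generator", "evaluator"]}
    result = refine(graph, agents, "write an answer",
                    "generator", "evaluator", threshold=0.75)
    return result, agents


if __name__ == "__main__":
    result, agents = demo()
    print(result)  # ('xxx', 0.75, 3)
```

In a real system each policy would wrap an LLM call. Keeping memory on each `Agent` instance rather than in a shared store is what makes it independent, and routing every message through `send` enforces that agents only talk along edges of the graph.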

The researchers evaluated TalkHier across multiple benchmarks to analyze its effectiveness. On the MMLU dataset, covering moral scenarios, college physics, machine learning, formal logic, and US foreign policy, TalkHier built on GPT-4o achieved the highest accuracy, 88.38%, surpassing AgentVerse (83.66%) and single-agent baselines such as ReAct-7@ (67.19%) and GPT-4o-7@ (71.15%), which shows the advantages of hierarchical refinement. On the WikiQA dataset for open-domain question answering, it outperformed the baselines with a ROUGE-1 score of 0.3461 (+5.32%) and a BERTScore of 0.6079 (+3.30%). Ablation studies showed that removing the evaluation supervisor or the structured communication significantly reduced accuracy, confirming the importance of both. In ad text generation on the Camera dataset, TalkHier outperformed the baseline by 17.63% across faithfulness, fluency, attractiveness, and character-count violations, and human ratings validated its multi-agent evaluations. Although OpenAI-o1's internal architecture has not been disclosed, TalkHier posted competitive MMLU scores against it and clearly beat it on WikiQA, showing greater adaptability than majority voting and open-source multi-agent systems.
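To make the MMLU comparison concrete, the snippet below computes TalkHier's lead in percentage points over each baseline, using only the accuracies quoted above. These are simple absolute differences; the article does not state how the relative WikiQA gains (+5.32%, +3.30%) were computed, so they are not reproduced here. The `margins` helper is a hypothetical name for illustration.

```python
# MMLU accuracies (%) as quoted in the article.
MMLU_ACCURACY = {
    "TalkHier (GPT-4o)": 88.38,
    "AgentVerse": 83.66,
    "GPT-4o-7@": 71.15,
    "ReAct-7@": 67.19,
}


def margins(scores: dict, reference: str) -> dict:
    """Percentage-point lead of `reference` over every other system."""
    ref = scores[reference]
    # Round to two decimals to strip floating-point noise from subtraction.
    return {name: round(ref - value, 2)
            for name, value in scores.items() if name != reference}


if __name__ == "__main__":
    print(margins(MMLU_ACCURACY, "TalkHier (GPT-4o)"))
    # {'AgentVerse': 4.72, 'GPT-4o-7@': 17.23, 'ReAct-7@': 21.19}
```

The gap to the strongest multi-agent baseline (AgentVerse) is under 5 points, while the gap to the single-agent baselines is roughly 17 to 21 points.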

Ultimately, the proposed framework improves communication, reasoning, and coordination in LLM multi-agent systems by combining structured protocols with hierarchical refinement, resulting in better performance on several benchmarks. It ensured structured interactions, including messages, intermediate results, and contextual information, while preserving diverse agent feedback. Despite higher API costs, TalkHier set a new benchmark for scalable and objective multi-agent cooperation. The methodology serves as a baseline for subsequent research, pointing toward more effective communication mechanisms and lower-cost multi-agent interactions, and ultimately toward advances in LLM-based cooperative systems.



Divyesh is a consulting intern at MarkTechPost. He is pursuing a BTech in Agricultural and Food Engineering at the Indian Institute of Technology, Kharagpur. He is a data science and machine learning enthusiast who wants to integrate these technologies into the agricultural domain and solve its challenges.
