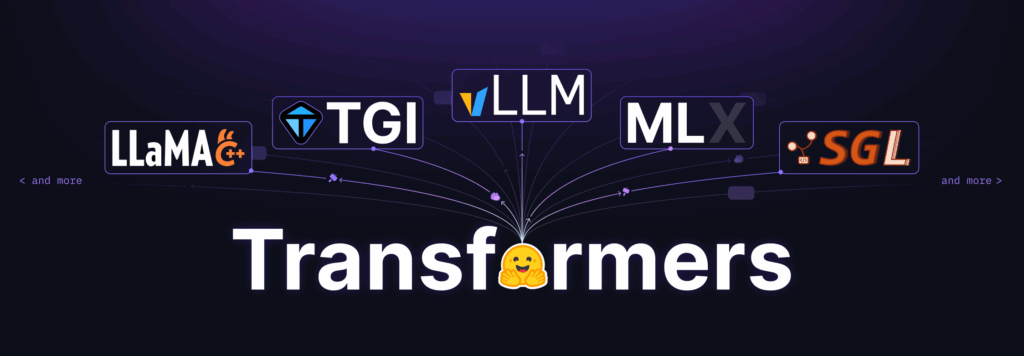

TLDR: Going forward, we aim to make Transformers the pivot across frameworks: if a model architecture is supported by Transformers, you can expect it to be supported in the rest of the ecosystem.

Transformers was created in 2019, shortly after the release of the BERT Transformer model. Since then, we have continuously aimed to add state-of-the-art architectures, initially focused on NLP and then growing into audio and computer vision. Today, Transformers is the default library for LLMs and VLMs in the Python ecosystem.

Transformers currently supports over 300 model architectures, with an average of about three new architectures added each week. We aim to release these architectures in a timely manner, and the library supports the most popular ones (Llamas, Qwens, GLMs, etc.).

A model-definition library

Over time, Transformers has become a central component of the ML ecosystem and one of the most complete toolkits in terms of model diversity. It is integrated into all popular training frameworks, including Axolotl, Unsloth, DeepSpeed, FSDP, PyTorch Lightning, TRL, Nanotron, and more.

Recently, we have been working hand in hand with the most popular inference engines (vLLM, SGLang, TGI, …) so that they can use Transformers as a backend. The added value is significant: as soon as a model is added to Transformers, it becomes available in these inference engines while taking advantage of the strengths each engine offers, such as inference optimizations, specialized kernels, and dynamic batching.

For example, here is how to use the Transformers backend in vLLM:

    from vllm import LLM

    # model_impl="transformers" tells vLLM to use the Transformers implementation of the model
    llm = LLM(model="new-transformers-model", model_impl="transformers")

That is all a new model needs to get super-fast, production-grade serving in vLLM!

For more information, see the vLLM documentation.

We also work very closely with llama.cpp and MLX so that the implementations in Transformers and these modeling libraries are highly interoperable. For example, thanks to a substantial community effort, it is now very easy to load GGUF files into Transformers for further fine-tuning. Conversely, Transformers models can easily be converted to GGUF files for use with llama.cpp.
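As a rough illustration of the GGUF path described above, the sketch below loads a GGUF checkpoint through the `gguf_file` argument of `from_pretrained`. The helper name `load_gguf_checkpoint` is ours, and the repository id and file name in the usage comment are placeholders rather than real checkpoints. It assumes `transformers`, `torch`, and the `gguf` package are installed.

```python
def load_gguf_checkpoint(repo_id: str, gguf_file: str):
    """Load a tokenizer and causal LM from a GGUF file (illustrative sketch).

    repo_id:   Hub repository (or local directory) that contains the GGUF file.
    gguf_file: name of the .gguf file inside that repository.
    """
    # Imported lazily so that defining this helper does not require transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Passing gguf_file asks Transformers to read the tokenizer and the weights
    # from the GGUF file and return ordinary PyTorch objects.
    tokenizer = AutoTokenizer.from_pretrained(repo_id, gguf_file=gguf_file)
    model = AutoModelForCausalLM.from_pretrained(repo_id, gguf_file=gguf_file)
    return tokenizer, model


# Usage (placeholder names, replace with a real repository and file):
# tokenizer, model = load_gguf_checkpoint("your-org/your-model-GGUF", "your-model.Q4_K_M.gguf")
```

The returned model is a regular Transformers model, so it can be passed to the usual training tools. Not every architecture or quantization type is necessarily supported on this path, so check the Transformers GGUF documentation for your model.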

The same applies to MLX, where Transformers' safetensors files are directly compatible with MLX models.

We are extremely proud that the Transformers format is being adopted by the community, bringing a lot of interoperability that we all benefit from. Train a model with Unsloth, deploy it with SGLang, and export it to llama.cpp to run it locally! We aim to keep supporting the community going forward.

Striving for even simpler model contributions

To make it easier for the community to use Transformers as a reference for model definitions, we strive to significantly lower the barrier to model contributions. We have been working on this for several years, and we will accelerate significantly over the next few weeks.

The modeling code for each model will be further simplified, with clear and concise APIs for the most important components (KV cache, different attention functions, kernel optimization). We will deprecate redundant components in favor of a simple, single way to use our APIs: we will encourage efficient tokenization by deprecating slow tokenizers, and likewise use the fast vectorized vision processors. We will continue to strengthen our work on modular model definitions, with the goal that new models require the absolute minimum of code changes. Contributions of 6,000 lines and 20 changed files for a new model are a thing of the past.
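To make the "single way to use the API" idea a little more concrete, here is a minimal sketch using options that exist in Transformers today: `use_fast=True` to request a fast tokenizer and `attn_implementation` to pick an attention function. The helper name and the allowlist of backends are our own assumptions for illustration; the library may accept other values, and this is not a preview of the simplified APIs mentioned above.

```python
# Attention backends this sketch accepts. This is an illustrative allowlist,
# not the full set of values the library supports.
KNOWN_ATTENTION_BACKENDS = {"eager", "sdpa", "flash_attention_2"}


def load_model_and_fast_tokenizer(model_id: str, attention: str = "sdpa"):
    """Load a causal LM with a chosen attention function and a fast tokenizer."""
    if attention not in KNOWN_ATTENTION_BACKENDS:
        raise ValueError(
            f"Unknown attention backend {attention!r}; "
            f"expected one of {sorted(KNOWN_ATTENTION_BACKENDS)}"
        )

    # Imported lazily so that the check above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # use_fast=True requests the Rust-backed tokenizer rather than the slow Python one.
    tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=True)
    # attn_implementation selects which attention function the model uses.
    model = AutoModelForCausalLM.from_pretrained(model_id, attn_implementation=attention)
    return tokenizer, model
```

Swapping the attention function is a single argument here, which is the kind of one-knob interface the simplification work aims for across the other components as well.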

How does this affect you?

What does this mean for you as a model user?

As a model user, you should see the tools you use become even more interoperable in the future.

This does not mean that we intend to lock you into using Transformers in your experiments. Rather, thanks to this standardization of modeling, you can expect the tools you use for training, inference, and production to work together efficiently.

What does this mean for you as a model creator?

As a model creator, this means that a single contribution makes your model available in all downstream libraries that have integrated that modeling implementation. We have seen this many times over the years: releasing a model is stressful, and integrating it into all the important libraries is often a significant time sink.

By standardizing model implementations in a community-driven way, we hope to lower the barrier to contributing to the field across libraries.

We firmly believe that this renewed direction will help standardize an ecosystem that is often at risk of fragmentation. We would love to hear your feedback on the direction the team has decided to take and on the changes we could make to get there. Come and see us in the transformers-community support tab on the Hub!