
A practical guide to collecting data, training policies, and deploying autonomous medical robot workflows on real hardware.


Introduction

Simulation has become a cornerstone of medical imaging for addressing data gaps. In healthcare robotics, however, simulation has until now often been too slow, too siloed, or too difficult to translate into real-world systems.

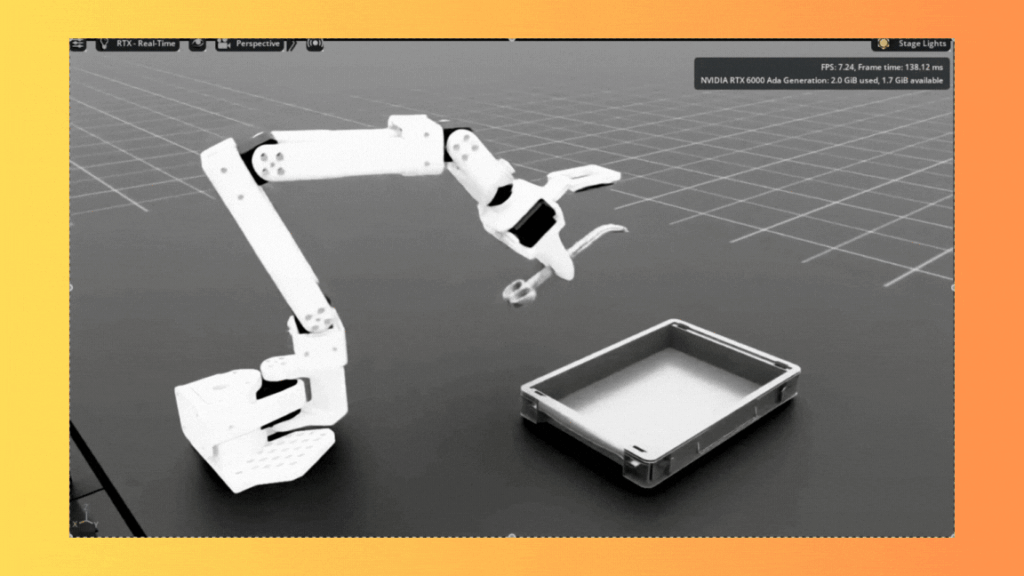

NVIDIA Isaac for Healthcare, a developer framework for AI healthcare robotics, helps developers solve these challenges with an integrated data collection, training, and evaluation pipeline that works across both simulation and hardware. Specifically, the Isaac for Healthcare v0.4 release provides an end-to-end SO-ARM-based starter workflow and a "bring your own operating room" tutorial. The SO-ARM starter workflow lowers the barrier for MedTech developers to experience the complete path from simulation to training to deployment, and to quickly start building and validating autonomous behaviors on real hardware.

This post describes the starter workflow and its technical implementation details to help you build a surgical robot in less time than ever before.

SO-ARM starter workflow: Building an embodied surgical assistant

The SO-ARM starter workflow introduces new ways to explore surgical assistance tasks and provides developers with a complete end-to-end pipeline for autonomous surgical assistance.

With this workflow, you can:

- Collect real-world and synthetic data with SO-ARM using LeRobot
- Fine-tune GR00T N1.5, evaluate it in Isaac Lab, and deploy it to hardware

This workflow gives developers a safe, reproducible environment in which to train and refine assistive skills before they are used in the operating room.

Technical implementation

This workflow implements a three-stage pipeline that integrates simulation and real hardware.

- Data acquisition: Mixed simulation and real-world teleoperation demonstrations using the SO-ARM101 and LeRobot
- Model training: Fine-tuning GR00T N1.5 on the combined datasets with dual-camera vision
- Policy deployment: Real-time inference on physical hardware with RTI DDS communication
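
The deployment stage is, at its core, a closed observe, infer, act loop. A minimal sketch of a fixed-rate version follows, assuming a synchronous policy call. The names read_observation, policy, and send_action are hypothetical stand-ins for the camera and joint readers, the GR00T N1.5 inference call, and the RTI DDS publisher; they are not the workflow's API, and the 30 Hz default is an assumption chosen to match the 30 fps cameras.

```python
import time
from typing import Callable, Dict, List, Sequence


def run_control_loop(
    read_observation: Callable[[], Dict[str, object]],
    policy: Callable[[Dict[str, object]], Sequence[float]],
    send_action: Callable[[Sequence[float]], None],
    rate_hz: float = 30.0,
    max_steps: int = 100,
) -> List[float]:
    """Run a fixed-rate observe -> infer -> act loop.

    Returns the compute time of each step in seconds, so callers can check
    whether observation, inference, and publishing fit inside one control period.
    """
    period = 1.0 / rate_hz
    step_times: List[float] = []
    next_tick = time.perf_counter()
    for _ in range(max_steps):
        start = time.perf_counter()
        obs = read_observation()   # hypothetical: wrist/room images plus joint states
        action = policy(obs)       # hypothetical: GR00T N1.5 inference call
        send_action(action)        # hypothetical: publish joint targets over DDS
        step_times.append(time.perf_counter() - start)
        # Wait for the next tick; if the step overran, resynchronize instead of
        # trying to catch up with back-to-back iterations.
        next_tick += period
        delay = next_tick - time.perf_counter()
        if delay > 0:
            time.sleep(delay)
        else:
            next_tick = time.perf_counter()
    return step_times


if __name__ == "__main__":
    sent: List[Sequence[float]] = []
    times = run_control_loop(
        read_observation=lambda: {"joint_positions": [0.0] * 6},
        policy=lambda obs: [0.0] * 6,   # stub policy: hold position
        send_action=sent.append,
        rate_hz=50.0,
        max_steps=10,
    )
    print(f"sent {len(sent)} actions, worst step {max(times) * 1000:.3f} ms")
```

Resynchronizing after an overrun keeps a slow step from triggering a burst of late commands to the arm.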

Notably, more than 93% of the data used to train policies is synthetically generated in simulation, highlighting the strength of simulation in bridging the robotic data gap.

Sim2Real mixed training approach

This workflow combines simulation and real-world data to address a fundamental challenge: training robots in the real world is expensive and limited, while pure simulation often fails to capture real-world complexity. The approach pairs approximately 70 simulation episodes, covering diverse scenarios and environmental variations, with 10 to 20 real-world episodes that anchor the policy in real-world conditions. This mixed training produces a policy that generalizes beyond what either domain could achieve alone.
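
To make the mix concrete, the sketch below pools episodes from both sources into one shuffled training list and reports the simulated share. Only the episode counts come from the text; the Episode type, the mix_episodes helper, and the episode lengths are hypothetical and do not correspond to a LeRobot or Isaac Lab API. The share by episode count need not equal the share by frame count, because episodes can differ in length.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Episode:
    source: str      # "sim" or "real"
    index: int
    num_frames: int


def mix_episodes(
    sim: List[Episode], real: List[Episode], seed: int = 0
) -> Tuple[List[Episode], float, float]:
    """Pool sim and real episodes into one shuffled training list.

    Returns the shuffled list, the simulated share by episode count,
    and the simulated share by frame count.
    """
    pooled = sim + real
    random.Random(seed).shuffle(pooled)  # deterministic shuffle for reproducibility
    sim_episode_share = len(sim) / len(pooled)
    total_frames = sum(ep.num_frames for ep in pooled)
    sim_frame_share = sum(ep.num_frames for ep in sim) / total_frames
    return pooled, sim_episode_share, sim_frame_share


if __name__ == "__main__":
    # Hypothetical episode lengths; only the counts (70 sim, 15 real) follow the text.
    sim = [Episode("sim", i, num_frames=300) for i in range(70)]
    real = [Episode("real", i, num_frames=300) for i in range(15)]
    pooled, by_episode, by_frame = mix_episodes(sim, real)
    print(f"{len(pooled)} episodes, {by_episode:.1%} simulated by episode count, "
          f"{by_frame:.1%} by frame count")
```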

Hardware requirements

The workflow requires:

- GPU: An RT Core-capable architecture (Ampere or newer) with 30 GB or more of VRAM for GR00T N1.5 inference
- SO-ARM101 Follower: 6-DOF high-precision manipulator with dual-camera vision (wrist and room). The SO-ARM101 uses WOWROBO vision components, including a wrist-mounted camera with a 3D-printed adapter
- SO-ARM101 Leader: 6-DOF teleoperation interface for collecting expert demonstrations
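
A quick pre-flight check for the GPU requirement can be sketched as follows. It treats CUDA compute capability 8.0 or higher as a proxy for "Ampere or newer" and uses 30 GB (decimal) as the VRAM floor, both taken from the list above; the helper names are hypothetical. Compute capability alone does not confirm RT cores (the Ampere-based A100, for example, has none), so a pass is necessary rather than sufficient. The device query uses PyTorch only if it is installed.

```python
from typing import Optional, Tuple

MIN_VRAM_GB = 30.0
MIN_COMPUTE_CAPABILITY = (8, 0)  # Ampere GPUs report compute capability 8.x


def meets_gpu_requirements(total_vram_gb: float, compute_capability: Tuple[int, int]) -> bool:
    """Return True if VRAM and architecture generation meet the stated minimums."""
    return total_vram_gb >= MIN_VRAM_GB and tuple(compute_capability) >= MIN_COMPUTE_CAPABILITY


def query_first_gpu() -> Optional[Tuple[str, float, Tuple[int, int]]]:
    """Return (name, VRAM in decimal GB, compute capability) for GPU 0, or None."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(0)
    return props.name, props.total_memory / 1e9, (props.major, props.minor)


if __name__ == "__main__":
    info = query_first_gpu()
    if info is None:
        print("No CUDA device found (or PyTorch is not installed).")
    else:
        name, vram_gb, cc = info
        print(f"{name}: {vram_gb:.1f} GB, compute capability {cc[0]}.{cc[1]}, "
              f"meets minimums: {meets_gpu_requirements(vram_gb, cc)}")
```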

Developers can also run the simulation, training, and deployment workloads, which physical AI development typically spreads across three computers, on a single DGX Spark.

Implementing data collection

For real-world data collection using SO-ARM101 hardware or other versions supported by LeRobot:

    lerobot-record \
      --robot.type=so101_follower \
      --robot.port= \
      --robot.cameras="{wrist: {type: opencv, index_or_path: 0, width: 640, height: 480, fps: 30}, room: {type: opencv, index_or_path: 2, width: 640, height: 480, fps: 30}}" \
      --robot.id=so101_follower_arm \
      --teleop.type=so101_leader \
      --teleop.port= \
      --teleop.id=so101_leader_arm \
      --dataset.repo_id=/surgical_assistance/surgical_assistance \
      --dataset.num_episodes=15 \
      --dataset.single_task="Prepare surgical instruments and hand them over to the surgeon"
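
The camera argument is the part of this command most prone to quoting mistakes. One way to avoid hand-quoting is to build the argument list in Python and pass it to subprocess.run without a shell, as sketched below. The sketch reuses only the flags from the command above; the helper names are hypothetical, and the serial ports and dataset repo ID are left as placeholders for you to fill in.

```python
import shlex
from typing import Dict, List


def camera_spec(cameras: Dict[str, int], width: int = 640, height: int = 480, fps: int = 30) -> str:
    """Render camera settings in the same dict-like syntax as the command above."""
    entries = [
        f"{name}: {{type: opencv, index_or_path: {index}, width: {width}, height: {height}, fps: {fps}}}"
        for name, index in cameras.items()
    ]
    return "{" + ", ".join(entries) + "}"


def build_record_command(robot_port: str, teleop_port: str, repo_id: str,
                         num_episodes: int = 15) -> List[str]:
    """Assemble the lerobot-record argv; run it with subprocess.run(argv, check=True)."""
    cameras = camera_spec({"wrist": 0, "room": 2})  # wrist camera at index 0, room camera at 2
    return [
        "lerobot-record",
        "--robot.type=so101_follower",
        f"--robot.port={robot_port}",
        f"--robot.cameras={cameras}",  # no shell, so no extra quoting is needed
        "--robot.id=so101_follower_arm",
        "--teleop.type=so101_leader",
        f"--teleop.port={teleop_port}",
        "--teleop.id=so101_leader_arm",
        f"--dataset.repo_id={repo_id}",
        f"--dataset.num_episodes={num_episodes}",
        "--dataset.single_task=Prepare surgical instruments and hand them over to the surgeon",
    ]


if __name__ == "__main__":
    # Placeholder values; substitute your own serial ports and dataset repo ID.
    argv = build_record_command("FOLLOWER_PORT", "LEADER_PORT", "YOUR_REPO_ID")
    print(shlex.join(argv))  # shell-safe rendering for inspection
```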

For simulation-based data collection:

    # Keyboard teleoperation
    python -m Simulation.environments.teleoperation_record \
      --enable_cameras \
      --record \
      --dataset_path=/path/to/save/dataset.hdf5 \
      --teleop_device=keyboard

    # SO-ARM101 leader arm teleoperation
    python -m Simulation.environments.teleoperation_record \
      --port= \
      --enable_cameras \
      --record \
      --dataset_path=/path/to/save/dataset.hdf5

Simulation teleoperation

For users without physical SO-ARM101 hardware, the workflow provides keyboard teleoperation with the following controls:

- Joint 1 (Shoulder Pan): Q (+) / U (-)
- Joint 2 (Shoulder Lift): W (+) / I (-)
- Joint 3 (Elbow Flex): E (+) / O (-)
- Joint 4 (Wrist Flex): A (+) / J (-)
- Joint 5 (Wrist Roll): S (+) / K (-)
- Joint 6 (Gripper): D (+) / L (-)
- R key: Reset the recording environment
- N key: Mark the episode as successful
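
These bindings map naturally onto a lookup table. The sketch below turns a single key press into a six-element joint delta, or into an episode event for R and N. The key-to-joint table is transcribed from the list above; the step size, its units, and the function and event names are hypothetical and not taken from the workflow's implementation.

```python
from typing import Dict, List, Optional, Tuple

JOINT_NAMES = ["shoulder_pan", "shoulder_lift", "elbow_flex", "wrist_flex", "wrist_roll", "gripper"]

# key -> (joint index, direction), transcribed from the key bindings above
KEY_BINDINGS: Dict[str, Tuple[int, int]] = {
    "q": (0, +1), "u": (0, -1),
    "w": (1, +1), "i": (1, -1),
    "e": (2, +1), "o": (2, -1),
    "a": (3, +1), "j": (3, -1),
    "s": (4, +1), "k": (4, -1),
    "d": (5, +1), "l": (5, -1),
}
EPISODE_KEYS = {"r": "reset", "n": "mark_success"}  # hypothetical event names


def key_to_command(key: str, step: float = 0.05) -> Tuple[Optional[List[float]], Optional[str]]:
    """Return (joint delta vector, episode event) for one key press.

    Joint keys give a delta of +/- step on a single joint (hypothetical units);
    R and N give an episode event instead; unknown keys give (None, None).
    """
    key = key.lower()
    if key in KEY_BINDINGS:
        joint, direction = KEY_BINDINGS[key]
        delta = [0.0] * len(JOINT_NAMES)
        delta[joint] = direction * step
        return delta, None
    return None, EPISODE_KEYS.get(key)
```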

Model training pipeline

After collecting both simulation and real-world data, convert the simulation data to LeRobot format and combine the datasets for training:

    # Convert the simulation HDF5 dataset to LeRobot format
    python -m training.hdf5_to_lerobot \
      --repo_id=surgical_assistance_dataset \
      --hdf5_path=/path/to/your/sim_dataset.hdf5 \
      --task_description="Autonomous surgical instrument handling and preparation"

    # Fine-tune GR00T N1.5 on the combined dataset
    python -m training.gr00t_n1_5.train \
      --dataset_path /path/to/your/surgical_assistance_dataset \
      --output_dir /path/to/surgical_checkpoints \
      --data_config so100_dualcam
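
If you prefer to drive both steps from one script, a sketch like the following assembles the two commands with exactly the flags shown above and runs them in order, stopping if conversion fails. The helper names are hypothetical, and the sketch assumes that the dataset_path you pass points at the converted dataset; where the converter writes its output depends on the workflow's configuration.

```python
import shlex
import subprocess
import sys
from typing import List


def conversion_argv(hdf5_path: str, repo_id: str, task: str) -> List[str]:
    """Mirror of the hdf5_to_lerobot command above (uses the current interpreter)."""
    return [sys.executable, "-m", "training.hdf5_to_lerobot",
            f"--repo_id={repo_id}", f"--hdf5_path={hdf5_path}", f"--task_description={task}"]


def training_argv(dataset_path: str, output_dir: str, data_config: str = "so100_dualcam") -> List[str]:
    """Mirror of the gr00t_n1_5.train command above."""
    return [sys.executable, "-m", "training.gr00t_n1_5.train",
            "--dataset_path", dataset_path, "--output_dir", output_dir, "--data_config", data_config]


def run_pipeline(hdf5_path: str, dataset_path: str, output_dir: str,
                 dry_run: bool = True) -> List[List[str]]:
    """Convert simulation data, then fine-tune; with dry_run=True the commands are only printed."""
    steps = [
        conversion_argv(hdf5_path, "surgical_assistance_dataset",
                        "Autonomous surgical instrument handling and preparation"),
        training_argv(dataset_path, output_dir),
    ]
    for argv in steps:
        print(shlex.join(argv))
        if not dry_run:
            # check=True raises CalledProcessError, so training never starts after a failed conversion.
            subprocess.run(argv, check=True)
    return steps


if __name__ == "__main__":
    run_pipeline("/path/to/your/sim_dataset.hdf5",
                 "/path/to/your/surgical_assistance_dataset",
                 "/path/to/surgical_checkpoints")
```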

The trained model interprets natural language commands such as “Prepare the scalpel for the surgeon” or “Hand me the forceps” and executes the corresponding robot motions. With LeRobot’s latest release (0.4.0), you can also fine-tune GR00T N1.5 natively in LeRobot.

End-to-end Sim collection, training, and evaluation pipeline

Simulations are most powerful when they are part of a collect → train → evaluate → deploy loop.
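
The loop can be sketched as a driver that only promotes a policy to hardware once its simulated success rate clears a threshold. This is a minimal sketch under stated assumptions: the four stage callables and the 0.8 threshold are hypothetical placeholders, not part of Isaac for Healthcare.

```python
from typing import Callable, List, Optional


def development_loop(
    collect: Callable[[int], List[str]],
    train: Callable[[List[str]], str],
    evaluate: Callable[[str], float],
    deploy: Callable[[str], None],
    success_threshold: float = 0.8,
    max_rounds: int = 3,
) -> Optional[str]:
    """Iterate collect -> train -> evaluate and deploy the first checkpoint that passes.

    Returns the deployed checkpoint, or None if no round met the threshold.
    """
    dataset: List[str] = []
    for round_idx in range(max_rounds):
        dataset += collect(round_idx)        # new episodes gathered this round
        checkpoint = train(dataset)          # fine-tune on everything collected so far
        success_rate = evaluate(checkpoint)  # e.g. fraction of successful sim rollouts
        print(f"round {round_idx}: {len(dataset)} episodes, success {success_rate:.2f}")
        if success_rate >= success_threshold:
            deploy(checkpoint)
            return checkpoint
    return None


if __name__ == "__main__":
    made_up_rates = iter([0.55, 0.72, 0.86])  # made-up evaluation results
    development_loop(
        collect=lambda r: [f"episode_{r}_{i}" for i in range(10)],
        train=lambda data: f"checkpoint_{len(data)}",
        evaluate=lambda ckpt: next(made_up_rates),
        deploy=lambda ckpt: print(f"deploying {ckpt}"),
    )
```

Gating deployment on an evaluation metric keeps hardware time for checkpoints that have already proven themselves in simulation.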

In v0.3, Isaac Lab supports this full pipeline:

Generating synthetic data in simulation

- Teleoperate robots with a keyboard or a hardware controller
- Capture multi-camera observations, robot states, and actions
- Create diverse datasets, including edge cases that would be impossible to collect safely in real-world environments

Policy training and evaluation

- Tight integration with Isaac Lab’s RL framework for PPO training
- Parallel environments (thousands of simulations running simultaneously)
- Built-in trajectory analysis and success metrics
- Statistical validation across varied scenarios
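
Statistical validation can start with reporting each scenario's success rate alongside a confidence interval, so that a policy evaluated on 20 rollouts is not judged as confidently as one evaluated on 2,000. The sketch below uses the Wilson score interval; it is one reasonable choice, not a description of Isaac Lab's built-in metrics, and the scenario names and counts are made up.

```python
import math
from typing import Dict, Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial success rate (z=1.96 gives ~95%)."""
    if trials == 0:
        return 0.0, 1.0  # no data: the rate could be anything
    p = successes / trials
    denom = 1.0 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials))
    return max(0.0, center - half), min(1.0, center + half)


def summarize(results: Dict[str, Tuple[int, int]]) -> Dict[str, Tuple[float, float, float]]:
    """Map scenario -> (success rate, lower bound, upper bound)."""
    summary = {}
    for scenario, (successes, trials) in results.items():
        low, high = wilson_interval(successes, trials)
        summary[scenario] = (successes / trials if trials else 0.0, low, high)
    return summary


if __name__ == "__main__":
    # Hypothetical rollout counts: (successes, trials) per scenario.
    results = {"scalpel_handover": (18, 20), "forceps_handover": (1700, 2000)}
    for name, (rate, low, high) in summarize(results).items():
        print(f"{name}: {rate:.0%} (95% CI {low:.2f} to {high:.2f})")
```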

Convert the model to TensorRT

- Automatic optimization for production deployment
- Support for dynamic shapes and multi-camera inference
- Benchmarking tools to verify real-time performance
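
Verifying real-time performance comes down to measuring inference latency after a warm-up and comparing a high percentile, not just the mean, against the control period. The sketch below times any callable; it stands in for the workflow's benchmarking tools rather than reproducing them, and the default budget of one 30 fps frame (about 33 ms) is an assumption.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], object], warmup: int = 5, iters: int = 50) -> Dict[str, float]:
    """Time repeated calls to infer() and return latency statistics in milliseconds.

    If infer() launches asynchronous GPU work, it must block until results are
    ready (for example, by copying outputs to the host), or timings will be too low.
    """
    for _ in range(warmup):  # let lazy initialization and engine setup settle
        infer()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p99_index = min(len(samples) - 1, int(round(0.99 * (len(samples) - 1))))
    return {
        "mean_ms": statistics.mean(samples),
        "p50_ms": statistics.median(samples),
        "p99_ms": samples[p99_index],
        "max_ms": samples[-1],
    }


def meets_budget(stats: Dict[str, float], budget_ms: float = 1000.0 / 30) -> bool:
    """Check that tail latency fits inside one control period."""
    return stats["p99_ms"] <= budget_ms


if __name__ == "__main__":
    stats = benchmark(lambda: sum(i * i for i in range(10_000)))  # stand-in workload
    print({k: round(v, 3) for k, v in stats.items()}, "within budget:", meets_budget(stats))
```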

This reduces the time from experimentation to deployment and makes sim2real a practical part of everyday development.

Getting started

The Isaac for Healthcare SO-ARM starter workflow is available now. To get started:

1. Clone the repository: git clone https://github.com/isaac-for-healthcare/i4h-workflows.git
2. Choose a workflow: Start with the SO-ARM starter workflow for surgical assistance, or explore the other workflows.
3. Run the setup: Each workflow includes an automated setup script (for example, tools/env_setup_so_arm_starter.sh).

Resources