Digital assistants (DAs) will become an integral part of our daily lives, offering convenience and efficiency in managing tasks and accessing information. However, to function effectively, these assistants often collect, process, and store a wide range of personally identifiable information (PII).

To understand the privacy implications and enhance data protection measures, it is crucial to recognize the various types of PII typically collected by digital assistants. These categories could include:

- Names: DAs require the names of users to personalize interactions and provide tailored experiences.
- Dates of birth/birthplace: This information is used for identity verification, customization, and security purposes.
- Current and previous addresses: Address data is used to offer location-based services such as weather updates, navigation, and local recommendations.
- Email addresses: Email addresses are essential for account creation, communication, and authentication purposes.
- Phone numbers: Phone numbers serve as unique identifiers and are used for communication, verification, and recovery processes.
- Account credentials: DAs may store credentials for emails, social media accounts, messaging apps, and banking services to facilitate seamless integration and enhanced functionality.
- Communication/Chat history: This data is used to improve interaction quality, learn user preferences, and provide continuity in conversations.
- Social maps: A social map outlines a user’s relationships and interactions, providing insights into social connections and communication patterns.
- Social Security Numbers/Tax IDs: Some services, such as an accounting DA, may request this information for identification and verification purposes.
- Name and address of doctors: Health-related services may require this information to manage appointments, prescriptions, and health records.
- Health information/Health records: DAs handle health information to provide reminders, track fitness goals, and offer medical advice.
- Insurance information: Insurance details are stored to facilitate claims processing and policy management.
- Travel-related information and accounts: This data is used to streamline booking processes and enhance travel experiences.

The collection and management of these diverse types of PII by DAs raise significant privacy concerns. To address these concerns, it is essential to ensure robust data protection measures, transparency in data handling practices, and compliance with relevant privacy regulations.

As DAs become more sophisticated and more commonplace, they will increasingly be targeted by attackers exploiting API abuse vulnerabilities. This threat involves using a DA as an intermediary to access APIs and process sensitive data. To mitigate this risk, DAs must implement strong authentication and authorization mechanisms, such as OAuth, to manage user consent and data sharing permissions. Users must explicitly authorize which service providers their DA can interact with and what information can be shared. These requirements mirror current API security challenges, such as domain takeovers and unauthorized access.
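The consent model described above can be illustrated with a minimal sketch. It is not an OAuth implementation: `ConsentGrant`, `is_request_allowed`, the provider names, and the data-category labels are all hypothetical, chosen only to show a deny-by-default check of both the provider and the data categories before a DA shares anything.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class ConsentGrant:
    """Hypothetical record of what a user has authorized for one provider."""
    provider: str
    allowed_categories: Set[str] = field(default_factory=set)


def is_request_allowed(
    grants: Dict[str, ConsentGrant], provider: str, requested: Set[str]
) -> bool:
    """Return True only if the user authorized this provider for every
    requested data category. Unknown providers are denied by default."""
    grant = grants.get(provider)
    if grant is None:
        return False
    # Every requested category must be covered by the user's grant.
    return requested <= grant.allowed_categories


# Illustrative grants; the provider name and categories are made up.
grants = {
    "pharmacy.example": ConsentGrant("pharmacy.example", {"name", "health"}),
}

print(is_request_allowed(grants, "pharmacy.example", {"name"}))
print(is_request_allowed(grants, "pharmacy.example", {"name", "payment"}))
print(is_request_allowed(grants, "unknown.example", {"name"}))
```

In this sketch, a request is refused as soon as it names a provider the user never approved or asks for a single category outside the grant, which is the behavior the paragraph above calls for.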

DAs will interact with other digital services, potentially introducing security risks. A compromised DA can request sensitive information under false pretenses, such as credit card numbers or social security numbers. This highlights the need for robust security protocols to verify the authenticity of DAs that are accessing personal data. Only trusted and vetted DAs should be granted access to sensitive information, minimizing the risk of malicious activity within the DA network.

As DAs become more integrated into our daily lives, they face growing security threats that take advantage of their unique capabilities and trusted position within user interactions. The potential threats include the creation of malicious “custom skills” (i.e., any additional integration that can be added to a DA, such as home automation controls and external services integration), manipulating DA interactions to deliver misleading information, and exploiting APIs to alter the data that DAs rely on. Furthermore, the rise of immersive environments like the Metaverse introduces new vulnerabilities in which avatars can mimic trusted personas to deceive users.

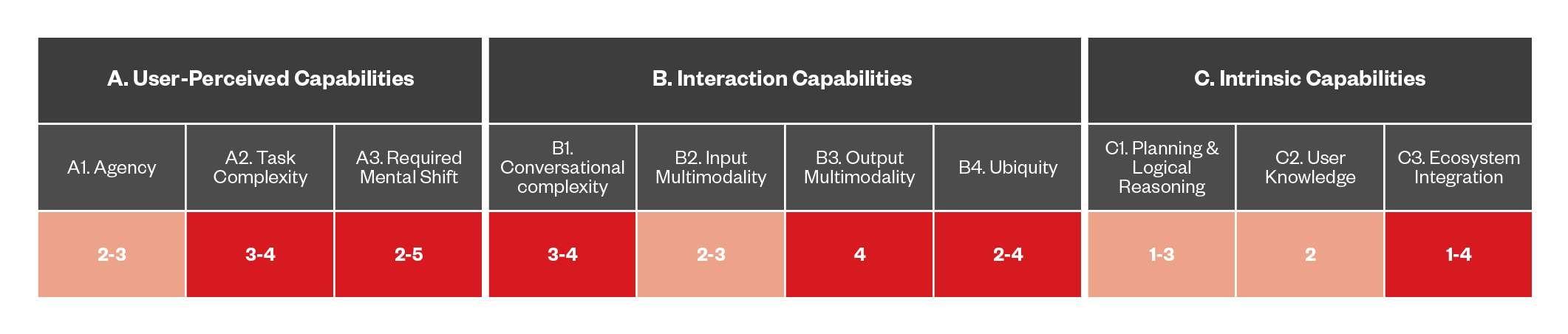

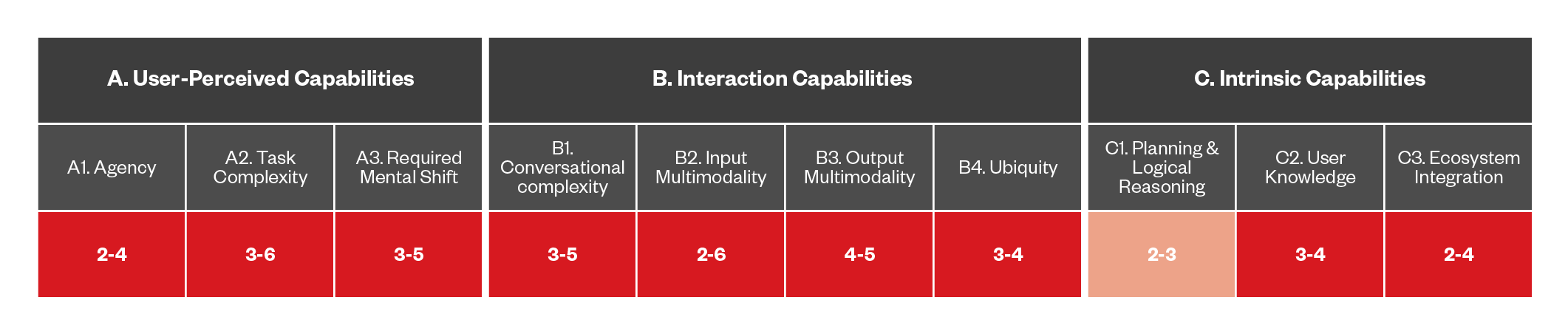

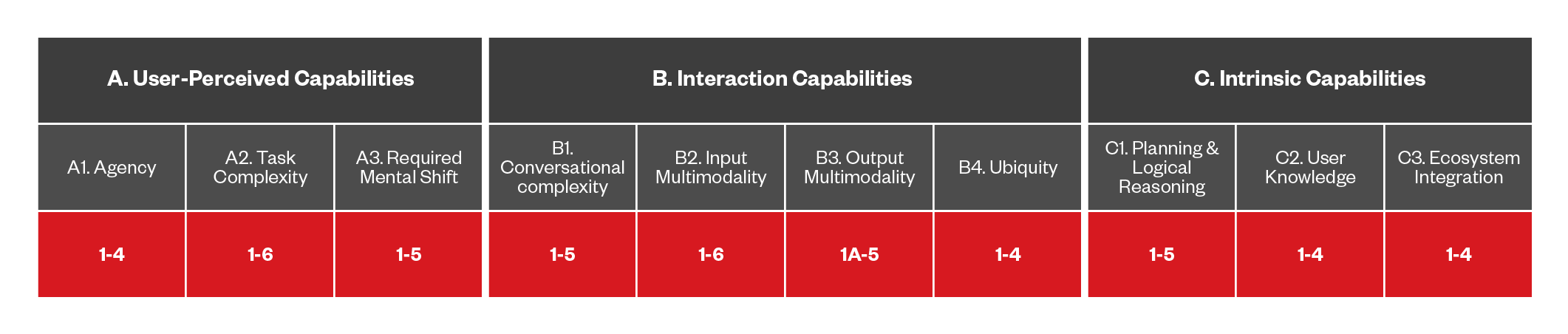

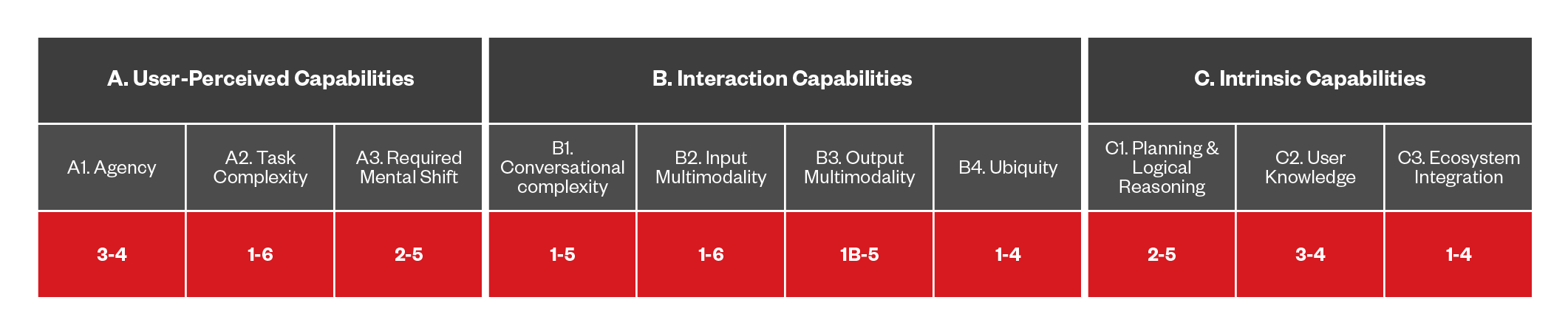

To address these threats, we grouped them into threat categories, which are summarized in the non-exhaustive list below. Each threat is assigned a level, or a range of levels, from 0 to 6, based on the levels that we previously covered. The range shows the levels at which the threat exists in each category; in a few cases, a threat exists at only one level rather than across a range. Threats whose upper bound falls between 1 and 3 are denoted in orange, while those whose upper bound falls between 4 and 6 are denoted in red.
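The color rule can be written down as a small function. This is only a sketch of the rule as stated in the text; the function name `threat_color` is hypothetical, and the result for an upper bound of 0 is an assumption, since the text does not assign a color to that case.

```python
from typing import Tuple


def threat_color(level_range: Tuple[int, int]) -> str:
    """Map a (lowest, highest) level pair on the 0-6 scale to a chart color."""
    low, high = level_range
    if not (0 <= low <= high <= 6):
        raise ValueError("levels must satisfy 0 <= low <= high <= 6")
    if high >= 4:
        return "red"      # upper bound of 4 to 6
    if high >= 1:
        return "orange"   # upper bound of 1 to 3
    # Assumption: the text does not say how an upper bound of 0 is shown.
    return "unspecified"


print(threat_color((1, 3)))  # orange
print(threat_color((2, 6)))  # red
print(threat_color((4, 4)))  # red: a threat that exists at a single level
```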

Skill squatting

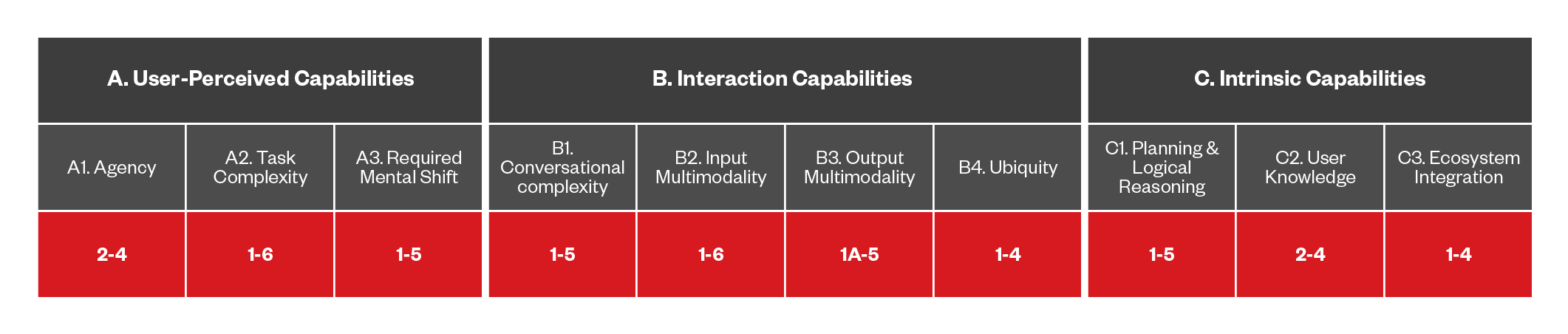

Figure 6. The capabilities of DA-based threats that use skill squatting

Skill squatting takes advantage of the phonetic similarities between skill names or how users might mispronounce or slightly alter a skill’s name. This attack involves creating malicious skills with names that are very similar to legitimate skills. When a user attempts to invoke a legitimate skill but mispronounces its name, the digital assistant might inadvertently invoke the malicious skill instead. As an example, a skill named Howtel instead of Hotel would demonstrate a skill squatting threat.
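One way a skill store could flag such names is to compare phonetic keys instead of spellings. The sketch below uses a simplified Soundex-style key; it is an illustration under that assumption, not how any particular DA platform reviews skills, and `phonetic_key` and `find_squatting_candidates` are hypothetical names.

```python
from typing import List

# Soundex-style consonant groups: letters that sound alike share a digit.
_CODES = {}
for _letters, _digit in [("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                         ("l", "4"), ("mn", "5"), ("r", "6")]:
    for _ch in _letters:
        _CODES[_ch] = _digit


def phonetic_key(name: str) -> str:
    """Return a four-character Soundex-style key for a skill name."""
    letters = [c for c in name.lower() if c.isalpha()]
    if not letters:
        return ""
    key = letters[0].upper()
    prev = _CODES.get(letters[0], "")
    for ch in letters[1:]:
        code = _CODES.get(ch, "")
        # Append a digit only when it differs from the previous sound.
        if code and code != prev:
            key += code
        # 'h' and 'w' do not separate repeated sounds; vowels do.
        if ch not in "hw":
            prev = code
    return (key + "000")[:4]


def find_squatting_candidates(new_name: str, registered: List[str]) -> List[str]:
    """List registered skills that sound like new_name but are spelled differently."""
    key = phonetic_key(new_name)
    return [r for r in registered
            if r.lower() != new_name.lower() and phonetic_key(r) == key]


print(phonetic_key("Hotel"), phonetic_key("Howtel"))  # H340 H340
print(find_squatting_candidates("Howtel", ["Hotel", "Weather", "Timer"]))  # ['Hotel']
```

A key collision is only a signal for manual review. Soundex-style keys are coarse, so legitimate skills can collide and some squatting names will slip through.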

Trojan skill

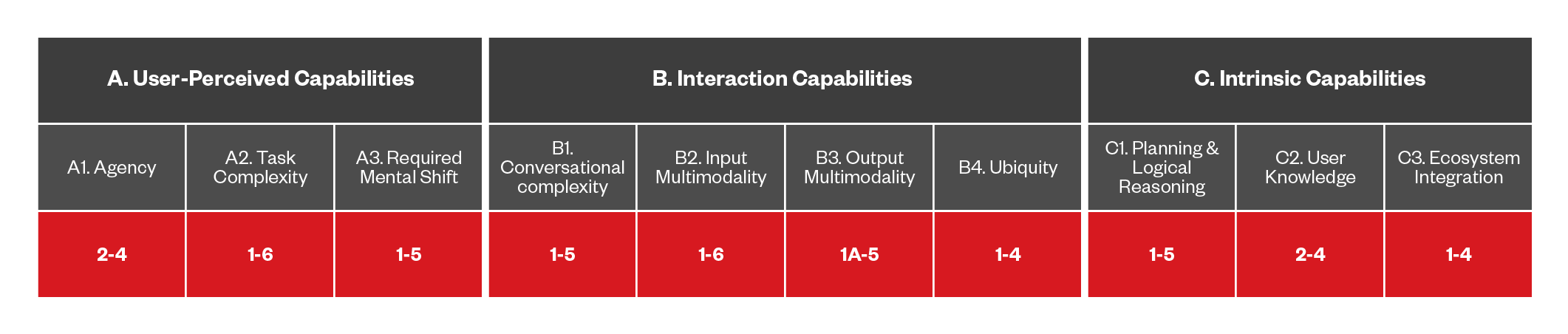

Figure 7. The capabilities of DA-based threats that use trojan skills

Trojan skills are a type of malicious software that targets voice-activated DAs. These apps appear legitimate and useful at first glance, often mimicking popular functions or offering helpful features. However, once installed, they secretly perform harmful actions that put the user’s privacy and security at risk. For example, they might steal sensitive information such as passwords or personal data, record conversations, or execute unauthorized commands without the user’s knowledge or consent. Trojan skills take advantage of the user’s trust in DA platforms and exploit vulnerabilities for installation. This type of attack emphasizes the importance of thorough review processes, ongoing monitoring, and user education to protect against hidden threats from malicious apps in increasingly connected digital environments.

Malicious Metaverse avatar DA phishing

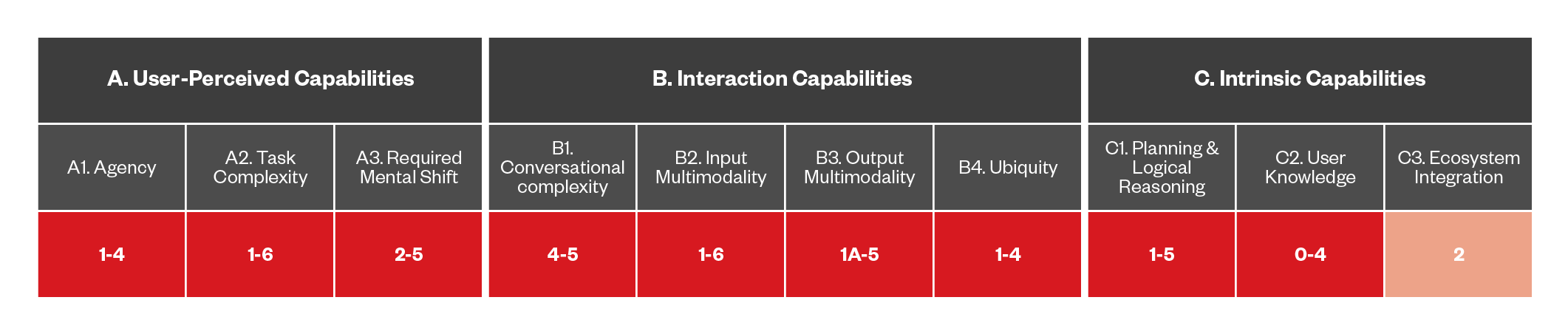

Figure 8. The capabilities of DA-based threats that use Metaverse avatar DA phishing

Malicious Metaverse avatars appear as legitimate DAs. These fake DAs mimic the appearance, behavior, and speech patterns of authentic assistants using advanced AI techniques. They are designed to deceive users into trusting them, which can lead users to share sensitive information such as passwords, personal data, or financial details.

Additionally, these malicious avatars might manipulate users into performing actions that compromise their security or privacy. The immersive nature of the Metaverse makes it challenging for users to recognize malicious intent. Therefore, robust authentication mechanisms, constant monitoring, and user awareness are essential in preventing these sophisticated avatar phishing attacks and ensuring the integrity and security of interactions within the Metaverse (as we discussed in a previous publication).

Digital assistant social engineering (DASE)

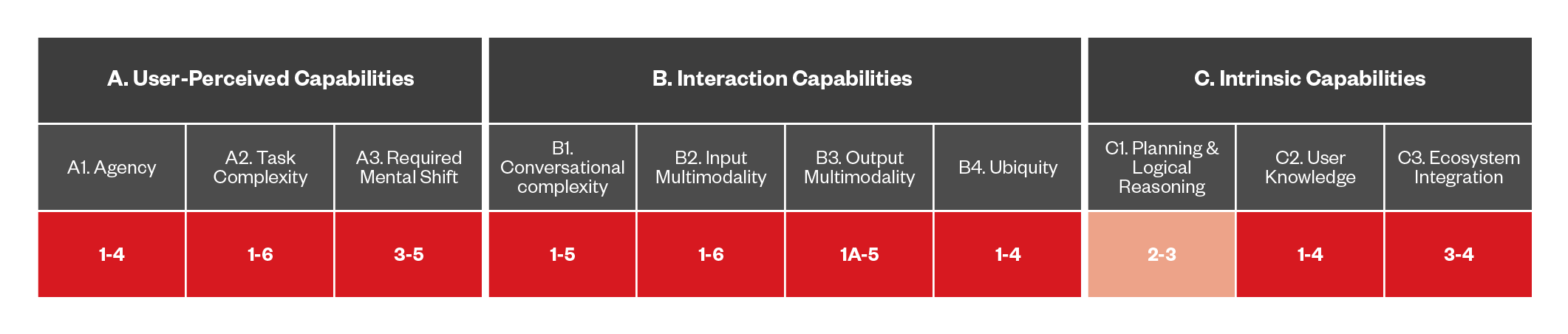

Figure 9. The capabilities of DA-based threats that use DASE

Social engineering can be performed by proxy through DAs. Attackers can manipulate the output generated by DAs to indirectly deceive users into taking harmful actions. For instance, a malicious actor might instruct the DA to relay a message like, “Amazon has requested your permission for this,” knowing that users are likely to trust this communication due to their confidence in both their DA and the brand.

Although this type of attack can be executed with any form of DA, the likelihood of the threat increases as users develop greater trust in these systems, leading them to accept information and requests without skepticism. As DAs take on more sophisticated tasks, such as managing user finances, the severity of the threat rises, given that the potential consequences of a successful attack grow significantly.

To mitigate these risks, it is essential to enhance DA security, implement rigorous verification processes for communications, educate users about the dangers of uncritical trust in AI-driven interactions, and regularly update security protocols to address emerging vulnerabilities.
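As one illustration of verifying communications, a DA could refuse to relay a message attributed to a brand unless it carries a valid message authentication code. The sketch below assumes the DA and the provider already share a secret key, which the text does not specify; `sign_message` and `relay_if_authentic` are hypothetical names. It uses Python's standard `hmac` module.

```python
import hashlib
import hmac
from typing import Optional


def sign_message(secret: bytes, message: str) -> str:
    """Provider side: compute an HMAC-SHA256 tag over the message."""
    return hmac.new(secret, message.encode("utf-8"), hashlib.sha256).hexdigest()


def relay_if_authentic(secret: bytes, message: str, signature: str) -> Optional[str]:
    """DA side: return the message only if its tag verifies, else None."""
    expected = sign_message(secret, message)
    # compare_digest avoids leaking information through comparison timing.
    if hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8")):
        return message
    return None


# Illustrative values only; a real key would never be hard-coded.
secret = b"demo-shared-secret"
message = "The provider has requested your permission for this."
tag = sign_message(secret, message)

print(relay_if_authentic(secret, message, tag) is not None)   # True
print(relay_if_authentic(secret, message + "!", tag) is None)  # True: tampered text
```

A check like this only proves that the message came from a holder of the key and was not altered. It does not help if the provider itself or the key is compromised, so it complements rather than replaces user education.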

The future of malvertising

Figure 10. The capabilities of DA-based threats that use malvertising

Malvertising is set to evolve, particularly within the framework of distributed advertising. Unlike traditional malvertising, which exploits vulnerabilities in ad networks to serve malicious content, DA-based malvertising takes advantage of the personalized nature of modern recommendation engines. By subtly corrupting the knowledge base of a user’s social network, attackers can manipulate the DA’s recommendation algorithms to steer users towards harmful content.

This tactic is particularly insidious because it capitalizes on the trust users place in their social and community-driven recommendations, which typically see less scrutiny from content protection systems focused primarily on individual user behavior. The success of DA-based malvertising hinges on a deep understanding of community-level user interactions, making it a sophisticated and challenging threat to counter.

The future of spear phishing

Figure 11. The capabilities of DA-based threats that use spear phishing

This threat involves compromised DAs tricking users into providing information, such as financial data, by using PII they already have access to. For example, the DA might use information on previous purchases or places visited by the individual to create a plausible scenario (e.g., “There is a problem with a previous purchase, please provide financial information to fix it”). This approach can work when the DA has some level of agency but doesn’t have the authority to proceed with financial operations on its own.

Semantic Search Engine Optimization (SEO) abuse

Figure 12. The capabilities of DA-based threats that abuse SEO

Semantic SEO abuse represents a growing threat in manipulating DAs as the world moves towards real-time search and retrieval-augmented generation (RAG) models. Unlike traditional keyword-based indexing, semantic indexing focuses on understanding the meaning and context of information. Malicious actors can exploit this by injecting content that appears semantically relevant to what a DA is searching for but actually misleads the user. This type of abuse aims to manipulate the DA’s results to present content that seems like the correct answer, thereby enticing users to visit a URL that may be harmful or deceptive.

As digital assistants become more reliant on semantic understanding to deliver information, attackers can craft pages that superficially align with user queries, but instead redirect users to undesirable outcomes. This manipulation not only undermines user trust in DAs but also poses significant security risks.

To mitigate these threats, it is essential to develop advanced filtering and verification mechanisms that can detect and prevent semantic manipulation, ensuring that DAs deliver accurate and reliable information. As semantic indexing becomes more prevalent, the challenge will be to maintain the integrity of search results in the face of increasingly sophisticated attempts to game the system.
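The text does not prescribe a specific filtering mechanism. As a deliberately simple illustration, the sketch below keeps only retrieved results whose URL belongs to a domain on an allowlist before they reach the DA's answer. The allowlist approach, the result dictionary shape, and the names `is_trusted` and `filter_results` are all assumptions made for this example.

```python
from typing import Dict, Iterable, List
from urllib.parse import urlsplit


def is_trusted(url: str, trusted_domains: Iterable[str]) -> bool:
    """True if the URL's host is a trusted domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in trusted_domains)


def filter_results(results: List[Dict[str, str]],
                   trusted_domains: Iterable[str]) -> List[Dict[str, str]]:
    """Drop retrieved results that point outside the trusted domains."""
    domains = [d.lower() for d in trusted_domains]
    return [r for r in results if is_trusted(r["url"], domains)]


# Illustrative retrieval results; all URLs are made up.
results = [
    {"title": "Reset your password", "url": "https://support.example.com/reset"},
    {"title": "Reset your password fast", "url": "https://example.com.evil.test/reset"},
    {"title": "Account help", "url": "https://example.com/help"},
]

for r in filter_results(results, ["example.com"]):
    print(r["url"])
```

Note that the second URL is rejected even though it contains the trusted name, because the check compares the actual host rather than searching the string. An allowlist does not detect semantic manipulation on a trusted site; it only narrows where manipulated content can come from.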

Agent abuse

Figure 13. The capabilities of DA-based threats that abuse agents

Agent abuse in DAs occurs when attackers exploit DA interaction APIs rather than directly manipulating the DA itself. This type of abuse is similar to targeted advertisements on social network platforms like Facebook, where malicious actors promote counterfeit products or scam services.

By manipulating the APIs that DAs rely on for information retrieval and decision-making, attackers can feed deceptive data into the system. If a DA recommends a product or service based on manipulated API responses, users are more likely to trust and act on this recommendation, believing their DA to be a reliable intermediary. This exploitation takes advantage of the inherent trust users place in their DAs, making them susceptible to scams and low-quality products.

As DAs evolve to become more integrated into daily decision-making, it becomes even more important to ensure the integrity of the APIs they interact with. Safeguards such as robust validation mechanisms, enhanced transparency of data sources, and regular audits can help mitigate the risk of agent abuse, preserving the trust relationship between users and their DAs.

Malicious external DAs

Figure 14. The capabilities of DA-based threats that use malicious external DAs

DAs often serve as intermediaries between users and APIs, possessing delegated authority to call and process data. This setup introduces significant security risks, as the DA, rather than the user, determines which APIs to interact with and how to manage the information being exchanged. OAuth or similar authorization mechanisms in a DA context would require a clear delineation of which providers the DA can communicate with, and the specific data authorized for sharing, such as personal information or health data.

This opens the door to a class of attacks in which malicious external DAs abuse vulnerabilities similar to those seen in today’s API security breaches, such as domain takeovers. These attacks can be particularly dangerous since users typically focus on the provider rather than the underlying API interactions. The potential for DAs to accept natural language queries further complicates the risk landscape, as it creates new avenues for API misuse and manipulation.

As a result, a comprehensive understanding of existing API security frameworks, and their adaptation to the DA environment, is crucial for mitigating these emerging threats. Note that task complexity in the chart for this section uses a 1-6 ranking, because we consider level 1 to be disinformation, while levels 2 through 6 are those at which people will take action. For Ecosystem Integration, level 3 indicates that users installed the DA themselves, and anything above that would involve the DA installing another DA on its own.