![]()

MusicGen is a powerful music generation model that takes text prompts and optional melodies and outputs music. This blog post shows you how to generate music with MusicGen using inference endpoints.

The inference endpoint allows you to create custom inference functions called custom handlers. These are especially useful when the model is not supported out-of-the-box by the transformer’s high-level abstraction pipeline.

Transformer pipelines provide a powerful abstraction for performing inference on transformer-based models. The inference endpoint leverages the pipeline API to easily deploy models with just a few clicks. However, you can also use inference endpoints to deploy non-pipelined or non-transformer models. This is accomplished using custom inference functions called custom handlers.

Let’s demonstrate this process using MusicGen as an example. To implement and deploy a custom handler function for MusicGen, you need to do the following:

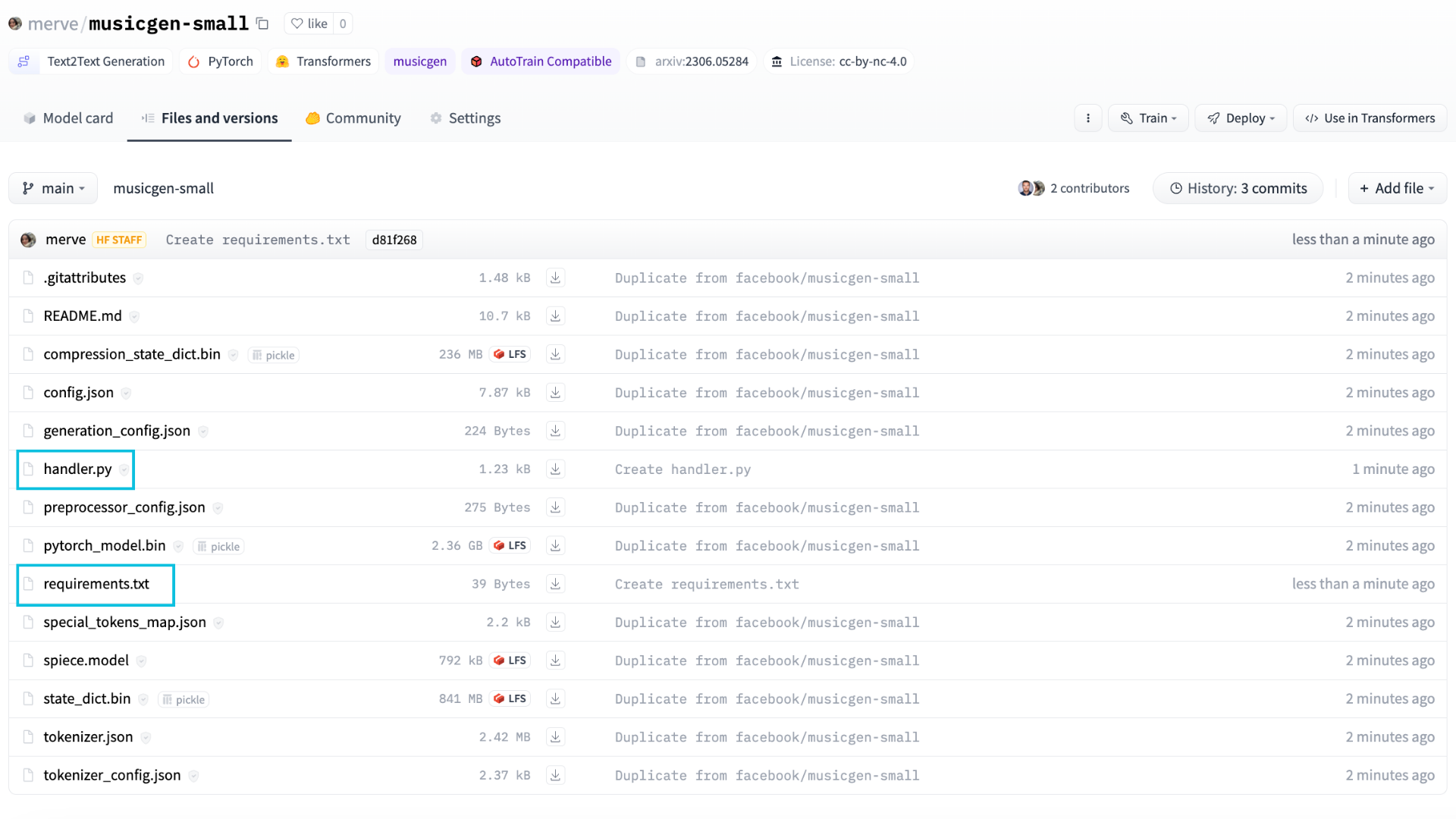

Clone the provided MusicGen repository, write a custom handler in handler.py, write dependencies in requirements.txt and add them to the cloned repository, and create an inference endpoint for that repository.

Or simply use the final result to deploy a custom MusicGen model repository. I just followed the above steps here 🙂

Let’s go!

First, use Repository Duplicator to clone the facebook/musicgen-large repository to your profile.

Next, add handler.py and requirements.txt to your cloned repository. First, let’s take a look at how to perform inference with MusicGen.

from transformer import AutoProcessor, MusicgenForConditionalGeneration processor = AutoProcessor.from_pretrained(“facebook/musicgen-large”) model = MusicgenForConditionalGeneration.from_pretrained(“facebook/musicgen-large”) input = processor( text=(“80’s pop track with bass drums and synths”), padding=truthreturn_tensors=“pt”) audio_values = model.generate(**inputs, do_sample=truthguidance_scale=3max_new_tokens=256)

Hear what it sounds like:

Your browser does not support the audio element.

Optionally, you can also use audio snippets to adjust the output. That is, you can also generate completion snippets that combine text-generated audio with input audio.

from transformer import Autoprocessor, MusicgenForConditionalGeneration

from dataset import load_dataset processor = AutoProcessor.from_pretrained(“facebook/musicgen-large”) model = MusicgenForConditionalGeneration.from_pretrained(“facebook/musicgen-large”) dataset =load_dataset(“Sanchit Gandhi/Gutsan”split =“train”streaming =truth) sample = Next(Iter(dataset))(“audio”) sample (“array”) = sample(“array”)(: Ren(sample(“array”)) // 2) input = processor( audio=sample(“array”), sampling rate = samples (“Sampling rate”), text=(“An 80’s blues track with a groovy saxophone”), padding=truthreturn_tensors=“pt”) audio_values = model.generate(**inputs, do_sample=truthguidance_scale=3max_new_tokens=256)

Let’s listen:

Your browser does not support the audio element.

In both cases, the model.generate method generates audio and follows the same principles as text generation. For more information, see How to generate blog posts.

Are you okay! With the above basic usage guide, let’s introduce MusicGen to have fun and profit.

First, define your custom handler in handler.py. You can use the inference endpoint template to override the __init__ and __call__ methods in your custom inference code. __init__ initializes the model and processor, and __call__ retrieves the data and returns the generated music. The modified EndpointHandler class can be found below. 👇

from typing import dictionary, list, Any

from transformer import Autoprocessor, MusicgenForConditionalGeneration

import torch

class endpoint handler:

surely __Initialization__(self, path =“”): self.processor = AutoProcessor.from_pretrained(path) self.model = MusicgenForConditionalGeneration.from_pretrained(path, torch_dtype=torch.float16).to(“Cuda”)

surely __phone__(yourself, data: dictionary(str, Any)) -> dictionary(str, str):

“”

argument:

Data (:dict:):

A payload containing a text prompt and generation parameters.

“”

input = data.pop(“input”data) parameter = data.pop(“parameter”, none) inputs = self.processor( text=(inputs), padding=truthreturn_tensors=“pt”,). To (“Cuda”)

if parameters teeth do not have none:

and Torch.Autocast(“Cuda”): output = self.model.generate(**input, **parameter)

Other than that:

and Torch.Autocast(“Cuda”): output = self.model.generate(**input,) prediction = output(0).cpu().numpy().tolist()

return ({“Generated Audio”: prediction})

To keep things simple, this example only generates audio from text and does not orchestrate it with melody. Next, create a requirements.txt file that contains all the dependencies needed to run your inference code.

Transformers==4.31.0 Acceleration>=0.20.3

Simply upload these two files to your repository to serve your model.

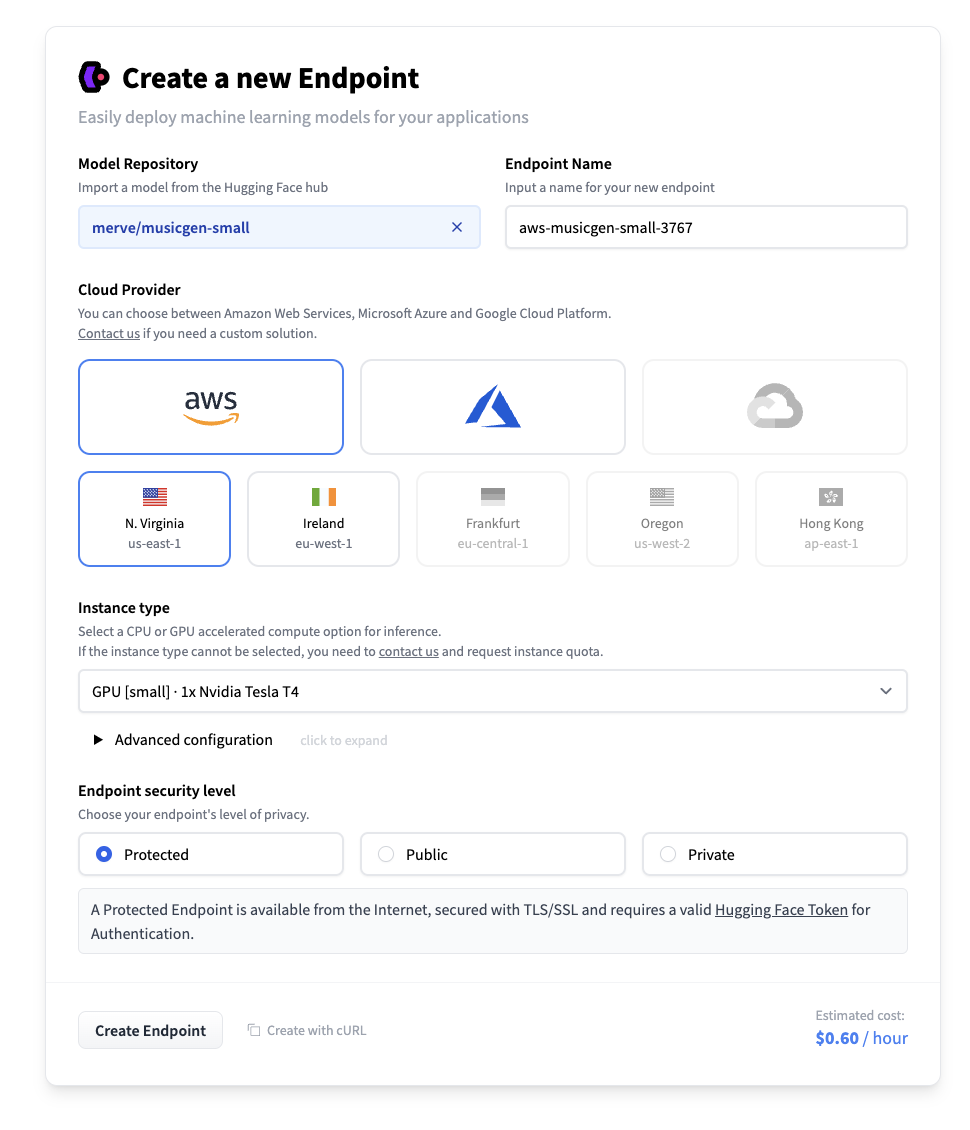

Now you can create an inference endpoint. Go to the (Inference Endpoint) page and click (Deploy your first model). In the Model Repository field, enter the identifier of the cloned repository. Next, select the required hardware and create an endpoint. Instances with at least 16 GB of RAM should work with musicgen-large.

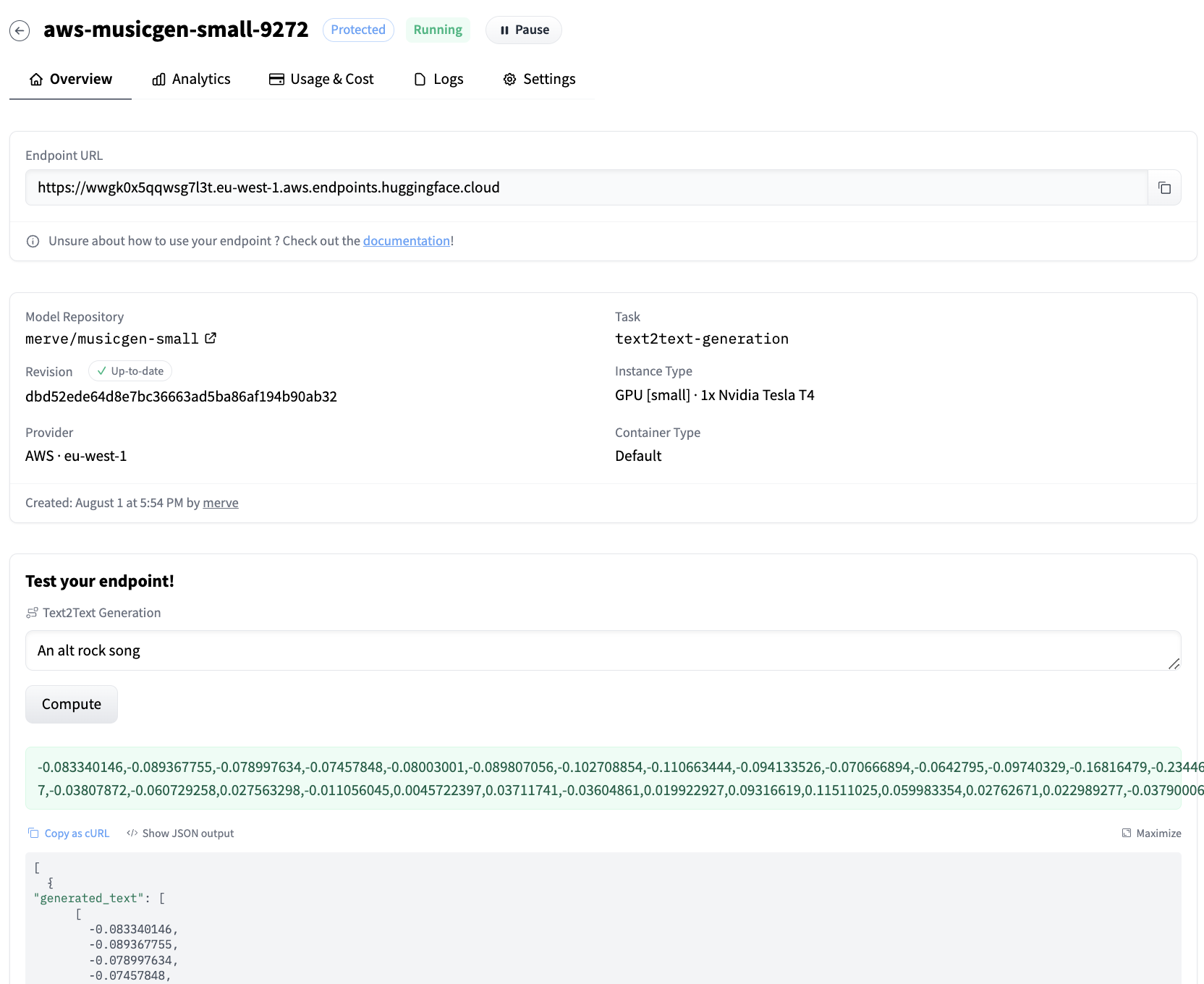

After you create an endpoint, it will be automatically started and ready to receive requests.

You can query the endpoint using the snippet below.

curl URL_OF_ENDPOINT \ -X POST \ -d ‘{“input”:”cheerful and lively happy folk song”}’ \ -H “Authorization: {YOUR_TOKEN_HERE}” \ -H “Content type: application/json”

The following waveform sequence is displayed as output.

({“Generated Audio”:((-0.024490159,-0.03154691,-0.0079551935,-0.003828604, …))})

Here’s what it sounds like:

Your browser does not support the audio element.

You can also access the endpoint using the InferenceClient class in the Hug Face Hub Python library.

from hug face hub import InferenceClient client = InferenceClient(model = URL_OF_ENDPOINT) response = client.post(json={“input”:“Alternative rock song”}) output = evaluation(response)(0)(“Generated Audio”)

The generated sequence can be converted to audio if desired. You can use scipy in Python to write to .wav files.

import Saipee

import lump as np scipy.io.wavfile.write(“musicgen_out.wav”rate =32000data=np.array(output(0)))

And voila!

Try the demo below to try out the endpoint.

conclusion

In this blog post, you learned how to deploy MusicGen using an inference endpoint with a custom inference handler. The same technique can be used for other models in the hub that don’t have associated pipelines. All you need to do is override the Endpoint Handler class in handler.py and add requirements.txt to reflect your project dependencies.

read more