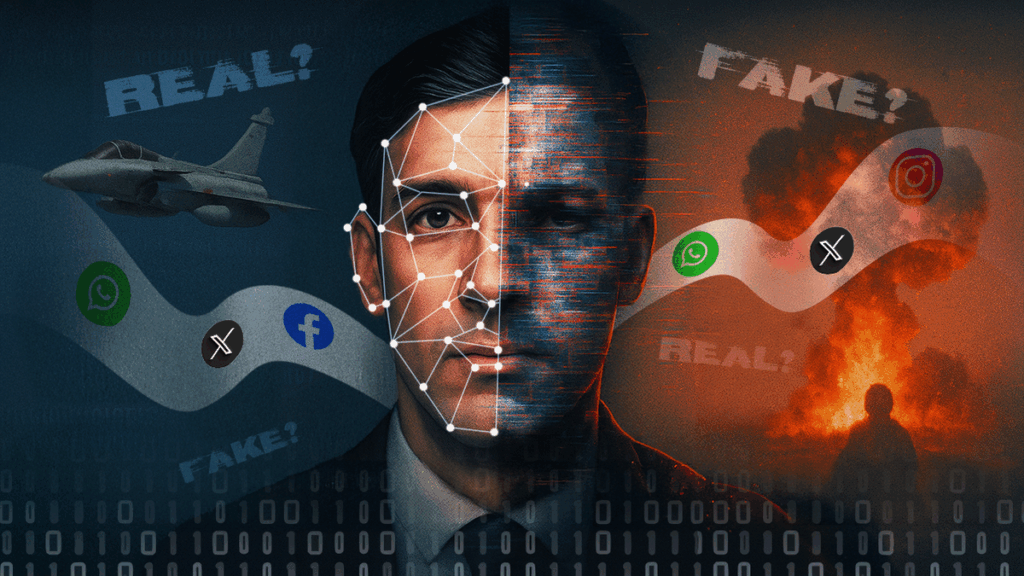

In the age of AI and real-time virality, the old saying "seeing is believing" no longer holds true. In fact, what you see may be a synthetic illusion produced by lines of code designed to manipulate, mislead, and mobilize. The internet is now at the heart of an information war, both between nations and between truth and technology. From viral lies to global crises, AI is playing a surprising role in spreading misinformation.

Recent Misinformation Storms in India and Pakistan

Recently, tensions between India and Pakistan escalated after military exchanges along the border were reported. But beyond missiles and media briefings, a different kind of weapon was deployed, one that thrives in the shadow of virality. Below are some notable cases that the Entrackr team has closely analyzed.

Example 1: Phantom Fighter

Videos circulated across social media platforms claiming that Pakistan had admitted to losing two JF-17 fighter jets in the conflict. The footage featured what appeared to be a Pakistani Army spokesperson issuing a statement. However, on close analysis, the fact-checker Boom Live revealed it to be a deepfake, with audio that was out of sync with the speaker's lip movements. Separately, fact-checker Mohammed Zubair, co-founder of Alt News, flagged the clip as a deepfake in a tweet, pointing out lip movements that did not match the manipulated audio. His timely intervention helped expose the video as fabricated.

Example 2: Suspected anti-India comments by a Chinese official

Another widely circulated clip purported to show a Chinese official saying, "China is more committed to Pakistan than India." Picked up from Reddit and amplified on social media, the video raised red flags. Its authenticity is questionable, and AI experts suspect a manipulated audio overlay, part of a new trend in which real footage is used as the base for inserting synthetic audio.


Even after the original claims were debunked, the misinformation in many cases continued to spread widely, leaving lasting impressions and deepening mistrust between India and Pakistan.

In an age where misinformation spreads six times faster than the truth, the human brain is already at a disadvantage, one source told Entrackr. Cognitive biases, particularly the tendency to believe content that aligns with our emotions and existing beliefs, leave us extremely vulnerable to deepfakes and AI-generated propaganda. Even when a false claim is debunked, the damage is rarely undone, a phenomenon known as the continued influence effect. In this ultra-viral ecosystem, the internet rewards outrage over accuracy and draws attention away from slow, fact-based journalism. Against this background, government regulation of AI and synthetic media is no longer optional but a national security imperative, essential to protecting civil stability.

Media TRP Trap: When Verification Loses to Virality

The most troubling aspect was that these videos did not go viral only in Telegram groups or on fringe Twitter handles. From television news to digital outlets, mainstream media houses in India were caught up in a race for exclusivity and engagement, airing or quoting this content without proper verification.

Channels that once took pride in their reliability became tools for misinformation, airing video game footage repurposed as "war zone visuals" and AI-generated soldier testimonies. "The media was the first victim, and then became the biggest amplifier of synthetic lies," says a senior digital forensics analyst at a fact-checking NGO.

Deepfakes and synthetic media are not inherently evil. They power entertainment, dubbing, accessibility tools, and virtual influencers. But in the wrong hands, they become digital weapons that can destroy reputations, incite communal violence, cause diplomatic breakdowns, and create alternative realities that overpower fact-based journalism.

The collapse of trust in the Internet age

The result? A deficit of trust in the very media meant to inform us. If a respected anchor can be deepfaked saying something inflammatory, or war updates can be faked with cinematic realism, what remains real? Misinformation on the internet has entered a new phase in which artificial intelligence not only reports the news but rewrites it. If the media, governments, and citizens don't act now, the line between truth and fiction will be permanently blurred.

According to senior executives at key fact-checking websites, we are now dealing with misinformation that not only misleads but also fabricates people and moments. Media organizations need internal AI-detection workflows before publishing sensitive footage, and TRPs should never come at the cost of truth. Exclusivity without verification is complicity. Of course, we understand that this is easier said than done.

The need for government regulation, law and accountability

The rise of synthetic media calls for urgent and coordinated responses from governments, technology platforms, and media organizations. India needs to move quickly to criminalize malicious deepfakes and introduce robust laws that require explicit disclosure of AI-generated content. Without legal deterrence, digital impersonation will continue to flourish unchecked. "What we are witnessing with deepfakes during the India-Pakistan conflict is not only a security concern but a legal vacuum. India still lacks clear laws on synthetic media. Without accountability, real harm goes unchecked. This is not a future threat."

As the legal vacuum around synthetic media is unlikely to be addressed anytime soon, social media platforms must take greater responsibility by actively flagging, demoting, and removing fake content, especially during times of geopolitical tension. Collaboration with independent fact-checkers and the development of real-time detection systems should not be optional add-ons but core components of platform accountability in the AI era.