This blog post provides a tutorial on how to use new data augmentation techniques for document images developed in collaboration with Altumentations AI.

motivation

Vision Language Models (VLM) has a huge range of applications, but often you need to fine-tune it to specific use cases, especially for document images, i.e. datasets containing high images with high text content. In these cases, it is important that the text and images interact with each other at all stages of model training, and this interaction is guaranteed by applying enhancements to both modalities. Essentially, I want the model to learn to read properly. This is difficult in the most common cases where data is missing.

Therefore, the need for effective data augmentation techniques for document images became apparent when addressing the challenges of fine-tuning models with limited data sets. A common concern is that typical image transformations such as background color changes, blurry, or changes can have a negative impact on the accuracy of text extraction.

We recognized the need for data augmentation techniques to maintain textual integrity while augmenting the dataset. Such data augmentation can help to generate new documents or modify existing documents while maintaining the quality of the text.

introduction

To address this need, we present a new data augmentation pipeline developed in collaboration with Altumentations AI. This pipeline processes both images and text within images, providing a comprehensive solution for document images. This class of data augmentation is multimodal as it simultaneously changes both image content and text annotations.

As explained in a previous blog post, our goal is to test the hypothesis that integration into both text and images during pre-deletion of VLMS is effective. Detailed parameters and use case illustrations are available in the AL documentation. AI allows for dynamic design of these augmentations and integration with other types of augmentations.

method

To extend the document image, start by randomly selecting rows in the document. HyperParameter fraction_range controls the number of fractions in the bounding box that you want to change.

Next, we apply one of several text augmentation methods to the corresponding line of text commonly used in text generation tasks. These methods include random insertion, deletion, swap, and swaping stopword exchange.

After modifying the text, use the original bounding box size as a proxy for the font size of the new text to blacken the portion of the image where the text was inserted and painted. The font size can be specified using the parameter font_size_fraction_range. This determines the range to select the font size as part of the height of the bounding box. Note that you can get the modified text and the corresponding bounding box to use for training. This process results in a dataset with semantically similar textual content and visually distorted images.

Key features of increasing text timerage

The library can be used for two main purposes:

Insert text into images: This feature allows you to overlay text into document images, effectively generating composite data. You can create a variety of training samples by using random images as backgrounds and rendering completely new text. A similar technique called Synthdog was introduced in the Document Understanding Transformer without OCR.

Insert expanded text into the image: This includes augmenting the following text:

Random Delete: Randomly removes words from the text. Random Swapping: Exchange words in text. Insert Stop Word: Inserts a common stop word into the text.

These extensions can be combined with other image transformations from Albertation to change images and text simultaneously. You can also retrieve extended text.

Note: The initial version of the data augmentation pipeline presented in this repository contains synonym replacements. This version removed this version because overhead caused considerable time.

install

! Pip Install – U Pillow! Pip install albumentations!

Import Albument As a

Import CV2

from matplotlib Import pyplot As plt

Import JSON

Import nltk nltk.download(“Stop word”))

from nltk.corpus Import Stop Word

Visualization

def Visualize(image): plt.figure(figsize =(20, 15)) plt.axis(‘off’)plt.imshow (image)

Load data

Note that IDL and PDF datasets are available for this type of augmentation. Provides the bounding box for the row you want to change. This tutorial focuses on sample IDL datasets.

bgr_image = cv2.imread(“Example/original/fkhy0236.tif”) Image = cv2.cvtcolor (bgr_image, cv2.color_bgr2rgb)

and open(“Example/original/fkhy0236.json”)) As F: Label=json.load(f)font_path= “/usr/share/fonts/truetype/liberation/liberationerif-regual.ttf”

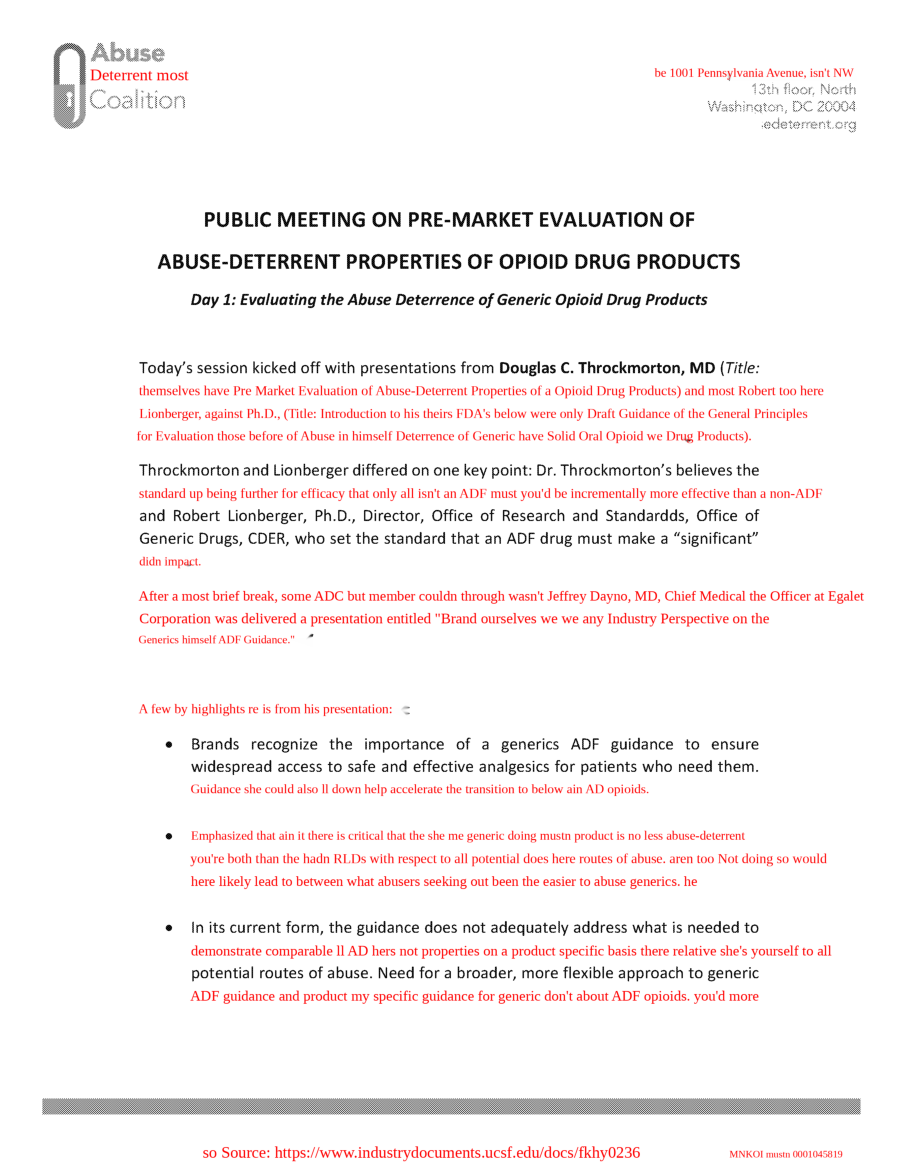

Visualization (image)

The bounding box input format is normalized Pascal VOC, so the data must be preprocessed correctly. So, construct the metadata as follows:

Page = Label (“page”) ()0))

def prepare_metadata(page: Dict,image_height: int,image_width: int) -> list:Metadata = ()

for Text, box in Zip(page(‘Sentence’),page(“bbox”)): left, top, width_norm, height_norm = box metadata.append({{

“bbox”: (left, top, left + width_norm, top + height_norm),

“Sentence”: Text })

return Metadata image_height, image_width = image.shape (:2)metadata = prepare_metadata(page, image_height, image_width)

Random swap

transform = a.compose((a.textimage(font_path = font_path, p =)1extension = (“swap”), clear_bg =truth,font_color = ‘red’fraction_range = (0.5,0.8), font_size_fraction_range =(0.8, 0.9))) transformed = transform(image=image,textimage_metadata=metadata)Visualize(transformed(transformed)“image”)))

Random deletion

transform = a.compose((a.textimage(font_path = font_path, p =)1extension = (“delete”), clear_bg =truth,font_color = ‘red’fraction_range = (0.5,0.8), font_size_fraction_range =(0.8, 0.9))) transformed = transform(image=image,textimage_metadata=metadata)Visualize(transformed(transformed)‘image’)))

Random insertion

Random insertion inserts a random word or phrase into the text. In this case, use stop words in languages that are often ignored or excluded during natural language processing (NLP) tasks, as they carry meaningless information compared to other words. Examples of stop words include “is”, “the”, “in”, “”, “, “, and more.

stops = stopwords.words (‘English’) transform = a.compose((a.textimage(font_path = font_path, p =)1extension = (“insert”), stopwords = stops, clear_bg =truth,font_color = ‘red’fraction_range = (0.5,0.8), font_size_fraction_range =(0.8, 0.9))) transformed = transform(image=image,textimage_metadata=metadata)Visualize(transformed(transformed)‘image’)))

Can I combine it with other conversions?

Let’s use A.Compose to define a complex transformation pipeline. This includes text inserts that contain the specified font properties and stopwords, plankian jitter, and affine transformations. First, A.TextImage will insert text into the image using the specified font properties. The fraction and size of the text to be inserted are also specified. Next, using A.Planckianjitter will change the color balance of the image. Finally, use A.Affine to apply the affine transformation. This includes scaling, rotating and translating images.

transform_complex = a.compose((a.textimage(font_path = font_path, p =)1extension = (“insert”), stopwords = stops, clear_bg =truth,font_color = ‘red’fraction_range = (0.5,0.8), font_size_fraction_range =(0.8, 0.9), A.PlanckianJitter (p =1), A.Affine (P =1)) transformed = transform_complex(image = image, textimage_metadata = metadata) Visualization (transform (transform)“image”)))

Extract information to the bounding box index with text modified, and the corresponding transformed text data executes the next cell: This data can be used effectively to train the model to recognize and process changes in the text of the image.

conversion(‘overlay_data’) ({‘bbox_coords’: (375, 1149, 2174, 1196), ‘text’: “lionberger, Ph.D., (title: title: your own yourself Guidance of the general principles”, ‘original_text’: “lionberger, ph.dd., (gentoring into drad in ofer drad in to in to ffda and ‘bbox_index’: 12, ‘font_color’: ‘red’}, {‘bbox_coords’: (373, 1677, 2174, 1724), ‘text’: “off off off need now necs bes nuts becned nuctn jeffrey that dayno, dayno, md, egalet Dayno, MD, Chief Medical Officer of Egalet’,’ Bbox_index’:19,’ font_color’:’red’}, {‘ bbox_coords’: (525, 2109, 2172, 2156), ‘text’:’,’,’, ‘bbox_index’:23, ‘font_color’:’red’}

Synthetic data generation

This augmentation method can be extended to generating composite data, as it allows for the rendering of text in a background or template.

Template = cv2.imread(‘template.png’)image_template = cv2.cvtcolor(template, cv2.color_bgr2rgb) transform = a.compose((a.textimage(font_path = font_path, p =)1,clear_bg =truth,font_color = ‘red’font_size_fraction_range =(0.5, 0.7)))) Metadata = ({

“bbox”🙁0.1, 0.4, 0.5, 0.48),,

“Sentence”: “Some smart texts go here.”,},{

“bbox”🙁0.1, 0.5, 0.5, 0.58),,

“Sentence”: “I hope you find it useful.”}) transformed = transform(image = image_template, textimage_metadata = metadata) visualize(transformed(transformed)‘image’)))

Conclusion

In collaboration with Albumentations AI, we introduced Textimage Augmentation, a multimodal technology that changes text images along with text. By combining text enhancements such as random inserts, deletions, swaps, and stopword replacements with image modifications, this pipeline allows for the generation of diverse training samples.

For detailed parameters and use case illustrations, see the albums AI documentation. We hope these enhancements will help to enhance your document image processing workflow.

reference

@inproceedings {kim20222ocr, title = {ocr-free document unrestrence transformer}, author = {kim, geewook and hong, teakgyu and yim, moonbin and nam, jeongyeon and park, jinyoung and yim, jinyeong and hwan booktitle = {European Conference on Computer Vision}, pages = {498–517}, year = {2022}, organization = {springer}}