We are continually extending NVIDIA Cosmos™ world foundation models (WFMs) to tackle some of the toughest problems in robotics, autonomous vehicle development, and industrial vision AI.

To further support this effort, we present Cosmos Policy, our latest research on advancing robot control and planning with Cosmos WFMs.

TL;DR

Cosmos Policy: A new state-of-the-art robot control policy that post-trains the Cosmos Predict-2 world foundation model for manipulation tasks. It encodes robot actions and future states directly into the model, achieving SOTA performance on the LIBERO and RoboCasa benchmarks.

📦 Model on Hugging Face | 🔧 Code on GitHub

Cosmos Cookoff: An open hackathon where developers can get hands-on with Cosmos world foundation models and push the limits of physical AI.

🍳 Join the Cosmos Cookoff

Overview: What is Cosmos Policy?

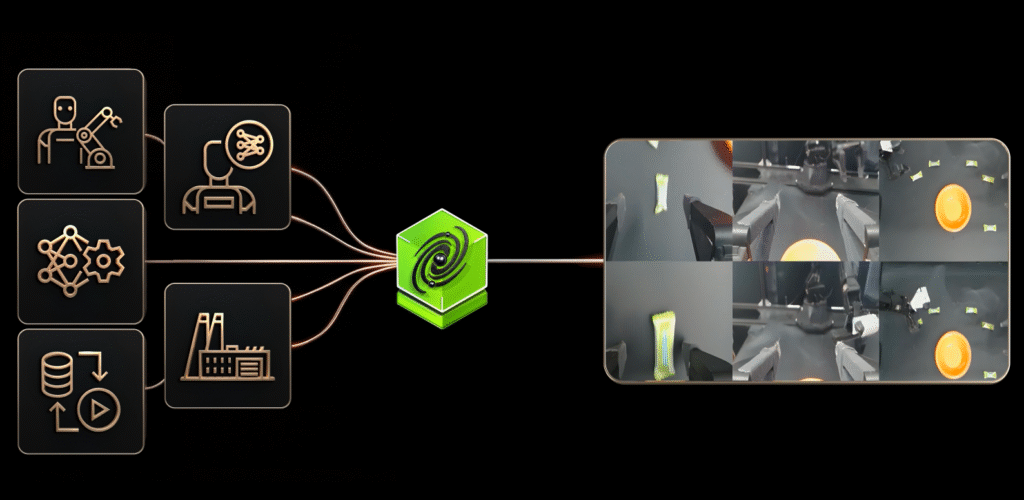

Cosmos Policy is a robot control and planning policy obtained by fine-tuning Cosmos Predict, a world foundation model trained to predict future frames. Instead of introducing new architectural components or separate action modules, Cosmos Policy directly adapts the pretrained model through a single stage of post-training on robot demonstration data.

A policy is the decision-making brain of a system: it maps observations (such as camera images) to physical actions (such as moving a robot arm) to complete a task.

What’s the difference?

What’s innovative about Cosmos Policy is how it represents this data. Instead of building separate neural networks for robot perception and control, it treats the robot’s actions, physical states, and success scores like frames of a video.

All of these are encoded as additional latent frames and learned with the same diffusion process used for video generation, allowing the model to inherit a pre-learned understanding of physics, gravity, and how scenes evolve over time.

Latent refers to the compressed mathematical language that the model uses to understand the data internally (rather than the raw pixels).

As a result, a single model can:

- Predict action chunks to guide robot movements through visuomotor control (i.e., hand-eye coordination)
- Predict future robot observations for world modeling
- Predict expected returns (i.e., value functions) for planning

All three capabilities are learned jointly within one unified model.
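To make the idea of "additional latent frames" more concrete, here is a minimal sketch of one way a low-dimensional vector (a flattened action chunk, a robot state, or a scalar value) could be written into a frame-sized slot and read back out. This is an illustration under stated assumptions, not the Cosmos Policy implementation: the frame size, the tiling scheme, the 8-step 7-DoF action chunk, and the helper names `pack_into_latent_frame` and `unpack_from_latent_frame` are all hypothetical.

```python
from typing import Dict, List

# Hypothetical latent frame shape: 16 channels on a 4 x 4 grid, stored flat.
FRAME_SIZE = 16 * 4 * 4


def pack_into_latent_frame(values: List[float], frame_size: int = FRAME_SIZE) -> List[float]:
    """Tile a flat vector until it fills one frame-sized slot."""
    if not values or len(values) > frame_size:
        raise ValueError("vector must be non-empty and fit inside one frame")
    return [values[i % len(values)] for i in range(frame_size)]


def unpack_from_latent_frame(frame: List[float], length: int) -> List[float]:
    """Recover the vector by averaging all tiled copies of each entry."""
    recovered = []
    for i in range(length):
        copies = frame[i::length]  # every position that holds entry i
        recovered.append(sum(copies) / len(copies))
    return recovered


# Hypothetical robot data: an 8-step chunk of 7-DoF actions, a 7-D state, a scalar value.
action_chunk = [[0.01 * (t + d) for d in range(7)] for t in range(8)]
flat_actions = [a for step in action_chunk for a in step]
future_state = [0.5] * 7
value = [0.9]

# Each quantity becomes one extra "frame" alongside the image latents.
extra_frames: Dict[str, List[float]] = {
    "actions": pack_into_latent_frame(flat_actions),
    "future_state": pack_into_latent_frame(future_state),
    "value": pack_into_latent_frame(value),
}

recovered_actions = unpack_from_latent_frame(extra_frames["actions"], len(flat_actions))
```

Because every quantity ends up with the same shape as an image latent frame, a video diffusion model could in principle denoise all of them in one sequence without any new output heads, which is the property the post describes.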

Cosmos Policy can be deployed as a direct policy, where only actions are generated during inference, or as a planning policy, where multiple candidate actions are evaluated by predicting the resulting future states and values.
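The two deployment modes can be sketched as a best-of-N selection loop. The snippet below is a simplified illustration, assuming a model that returns an action chunk, a predicted future state, and a predicted value in one call. The `Prediction` container, `plan_best_of_n`, and the one-dimensional toy model are hypothetical stand-ins, not the Cosmos Policy API.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Prediction:
    actions: List[List[float]]   # action chunk: one action vector per timestep
    future_state: List[float]    # predicted state after executing the chunk
    value: float                 # predicted expected return


def plan_best_of_n(
    sample: Callable[[Sequence[float]], Prediction],
    observation: Sequence[float],
    num_candidates: int,
) -> Prediction:
    """Sample several candidates and keep the one with the highest predicted value.

    With num_candidates=1 this reduces to direct policy execution.
    """
    if num_candidates < 1:
        raise ValueError("need at least one candidate")
    candidates = [sample(observation) for _ in range(num_candidates)]
    return max(candidates, key=lambda p: p.value)


def make_toy_model(goal: float, seed: int = 0) -> Callable[[Sequence[float]], Prediction]:
    """Toy stand-in for the learned model: a 1-D robot that wants to reach `goal`."""
    rng = random.Random(seed)

    def sample(observation: Sequence[float]) -> Prediction:
        action = rng.uniform(-1.0, 1.0)
        future = observation[0] + action
        # Higher value means the predicted future state is closer to the goal.
        return Prediction([[action]], [future], -abs(goal - future))

    return sample


observation = [0.0]
direct = plan_best_of_n(make_toy_model(goal=0.7, seed=0), observation, num_candidates=1)
planned = plan_best_of_n(make_toy_model(goal=0.7, seed=0), observation, num_candidates=16)
```

In this toy setup, the planned result is never worse than the direct one because the first candidate is identical in both runs. The trade-off is that planning costs one model call per candidate at inference time.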

Base model: Cosmos Predict and why it matters

Recent research in robot manipulation increasingly relies on large-scale pretrained backbones to improve generalization and data efficiency. Most of these approaches build on vision-language models (VLMs) that are trained on large-scale image and text datasets and then fine-tuned to predict robot actions.

These models learn to understand videos and describe what they see, but they do not learn how to physically perform actions. A VLM can suggest high-level actions like turning left or picking up a purple cup, but it does not know how to execute them precisely.

In contrast, a WFM is trained to predict how a scene changes over time and to generate the temporal dynamics of a video. These capabilities are directly relevant to robot control, where actions must account for how the environment and the robot’s own state change over time.

Cosmos Predict is trained for physical AI with a diffusion objective over continuous spatiotemporal latents, allowing it to model complex, high-dimensional, multimodal distributions over long time horizons.

This design makes Cosmos Predict a natural basis for visuomotor control.

The model has already learned state transitions through future-frame prediction. Its diffusion formulation supports multimodal outputs, which is important for tasks with multiple valid action sequences. Its transformer-based denoiser scales to long sequences and multiple modalities.

Cosmos Policy is built by post-training Cosmos Predict2 and uses the model’s native diffusion process to generate robot actions alongside future observations and value estimates. This lets the policy fully inherit the pretrained model’s understanding of temporal structure and physical interactions while remaining easy to train and deploy.

⚡IMPORTANT UPDATE: The latest Cosmos Predict 2.5 is now available. Please check the model card.

Summary of results

Cosmos Policy is evaluated on simulation benchmarks and real-world robot manipulation tasks, and compared against diffusion-based policies trained from scratch, video-based robot policies, and fine-tuned vision-language-action (VLA) models.

In simulation, it is evaluated on two standard benchmarks for multi-task and long-horizon robot manipulation: LIBERO and RoboCasa.

On LIBERO, Cosmos Policy consistently outperforms previous diffusion policies and VLA-based approaches across the task suites, especially on tasks that require precise temporal coordination and multi-step execution.

| Model | Spatial SR (%) | Object SR (%) | Goal SR (%) | Long SR (%) | Average SR (%) |
|---|---|---|---|---|---|
| Diffusion Policy | 78.3 | 92.5 | 68.3 | 50.5 | 72.4 |
| Dita | 97.4 | 94.8 | 93.2 | 83.6 | 92.3 |
| π0 | 96.8 | 98.8 | 95.8 | 85.2 | 94.2 |
| UVA | - | - | - | 90.0 | - |
| UniVLA | 96.5 | 96.8 | 95.6 | 92.0 | 95.2 |
| π0.5 | 98.8 | 98.2 | 98.0 | 92.4 | 96.9 |
| Video Policy | - | - | - | 94.0 | - |
| OpenVLA-OFT | 97.6 | 98.4 | 97.9 | 94.5 | 97.1 |
| CogVLA | 98.6 | 98.8 | 96.6 | 95.4 | 97.4 |
| Cosmos Policy (ours) | 98.1 | 100.0 | 98.2 | 97.6 | 98.5 |
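As a quick sanity check on how to read the table, the sketch below recomputes the average column, assuming it is the unweighted mean of the four suite success rates. That assumption is ours and is not stated in the post, though the reported numbers are consistent with it to within rounding. Only rows with all four suite scores are included.

```python
# Suite success rates (Spatial, Object, third suite, fourth suite) and the
# reported average, copied from the LIBERO table above.
libero_rows = {
    "Diffusion Policy": ([78.3, 92.5, 68.3, 50.5], 72.4),
    "Dita": ([97.4, 94.8, 93.2, 83.6], 92.3),
    "π0": ([96.8, 98.8, 95.8, 85.2], 94.2),
    "UniVLA": ([96.5, 96.8, 95.6, 92.0], 95.2),
    "π0.5": ([98.8, 98.2, 98.0, 92.4], 96.9),
    "OpenVLA-OFT": ([97.6, 98.4, 97.9, 94.5], 97.1),
    "CogVLA": ([98.6, 98.8, 96.6, 95.4], 97.4),
    "Cosmos Policy": ([98.1, 100.0, 98.2, 97.6], 98.5),
}

# Assumption: the average is the plain mean over the four suites.
recomputed = {name: sum(scores) / len(scores) for name, (scores, _) in libero_rows.items()}

# Largest gap between the recomputed mean and the reported one-decimal average.
max_gap = max(abs(recomputed[name] - reported) for name, (_, reported) in libero_rows.items())

best_model = max(recomputed, key=recomputed.get)
```

Under this assumption, every recomputed mean lands within rounding distance of the reported value, and Cosmos Policy has the highest mean of the fully reported rows.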

On RoboCasa, Cosmos Policy achieves a higher average success rate than the listed baselines while using only 50 training demonstrations per task, demonstrating improved generalization across a variety of household manipulation scenarios.

| Model | Training demos per task | Average SR (%) |
|---|---|---|
| GR00T-N1 | 300 | 49.6 |
| UVA | 50 | 50.0 |
| DP-VLA | 3000 | 57.3 |
| GR00T-N1 + DreamGen | 300 (+10000 synthetic) | 57.6 |
| GR00T-N1 + DUST | 300 | 58.5 |
| UWM | 1000 | 60.8 |
| π0 | 300 | 62.5 |
| GR00T-N1.5 | 300 | 64.1 |
| Video Policy | 300 | 66.0 |
| FLARE | 300 | 66.4 |
| GR00T-N1.5 + HAMLET | 300 | 66.4 |
| Cosmos Policy (ours) | 50 | 67.1 |

For both benchmarks, initializing from Cosmos Predict significantly improves performance over training an equivalent architecture without video pretraining.

Planning vs. direct policy execution

When deployed directly as a policy, Cosmos Policy already matches or exceeds state-of-the-art performance for most tasks.

When enhanced with model-based planning, it achieves a 12.5% higher task completion rate on average across two challenging real-world manipulation tasks.

Real-world manipulation

Cosmos Policy is also evaluated on real-world bimanual manipulation tasks using the ALOHA robot platform.

The policy successfully performs long-horizon manipulation tasks directly from visual observations.

Learn more about the architecture and results here.

What’s next: Cosmos Cookoff

Cosmos Policy represents an early step in adapting world foundation models to robot control and planning. We are actively collaborating with early adopters to advance this research for the robotics community.

At the same time, developers can continue to draw on practical Cosmos Cookbook recipes that show how to adopt and build on Cosmos Policy.

To support hands-on experimentation with Cosmos WFMs, we are announcing Cosmos Cookoff, an open hackathon focused on building applications and workflows using Cosmos models and Cookbook recipes. The latest Cookoff is now live and invites physical AI developers across robotics, autonomous vehicles, and video analytics to explore, prototype quickly, and learn from experts.

🍳 Join the Cosmos Cookoff

- 📅 Dates: January 29th – February 26th
- 👥 Team format: Teams of up to 4 members
- 🏆 Prizes: $5,000 in prizes, NVIDIA DGX Spark™, NVIDIA GeForce RTX™ 5090 GPUs, and more!
- 🧑‍⚖️ Judges: Projects will be judged by experts from Datature, Hugging Face, Nebius, Nexar, and NVIDIA, who bring deep experience in open models, cloud and compute, and real-world edge and vision AI deployments.

📣 Let’s get started