Today, leveraging AI-powered features, especially large-scale language models (LLM), is becoming more and more common in a variety of tasks, including text generation, classification, inter-image and inter-image conversion.

Developers are increasingly aware of the potential benefits of these applications, particularly in enhancing core tasks such as scriptwriting, web development, and currently interfacing with data. Historically, creating insightful SQL queries for data analysis has been primarily the domain of data analysts, SQL developers, data engineers, or experts in related fields, all navigated the nuances of SQL dialect syntax. However, the landscape has evolved with the advent of AI-powered solutions. These advanced models provide new pathways that can interact with data, streamline processes, and uncover insights that increase efficiency and depth.

What if we could unleash engaging insights from our datasets without diving deep into coding? To gather valuable information, you must create a specialized selection statement taking into account the columns to display, source tables, filtering criteria for selected rows, aggregation methods, and sorting preferences. This traditional approach involves a set of commands.

But what if you’re not a veteran developer and still want to harness the power of your data? In such cases, it is necessary to seek assistance from SQL specialists, highlighting the gap between accessibility and ease of use.

This is where groundbreaking advances in AI and LLM technology intervene to fill the division. Imagine a conversation with your data in a simple way. Imagine stating your information needs in plain language and converting requests to a model into a query.

In recent months, great advances have been made in this field. MotherDuck and Numbers Station have announced DuckDB-NSQL-7B, the DuckDB-NSQL-7B, a state-of-the-art LLM designed specifically for DuckDB SQL. What is the mission of this model? To make users easy to unlock insights from their data.

Initially, it was fine-tuned from Meta’s original Llama-2–7B model using a wide range of datasets covering common SQL queries, but DuckDB-NSQL-7B was further refined from DuckDB text in SQL pairs. In particular, its functionality exceeds the creation of selected statements. It can generate a wide range of valid DuckDB SQL statements, including official documentation and extensions, making it a versatile tool for data research and analysis.

In this article, you will learn how to handle Text2SQL tasks using the duckdb-nsql-7b model, the parquet file hug Face Dataset Viewer API, and the DuckDB for data search.

text2sql flow

How to Use the Model

Using a hugging face transpipeline

from transformer Import Pipeline Pipe = Pipeline (“Text Generation”model =“motherduckdb/duckdb-nsql-7b-v0.1”) Use of transtochle agents and models

from transformer Import AutoTokenizer, AutoModelForcausallm Token = AutoTokenizer.from_pretrained (“motherduckdb/duckdb-nsql-7b-v0.1”)Model=automodelforcausallm.from_pretrained(“motherduckdb/duckdb-nsql-7b-v0.1”) Load the model in GGUF using llama.cpp

from llama_cpp Import llama llama = llama(model_path =“duckdb-nsql-7b-v0.1-q8_0.gguf”n_gpu_layers = –1,)

The main goal of llama.cpp is to enable LLM inference with minimal setup and cutting-edge performance on a variety of hardware locally and in the cloud. Use this approach.

Hugging the Face Dataset Viewer API for 120K and above datasets

Data is a critical component of any machine learning effort. Embracing faces are a valuable resource and have access to over 120,000 free open datasets across a variety of formats, including CSV, Parquet, JSON, Audio, Image files.

Each dataset hosted by hugging the face is equipped with a comprehensive dataset viewer. This audience provides users with key features such as statistical insights, data size ratings, full-text search capabilities, and efficient filtering options. This feature-rich interface allows users to easily explore and evaluate datasets, driving informed decision-making across machine learning workflows.

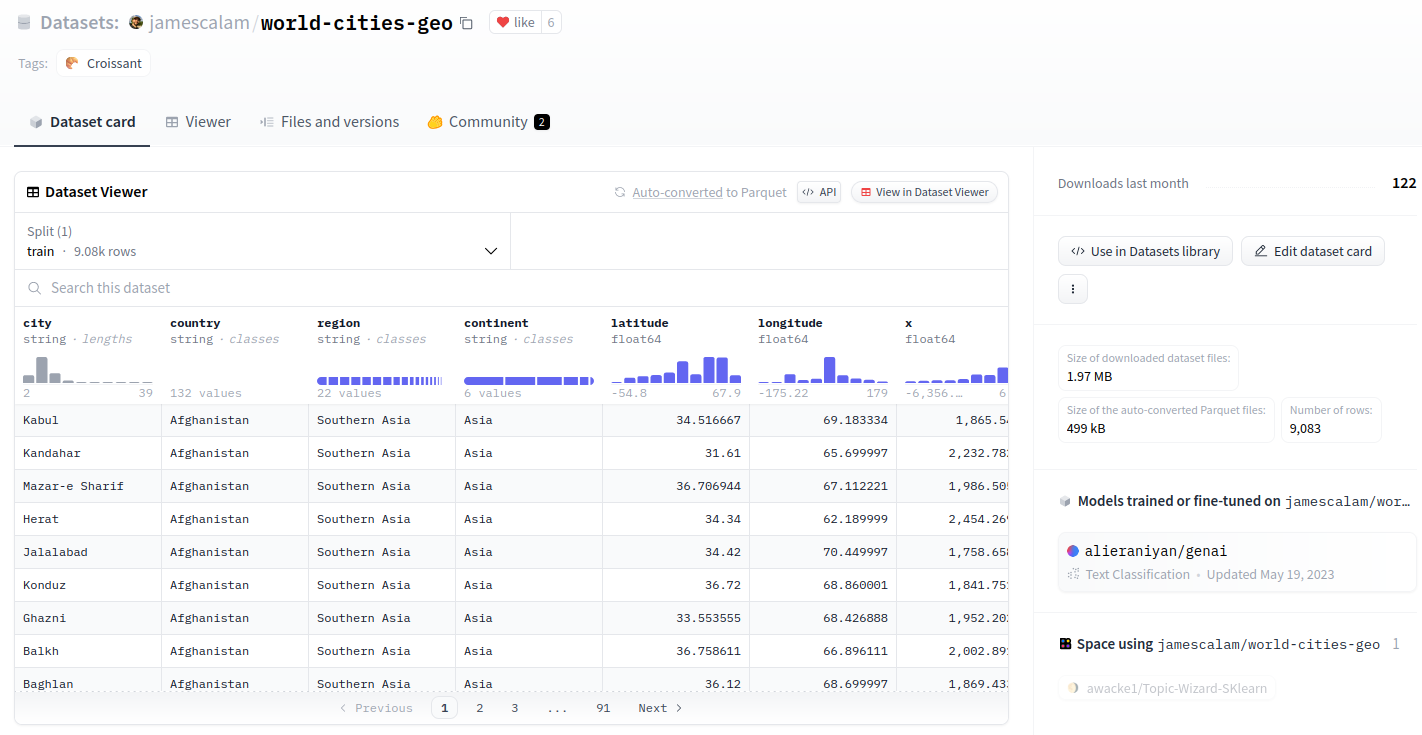

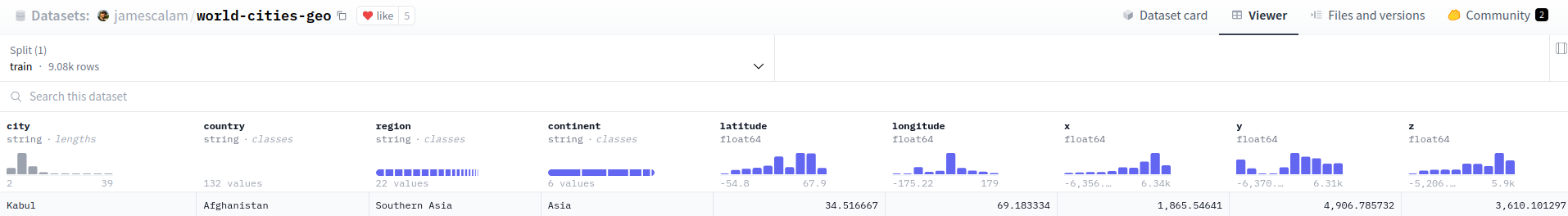

This demo uses the World-Cities-GEO dataset.

Dataset Viewer for World-Cities-GEO Datasets

Behind the scenes, each dataset in the hub is processed by the HugFace Dataset Viewer API, which retrieves useful information and provides the following features:

Dataset split, column name, data type list Gets the dataset size at any index of the dataset (row count or byte) View rows at any index and search for words in the dataset filter row based on the query string.

This demo uses the last feature, the auto-converted parquet file.

Generates SQL queries from text instructions

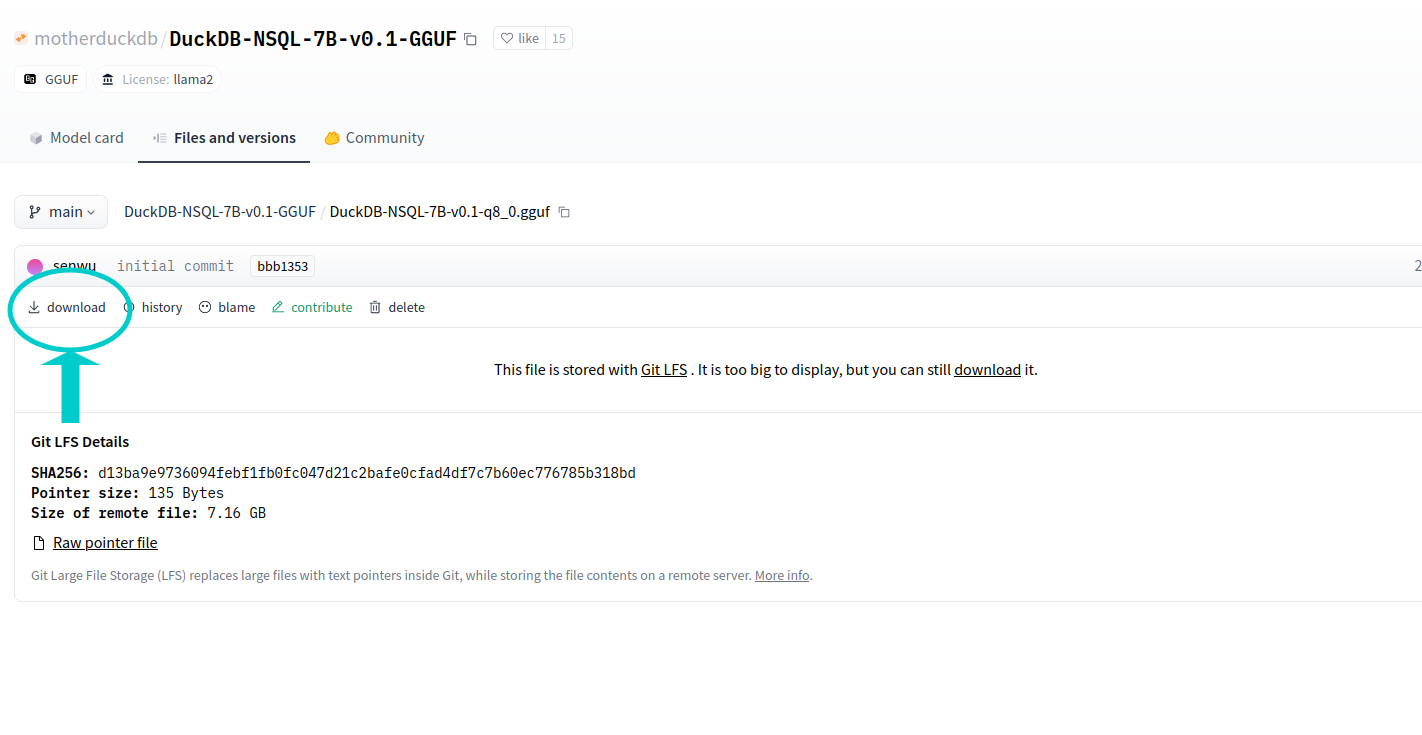

First, download the quantization model version of duckdb-nsql-7b-v0.1

Download the model

Alternatively, you can run the following code:

huggingface-cli download motherduckdb/duckdb-nsql-7b-v0.1-ggufduckdb-nsql-7b-v0.1-q8_0.guf – local-dir. – local-dir-use-symlinks false

Next, let’s install the necessary dependencies.

pip install llama-cpp-python pip install duckdb

The intertext model uses prompts with the following structure:

###Instruction: Your task is to generate a valid DuckDB SQL and answer the following questions: ###Input: The database schema on which the SQL query is executed is as follows: {ddl_create} ###Question: {query_input} ### Response (using duckdb shorthand if possible): DDL_CREATE will be a DataSet Schema that is a SQL CREATION CREATION CREATE QUERY QUERY ONCRINGES.

Therefore, you need to tell the model about the schema of the face dataset you want to hug. To do this, we will retrieve the first Parquet file of the JamesCalam/World-Cities-GEO dataset.

https://huggingface.co/api/datasets/jamescalam/world-cities-geo/parquet {“default”:{“train” 🙁 “https://huggingface.co/api/datasets/jamescalam/world-cities-geo/parquet/default/default/train/0.pare

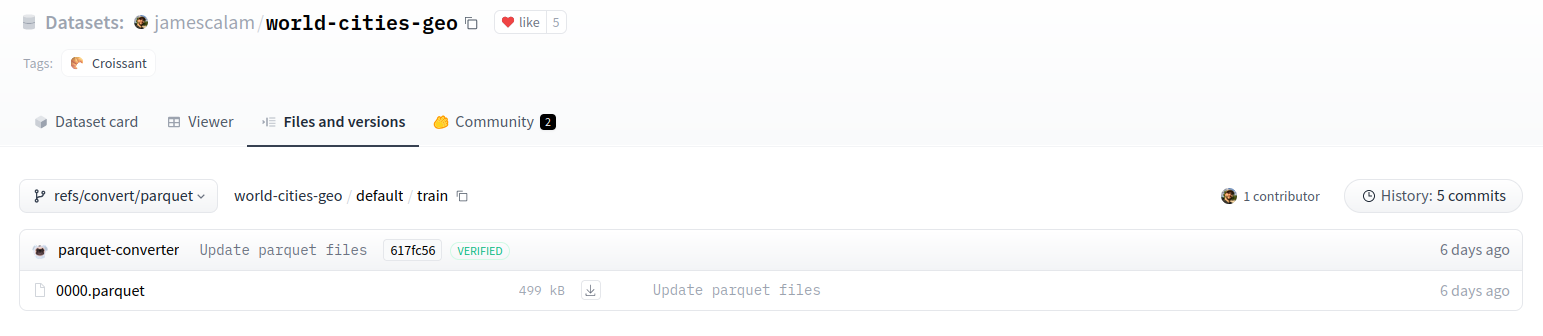

The Parquet file is hosted by hugging Face Viewer under Refs/Convert/Parquet Revision.

Parquet file

Simulate creating a DuckDB table from the first line of a Parquet file

Import duckdb con = duckdb.connect() con.execute(f “select * Create table data as from{first_parquet_url}“Limit 1;”) result = con.sql (“Select sql from duckdb_tables() where table_name = ‘data’;”).df()ddl_create = result.iloc(0,0)con.close()

The creation schema DDL is as follows:

Create the table “Data” (City Varchar, Country Varchar, Region Varchar, Continent Varchar, Latitude Double, double, x double, y double, z double).

And as you can see, it matches the columns in the dataset viewer:

Dataset column

You can now build the prompt with DDL_Create and Query Input Prompt. “””### Instruction:

Your job is to generate a valid DuckDB SQL and answer the following questions:

###input:

The database schema in which the SQL query is executed is as follows:

{ddl_create}

### question:

{query_input}

### Response (using DuckDB shorthand if possible):

“” “

If a user wants to know the city of Albania, the prompt will be:

query = “Cities from Albania”

Prompt = Prompt.format(ddl_create = ddl_create, query_input = query)

So the extension prompt sent to LLM would look like this:

###Instruction: Your task is to generate a valid DuckDB SQL and answer the following questions: ###Input: The following is the database schema in which the SQL query is executed: ###Question: Cities in Albania###Response (using duckdb shorthand if possible): It’s time to send a prompt to the model

from llama_cpp Import llama llm = llama(model_path =“duckdb-nsql-7b-v0.1-q8_0.gguf”n_ctx =2048n_gpu_layers =50

)pred = llm(prompt, temperature=0.1,max_tokens =1000)sql_output = pred(“Choices”) ()0) ()“Sentence”))

The output SQL command points to a data table, but there is no actual table and only references the Parquet file, so replaces all data occurrence with first_parquet_url.

sql_output = sql_output.replace(“From the data”, f”from”{first_parquet_url}‘”))

And the final output is:

Select City from ‘https://huggingface.co/api/datasets/jamescalam/world-cities-geo/parquet/default/train/0.parquet’. Here, country = ‘Albania’ is now the time to run the final generated SQL directly on the dataset.

try:query_result = con.sql(sql_output).df()

Exclude exception As error:

printing(f ” could not execute SQL query {Error=}“))

Finally:con.close()

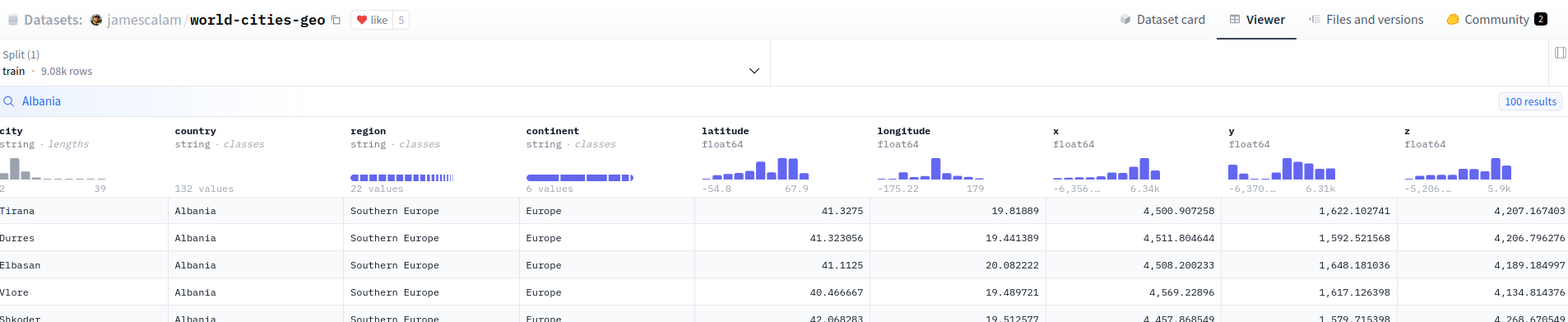

And here’s the result (100 rows):

Execution result (100 lines)

Let’s compare this result with a dataset viewer using the “search function” of Albania. It must be the same.

Albania search results

You can also call the same result directly into the search or filter API.

Import Request API_URL = “https://datasets-server.huggingface.co/search?dataset=jamescalam/world-cities-geo&config=default&split=train&query=albania”

def Query(): response = requests.get(api_url)

return Response.json() data = query()

Import Request API_URL = “https://datasets-server.huggingface.co/filter?dataset=jamescalam/world-cities-geo&config=default&split=train&where=country = ‘albania’ “”

def Query(): response = requests.get(api_url)

return Response.json() data = query()

Our final demonstration is the space of embracing faces that look like this.

You can see the notebook that contains the code here.

And here’s the space for the hugging face