So you’ve built your agent. It takes in inputs and tools, processes them, and generates responses. Maybe it’s making decisions, retrieving information, executing tasks autonomously, or all three. But now comes the big question: how effectively is it performing? And more importantly, how do you know?

Building an agent is one thing; understanding its behavior is another. That’s where tracing and evaluations come in. Tracing lets you see exactly what your agent is doing step by step: what inputs it receives, how it processes information, and how it arrives at its final output. Think of it as an X-ray of your agent’s decision-making process. Evaluation, on the other hand, helps you measure performance and ensures your agent is not just functional but actually effective. Does it produce the correct answer? How relevant are its findings at each step? How well crafted is the agent’s response? Does it align with your goals?

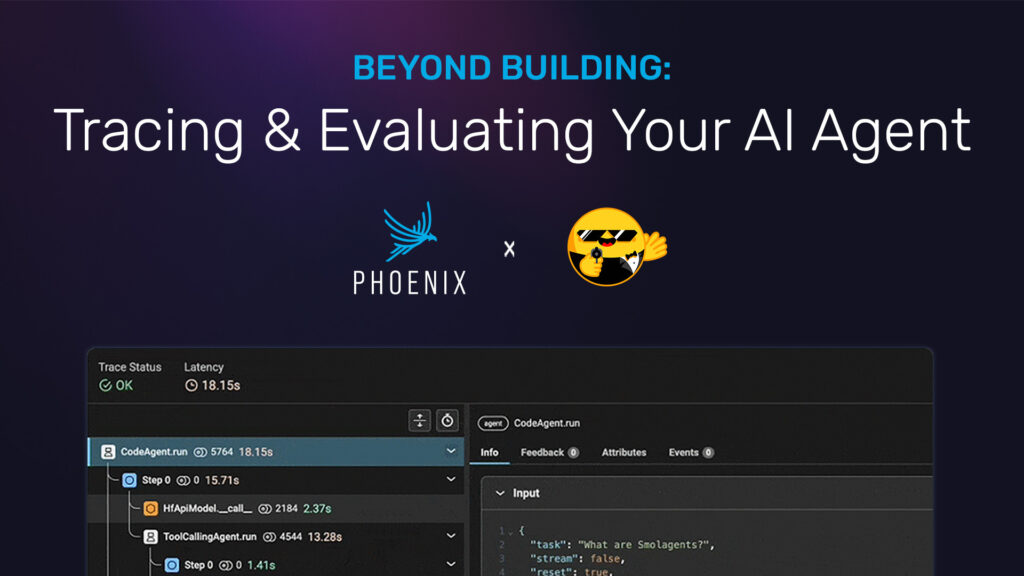

Arize Phoenix provides a centralized platform to trace, evaluate, and debug your agent’s decisions in real time. Let’s dive into how you can implement tracing and evaluation to refine and optimize your agent. Building is just the beginning; true intelligence comes from knowing exactly what is going on under the hood.

For this, make sure you have an agent set up! You can follow the steps below or use your own agent.

Create an agent

Step 1: Install the required libraries

pip install -q smolagents

Step 2: Import all required building blocks

Next, let’s import the classes and tools we will use.

from smolagents import (
    CodeAgent,
    DuckDuckGoSearchTool,
    VisitWebpageTool,
    HfApiModel,
)

Step 3: Set the base model

Create a model instance backed by the Hugging Face Hub Serverless API.

hf_model = HfApiModel()

Step 4: Create a tool calling agent

agent = CodeAgent(
    tools=[DuckDuckGoSearchTool(), VisitWebpageTool()],
    model=hf_model,
    add_base_tools=True,
)

Step 5: Run the agent

Now for the magic moment: let’s see our agent in action. Here is the question we are asking it:

“Can you fetch Google’s share price from 2020 to 2024 and create a line graph from it?”

agent.run("Can you fetch Google's share price from 2020 to 2024 and create a line graph from it?")

Your agent will now:

Use DuckDuckGoSearchTool to search for Google’s historical share prices. Possibly visit pages with VisitWebpageTool to find that data. Gather the information and attempt to generate the line graph, or describe how to create it.

Trace the agent

Once your agent is running, the next challenge is understanding its internal workflow. Tracing helps you follow each step the agent takes, from invoking the agent to processing inputs and generating responses.
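To make the idea of a trace concrete, here is a minimal sketch in plain Python of what tracing records: named, nested steps (spans) with their parent and duration. This is not Phoenix or OpenTelemetry code; the `span` helper, the `SPANS` list, and the span names are hypothetical and exist only for illustration.

```python
import time
from contextlib import contextmanager

SPANS = []   # finished spans, in completion order (hypothetical in-memory store)
_STACK = []  # names of currently open spans, used to find each span's parent


@contextmanager
def span(name):
    """Record a named step together with its parent and duration."""
    parent = _STACK[-1] if _STACK else None
    _STACK.append(name)
    start = time.perf_counter()
    try:
        yield
    finally:
        _STACK.pop()
        SPANS.append({
            "name": name,
            "parent": parent,
            "duration_s": time.perf_counter() - start,
        })


# A pretend agent run: one top-level span with two nested steps.
with span("agent.run"):
    with span("search_tool"):
        pass  # a real tool call would happen here
    with span("final_answer"):
        pass

for s in SPANS:
    print(f"{s['name']} (parent={s['parent']})")
```

Inner spans finish first, so they appear before the enclosing `agent.run` span. A real tracing backend stores the same kind of parent/child structure, plus inputs, outputs, and timestamps, and renders it as a tree.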

To enable tracing, we’ll use Arize Phoenix for visualization, and OpenTelemetry + OpenInference for instrumentation.

Install the telemetry module from smolagents:

pip install -q "smolagents[telemetry]"

You can run Phoenix in a number of different ways. This command runs a local instance of Phoenix on your machine:

python -m phoenix.server.main serve

For other hosting options, you can create a free online instance of Phoenix, self-host the application locally, or host it on Hugging Face Spaces.

After launching, register a tracer provider that points to your Phoenix instance.

from phoenix.otel import register
from openinference.instrumentation.smolagents import SmolagentsInstrumentor

# Create a tracer provider that captures OTEL traces for this project
tracer_provider = register(project_name="My smolagents-app")
# Automatically capture smolagents calls as traces
SmolagentsInstrumentor().instrument(tracer_provider=tracer_provider)

Now any calls made to smolagents will be sent to your Phoenix instance.

Now that tracing is enabled, let’s test it with a simple query.

agent.run("What time is it in Tokyo right now?")

Once OpenInference is set up with smolagents, every agent invocation is automatically traced in Phoenix.

Evaluate the agent

Once your agent is up and running, the next step is to assess its performance. Evaluations (evals) help you determine how well your agent is retrieving, processing, and presenting information.

There are many types of evals you can run, such as response relevance, factual accuracy, and latency. Check out the Phoenix documentation for a deeper dive into different evaluation techniques.
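Of these, latency is the simplest to compute by hand. As a rough sketch, assuming you have a start and end timestamp for each span, the calculation looks like this. The span records below are made up for illustration and are not Phoenix output.

```python
from datetime import datetime
from statistics import mean

# Made-up span timestamps, for illustration only
spans = [
    {"name": "search", "start": "2025-01-01T12:00:00.000", "end": "2025-01-01T12:00:01.200"},
    {"name": "visit_page", "start": "2025-01-01T12:00:01.200", "end": "2025-01-01T12:00:01.500"},
    {"name": "llm_call", "start": "2025-01-01T12:00:01.500", "end": "2025-01-01T12:00:04.000"},
]


def latency_seconds(span):
    """Elapsed wall-clock time of one span, in seconds."""
    start = datetime.fromisoformat(span["start"])
    end = datetime.fromisoformat(span["end"])
    return (end - start).total_seconds()


latencies = [latency_seconds(s) for s in spans]
slowest = max(spans, key=latency_seconds)
print(f"mean latency: {mean(latencies):.2f}s, slowest step: {slowest['name']}")
```

Relevance and factual accuracy cannot be computed this mechanically, which is why the rest of this section uses an LLM as the judge.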

This example focuses on evaluating the DuckDuckGo search tool used by the agent. We’ll measure the relevance of its search results using a large language model (LLM) as a judge, specifically OpenAI’s GPT-4o.

Step 1: Install OpenAI

First, install the required packages.

pip install -q openai

We’ll use GPT-4o to assess whether the search tool’s responses are relevant.

Known as LLM-as-a-judge, this method uses language models to classify and score responses.

Step 2: Get the tool execution span

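To evaluate how well DuckDuckGo is retrieving information, we first need to extract the execution traces where the tool was called.

from phoenix.trace.dsl import SpanQuery
import phoenix as px
import json

query = SpanQuery().where(
    "name == 'DuckDuckGoSearchTool'",
).select(
    input="input.value",  # must be named "input" to work with RAG_RELEVANCY_PROMPT_TEMPLATE
    reference="output.value",  # must be named "reference" to work with RAG_RELEVANCY_PROMPT_TEMPLATE
)

# The Phoenix client runs the query and returns a DataFrame
tool_spans = px.Client().query_spans(query, project_name="My smolagents-app")

# Keep only the search query string from each span's JSON input payload
tool_spans["input"] = tool_spans["input"].apply(
    lambda x: json.loads(x).get("kwargs", {}).get("query", "")
)
tool_spans.head()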
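The input-cleaning step is easier to follow on a single value. The sketch below assumes that a tool span's input is a JSON string holding the tool's keyword arguments under a `kwargs` key, with the search text under `query`. The `extract_query` helper and the sample payloads are hypothetical; check a real span in Phoenix to confirm the actual shape.

```python
import json


def extract_query(raw_input: str) -> str:
    """Return the search query from a tool span's JSON input, or "" if absent."""
    payload = json.loads(raw_input)
    return payload.get("kwargs", {}).get("query", "")


# Made-up payloads for illustration
with_query = json.dumps({"args": [], "kwargs": {"query": "Google share price 2020 to 2024"}})
without_query = json.dumps({"args": ["positional only"]})

print(extract_query(with_query))
print(repr(extract_query(without_query)))
```

Chaining `.get()` with defaults means a span without the expected keys yields an empty string instead of raising a `KeyError`, so one odd span does not break the whole column.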

Step 3: Import the prompt template

Next, we load the RAG relevancy prompt template, which helps the LLM classify whether the search results are relevant or not.

from phoenix.evals import (
    RAG_RELEVANCY_PROMPT_RAILS_MAP,
    RAG_RELEVANCY_PROMPT_TEMPLATE,
    OpenAIModel,
    llm_classify,
)
import nest_asyncio

nest_asyncio.apply()

print(RAG_RELEVANCY_PROMPT_TEMPLATE)
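A classification template is filled in row by row, by column name, which is why the extracted span columns need to match the template's placeholders. Here is a minimal sketch in plain Python, assuming placeholders named `input` and `reference`. `TOY_TEMPLATE` and `render_prompt` are hypothetical stand-ins, far shorter than the real Phoenix template.

```python
# Hypothetical, simplified stand-in for a relevance template
TOY_TEMPLATE = (
    "Question: {input}\n"
    "Reference text: {reference}\n"
    'Answer with a single word, "relevant" or "unrelated".'
)


def render_prompt(row: dict) -> str:
    """Fill the template from one row; raises KeyError if a column is missing."""
    return TOY_TEMPLATE.format(input=row["input"], reference=row["reference"])


row = {
    "input": "What time is it in Tokyo?",
    "reference": "Tokyo is in the Japan Standard Time zone (UTC+9).",
}
print(render_prompt(row))
```

If a column were named `output` instead of `reference`, the lookup would fail, which mirrors why the span query has to produce columns with the names the template expects.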

Step 4: Perform the evaluation

Now we run the evaluation using GPT-4o as the judge.

from phoenix.evals import (
    llm_classify,
    OpenAIModel,
    RAG_RELEVANCY_PROMPT_TEMPLATE,
)

eval_model = OpenAIModel(model="gpt-4o")

eval_results = llm_classify(
    dataframe=tool_spans,
    model=eval_model,
    template=RAG_RELEVANCY_PROMPT_TEMPLATE,
    rails=["relevant", "unrelated"],
    concurrency=10,
    provide_explanation=True,
)
# Score from the label column; a substring check on the explanation text would also match "irrelevant"
eval_results["score"] = eval_results["label"].apply(lambda x: 1 if x == "relevant" else 0)

What’s going on here?

We use GPT-4o to analyze the search query (input) and the search result (output). Based on the prompt, the LLM classifies each result as relevant or unrelated. For further analysis, we assign a binary score (1 = relevant, 0 = unrelated).
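The scoring step can be sketched on its own. The version below assumes the judge's labels are `"relevant"` and `"unrelated"`, and treats anything else, including missing values, as unscored rather than as 0. The `label_to_score` helper and the sample labels are hypothetical.

```python
RAILS = ("relevant", "unrelated")  # assumed label set


def label_to_score(label):
    """Map a judge label to 1 or 0; return None for anything off the rails."""
    if not isinstance(label, str):
        return None
    normalized = label.strip().lower()
    if normalized not in RAILS:
        return None
    return 1 if normalized == "relevant" else 0


# Made-up labels, including an unparseable one and a missing one
labels = ["relevant", "unrelated", " Relevant ", "NOT_PARSABLE", None]
scores = [label_to_score(label) for label in labels]
print(scores)

# Substring checks on free text are fragile: "irrelevant" contains "relevant".
print("relevant" in "The result is irrelevant to the query.")
```

Comparing against the exact label avoids counting an explanation such as "the result is irrelevant" as a hit, and keeping unparseable outputs as `None` stops them from silently dragging the average score down.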

To see your results:

eval_results.head()

Step 5: Send your evaluation results to Phoenix

from phoenix.trace import SpanEvaluations

px.Client().log_evaluations(
    SpanEvaluations(eval_name="DuckDuckGoSearchTool Relevancy", dataframe=eval_results)
)

With this setup, you can systematically evaluate the effectiveness of the DuckDuckGo search tool within your agent. Using LLM-as-a-judge, you can check that the agent retrieves accurate and relevant information, which leads to better performance.

Any evaluation can be set up along the lines of this tutorial: just swap RAG_RELEVANCY_PROMPT_TEMPLATE for a different prompt template that suits your needs. Phoenix offers a variety of pre-written, pre-tested evaluation templates that cover areas such as faithfulness, response coherence, and factual accuracy. Check out the Phoenix docs for the full list and find the best fit for your agent!

| Evaluation template | Applicable agent types |
| --- | --- |
| Hallucination detection | RAG agents, general chatbots, knowledge-based assistants |
| Q&A on retrieved data | RAG agents, research assistants, document search tools |
| RAG relevance | RAG agents, search-based AI assistants |
| Summarization | Summarization tools |
| AI vs. human (ground truth) | AI-generated content validators |
| Reference (citation) links | Research assistants, citation tools, academic writing aids |
| SQL generation evaluation | Database query agents, SQL automation tools |
| Agent function calling evaluation | Multi-step reasoning agents, task automation bots |