
This blog post was written in April 2024 and provides a solid introduction to the internals of vision language models, an overview of existing vision language models, and how to fine-tune them. An updated post was published in April 2025, covering more capabilities and more models. Be sure to check it out after reading this one!

Vision language models are models that can learn simultaneously from images and text to tackle many tasks, from visual question answering to image captioning. In this post, we go through the main building blocks of vision language models: we give an overview, explain how they work, show how to find the right model and how to use it for inference, and how to easily fine-tune it with the new version of TRL released today.

What is a vision language model?

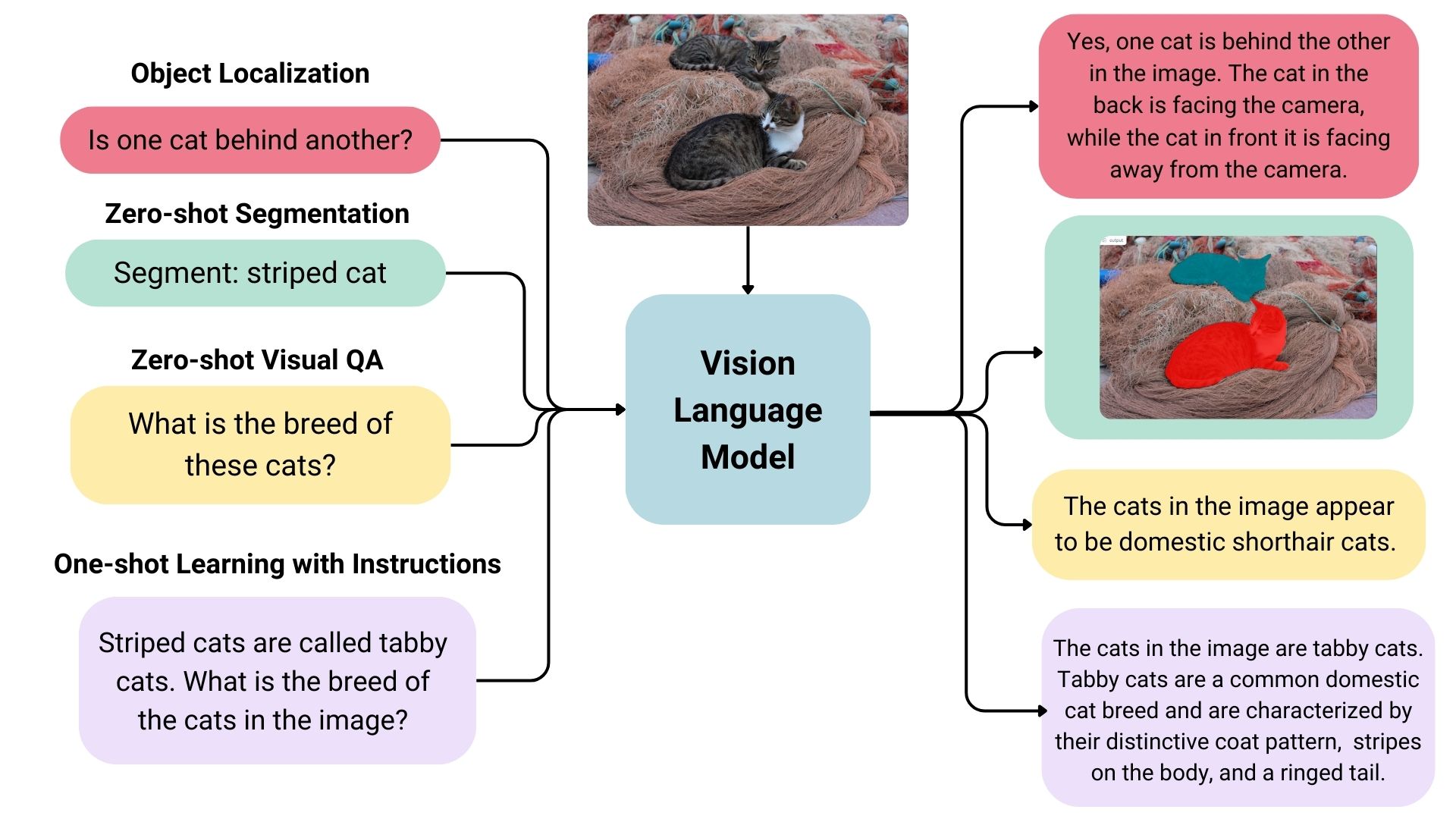

Vision language models are broadly defined as multimodal models that can learn from images and text. They are a type of generative model that takes image and text inputs and generates text outputs. Large vision language models have good zero-shot capabilities, generalize well, and can work with many types of images, including documents, web pages, and more. Use cases include chatting about images, image recognition via instructions, visual question answering, document understanding, image captioning, and others. Some vision language models can also capture spatial properties in an image. These models can output bounding boxes or segmentation masks when prompted to detect or segment a particular subject, or they can localize different entities and answer questions about their relative or absolute positions. There is a lot of diversity among existing large vision language models: in the data they were trained on, in how they encode images, and therefore in their capabilities.

An overview of open-source vision language models

There are many open vision language models on the Hugging Face Hub. Some of the most notable are shown in the table below.

There are base models, and models fine-tuned for chat that can be used in conversational mode. Some of these models have a feature called "grounding", which reduces model hallucinations. All models are trained on English unless stated otherwise.

Finding the right vision language model

There are many ways to choose the model that is most appropriate for your use case.

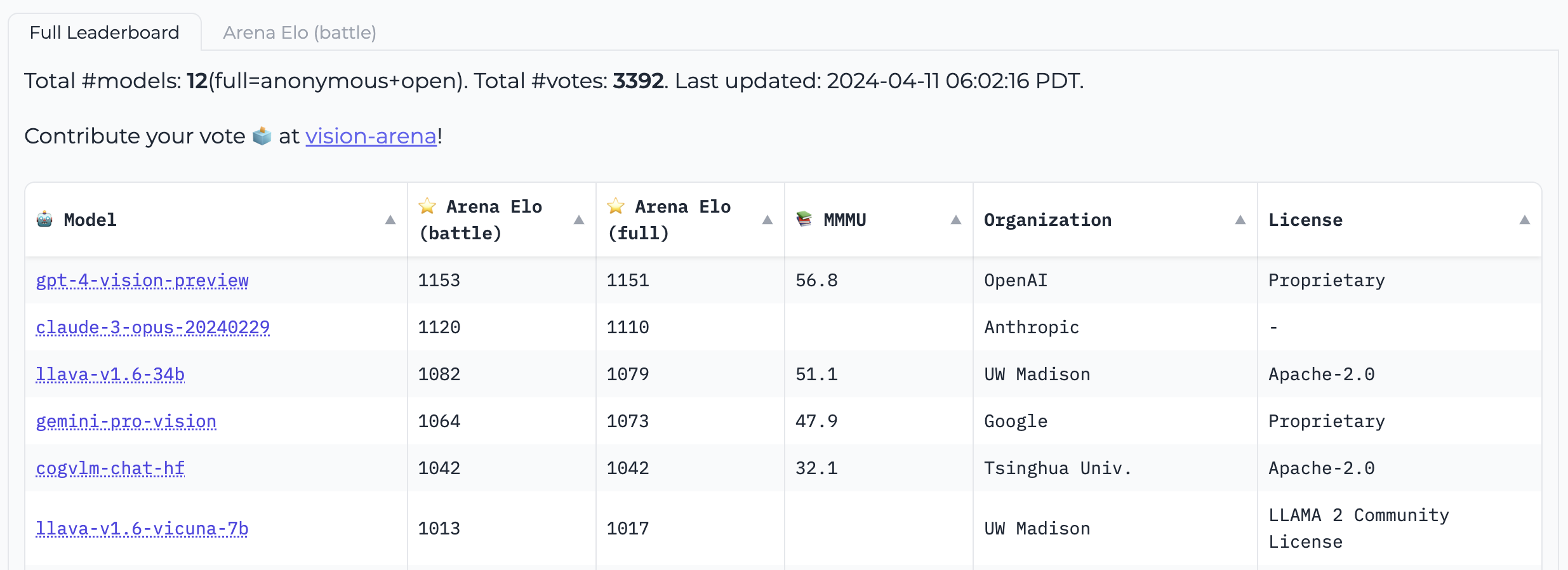

Vision Arena is a leaderboard based solely on anonymous voting on model outputs, and it is updated continuously. In this arena, users enter an image and a prompt, outputs from two different models are sampled anonymously, and the user picks the output they prefer. This way, the leaderboard is built entirely from human preferences.

Vision Arena
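To make the voting mechanism more concrete, here is a minimal sketch of turning anonymous pairwise votes into a ranking. It uses a plain win rate as a stand-in: the rating method Vision Arena actually uses is not described in this post, and the model names and votes below are made up for illustration.

```python
from collections import defaultdict

def rank_by_win_rate(votes):
    """Rank models by the fraction of pairwise comparisons they won.

    `votes` is a list of (model_a, model_b, winner) tuples, where `winner`
    equals model_a or model_b. Illustrative stand-in only, not the arena's
    actual scoring method.
    """
    wins = defaultdict(int)
    games = defaultdict(int)
    for model_a, model_b, winner in votes:
        games[model_a] += 1
        games[model_b] += 1
        wins[winner] += 1
    rates = {model: wins[model] / games[model] for model in games}
    # Highest win rate first.
    return sorted(rates.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical votes: each tuple is one user comparing two anonymous outputs.
votes = [
    ("model-x", "model-y", "model-x"),
    ("model-x", "model-z", "model-z"),
    ("model-y", "model-z", "model-z"),
    ("model-x", "model-y", "model-x"),
]
leaderboard = rank_by_win_rate(votes)
for name, rate in leaderboard:
    print(f"{name}: {rate:.2f}")
```

A real arena would likely also need to handle ties and uneven numbers of comparisons per model, which a raw win rate does not account for.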

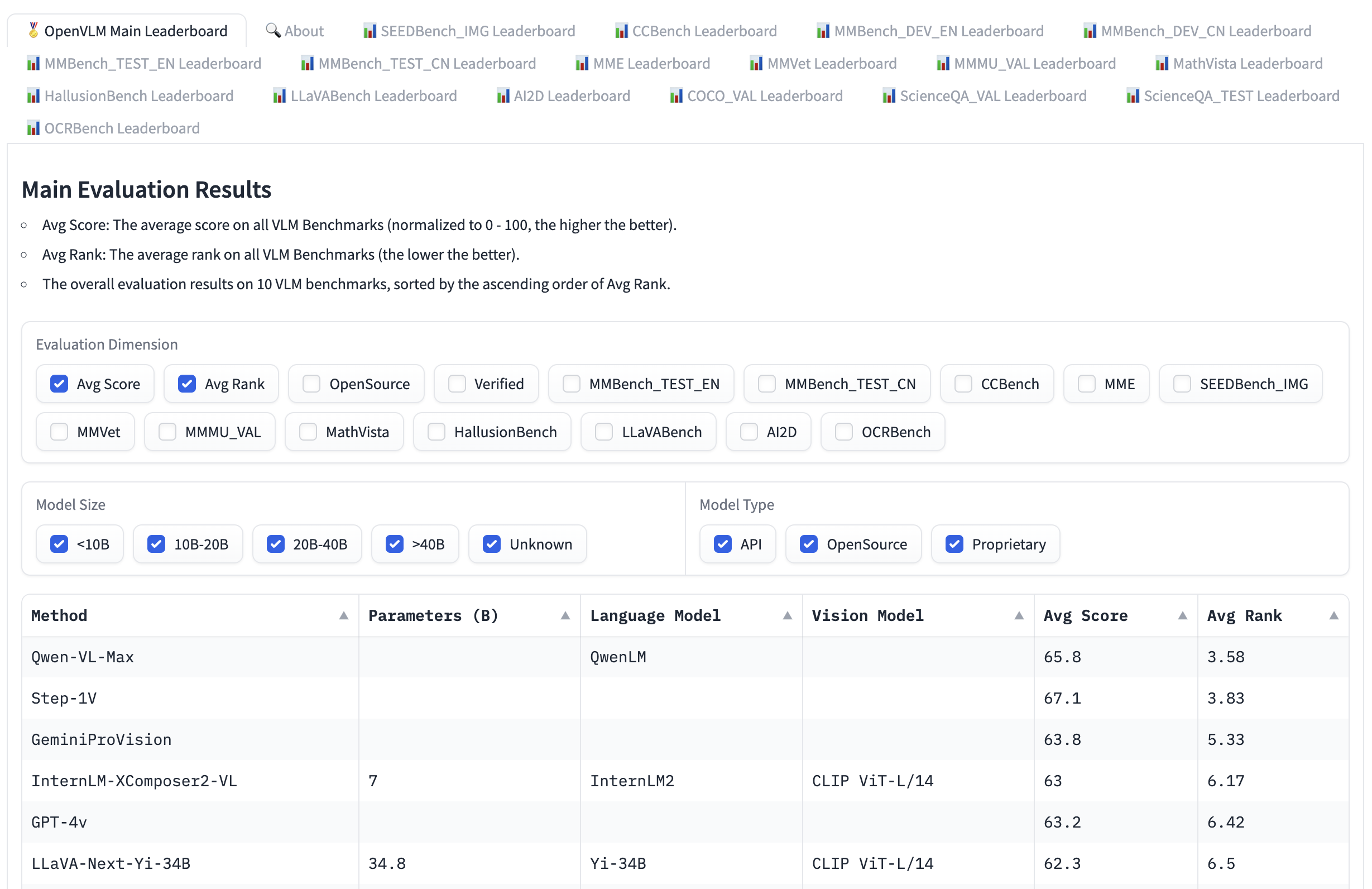

The Open VLM Leaderboard is another leaderboard, where vision language models are ranked according to benchmark metrics and average scores. You can also filter models by size and by proprietary or open-source license, and rank them on different metrics.

Open VLM Leaderboard

VLMEvalKit is a toolkit for running benchmarks on vision language models, and it powers the Open VLM Leaderboard. Another evaluation suite is LMMS-Eval, which provides a standard command line interface for evaluating Hugging Face models of your choice on datasets hosted on the Hugging Face Hub, as follows:

    accelerate launch --num_processes=8 -m lmms_eval --model llava --model_args pretrained="liuhaotian/llava-v1.5-7b" --tasks mme,mmbench_en --batch_size 1 --log_samples --log_samples_suffix llava_v1.5_mme_mmbenchen --output_path ./logs/

Both the Vision Arena and the Open VLM Leaderboard are limited to the models submitted to them, and they require updates to add new models. If you want to find additional models, you can browse the Hub for models under the image-text-to-text task.

There are various benchmarks for evaluating vision language models that you may encounter on the leaderboard. Let’s look at some of them.

MMMU

A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI (MMMU) is the most comprehensive benchmark for evaluating vision language models. It contains 11.5K multimodal challenges that require college-level subject knowledge and reasoning across disciplines such as arts and engineering.

MMBench

MMBench is an evaluation benchmark consisting of 3000 single-choice questions over 20 different skills, including OCR, object localization, and more. The paper also introduces an evaluation strategy called CircularEval, in which the answer choices of a question are shuffled in different combinations and the model is expected to give the right answer at every turn.

There are other, more specific benchmarks across different domains, such as MathVista (visual mathematical reasoning), AI2D (diagram understanding), ScienceQA (science question answering), and OCRBench (document understanding).
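The idea behind CircularEval can be sketched in a few lines. This is a minimal illustration, assuming the answer choices are reordered by circular shifts (as the name suggests) and that a question only counts as solved if the model is right for every ordering. The `predict` callables and the sample question are hypothetical stand-ins for a real model.

```python
def circular_eval(question, choices, answer_index, predict):
    """Return True only if `predict` picks the correct choice for every
    circular shift of the answer options.

    `predict(question, choices)` must return the index of the chosen option.
    """
    n = len(choices)
    for shift in range(n):
        rotated = choices[shift:] + choices[:shift]
        # After shifting left by `shift`, the correct option moves here.
        expected = (answer_index - shift) % n
        if predict(question, rotated) != expected:
            return False
    return True

# Hypothetical "models": one that really knows the answer, and one that
# always picks the first option regardless of content.
def knows_answer(question, choices):
    return choices.index("Paris")

def always_first(question, choices):
    return 0

choices = ["Paris", "Rome", "Oslo", "Lima"]
robust = circular_eval("Which city is shown?", choices, 0, knows_answer)
position_biased = circular_eval("Which city is shown?", choices, 0, always_first)
print(robust, position_biased)
```

The position-biased predictor would score correctly on the original ordering alone, which is exactly the kind of lucky guess this strategy is meant to filter out.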

Technical details

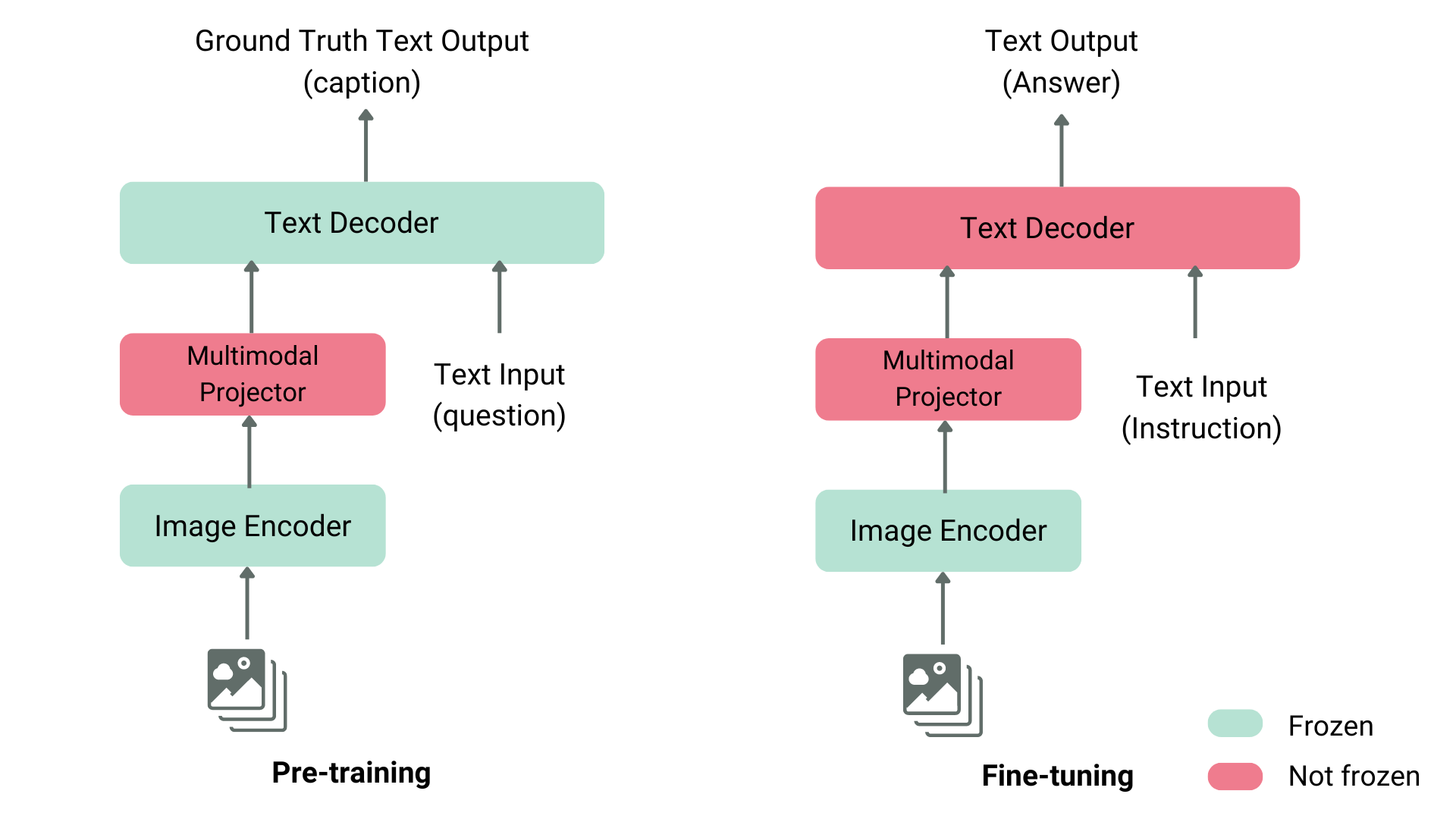

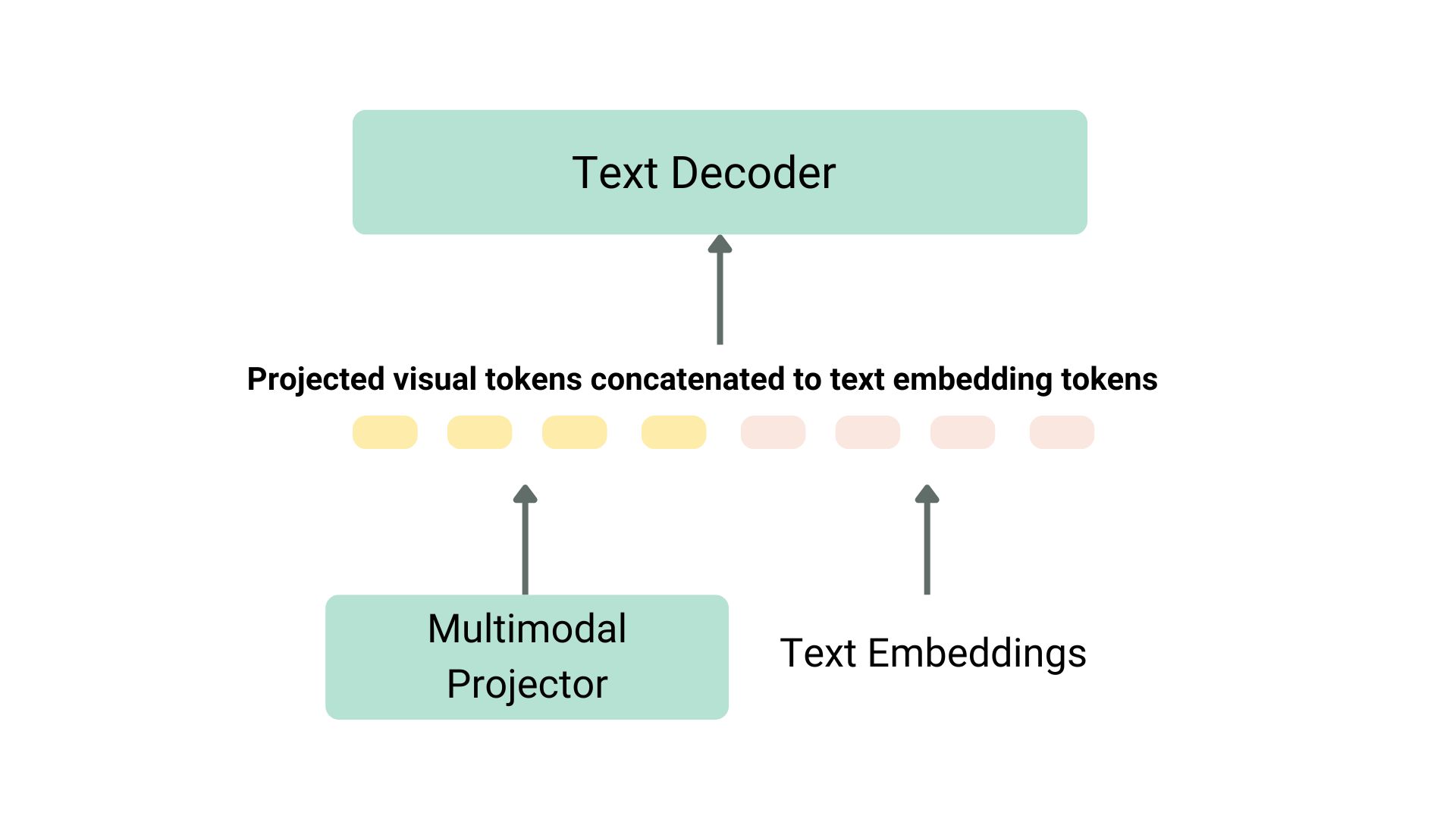

There are various ways to pretrain a vision language model. The main trick is to unify the image and text representations and feed them to a text decoder for generation. The most common and prominent models consist of an image encoder, an embedding projector that aligns image and text representations (often a dense neural network), and a text decoder, stacked in this order. As for the training, different models follow different approaches.
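The encoder, projector, and decoder stacking can be illustrated with a toy, dependency-free sketch. It assumes the image encoder has already produced one feature vector per image patch, uses a single dense layer as the projector, and simply places the projected image tokens in front of the text embeddings. All sizes and values are made up, and real models differ in where the image tokens are inserted.

```python
def project(patch_features, weight, bias):
    """Dense projection: map each patch vector from the vision dimension to
    the text embedding dimension (`weight` has one row per output unit)."""
    return [
        [sum(x * w for x, w in zip(patch, row)) + b for row, b in zip(weight, bias)]
        for patch in patch_features
    ]

def build_decoder_input(patch_features, text_embeddings, weight, bias):
    """Project image features, then concatenate them with text embeddings."""
    image_tokens = project(patch_features, weight, bias)
    return image_tokens + text_embeddings

# Toy sizes: 2 image patches of dimension 3, text embeddings of dimension 2.
patch_features = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # shape (text_dim=2, vision_dim=3)
bias = [0.5, -0.5]
text_embeddings = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]

sequence = build_decoder_input(patch_features, text_embeddings, weight, bias)
print(len(sequence), "tokens of dimension", len(sequence[0]))
```

The point of the projector is visible in the shapes: after projection, image tokens and text tokens have the same dimension, so the decoder can treat them as one sequence.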

For example, LLaVA consists of a CLIP image encoder, a multimodal projector, and a Vicuna text decoder. The authors fed a dataset of images and captions to GPT-4 and generated questions related to the captions and images. They froze the image encoder and the text decoder and trained only the multimodal projector to align image and text features, by feeding the model images and generated questions and comparing the model output with the ground truth captions. After pretraining the projector, they keep the image encoder frozen, unfreeze the text decoder, and train the projector together with the decoder. This way of pre-training and fine-tuning is the most common way to train vision language models.
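The two training stages described above boil down to which components receive gradient updates. The following sketch records that schedule as plain data. The stage and component names are labels chosen here for illustration, and in a PyTorch implementation the flags would typically translate into toggling `requires_grad` on each submodule's parameters.

```python
# True means the component is trained in that stage, False means frozen.
TRAINING_STAGES = {
    "projector_pretraining": {
        "image_encoder": False,
        "projector": True,
        "text_decoder": False,
    },
    "fine_tuning": {
        "image_encoder": False,
        "projector": True,
        "text_decoder": True,
    },
}

def trainable_components(stage):
    """Names of the components that are updated during `stage`."""
    return sorted(name for name, trainable in TRAINING_STAGES[stage].items() if trainable)

def frozen_components(stage):
    """Names of the components that stay frozen during `stage`."""
    return sorted(name for name, trainable in TRAINING_STAGES[stage].items() if not trainable)

for stage in TRAINING_STAGES:
    print(stage, "trains", trainable_components(stage), "and freezes", frozen_components(stage))
```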

Structure of a typical vision language model

Projections and text embeddings are concatenated

Another example is KOSMOS-2, where the authors chose to fully train the model end-to-end. They later did language-only instruction fine-tuning to align the model. Fuyu-8B, as another example, doesn't even have an image encoder. Instead, image patches are fed directly into a projection layer, and the resulting sequence goes through an autoregressive decoder. Most of the time, you don't need to pretrain a vision language model yourself, as you can use one of the existing ones or fine-tune it on your own use case. We will go through how to use these models with transformers and how to fine-tune them with SFTTrainer.

Use a vision language model with transformers

You can run inference with Llava using the LlavaNext model as shown below.

First, initialize the model and processor.

    from transformers import LlavaNextProcessor, LlavaNextForConditionalGeneration
    import torch

    # Use the GPU when available, otherwise fall back to the CPU
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    processor = LlavaNextProcessor.from_pretrained("llava-hf/llava-v1.6-mistral-7b-hf")
    model = LlavaNextForConditionalGeneration.from_pretrained(
        "llava-hf/llava-v1.6-mistral-7b-hf",
        torch_dtype=torch.float16,
        low_cpu_mem_usage=True
    )
    model.to(device)

We now pass the image and the text prompt to the processor, and then pass the processed inputs to generate. Note that each model uses its own prompt template. Be careful to use the right one to avoid performance degradation.
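One way to avoid mixing up templates is to keep them in a small lookup keyed by checkpoint name. The sketch below is only an illustration: `PROMPT_TEMPLATES` and `build_prompt` are hypothetical helpers, not part of transformers, and the two template strings are assumptions that should be checked against each model card before use.

```python
# Assumed single-turn templates; verify against the corresponding model cards.
PROMPT_TEMPLATES = {
    "llava-hf/llava-v1.6-mistral-7b-hf": "[INST] <image>\n{question} [/INST]",
    "llava-hf/llava-1.5-7b-hf": "USER: <image>\n{question} ASSISTANT:",
}

def build_prompt(model_id, question):
    """Format `question` with the template registered for `model_id`."""
    try:
        template = PROMPT_TEMPLATES[model_id]
    except KeyError:
        # Fail loudly rather than silently using the wrong template.
        raise ValueError(f"No prompt template registered for {model_id!r}") from None
    return template.format(question=question)

prompt = build_prompt("llava-hf/llava-v1.6-mistral-7b-hf", "What is shown in this image?")
print(prompt)
```

Raising an error for unknown checkpoints is a deliberate choice here: a wrong template usually still produces output, just worse output, so the mistake is easy to miss.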

    from PIL import Image
    import requests

    # Download the example image and open it with PIL
    url = "https://github.com/haotian-liu/LLaVA/blob/1a91fc274d7c35a9b50b3cb29c4247ae5837ce39/images/llava_v1_5_radar.jpg?raw=true"
    image = Image.open(requests.get(url, stream=True).raw)
    prompt = "[INST] <image>\nWhat is shown in this image? [/INST]"

    inputs = processor(prompt, image, return_tensors="pt").to(device)
    output = model.generate(**inputs, max_new_tokens=100)

Call decode to decode the output tokens.

    print(processor.decode(output[0], skip_special_tokens=True))

Fine-tuning vision language models with TRL

We are excited to announce that TRL's SFTTrainer now includes experimental support for vision language models. Here is an example of how to run SFT on a Llava 1.5 VLM using the llava-instruct dataset, which contains 260k image-conversation pairs. The dataset contains user-assistant interactions formatted as a sequence of messages. For example, each conversation is paired with an image that the user asks questions about.

To use the experimental VLM training support, you must install the latest version of TRL with pip install -U trl. The full example script can be found here.

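    from transformers import TrainingArguments
    from trl.commands.cli_utils import SftScriptArguments, TrlParser

    # Parse script and training arguments from the command line or a config file
    parser = TrlParser((SftScriptArguments, TrainingArguments))
    args, training_args = parser.parse_args_and_config()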

Initialize the chat template for instruction fine-tuning.

    LLAVA_CHAT_TEMPLATE = """A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. {% for message in messages %}{% if message['role'] == 'user' %}USER: {% else %}ASSISTANT: {% endif %}{% for item in message['content'] %}{% if item['type'] == 'text' %}{{ item['text'] }}{% elif item['type'] == 'image' %}<image>{% endif %}{% endfor %}{% if message['role'] == 'user' %} {% else %}{{eos_token}}{% endif %}{% endfor %}"""
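To see what such a template produces, its effect can be approximated in plain Python. This sketch assumes the template is meant to prefix user turns with `USER:` and assistant turns with `ASSISTANT:`, replace image items with an `<image>` placeholder, and end assistant turns with the EOS token. The `render_chat` helper, the sample conversation, and the `</s>` EOS string are assumptions made for illustration. In practice the tokenizer's `apply_chat_template` does the rendering.

```python
SYSTEM_PROMPT = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions. "
)

def render_chat(messages, eos_token="</s>"):
    """Approximate the chat template: role prefix, message content, then a
    space after user turns or the EOS token after assistant turns."""
    parts = [SYSTEM_PROMPT]
    for message in messages:
        is_user = message["role"] == "user"
        parts.append("USER: " if is_user else "ASSISTANT: ")
        for item in message["content"]:
            if item["type"] == "text":
                parts.append(item["text"])
            elif item["type"] == "image":
                parts.append("<image>")  # placeholder later filled by image features
        parts.append(" " if is_user else eos_token)
    return "".join(parts)

# Hypothetical conversation in the message format described above.
messages = [
    {"role": "user", "content": [
        {"type": "text", "text": "What is in this image?"},
        {"type": "image"},
    ]},
    {"role": "assistant", "content": [
        {"type": "text", "text": "A radar chart."},
    ]},
]
rendered = render_chat(messages)
print(rendered)
```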

We will now initialize our model and tokenizer.

    from transformers import AutoTokenizer, AutoProcessor, TrainingArguments, LlavaForConditionalGeneration
    import torch

    model_id = "llava-hf/llava-1.5-7b-hf"

    # Attach the chat template to the tokenizer, then share it with the processor
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    tokenizer.chat_template = LLAVA_CHAT_TEMPLATE
    processor = AutoProcessor.from_pretrained(model_id)
    processor.tokenizer = tokenizer

    model = LlavaForConditionalGeneration.from_pretrained(model_id, torch_dtype=torch.float16)

Let's create a data collator to combine text and image pairs.

    class LLavaDataCollator:
        def __init__(self, processor):
            self.processor = processor

        def __call__(self, examples):
            texts = []
            images = []
            for example in examples:
                messages = example["messages"]
                # Render the conversation to a single string with the chat template
                text = self.processor.tokenizer.apply_chat_template(
                    messages, tokenize=False, add_generation_prompt=False
                )
                texts.append(text)
                images.append(example["images"][0])

            batch = self.processor(texts, images, return_tensors="pt", padding=True)

            # Labels are a copy of the inputs, with padding excluded from the loss
            labels = batch["input_ids"].clone()
            if self.processor.tokenizer.pad_token_id is not None:
                labels[labels == self.processor.tokenizer.pad_token_id] = -100
            batch["labels"] = labels

            return batch

    data_collator = LLavaDataCollator(processor)
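The label masking step in the collator is easy to miss, so here it is in isolation, without tensors. Padding positions are replaced with -100, which is the default `ignore_index` of PyTorch's cross-entropy loss, so those positions do not contribute to the loss. The token ids and the pad id of 0 below are made up for illustration.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's cross-entropy loss

def mask_padding(input_ids, pad_token_id):
    """Return labels equal to `input_ids`, with pad positions set to -100."""
    if pad_token_id is None:
        # No pad token defined: labels are an unmodified copy of the inputs.
        return [list(row) for row in input_ids]
    return [
        [IGNORE_INDEX if token == pad_token_id else token for token in row]
        for row in input_ids
    ]

# Hypothetical padded batch: the second sequence is shorter and padded with 0.
batch_input_ids = [
    [1, 5, 9, 2],
    [1, 7, 0, 0],
]
labels = mask_padding(batch_input_ids, pad_token_id=0)
print(labels)
```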

Load our dataset.

    from datasets import load_dataset

    raw_datasets = load_dataset("HuggingFaceH4/llava-instruct-mix-vsft")
    train_dataset = raw_datasets["train"]
    eval_dataset = raw_datasets["test"]

Initialize the SFTTrainer, passing in the model, the dataset splits, the PEFT configuration, and the data collator, and call train(). To push the final checkpoint to the Hub, call push_to_hub().

    from trl import SFTTrainer

    trainer = SFTTrainer(
        model=model,
        args=training_args,
        train_dataset=train_dataset,
        eval_dataset=eval_dataset,
        dataset_text_field="text",  # need a dummy field
        tokenizer=tokenizer,
        data_collator=data_collator,
        dataset_kwargs={"skip_prepare_dataset": True},
    )

    trainer.train()

Save the model and push it to the Hugging Face Hub.

    trainer.save_model(training_args.output_dir)
    trainer.push_to_hub()

You can find the trained model here.

You can try out the models we just trained directly in our VLM playground ⬇️

Acknowledgments

Thank you to Pedro Cuenca, Lewis Tunstall, Kashif Rasul and Omar Sanseviero for reviews and suggestions on this blog post.