Yesterday, our friends at Unsloth shared an issue with gradient accumulation that affects the Transformers Trainer. The initial report comes from @bnjmn_marie (kudos to him!).

Gradient accumulation is supposed to be mathematically equivalent to full-batch training. However, the losses did not match between training runs with the setting turned on and off.

Where did it come from?

Inside each model's modeling code, Transformers provides a "default" loss function: the one most commonly used for the model's task. Which one it is depends on what the modeling class is meant for (question answering, token classification, causal LM, masked LM).

This default loss function was never intended to be customizable. It is only computed when both labels and input_ids are passed as inputs to the model, so that the user does not have to compute the loss themselves. The default loss is useful, but limited by design. For anything that needs to be handled differently, we expect users not to pass the labels directly, but to retrieve the logits from the model and use them to compute the loss outside of the model.

However, the Transformers Trainer, like many other trainers, relies heavily on these default losses because of their simplicity. This is a double-edged sword: a simple API whose behavior changes as the use case changes is not a well-thought-out API. We were caught off guard ourselves.

More precisely, for gradient accumulation over token-level tasks such as causal LM training, the correct loss is the total loss over all batches in a gradient accumulation step, divided by the total number of non-padding tokens in those batches. This is not the same as the average of the per-batch loss values. The fix is quite simple, as the following diff shows:

    def ForCausalLMLoss(logits, labels, vocab_size, **kwargs):
        # Upcast to float if we need to compute the loss, to avoid potential precision issues
        logits = logits.float()
        # Shift so that tokens < n predict n
        shift_logits = logits[..., :-1, :].contiguous()
        shift_labels = labels[..., 1:].contiguous()

        # Flatten the tokens
        shift_logits = shift_logits.view(-1, vocab_size)
        shift_labels = shift_labels.view(-1)
        # Enable model parallelism
        shift_labels = shift_labels.to(shift_logits.device)

        num_items = kwargs.pop("num_items", None)
    +    loss = nn.functional.cross_entropy(shift_logits, shift_labels, ignore_index=-100, reduction="sum")
    +    loss = loss / num_items
    -    loss = nn.functional.cross_entropy(shift_logits, shift_labels, ignore_index=-100)
        return loss
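To make the mismatch concrete, here is a small sketch in plain Python. The per-token loss values and the helper names are made up for illustration and are not part of Transformers; the point is only that averaging per-batch means differs from dividing the summed loss by the total token count whenever micro-batches hold different numbers of non-padding tokens.

```python
# Per-token losses for two micro-batches in one gradient accumulation step.
# Padding tokens are assumed to be excluded already; the values are invented.
micro_batches = [
    [2.0, 2.0, 2.0, 2.0, 2.0, 2.0],  # 6 non-padding tokens
    [8.0, 8.0],                      # 2 non-padding tokens
]


def mean_of_batch_means(batches):
    """Previous behavior: average each micro-batch, then average those averages."""
    per_batch = [sum(b) / len(b) for b in batches]
    return sum(per_batch) / len(per_batch)


def token_level_mean(batches):
    """Corrected behavior: sum every token loss, divide by the total token count."""
    num_items = sum(len(b) for b in batches)
    return sum(sum(b) for b in batches) / num_items


def full_batch_mean(batches):
    """Reference: the loss a single large batch holding all tokens would report."""
    flat = [x for b in batches for x in b]
    return sum(flat) / len(flat)


old = mean_of_batch_means(micro_batches)  # (2.0 + 8.0) / 2 = 5.0
new = token_level_mean(micro_batches)     # (12.0 + 16.0) / 8 = 3.5
ref = full_batch_mean(micro_batches)      # 28.0 / 8 = 3.5
```

Only the token-level version agrees with the full-batch reference. The short micro-batch is over-weighted when per-batch means are averaged.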

How we are fixing it

To address this issue, we are changing the way our models and training work in two ways:

1. If users are using the "default" loss functions, we will automatically take the necessary changes into account when gradient accumulation is used, so that the proper loss is reported and utilized. This fixes the core issue at hand.
2. To make sure that future issues with loss calculation do not block users, we will expose an API that lets users pass their own loss functions directly to the Trainer. This makes it easy to apply your own fix until we have fixed the issue internally and released a new version of Transformers.

All models that inherit from PreTrainedModel now have a loss_function property determined by one of the following:

config.loss_type: this lets anyone use their own custom loss. To do so, add an entry to LOSS_MAPPING:

    from torch import nn
    from transformers.loss.loss_utils import LOSS_MAPPING

    def my_super_loss(logits, labels):
        return nn.functional.cross_entropy(logits, labels, ignore_index=-100)

    LOSS_MAPPING["my_loss_type"] = my_super_loss
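The registry lookup described above can be pictured with a toy sketch in plain Python. Everything here is a stand-in: ToyConfig, ToyModel, the "default" key, and the fallback behavior are assumptions made for illustration, not the Transformers implementation.

```python
# Toy stand-ins only; none of these classes come from Transformers.
LOSS_MAPPING = {}


def default_loss(values):
    """Stand-in default: mean of the given loss values."""
    return sum(values) / len(values)


def my_super_loss(values):
    """Stand-in custom loss: plain sum of the given loss values."""
    return sum(values)


LOSS_MAPPING["default"] = default_loss          # hypothetical key
LOSS_MAPPING["my_loss_type"] = my_super_loss


class ToyConfig:
    def __init__(self, loss_type=None):
        self.loss_type = loss_type


class ToyModel:
    def __init__(self, config):
        self.config = config

    @property
    def loss_function(self):
        # Assumption of this sketch: fall back to the default loss when the
        # config names no loss type or one that is not registered.
        return LOSS_MAPPING.get(self.config.loss_type, LOSS_MAPPING["default"])


custom_model = ToyModel(ToyConfig(loss_type="my_loss_type"))
plain_model = ToyModel(ToyConfig())
```

With this layout, switching losses is a configuration change rather than a change to the modeling code.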

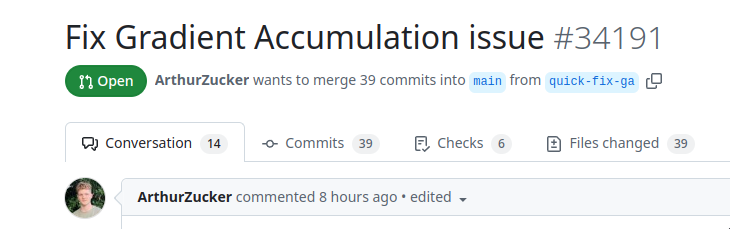

We are working to ship the first change for the most popular models in this PR: https://github.com/huggingface/transformers/pull/34191#pullrequestreview-2372725010. This will be followed by a call for contributions to propagate the change to the remaining models, so that the majority of them are supported by the next release.

We are also actively working to ship the second change in this PR: https://github.com/huggingface/transformers/pull/34198. It will allow users to supply their own loss function and to make use of the number of samples seen per batch to help with their loss calculation. As more models gain support from the first change, it will also perform the correct loss calculation during gradient accumulation.
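As a rough sketch of how a user-supplied loss could use such a count, the plain-Python example below counts the non-padding targets across one accumulation step and passes that number to each micro-batch's loss. The names count_items and my_loss, and the three-argument signature, are hypothetical and chosen for illustration; the hook that the Trainer exposes may look different.

```python
IGNORE_INDEX = -100  # label value marking padding positions, as in the loss above


def count_items(label_batches):
    """Number of non-padding target tokens across one accumulation step."""
    return sum(1 for labels in label_batches for y in labels if y != IGNORE_INDEX)


def my_loss(token_losses, labels, num_items_in_batch):
    """Hypothetical user loss: sum the valid token losses, then divide by the
    step-wide token count instead of this micro-batch's own count."""
    valid = [loss for loss, y in zip(token_losses, labels) if y != IGNORE_INDEX]
    return sum(valid) / num_items_in_batch


# Two micro-batches of already shifted and flattened labels, with invented
# per-token losses. The first micro-batch ends in two padding positions.
label_batches = [[5, 9, IGNORE_INDEX, IGNORE_INDEX], [7, 3, 4, 1]]
loss_batches = [[1.0, 3.0, 0.0, 0.0], [2.0, 2.0, 2.0, 2.0]]

num_items = count_items(label_batches)  # 2 + 4 valid tokens

# Accumulating the micro-batch losses gives the step-wide token-level mean.
accumulated = sum(
    my_loss(losses, labels, num_items)
    for losses, labels in zip(loss_batches, label_batches)
)
```

Because every micro-batch divides by the same step-wide count, simply adding the micro-batch losses (and their gradients) reproduces what a single large batch would compute.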

—

By tomorrow, you should expect the Trainer to behave correctly with gradient accumulation. Please install from main to benefit from the fix:

pip install git+https://github.com/huggingface/transformers

In general, we are very responsive to bug reports submitted to our issue tracker (https://github.com/huggingface/transformers/issues).

This issue has been in Transformers for a while, as it is mostly a default that the end user is expected to update. However, when defaults become non-intuitive, they are bound to change. In this instance, we updated the code and shipped the fix in less than 24 hours, which is what we aim for with issues like this one in Transformers. If you run into any issues, please submit them. This is the only way we can improve Transformers and make it fit your different use cases well.

The Transformers team 🤗