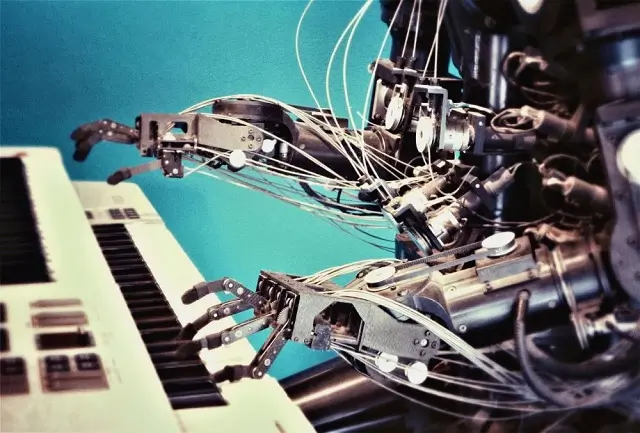

In today’s digital landscape, artificial intelligence has moved beyond simple text generation to embrace a more holistic approach to understanding our world. The latest frontier in AI development combines visual perception with language understanding to create systems that interpret images and text together, much as humans do. These multimodal AI systems, particularly vision language models (VLMs), are rapidly changing the way content is created, consumed, and interacted with.

AI evolution: From text only to multimodal

For years, AI systems operated in silos. Text models processed language, while computer vision systems analyzed images separately. The breakthrough came when researchers realized that combining these modalities could create more powerful, versatile AI systems that better mimic human cognition.

The journey from specialized AI to multimodal systems spans three generations:

- First generation: Simple text generators and basic image recognition.
- Second generation: Advanced language models such as GPT and image generators such as DALL-E.
- Current generation: Integrated systems that process text and images together.

This evolution has opened up possibilities for content creation that were previously unthinkable and has enabled more intuitive and creative applications.

How the vision language model works

At their core, vision language models combine two powerful neural network components:

- Vision encoder: This component processes visual information, identifying objects, scenes, and visual relationships.
- Language model: This component understands and generates human language.

When these components work together, they create a unified representation that connects visual elements to linguistic concepts. This integration allows AI to “see” images and “talk” about them coherently.
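To make the idea of a unified representation more concrete, here is a minimal sketch in Python. It assumes that a vision encoder and a language model have each already turned their inputs into vectors in the same space, and it matches an image to a caption by cosine similarity. The vectors, the names `image_embeddings` and `caption_embeddings`, and the helper `best_caption` are all made up for illustration; real systems learn embeddings with hundreds of dimensions from large datasets.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical, hand-written vectors standing in for vision encoder outputs.
image_embeddings = {
    "dog_photo": [0.9, 0.1, 0.2],
    "beach_photo": [0.1, 0.8, 0.3],
}

# Hypothetical vectors standing in for language model outputs in the same space.
caption_embeddings = {
    "a dog playing fetch": [0.8, 0.2, 0.1],
    "waves on a sandy beach": [0.2, 0.9, 0.2],
}


def best_caption(image_name):
    """Pick the caption whose vector points in the most similar direction."""
    image_vec = image_embeddings[image_name]
    return max(
        caption_embeddings,
        key=lambda caption: cosine_similarity(image_vec, caption_embeddings[caption]),
    )


print(best_caption("dog_photo"))
print(best_caption("beach_photo"))
```

Because both kinds of input live in one vector space, the same comparison can run in either direction: finding a caption for an image, or finding an image for a text query.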

The technical architecture usually involves transformers, the neural network design that revolutionized the way AI processes sequential data. These networks can attend to different parts of an image while connecting them to related language concepts.
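The attention mechanism behind this can be sketched in a few lines of plain Python. The example below is a simplified, single-head version of scaled dot-product attention in which one word acts as the query and three image patches supply the keys and values. The feature vectors and the names `attend`, `patch_keys`, `patch_values`, and `word_query` are illustrative assumptions, not taken from any particular model.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The context vector is the weighted average of the value vectors.
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context


# Hypothetical 2-dimensional features for three image patches.
patch_keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
patch_values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

# Hypothetical query vector for one word in the text.
word_query = [4.0, 0.0]

weights, context = attend(word_query, patch_keys, patch_values)
print(weights)  # the first patch receives the largest weight
print(context)
```

The weights show how strongly the word "looks at" each patch. Production models run many such attention heads in parallel over thousands of patches and tokens, with learned projections producing the queries, keys, and values.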

Transforming the creative industry

Multimodal AI is restructuring content creation across many industries.

Marketing and Advertising

Marketers now use vision language models to generate product descriptions from images, create targeted ad copy based on visual content, and design entire campaigns with consistent visual and textual elements. The technology can analyze existing visual brand assets and generate matching text that maintains the brand's voice and messaging.

Entertainment and Media

Film studios and game developers use multimodal AI to:

- Generate script ideas from concept art.
- Create storyboards from written descriptions.
- Develop character dialogue based on visual scenes.

These applications streamline the creative process while leaving creative control with human artists.

E-commerce and Retail

Online retailers employ vision language models to automatically generate product descriptions from photos, create virtual shopping assistants that can discuss products based on how they look, and build a more intuitive search experience in which customers find products by describing visual attributes in natural language.

Practical applications that transform content creation

The practical impact of multimodal AI on content creation is profound in several domains.

Automatic content generation

Modern content creators use vision language models to generate first drafts of articles from related images, create social media posts whose captions match their visuals, and develop multimedia presentations that keep a consistent theme across slides. With this automation, creators can focus on high-level strategy and creative direction.

Enhanced accessibility

One of the most valuable applications is making content more accessible. Vision language models can automatically generate detailed image descriptions for visually impaired users, create video captions that include visual context beyond the dialogue, and translate visual content across languages while preserving cultural context.

Personalized content experience

Brands now offer highly personalized content experiences by analyzing both visual preferences and text engagement. This capability allows them to tailor content to the preferences of individual users at scale, creating a more engaging and relevant experience.

Challenges and ethical considerations

Despite their transformative potential, vision language models face important challenges:

Bias and representation

Like all AI systems, multimodal models can perpetuate and amplify biases present in their training data. This is especially concerning when these biases affect the visual representation of people from different demographic groups.

Potential for misinformation

The ability to generate compelling text about images opens up the possibility of sophisticated misinformation. Fake news can be created by generating false but plausible explanations of real images or by creating images to match a fabricated narrative.

Copyright and Ownership

As these systems learn from existing creative works, questions about copyright, fair use, and creative ownership become increasingly complicated. When AI can analyze and reproduce visual and text styles, the line between inspiration and reproduction is blurred.

The future of multimodal AI in content creation

Looking ahead, several trends may shape the evolution of vision language models:

- Greater creative agency: Future systems could give human creators more control, acting as collaborative tools rather than replacement technologies.
- Improved context understanding: Next-generation models may better grasp the cultural, historical, and situational context of both visual and textual content.
- Cross-modal creativity: More systems may allow novel ways to translate concepts between modalities, such as generating music from images or architectural designs from narrative descriptions.

As vision language models continue to evolve, content creators who embrace these tools while maintaining human oversight and creative direction stand to benefit the most. The most successful approach may be a collaborative one in which AI capabilities enhance human creativity rather than replace it.

The fusion of vision and language in AI represents not only a technical achievement but also a fundamental change in the way content is created and consumed. By understanding both what we see and what we say about what we see, these systems bring us closer to AI that understands the world as we do.

As we navigate this new frontier, the challenge is to ensure that these powerful tools enhance human creativity and communication rather than diminish them. The future of content creation is not about choosing between humans and artificial intelligence, but about finding the best collaboration between them.

***