AI Ethics

What is AI Ethics?

AI ethics is the study and application of moral principles and guidelines that govern the design, development, and deployment of artificial intelligence systems. As AI technologies increasingly influence human life, from healthcare and finance to entertainment and governance, AI ethics aims to keep these advancements aligned with human values, fairness, and societal well-being.

Santa Claus is coming to town and bringing AI

Generate list and verify twice: Santa’s toughest job is to make a grand list of…

From rap battles to AI documentation: Top 6 PAI blog posts of 2024

As 2024 comes to a close, we’d like to take a look back at some…

Frederike Kaltheuner, Senior EU and Global Governance Officer at AI Now, will hold an expert consultation on the Guiding Principles on Business and Human Rights at the United Nations

On 28 November 2024, Frederike Kaltheuner, Head of EU and Global Governance, will provide…

PAI Community Priorities for 2025: Insights from the Partner Forum

For the third and final time this year, Partnership on AI brought together a cross-disciplinary…

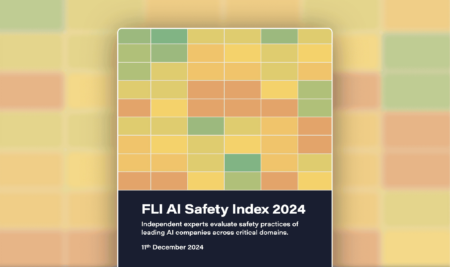

Announcing AI Safety Index – Future of Life Institute

For immediate release. December 11, 2024. Media contact: Chase Hardin, chase@futureoflife.org, +1 (623) 986-0161. Leading AI expert in external…

AI Generated Business: The Rise of AGI and the Rush to Find a Working Revenue Model

The Dream of AGI and the Fully Automated Organization: In sum, OpenAI was likely conceived…

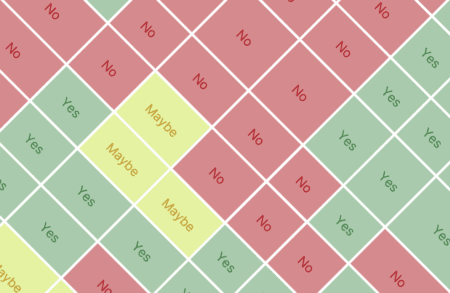

Will you be grateful or will you be mischievous? Test your AI spotting skills with this fun quiz

While news coverage has focused on the potential for harm and misinformation of AI-generated content,…

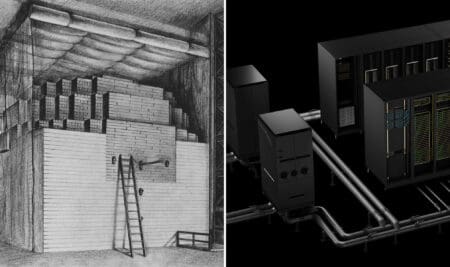

Max Tegmark on the AGI Manhattan Project

A new report by the U.S.-China Economic and Security Review Commission recommends that “Congress establish…

Developing general-purpose AI guidelines: What the EU can learn from PAI’s model deployment guidance

Foundation models, also known as general purpose AI, are large models trained on vast amounts…

Assessing deepfake proposals in Congress

As advances in AI systems accelerate, the creation and spread of deepfakes on the internet…