In recent years, AI models have become more capable, but they have also become much larger. The memory occupied by neural network weights is steadily growing, with some models reaching 500 billion or even trillions of parameters. Running inference on conventional computer architectures costs time and energy every time these weights are moved to perform a calculation. Analog in-memory computing, which unifies memory and computation, eliminates this bottleneck, saving time and energy while still delivering exceptional performance.

In a trio of new papers, IBM Research scientists introduce their work on scalable hardware: innovations in 3D analog in-memory architecture, compact edge-sized models, and algorithms that accelerate transformer attention.

According to a new study by an IBM Research team, chips based on analog in-memory computing are well suited to running cutting-edge mixture-of-experts (MoE) models and outperform GPUs on multiple measures. Their work, published in the journal Nature Computational Science, shows that each expert in an MoE network can be mapped onto a layer of 3D non-volatile memory in a brain-inspired chip architecture for 3D analog in-memory computing. Through large-scale numerical simulations and benchmarking, the team found that this mapping can achieve excellent throughput and energy efficiency when running MoE models.

Together with two other new papers from IBM Research, the study shows the promise of in-memory computing for running AI models built on the transformer architecture, in both edge and enterprise cloud applications. And, according to these new papers, the time to move this experimental technology out of the lab is approaching.

“Taking analog in-memory computing into the third dimension makes it possible to store the parameters of large transformer models entirely on-chip,” said IBM Research scientist Julian Büchel, lead author of the MoE paper, whose team demonstrated that the approach is useful. With 3D analog in-memory computing tiles, the “experts” of an MoE are stacked on top of one another.

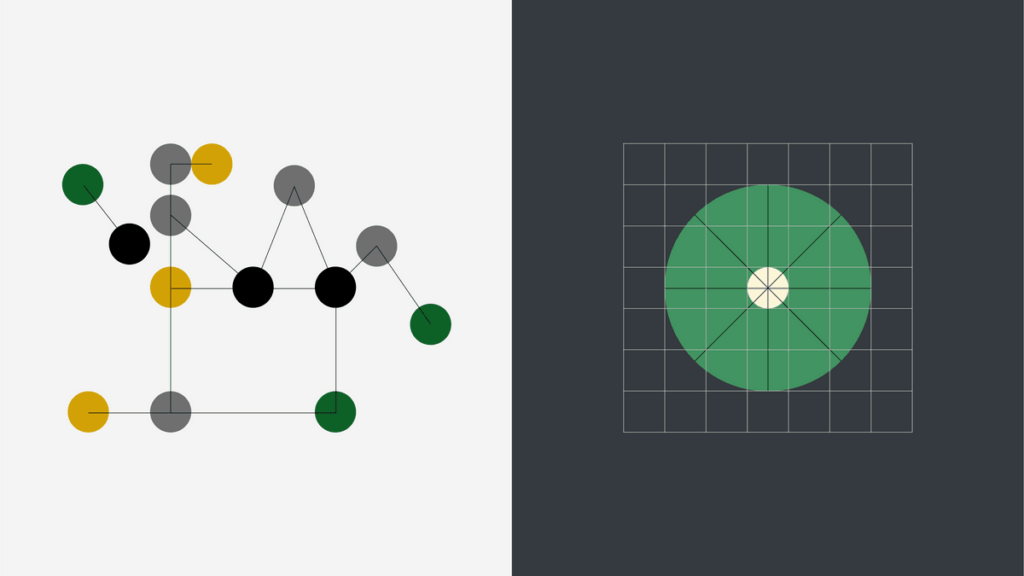

MoE models split certain layers of a neural network into smaller layers. Each of these smaller layers is called an “expert,” a reference to the fact that it specializes in processing a subset of the data. When an input arrives, a routing layer decides which expert (or experts) it should be sent to. When the team ran two standard MoE models in a performance simulation tool, the simulated hardware outperformed state-of-the-art GPUs.
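
To make the routing step concrete, here is a minimal sketch of top-k expert routing in plain Python. It is not taken from the paper: the softmax router, the choice of `top_k=2`, and the simplification of each expert to a single weight matrix are all assumptions made for illustration, and every name (`route`, `moe_layer`, `router_weights`) is hypothetical.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def route(x, router_weights, top_k):
    """Return [(expert_index, gate_weight), ...] for the top_k experts."""
    probs = softmax(matvec(router_weights, x))
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in chosen]

def moe_layer(x, router_weights, experts, top_k=2):
    """Weighted sum of the outputs of only the selected experts."""
    out = [0.0] * len(experts[0])
    for idx, gate in route(x, router_weights, top_k):
        y = matvec(experts[idx], x)  # only this expert's weights are used
        out = [o + gate * v for o, v in zip(out, y)]
    return out

# Illustrative sizes and random weights (assumed, not from the paper).
rng = random.Random(0)
dim, num_experts = 4, 8
router_weights = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
experts = [[[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
           for _ in range(num_experts)]
x = [rng.gauss(0, 1) for _ in range(dim)]

selected = route(x, router_weights, top_k=2)
y = moe_layer(x, router_weights, experts, top_k=2)
print(selected, y)
```

In this sketch only two of the eight experts contribute to each output, which is the property that lets a large model run with the compute of a much smaller one.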

“That way, you can scale neural networks much better and deploy a large, very capable neural network with a much smaller compute footprint,” said IBM Research scientist Abu Sebastian, who led the work. “As you can imagine, it also minimizes the amount of computation required for inference.” Granite 1B and 3B use this model architecture to reduce latency.

In the new study, the team used simulated hardware to map the layers of MoE networks onto analog in-memory computing tiles, each comprising multiple vertically stacked tiers. These tiers, which hold the model’s weights, can be accessed individually. In the paper, the team likens the tiers to a high-rise office building whose floors house different experts who can be called on as needed.
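
The office-building picture can be sketched as a small data structure. The placement rule below (expert `e` goes to tile `e // tiers_per_tile`, tier `e % tiers_per_tile`) is an assumption chosen for simplicity, not the mapping used in the paper, and `Tile3D` and `place_experts` are hypothetical names.

```python
from dataclasses import dataclass, field

@dataclass
class Tile3D:
    """Hypothetical 3D tile: a vertical stack of tiers, one weight matrix per tier."""
    num_tiers: int
    tiers: dict = field(default_factory=dict)  # tier index -> weight matrix

    def program(self, tier, weights):
        """Store a weight matrix on one tier of the stack."""
        if not 0 <= tier < self.num_tiers:
            raise IndexError("tier out of range")
        self.tiers[tier] = weights

    def matvec(self, tier, x):
        """Select a single tier and multiply its stored weights by x."""
        return [sum(w * v for w, v in zip(row, x)) for row in self.tiers[tier]]

def place_experts(experts, tiers_per_tile):
    """Assumed placement: fill each tile's tiers in order, one expert per tier."""
    num_tiles = -(-len(experts) // tiers_per_tile)  # ceiling division
    tiles = [Tile3D(tiers_per_tile) for _ in range(num_tiles)]
    placement = {}
    for e, weights in enumerate(experts):
        tile, tier = divmod(e, tiers_per_tile)
        tiles[tile].program(tier, weights)
        placement[e] = (tile, tier)
    return tiles, placement

# Six toy experts; expert e simply scales its input by e.
experts = [[[float(e), 0.0], [0.0, float(e)]] for e in range(6)]
tiles, placement = place_experts(experts, tiers_per_tile=4)

tile, tier = placement[5]          # look up which "floor" holds expert 5
result = tiles[tile].matvec(tier, [1.0, 2.0])
print(placement[5], result)
```

Calling an expert then amounts to looking up its floor and activating only that tier, while the other tiers in the stack stay idle.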

Stacking expert layers onto distinct tiers is intuitive, but what matters is the result of this strategy. In simulation, the 3D analog in-memory computing architecture achieved higher throughput when running an MoE model than a commercially available GPU running the same model. The advantage was greatest in energy efficiency, because GPUs spend a lot of time and energy moving model weights between memory and compute. That problem does not exist in analog in-memory computing architectures.

According to IBM Research scientist Hsinyu (Sidney) Tsai, this is an important step toward maturing 3D analog in-memory computing, which could ultimately accelerate enterprise AI computing in cloud environments.

The second paper the team worked on was an accelerator architecture study presented in an invited talk at the IEEE International Electron Devices Meeting in December. It demonstrated the feasibility of performing AI inference in edge applications using ultra-low-power devices. Phase-change memory (PCM) devices store model weights in the conductivity of a piece of chalcogenide glass. When more voltage is applied across the glass, it is rearranged from a crystalline to an amorphous solid. This lowers its conductivity, which changes the value used in matrix-vector multiplication operations. PCM for analog in-memory computing brings transformers to the edge efficiently. The team’s analysis shows that their neural processing units deliver competitive throughput on transformer models, with the expected energy benefits.
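
The idea that a conductance can stand in for a weight can be sketched as an idealized crossbar multiplication: inputs are applied as voltages, each device contributes a current equal to conductance times voltage, and the currents on a shared line add up. The example below is a noise-free illustration only. Representing signed weights as a pair of conductances and the `G_MAX` value are assumptions, not details from the paper, and all names are hypothetical.

```python
G_MAX = 25e-6  # assumed maximum device conductance in siemens (illustrative)

def weights_to_conductances(weights):
    """Map each weight to a (G_plus, G_minus) pair scaled into [0, G_MAX]."""
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = G_MAX / w_max
    g_plus = [[max(w, 0.0) * scale for w in row] for row in weights]
    g_minus = [[max(-w, 0.0) * scale for w in row] for row in weights]
    return g_plus, g_minus, scale

def crossbar_matvec(g_plus, g_minus, voltages):
    """Each output current is the sum of G * V along one line (Ohm and Kirchhoff)."""
    currents = []
    for row_p, row_m in zip(g_plus, g_minus):
        i_plus = sum(g * v for g, v in zip(row_p, voltages))
        i_minus = sum(g * v for g, v in zip(row_m, voltages))
        currents.append(i_plus - i_minus)  # difference encodes signed weights
    return currents

weights = [[0.5, -1.0], [2.0, 0.25]]
voltages = [0.2, 0.1]

g_plus, g_minus, scale = weights_to_conductances(weights)
currents = crossbar_matvec(g_plus, g_minus, voltages)
recovered = [i / scale for i in currents]  # rescale currents back to weight units
print(recovered)
```

Because the multiplication happens where the weights are stored, nothing in this sketch moves the weight matrix; only the input voltages and output currents cross the array boundary.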

“Edge devices have energy constraints, cost constraints, area constraints, and flexibility constraints,” said IBM Research scientist Irem Boybat. “So we proposed this architecture to address these requirements of edge AI.” She and her colleagues outlined a neural processing unit that combines PCM-based analog accelerator nodes with digital accelerator nodes.

Boybat said this flexible architecture can run a variety of neural networks on these devices. For the purposes of this paper, she and her colleagues explored a transformer model called MobileBERT, which is tailored to mobile devices. According to the team’s own throughput benchmark, the proposed neural processing unit outperformed an existing low-cost accelerator on the market, and on a MobileBERT inference benchmark its performance approached that of higher-end devices.

This work is a step toward a future in which all the weights of an AI model are stored on-chip and analog in-memory computing devices may be mass-produced. Such devices could form the basis of microcontrollers that support AI inference in edge applications, such as cameras and sensors for autonomous vehicles.

Last but not least, researchers outlined the first deployment of a transformer architecture on an analog in-memory computing chip that covers every matrix-vector multiplication involving static model weights. Compared with a scenario in which all operations run in floating point, it performed within 2% on a benchmark called Long Range Arena, which tests accuracy on long sequences. The results appeared in Nature Machine Intelligence.

In the bigger picture, these experiments showed that analog in-memory computing can accelerate the attention mechanism, according to IBM Research scientist Manuel Le Gallo-Bourdeau. “Attention compute in transformers must be done, and it’s not easy to accelerate with analog,” he added. The obstacle lies in the values the attention mechanism has to compute. Because they change dynamically, they would require constant reprogramming of the analog devices, which is impractical in terms of energy and endurance.

To overcome this barrier, they used a mathematical technique called kernel approximation, run on an experimental analog chip. The team noted that this development is important because this circuit architecture was previously believed to handle only linear functions. The chip uses a brain-inspired design that stores model weights in phase-change memory devices arranged in a crossbar array.

“Attention compute is a nonlinear function, a very unpleasant mathematical operation for any AI accelerator, but especially for analog in-memory computing accelerators,” says Sebastian. “But this proves that we can do it with this trick, and that we can improve the efficiency of the whole system.”

This trick, kernel approximation, uses randomly sampled vectors to project the input into a higher-dimensional space and then computes the dot product in that space, which gets around the need for the nonlinear function. Kernel approximation is a general technique that applies to a variety of scenarios, not only to systems that use analog in-memory computing, but it happens to work very well for that purpose.
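
As a rough illustration of the general idea, the sketch below uses random Fourier features, one textbook form of kernel approximation, to estimate a Gaussian kernel with a dot product of randomly projected inputs. This is a swapped-in example and not necessarily the scheme used in the paper: the Gaussian kernel, the cosine feature map, the feature count, and all names are assumptions made for illustration.

```python
import math
import random

def sample_features(dim, num_features, sigma, rng):
    """Draw the projection once: rows w ~ N(0, 1/sigma^2), phases b ~ U[0, 2*pi)."""
    w = [[rng.gauss(0.0, 1.0 / sigma) for _ in range(dim)]
         for _ in range(num_features)]
    b = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
    return w, b

def feature_map(x, w, b):
    """Project x with a fixed linear map, then apply an elementwise cosine."""
    scale = math.sqrt(2.0 / len(w))
    return [scale * math.cos(sum(wi * xi for wi, xi in zip(row, x)) + bi)
            for row, bi in zip(w, b)]

def gaussian_kernel(x, y, sigma):
    """Exact kernel value, used here only to check the approximation."""
    sq = sum((a - c) ** 2 for a, c in zip(x, y))
    return math.exp(-sq / (2.0 * sigma ** 2))

rng = random.Random(0)
w, b = sample_features(dim=4, num_features=2000, sigma=1.0, rng=rng)

x = [0.3, -0.1, 0.5, 0.2]
y = [0.1, 0.4, -0.2, 0.0]

exact = gaussian_kernel(x, y, sigma=1.0)
# The nonlinear kernel is replaced by a dot product in the 2000-dimensional space.
approx = sum(p * q for p, q in zip(feature_map(x, w, b), feature_map(y, w, b)))
print(exact, approx)
```

In this sketch the sampled vectors are drawn once and then reused for every input, so the bulk of the work is a multiplication by a fixed matrix followed by a dot product. The estimate gets closer to the exact kernel value as `num_features` grows.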

“These papers offer important breakthroughs toward a future in which modern AI workloads can run both in the cloud and at the edge,” commented IBM Fellow Vijay Narayanan.