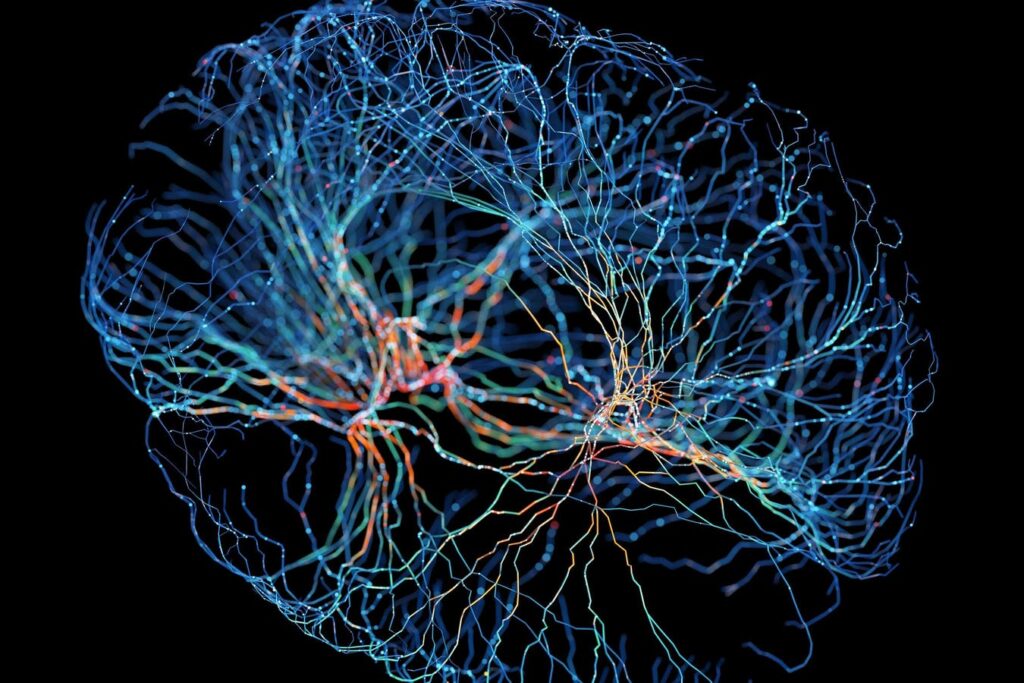

Neuron system with a glowing connection on a black background

Getty

Ask 10 people how large language models work, and you might get 10 answers. Or you might actually get only two or three. The remaining respondents will say, “I don’t know, don’t ask me,” lamenting the general lack of understanding of the “guts” of these sprawling systems.

It is rare to encounter a technology this ubiquitous while acknowledging that most people really do not know how it works. In fairness, though, the stage was already set for this kind of knowledge gap in the tech world. Few people could have explained what the cloud was 10 or 15 years ago. As for crypto, most people would still hate to have to explain the blockchain in any detail.

However, AI is different. The stakes are different, and so is the impact on our society and our private lives. So it helps to know how AI agents, LLMs, and neural nets make decisions and process what is around them.

I’ll go over some of the basic elements I hear people talking about, using some specific quotes from the famous computer scientist Yann LeCun. LeCun has had some very important things to say about the actual state of AI development and what it means for our world.

A collection of computers

This is the first main idea that people close to the industry have been presenting to audiences over the past few years.

In a sense, it is a caveat on the rapid emergence of AI as a kind of stand-in for humans. It is a general qualifier on the idea that nothing stands in the way of AI becoming more human over time.

If you look at the results from big new models like OpenAI o1 and the latest DeepSeek models, you might think that AI can vault over the bar of human intelligence simply by adding more compute and more tokens.

But according to some of the best theorists, that would be wrong, because true intelligence is a collection of systems that cooperate to produce accurate and robust results.

In Marvin Minsky’s “The Society of Mind”, the famous theorist makes a wide variety of statements about artificial intelligence in contrast to the human mind. The human mind is not one computer, but many small interconnected agents that make up a “society of mind”, with all of the individual components cooperating toward a greater result.

Think about it: people in a society work together toward a shared purpose. That is the model Minsky offers. Intelligence is not a single, very powerful supercomputer that becomes godlike, but a “village” of interconnected agents or modules, much like living cells.

Yann LeCun echoes this when commenting on the development of LLMs.

“We are nowhere near being able to reproduce the kind of intelligence that we can observe, not in humans, but in animals,” he says. “Intelligence is not just a linear thing, where there is a barrier with human intelligence on one side and superintelligence beyond it. It’s not that at all. It’s a collection of skills, and the ability to acquire new skills very quickly, and the ability to solve problems without actually learning anything.”

When you start thinking about AI this way, it changes how you frame it, and that makes a big difference.

Memory and context

LeCun also suggests that “persistent memory” is needed for these systems to be powerful. That is something we have heard over and over again from experts working on new AI models.

You can talk about this in terms of memory or in terms of context, for example the context window a system uses to keep track of what it is working on.

To that end, let’s talk about two types of memory: parametric memory and working memory.

These two types can be mapped to the two kinds of human memory: long-term and short-term. Parametric memory is the long-term memory, that is, the historical context and knowledge the machine has retained in its parameters over the course of training. Working memory is the short-term memory. It basically corresponds to the context window and the contextual cues the machine takes in in real time. (For details, see this resource from the Association of Data Scientists.)

Both have their own importance in an AI system, just as they do in the human brain. Neurologists talk about the differences between long-term and short-term memory in humans. Theorists should likewise talk about the difference between an AI’s parametric memory and its working memory.
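To make the distinction concrete, here is a deliberately simplified Python sketch. It is an illustration under loud assumptions, not how any real model is built: the `ToyAssistant` class, its dictionary of “parametric” facts, and the three-message context window are all hypothetical. Real LLMs hold parametric knowledge in numeric weights, not in a lookup table.

```python
from collections import deque


class ToyAssistant:
    """Toy illustration only: two kinds of memory with different lifetimes."""

    def __init__(self, parametric_knowledge, context_window=3):
        # "Parametric memory": fixed at construction (standing in for training)
        # and never changed by the conversation.
        self._parametric = dict(parametric_knowledge)
        # "Working memory": a bounded context window. When it is full,
        # the oldest message silently drops off.
        self.context = deque(maxlen=context_window)

    def observe(self, message):
        """Add a message to the context window."""
        self.context.append(message)

    def recall(self, key):
        """Look in the context window first (newest first), then in parametric memory."""
        for message in reversed(self.context):
            if key in message:
                return f"context: {message}"
        if key in self._parametric:
            return f"parametric: {self._parametric[key]}"
        return None


assistant = ToyAssistant({"Paris": "capital of France"}, context_window=2)
assistant.observe("my name is Ada")
print(assistant.recall("Ada"))    # found in the context window
assistant.observe("first unrelated message")
assistant.observe("second unrelated message")
print(assistant.recall("Ada"))    # forgotten: it fell out of the window
print(assistant.recall("Paris"))  # still available from parametric memory
```

The point of the sketch is the difference in lifetime: whatever sits in the window disappears once enough new messages arrive, while the parametric facts stay put until the model is rebuilt.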

New framework

If, as Minsky and LeCun point out, the human mind is more elaborate than a single supercomputer, what is the takeaway?

“We need a completely new architecture,” LeCun says. “It’s not going to happen with LLMs. It’s not going to happen with anything we have so far. We need to have common sense … If you give an LLM a standard puzzle, it will just regurgitate the answer.”

One of his proposals is the emergence of what are called “world models”, with which AI begins to build the context it needs and becomes more aware, the way humans are.

“What if you have some idea of the state of the world, and you imagine an action you might take?” he says. “Can you predict the next state of the world from this action? … If you have such a system, you may be able to predict what a sequence of actions will produce, and find a sequence of actions that satisfies a task, an objective. That’s what we’re working on … and maybe there will be some concrete results in the wider world within a few years. It is almost always harder than we think.”
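The loop LeCun describes (imagine an action, predict the next state, then look for a sequence of actions that meets an objective) can be sketched in a few lines of Python. This is a toy under stated assumptions, not LeCun’s architecture: the one-dimensional world, the `world_model` function, and the brute-force `plan` search are all hypothetical, and the model here is hand-written, whereas the research goal is a model learned from observation.

```python
from itertools import product

# Hypothetical toy world: the state is an integer position on a line.
ACTIONS = {"left": -1, "right": +1, "stay": 0}


def world_model(state, action):
    """Predict the next state of the world from the current state and an action."""
    return state + ACTIONS[action]


def rollout(state, actions):
    """Imagine taking a whole sequence of actions without acting in the real world."""
    for action in actions:
        state = world_model(state, action)
    return state


def plan(start, goal, max_steps=5):
    """Return the shortest action sequence whose imagined outcome reaches the goal.

    Brute-force search over all sequences up to max_steps; returns None if
    no sequence within that horizon satisfies the objective.
    """
    for length in range(max_steps + 1):
        for candidate in product(ACTIONS, repeat=length):
            if rollout(start, candidate) == goal:
                return list(candidate)
    return None


print(plan(0, 2))                 # ['right', 'right']
print(plan(3, 1))                 # ['left', 'left']
print(plan(0, 10, max_steps=3))   # None: the goal is out of reach in 3 steps
```

Exhaustive search only works because this world is tiny. The design point is the separation of concerns: the model predicts, and a separate planner searches over imagined futures before anything is done for real.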

Open source model advantage

This piece would not be complete without mentioning LeCun’s recent statements about open-source models.

The business world (and the investment world) has been rattled by the new announcements from DeepSeek, a leading open-source AI model that has upended the plans of US companies like Meta. However, instead of framing it as “the United States versus China,” LeCun urges us to see it as a story about open source itself. That also helps set our expectations for what will happen over the rest of this year.

Keep these frameworks in mind when thinking about AI research going forward.