Over the past decade, we have laid the foundations for the modern AI era, from pioneering the Transformer architecture on which all major language models are based to developing agent systems that can learn and plan, such as AlphaGo and AlphaZero.

We have applied these techniques to make breakthroughs in quantum computing, mathematics, life sciences and algorithm discovery. And we continue to double down on the breadth and depth of our fundamental research, working to invent the next big breakthroughs needed for artificial general intelligence (AGI).

This is why we are working to extend Gemini 2.5 Pro, our best multimodal foundation model, to become a “world model” that can make plans and imagine new experiences by understanding and simulating aspects of the world, just as the brain does.

I’ve long been working in this direction, starting with our pioneering work training agents to master complex games like Go and StarCraft. That line of work has led to Genie 2, which can generate interactive 3D simulated environments from a single image prompt.

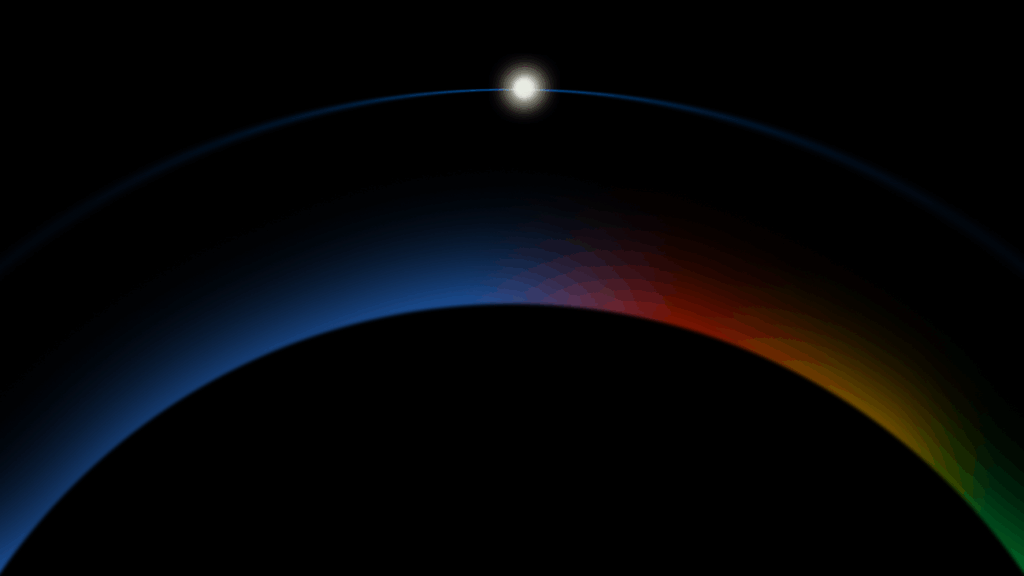

Already, we can see evidence of these capabilities emerging in Gemini’s ability to use its world knowledge and reasoning to represent and simulate natural environments, in Veo’s intuitive understanding of physics, and in the way Gemini Robotics teaches robots to grasp, follow instructions, and adjust on the fly.

Making Gemini a world model is a critical step in developing a new, more general and more useful kind of AI: a universal AI assistant. This is an AI that is intelligent, understands the context you are in, and can plan and take action on your behalf on any device.