![]()

TL;DR: Keeps the base model warm, allowing fast LORA inference to multiple users while replacing stable diffusion LORA adapters with each user request. This can be experienced by browsing the LORA catalog and playing with the inference widget.

In this blog, we will explain in detail how we achieved that.

Based on the public diffusion model, we were able to significantly speed up the reasoning of public Lora hubs. This allowed us to save computing resources and provide a faster and better user experience.

There are two steps to perform inference on a particular model.

Warm-up phase – consists of model download and service setup (25 seconds). Next, the inference job itself (10S).

The improvement has reduced the warm-up time from 25 seconds to 3 seconds. Now we can provide inferences for hundreds of different LORAs below 5 A10g GPUs, but the response time to user requests has been reduced from 35 seconds to 13 seconds.

Let’s take advantage of some of the latest features developed in the Diffusers Library to provide many different LORAs in a dynamic way with one service.

Lola

LORA is a fine-tuning technique that belongs to a family of “parameter-efficient” (PEFT) methods, which attempts to reduce the number of trainable parameters affected by the fine-tuning process. Increases the tweak speed while reducing the size of the finely tuned checkpoints.

Instead of performing small changes to all the weights to fine-tune the model, it freezes most layers and trains only a few specific layers in the attention block. Additionally, we will not touch the parameters of these layers by adding the product of two small matrices to the original weights. These small matrices are matrices that have been saved to disk, with weights updated during the fine-tuning process. This means that the original parameters of all models are saved, and you can load the LORA weights up using the adaptation method.

The name of Lora (low rank adaptation) comes from the small matrix we mentioned. For more information about this method, see this post or the original paper.

The above diagram shows two small orange matrices stored as part of a Lora adapter. Later you can load the LORA adapter and merge it with the blue base model to get the yellow tweak model. Importantly, you can also unload the adapter so you can always return it to your original base model.

In other words, the LORA adapter is like a base model add-on that can be added and removed on demand. Also, A and B are small, so they are very light compared to the model size. So loading is much faster than loading the entire base model.

For example, looking inside the Stable Diffusion XL base 1.0 model repository, which is widely used as the base model for many LORA adapters, we can see that its size is approximately 7 GB. However, a typical Lora adapter like this takes up only 24 MB of space!

There are far fewer blue base models on the hub than the yellow base models. There are ways to quickly go from blue to yellow, and vice versa, as well as many clear yellow models with several different blue developments.

For a more thorough presentation on what LORA is, see the following blog post. Use LORA to make efficient, stable diffusion fine-tuning or refer to the original paper directly.

advantage

The hub has around 2500 different public loras. The majority (~92%) of them are Lora based on the stable diffusion XL base 1.0 model.

Before this reciprocalization, this meant deploying dedicated services to all of them (for example, for all the yellow merged matrices in the diagram above). Release + Reservations for at least one new GPU. It takes about 25 seconds to prepare to generate services and provide requests for a particular model. In addition to this, there is inference time (using 25 inference steps in A10G for 1024×1024 SDXL inference diffusion). If an adapter is occasionally requested, the service will be stopped on free resources that others will preempt.

If you were asking for a less popular Lora, even if it was based on SDXL models like most of the adapters found in the hub so far, you would have needed 35 to warm it up and get a response on your first request (it was taking inference time like 10S, for example).

Currently: Requests have been reduced from 35 seconds to 13 seconds as the adapter uses only a few different “blue” base models (such as two important models). Even if the adapter isn’t that popular, its “blue” service may already be warming up. In other words, there is a good chance that you will avoid a 25-second warm-up time without frequently requesting a model. The blue model has already been downloaded and ready. Simply unload the old adapter and load the new adapter.

Overall, this means fewer GPUs to serve all different models, despite already having a way to share GPUs across deployments to maximize computational usage. In a two-minute time frame, approximately 10 different Lora weights are requested. Instead of laying out 10 deployments and keeping them warm, it offers all 1-2 GPUs (or more if there is a request burst).

implementation

LORA reciprocation has been implemented in the inference API. When a request is performed on a model available on the platform, it is first determined whether this is LORA or not. It then has the ability to identify the base model of LORA, route requests to a common backend farm, and provide requests for the above model. Inference requests are provided by keeping the base model warm and loading/unloading LORA on the spot. In this way, you can ultimately reuse the same computational resources to provide many different models at once.

Rora Structure

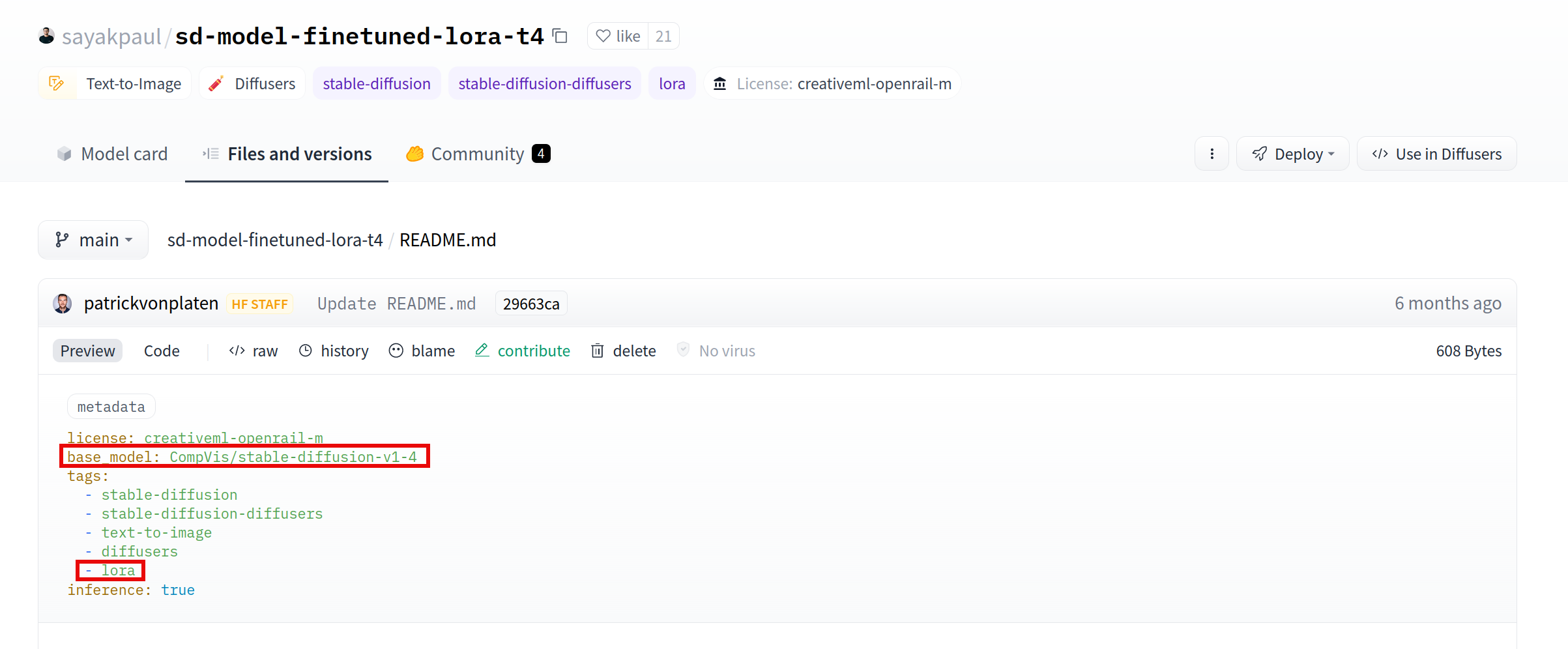

The hub allows you to identify a Lora with two attributes.

LORA has a base_model attribute. This is simply a model where Lora was built and should be applied when performing inference.

Because it’s not just models with such attributes (there are one duplicate models), LORA tags must be properly identified in LORA.

Diffusers LORA Road/Off Road 🧨

Note that there is a more unseen way to use the PEFT library to run it in the same way as presented in this section. See the documentation for more information. The principle remains the same as below (moving from the blue box to the blue box in the illustration above, or from the yellow one)

Four functions are used in the Diffusers library to load and unload different Lora weights.

Load and fuse weights with load_lora_weights and fuse_lora main layers. Note that merging weights with the main model before performing inference can reduce inference time by 30%.

To unload_lora_weights and unfuse_lora.

Here is an example of how to leverage the Diffusers library to quickly load some Lora weights onto the base model:

Import torch

from Diffuser Import (autoencoderkl, diffusionpipeline,)

Import Time base = “stabilityai/stable-diffusion-xl-base-1.0”

adapter1 = ‘nerijs/pixel-art-xl’

weightname1 = ‘pixel-art-xl.safetensors’

adapter2 = ‘minimaxir/sdxl-wrong-lora’

weightname2 = none

Input = “elephant”

kwargs = {}

if torch.cuda.is_available():kwargs(“torch_dtype”) = torch.float16 start = time.time() vae = autoencoderkl.from_pretrained(

“Madebyollin/sdxl-vae-fp16-fix”torch_dtype = torch.float16,) kwargs(“Vae”) = Vae Kwargs (“Multibody”)= “FP16”

Model = diffusionPipeline.from_pretrained(base, **kwargs)

if torch.cuda.is_available(): model.o(“cuda”)Elapsed = time.time() – start

printing(f “The base model has been loaded and has passed {Elapsed:.2f} Seconds”))

def inference(Adapter, weightName): start = time.time() model.load_lora_weights(adapter, weight_name = weightname) model.fuse_lora() elapsed = time.time() – start

printing(f”lora adapter loaded and fused to main model and passed {Elapsed:.2f} Seconds”)start = time.time() data = model(inputs, num_inference_steps =twenty five).images(0)Elapsed = time.time() – start

printing(f “Inference time, passing {Elapsed:.2f} Seconds”)start = time.time() model.unfuse_lora() model.unload_lora_weights() elapsed = time.time() – start

printing(f”lora adapters are excluded/unloaded from the base model {Elapsed:.2f} Seconds”) Inference (adapter1, weightname1) Inference (adapter2, weightname2)

Loading numbers

All the following numbers are in seconds:

Loading GPU T4 A10G Base Model – Uncached 20 20 Base Model Loading – CACHED 5.95 4.09 Adapter 1 Load 3.07 3.46 Adapter 1 Adapter 1.71 Adapter 2 Load 1.44 2.71 Adapter 2 0.19 0.13 Inference Time 20.7 8.58.58.5.5

With 2-4 seconds added per inference, many different loras can be provided. However, with the A10G GPU, inference time is significantly reduced while the adapter load time does not change much, making Lora load/unloading relatively expensive.

Serving Requests

Use this open source community image to provide inference requests

You can find the aforementioned mechanism used in the TextToImagePipeline class.

Once Lora is requested, look at what’s loaded and modify it as needed, and performs inference as usual. This allows you to provide requests for many different adapters from the base model.

Below is an example of how to test and request this image:

$git clone https://github.com/huggingface/api-inference-community.git $cd api-inference-community/docker_images/diffusers $docker build -tTest: 1.0 -f dockerfile. $ cat> /tmp /env_file

Next, on another terminal, perform a request to the base model and/or other Rolla adapters that are located in the HF hub.

# Request for base model $ curl 0:8888 -d ‘{“inputs”: “elephant”, “parameters”: {“num_inference_steps”: 20}’> /tmp/base.jpg “elephant”, “parameters”: {“num_inference_steps”: 20}}’ > /tmp/adapter1.jpg # Request another one $ curl -H ‘lora: nerijs/pixel-art-xl’ 0:8888 -d ‘{“inputs”: “elephant”, “parameters”: {“num_inference_steps”: 20}}’> /tmp/adapter2.jpg

How about the batch?

Recently, a very interesting paper has emerged that explains how to increase throughput by performing batch inference on a LORA model. In short, all inference requests are collected in batches, calculations related to the common base model are performed at once, and the remaining adapter-specific products are calculated. We did not implement such a technique (close to the approach adopted in LLMS text-generated inference). Instead, they stuck to a single sequential inference request. The reason for this is because we observed that batching is not of interest to the diffuser. Throughput does not increase significantly in batch size. The simple image generation benchmark we ran only increased by 25% with a batch size of 8 in exchange for a 6x increase in latency! In comparison, batches are much more interesting for LLMS. This is because you get 8x sequential throughput with just a 10% increase in latency. This is why we didn’t implement batching of the diffuser.

Conclusion: Time!

I was able to use dynamic LORA loads to store computing resources and improve the user experience with the hub inference API. The inference time response is much shorter due to the fact that the serving process is often already running despite the extra time added by the process of unloading previously loaded adapters and loading the adapters of interest.

Note that in order for Lora to benefit from this inference optimization, it must be based on a non-exposed, uncoordinated and ungated public model. Please let us know if you apply the same method to your deployment!