The Indian Institute of Science (IISc) and ArtPark are partnering with Hugging Face to help developers around the world access Vaani, India’s most diverse open-source, multimodal, multilingual dataset. Both organizations share a commitment to building inclusive, accessible, cutting-edge AI technologies that celebrate linguistic and cultural diversity.

Partnership

The partnership between Hugging Face and IISc/ArtPark aims to make the Vaani dataset more accessible and easier to use, supporting a better understanding of India’s diverse languages and the development of AI systems that meet people’s digital needs.

About the Vaani dataset

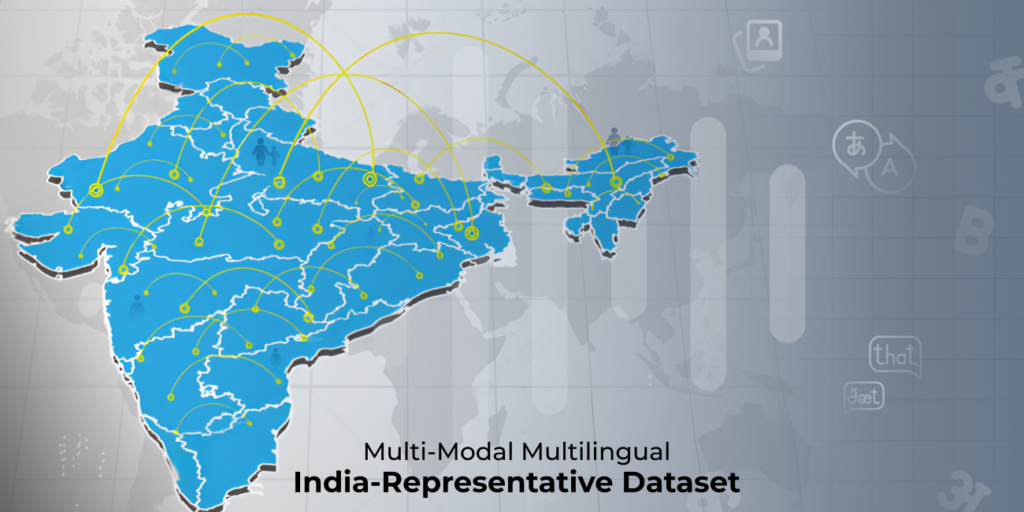

Launched in 2022 by IISc/ArtPark and Google, Project Vaani is a pioneering initiative to create an open-source multimodal dataset that truly represents the diversity of Indian languages. The dataset is distinctive for its geography-centric approach: data is collected district by district, which captures dialects and languages spoken in remote regions rather than focusing solely on mainstream languages.

Vaani aims to collect over 150,000 hours of speech and 15,000 hours of transcribed text data from 1 million people across all 773 districts, ensuring diversity in language, dialect, and demographics.
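To make these targets concrete, the short sketch below derives a few averages from the figures quoted above. It assumes the 15,000 transcribed hours are a subset of the 150,000 speech hours and that hours are spread evenly across speakers and districts, which real collection will not match exactly. The variable names are illustrative.

```python
# Target figures as quoted in this post.
TARGET_SPEECH_HOURS = 150_000
TARGET_TRANSCRIBED_HOURS = 15_000
TARGET_SPEAKERS = 1_000_000
TOTAL_DISTRICTS = 773

# Average speech per speaker, in minutes (assumes an even spread).
minutes_per_speaker = TARGET_SPEECH_HOURS * 60 / TARGET_SPEAKERS

# Share of the speech that would carry transcriptions
# (assumes transcribed hours are a subset of the speech hours).
transcribed_fraction = TARGET_TRANSCRIBED_HOURS / TARGET_SPEECH_HOURS

# Average speech per district, in hours (assumes an even spread).
hours_per_district = TARGET_SPEECH_HOURS / TOTAL_DISTRICTS

print(f"{minutes_per_speaker:.0f} minutes per speaker")
print(f"{transcribed_fraction:.0%} of speech transcribed")
print(f"{hours_per_district:.0f} hours per district")
```

Under these assumptions the targets work out to roughly 9 minutes of speech per speaker, with about one hour in ten transcribed.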

The dataset is being built in phases. Phase 1, covering 80 districts, has already been open-sourced. Phase 2 is currently underway and expands the dataset to 100 more districts, further extending Vaani's reach and impact across India’s diverse linguistic landscape.

Key highlights of the Vaani dataset open-sourced so far (as of 15-02-2025):

Language distribution in districts

The Vaani dataset shows a rich distribution of languages across Indian districts, highlighting language diversity at the regional level. This information is valuable to researchers, AI developers, and language-technology innovators who want to build speech models tailored to a particular region or dialect. For a more detailed district-wise language distribution, visit the Vaani dataset on Hugging Face.
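As a rough illustration of how such a distribution could be computed from per-utterance metadata, here is a minimal sketch. The field names `district` and `language` and the sample rows are hypothetical placeholders, not the dataset's actual schema or data; check the dataset card for the real column names.

```python
from collections import Counter, defaultdict


def language_distribution(records):
    """Count utterances per language within each district."""
    per_district = defaultdict(Counter)
    for rec in records:
        per_district[rec["district"]][rec["language"]] += 1
    return per_district


# Hypothetical sample rows; field names and values are made up for illustration.
sample = [
    {"district": "district_a", "language": "Hindi"},
    {"district": "district_a", "language": "Hindi"},
    {"district": "district_a", "language": "Marathi"},
    {"district": "district_b", "language": "Kannada"},
]

dist = language_distribution(sample)
for district, counts in sorted(dist.items()):
    total = sum(counts.values())
    # Share of utterances per language, most common first.
    shares = {lang: round(n / total, 2) for lang, n in counts.most_common()}
    print(district, shares)
```

Counting utterances is only one possible measure; weighting by audio duration or by number of distinct speakers may suit some analyses better.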

Transcribed subsets

If you are only interested in the transcribed data and want to skip the untranscribed audio, a subset of the larger dataset has been open-sourced separately. This subset has 790 hours of transcribed audio from ~7L speakers covering 70k images. It consists of short, segmented audio units paired with their exact transcriptions, which makes it suitable for a variety of tasks, including:

- Speech recognition: Training models that accurately transcribe spoken language.
- Language modeling: Building more sophisticated language models.
- Segmentation tasks: Identifying distinct audio units to improve transcription accuracy.

This additional dataset complements the main Vaani dataset and allows you to develop end-to-end speech recognition systems and more targeted AI solutions.
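For readers working from the full dataset rather than the transcribed subset, the filtering step described above can be sketched as follows. The segment fields `audio_path`, `transcript`, and `duration_s` and the sample values are hypothetical; they stand in for whatever the actual schema provides.

```python
def transcribed_only(segments):
    """Keep segments whose transcript is present and non-blank."""
    return [s for s in segments if (s.get("transcript") or "").strip()]


def total_hours(segments):
    """Sum segment durations (in seconds) and convert to hours."""
    return sum(s["duration_s"] for s in segments) / 3600.0


# Hypothetical segments; field names and values are made up for illustration.
segments = [
    {"audio_path": "seg_001.wav", "transcript": "example text", "duration_s": 1800},
    {"audio_path": "seg_002.wav", "transcript": "", "duration_s": 3600},
    {"audio_path": "seg_003.wav", "transcript": None, "duration_s": 900},
    {"audio_path": "seg_004.wav", "transcript": "more text", "duration_s": 1800},
]

usable = transcribed_only(segments)
# (audio, text) pairs of the kind an ASR training loop would consume.
pairs = [(s["audio_path"], s["transcript"]) for s in usable]

print(len(usable), "transcribed segments,", total_hours(usable), "hours")
```

Treating blank and missing transcripts the same way is a design choice of this sketch; a real pipeline may also want to filter on transcript quality or segment length.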

Vaani’s utility in the era of LLMs

The Vaani dataset offers several important benefits, including extensive language coverage (54 languages), representation of diverse geographical regions, diverse educational and socioeconomic backgrounds, very large speaker coverage, spontaneous speech data, and real-world data collection environments. These features can support the development of AI models for:

- Speech-to-text and text-to-speech: Fine-tuning these models for both LLM-based and non-LLM-based applications. Additionally, the transcription tagging enables the development of code-switching (Indian-language and English) ASR models.
- Foundational speech models for Indian languages: The dataset's significant linguistic and geographic coverage supports the development of robust foundational models for Indian languages.
- Speaker identification/verification models: With data from over 80,000 speakers, the dataset is well suited to developing robust speaker identification and verification models.
- Language identification models: Enables the creation of language identification models for a range of real-world applications.
- Speech enhancement systems: The dataset's tagging system supports the development of advanced speech enhancement technologies.
- Enhancing multimodal LLMs: The unique data collection approach helps build and improve the multimodal capabilities of LLMs when combined with other multimodal datasets.
- Performance benchmarking: The dataset is an ideal choice for benchmarking speech models because of its linguistic diversity, geographic spread, and real-world recording conditions.

These AI models can power a wide range of conversational AI applications. From educational tools to telehealth platforms, healthcare solutions, voter helplines, media localization, and multilingual smart devices, the Vaani dataset can be a game-changer in real-world scenarios.

What’s next?

IISc/ArtPark and Google have extended their partnership to Phase 2, which adds 100 more districts. With this, Vaani covers all states in India! We look forward to making this data available to you.

The map highlights the districts across India where data was collected as of February 5th

How to contribute

The most meaningful contribution you can make is to use the Vaani dataset. Whether you are building new AI applications, conducting research, or exploring innovative use cases, your engagement helps improve and expand the project.

We would love to hear from you if you have feedback or insights from using the dataset. Please contact vaanicontact@gmail.com to share your experiences or ask about collaboration opportunities, or fill out this feedback form.

Made with ❤️ for the diversity of Indian languages