Because of their impressive capabilities, large language models (LLMs) require significant computing power, which is seldom available on personal computers. As a result, we have little choice but to deploy them on powerful, bespoke AI servers hosted on-premises or in the cloud.

Why Local LLM Inference is Preferred

What if we could run state-of-the-art open-source LLMs on a typical personal computer? Wouldn’t we enjoy the following benefits?

Increased privacy: Data is not sent to an external API for inference.

Low latency: We save network round trips.

Offline work: We can work without network connectivity (a frequent flyer’s dream!).

Low cost: No money is spent on API calls or model hosting.

Customizability: Each user can find the models that best fit the tasks they work on daily, and can also fine-tune them or use local Retrieval-Augmented Generation (RAG) to increase their relevance.

All this sounds very exciting. So why aren’t we doing it already? Returning to the opening statement, a typical affordable laptop doesn’t pack enough compute punch to run LLMs with acceptable performance. There is no multi-thousand-core GPU and no high-bandwidth memory in sight.

A lost cause? Of course not.

Why Local LLM Inference Is Now Possible

There is nothing that the human mind cannot make smaller, faster, more elegant, or more cost-effective. Over the past few months, the AI community has worked hard to shrink models without compromising their predictive quality. Three areas are exciting:

Hardware acceleration: Modern CPU architectures embed hardware dedicated to accelerating the most common deep learning operators, such as matrix multiplication and convolution. This enables a new generation of AI applications on AI PCs and significantly improves their speed and efficiency.

Small Language Models (SLMs): Thanks to innovative architectures and training techniques, these models can match or even outperform larger models. Because they have fewer parameters, inference requires less compute and memory, which makes them great candidates for resource-constrained environments.

Quantization: Quantization is the process of lowering memory and computational requirements by reducing the bit width of model weights and activations, for example from 16-bit floating point (FP16) to 8-bit integers (INT8). With fewer bits, the resulting model needs less memory at inference time, which reduces latency for memory-bound steps such as the decoding phase, when text is generated. In addition, when both weights and activations are quantized, operations such as matrix multiplication can be performed faster with integer arithmetic.
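To make the idea concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is only an illustration of the principle, not the algorithm used by OpenVINO or NNCF: the helper names `quantize_int8` and `dequantize` are hypothetical, and real toolkits work per channel or per group rather than with a single scale for the whole tensor.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization of floats to signed 8-bit integers (one scale per tensor)."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would give a zero scale.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
errors = [abs(a - b) for a, b in zip(weights, restored)]

print(q)            # [50, -127, 2, 100]
print(max(errors))  # rounding error, at most half a quantization step
```

Each weight now fits in one byte instead of two, at the cost of a rounding error bounded by half of `scale`.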

In this post, we will take advantage of all of the above. Starting from the Microsoft Phi-2 model, we will apply 4-bit quantization to the model weights, thanks to the Intel OpenVINO integration in the Optimum Intel library. Then, we will run inference on a mid-range laptop powered by an Intel Meteor Lake CPU.

Note: If you’re interested in applying quantization to both weights and activations, you can find more details in the documentation.

Let’s get to work.

Intel Meteor Lake

Launched in December 2023, Intel Meteor Lake, now renamed Core Ultra, is a new architecture optimized for high-performance laptops.

Meteor Lake is the first Intel client processor to use a chiplet architecture. It includes:

A power-efficient CPU with up to 16 cores,

An integrated GPU (iGPU) with up to 8 Xe cores, each equipped with 16 Xe Vector Engines (XVEs). As the name suggests, an XVE can perform vector operations on 256-bit vectors. It also implements the DP4a instruction, which computes a dot product between two 4-byte vectors (four 8-bit values each), stores the result in a 32-bit integer, and adds it to a third 32-bit integer.

A Neural Processing Unit (NPU), a first for Intel architectures. The NPU is a dedicated AI engine built for efficient client AI. It is optimized to handle demanding AI computations efficiently, freeing up the main CPU and graphics for other tasks. Compared to using the CPU or the iGPU for AI tasks, the NPU is designed to be more power-efficient.
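The DP4a operation described in the list above can be emulated in a few lines of Python. This is a sketch for intuition only, under two assumptions that are not stated in this post: the 8-bit lanes are treated as signed, and the 32-bit accumulator wraps around on overflow. The helper names `pack4` and `dp4a` are made up for the illustration.

```python
import struct
from typing import Sequence


def pack4(values: Sequence[int]) -> int:
    """Pack four signed 8-bit integers into one 32-bit word (little-endian)."""
    return struct.unpack("<I", struct.pack("<4b", *values))[0]


def dp4a(a_packed: int, b_packed: int, acc: int) -> int:
    """Emulate DP4a: acc + dot(a, b), where a and b each hold four signed bytes."""
    a = struct.unpack("<4b", struct.pack("<I", a_packed & 0xFFFFFFFF))
    b = struct.unpack("<4b", struct.pack("<I", b_packed & 0xFFFFFFFF))
    total = acc + sum(x * y for x, y in zip(a, b))
    # Assumption: wrap to a signed 32-bit integer on overflow.
    return (total + 2**31) % 2**32 - 2**31


a = pack4([1, -2, 3, 4])
b = pack4([5, 6, -7, 8])
result = dp4a(a, b, acc=10)
print(result)  # 14 = 10 + (5 - 12 - 21 + 32)
```

A single instruction of this kind replaces four multiplications and four additions, which is why 8-bit quantized matrix multiplications map well onto this hardware.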

To run the demo below, I chose a mid-range laptop powered by a Core Ultra 7 155H CPU. Now, let’s pick a nice small language model to run on this laptop.

Note: To run this code on Linux, follow these instructions to install the GPU driver.

The Microsoft Phi-2 Model

Released in December 2023, Phi-2 is a 2.7-billion-parameter model trained for text generation.

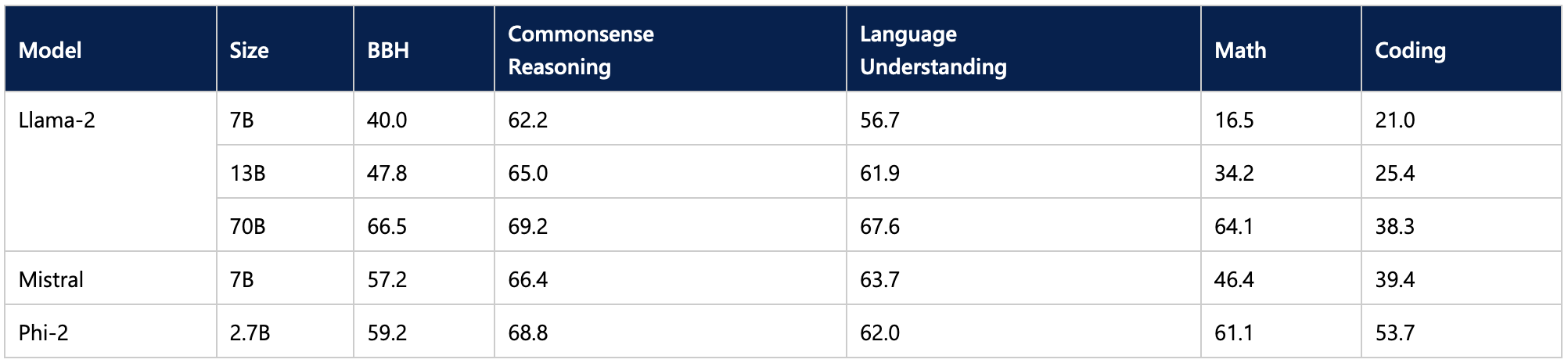

On reported benchmarks, Phi-2 is not held back by its small size: it outperforms some of the best 7-billion and 13-billion parameter LLMs and even stays within striking distance of the much larger Llama-2 70B model.

This makes it an exciting candidate for laptop inference. Curious readers may also want to experiment with the 1.1-billion-parameter TinyLlama model.

Now, let’s see how we can shrink the model to make it smaller and faster.

Quantization with Intel OpenVINO and Optimum Intel

Intel OpenVINO is an open-source toolkit for optimizing AI inference on many Intel hardware platforms (GitHub, documentation), notably through model quantization.

We partnered with Intel to integrate OpenVINO into Optimum Intel, our open-source library dedicated to accelerating Hugging Face models on Intel platforms (GitHub, docs).

First, make sure you have the latest version of optimum-intel with all the necessary libraries installed.

    pip install --upgrade --upgrade-strategy eager "optimum[openvino,nncf]"

This integration makes quantizing Phi-2 to 4-bit straightforward. We define a quantization configuration, set the optimization parameters, and load the model from the Hub. Once it has been quantized and optimized, we save it locally.

    from transformers import AutoTokenizer, pipeline
    from optimum.intel import OVModelForCausalLM, OVWeightQuantizationConfig

    model_id = "microsoft/phi-2"
    device = "GPU"

    # Quantization configuration: 4-bit weights, groups of 128, 80% of weights in 4-bit
    q_config = OVWeightQuantizationConfig(bits=4, group_size=128, ratio=0.8)

    # OpenVINO runtime configuration
    ov_config = {"PERFORMANCE_HINT": "LATENCY", "CACHE_DIR": "model_cache", "INFERENCE_PRECISION_HINT": "f32"}

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = OVModelForCausalLM.from_pretrained(
        model_id,
        export=True,  # convert the model to the OpenVINO format
        quantization_config=q_config,
        device=device,
        ov_config=ov_config,
    )

    # Compile explicitly; otherwise compilation happens before the first inference
    model.compile()
    pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)
    results = pipe("He is a terrible magician.")

    save_directory = "phi-2-openvino"
    model.save_pretrained(save_directory)
    tokenizer.save_pretrained(save_directory)

The ratio parameter controls the fraction of weights quantized to 4-bit (here, 80%), with the rest quantized to 8-bit. The group_size parameter defines the size of the weight quantization groups (here, 128), each group having its own scaling factor. Decreasing these two values usually improves accuracy at the expense of model size and inference latency.

You can find more information on weight quantization in our documentation.
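A back-of-the-envelope calculation shows how these two parameters affect model size. The sketch below is an estimate under stated assumptions, not a description of how OpenVINO or NNCF actually lays out weights: it assumes one 16-bit scale per 4-bit group, ignores any overhead for the 8-bit weights, and uses the 2.7 billion parameter count quoted earlier. The function names are hypothetical.

```python
def effective_bits_per_weight(ratio: float, group_size: int, scale_bits: int = 16) -> float:
    """Average storage per weight when `ratio` of the weights are 4-bit and the rest 8-bit.

    Assumption: each group of `group_size` 4-bit weights stores one scale of
    `scale_bits` bits; overhead for the 8-bit weights is ignored.
    """
    four_bit = 4 + scale_bits / group_size
    return ratio * four_bit + (1 - ratio) * 8


def model_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 2.7e9
bits = effective_bits_per_weight(ratio=0.8, group_size=128)

print(f"{bits:.2f} bits per weight")                        # 4.90 bits per weight
print(f"FP16 weights: {model_size_gb(params, 16):.2f} GB")  # 5.40 GB
print(f"Mixed 4/8-bit: {model_size_gb(params, bits):.2f} GB")  # 1.65 GB
```

Under these assumptions, lowering `ratio` or `group_size` raises the average number of bits per weight, which is the size cost paid for the accuracy gain.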

Note: The entire notebook with examples of text generation is available on GitHub.
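Once the quantized model has been saved locally, later sessions do not need to export or quantize it again. The sketch below shows one way to reload it, assuming the directory was written with save_pretrained() for both the model and the tokenizer. The helper name `load_quantized_pipeline` is hypothetical, and the imports are placed inside the function only so that the snippet can be read without the libraries installed.

```python
def load_quantized_pipeline(save_directory: str, device: str = "GPU"):
    """Reload a saved OpenVINO model and wrap it in a text-generation pipeline."""
    # Lazy imports: transformers and optimum-intel are only needed when this is called.
    from transformers import AutoTokenizer, pipeline
    from optimum.intel import OVModelForCausalLM

    tokenizer = AutoTokenizer.from_pretrained(save_directory)
    # The directory already holds the quantized OpenVINO model,
    # so no export or quantization configuration is passed here.
    model = OVModelForCausalLM.from_pretrained(save_directory, device=device)
    return pipeline("text-generation", model=model, tokenizer=tokenizer)
```

Calling the function with the directory used when saving the model returns a pipeline that can be invoked with a prompt, just like the one created during quantization.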

So, how fast is the quantized model on our laptop? Watch the videos below to see for yourself. For maximum sharpness, select the 1080p resolution.

In the first video, we ask our model a high-school physics question: “Lily has a rubber ball that she drops from the top of a wall. The wall is 2 meters tall. How long will it take for the ball to reach the ground?”

https://www.youtube.com/watch?v=ntnyrdorq14

In the second video, we ask our model a coding question: “Use NumPy to write a class that implements a fully connected layer with forward and backward functions. Use markdown markers for the code.”

https://www.youtube.com/watch?v=igwrp8gnjzg

As you can see in both examples, the generated answers are of very high quality. The quantization process has not degraded the high quality of Phi-2, and the generation speed is adequate. I would be happy to work locally with this model every day.

Conclusion

Thanks to Hugging Face and Intel, you can now run LLMs on your laptop and enjoy the many benefits of local inference, such as privacy, low latency, and low cost. We hope to see more high-quality models optimized for the Meteor Lake platform and its successor, Lunar Lake. The Optimum Intel library makes it very easy to quantize models on Intel platforms, so why not try it and share your great models on the Hugging Face Hub? We can always use more!

Here are some resources to help you get started:

If you have any questions or feedback, I would be happy to answer them on the Hugging Face forum.

Thank you for reading!