Handling long contexts efficiently has been a long-standing challenge in natural language processing. As large language models expand their ability to read, understand, and generate text, the attention mechanism at the heart of how they process input can become a bottleneck. In a typical transformer architecture, this mechanism compares every token with every other token, so its computational cost scales quadratically with sequence length. The problem is becoming more pressing as language models are applied to tasks that require referencing vast amounts of textual information. When a model has to navigate tens or hundreds of thousands of tokens, the cost of full attention becomes prohibitive.

Previous efforts to address this issue often rely on imposing fixed structures or approximations that can impair quality in certain scenarios. For example, sliding-window mechanisms restrict each token to a local neighborhood, which can obscure important global relationships. Meanwhile, approaches that fundamentally change the basic architecture, such as replacing softmax attention with an entirely new construct, require extensive retraining from scratch, making it difficult to benefit from existing pretrained models. Researchers are therefore looking for a way to maintain the key benefits of the original transformer design (its adaptability and its ability to capture broad dependencies) without incurring the computational overhead of traditional full attention on very long sequences.

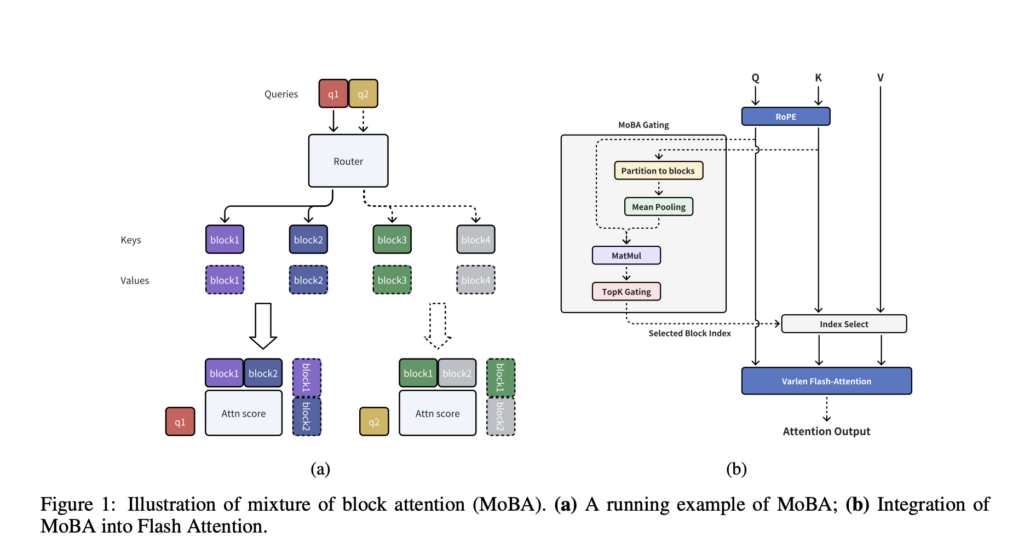

Researchers at Moonshot AI, Tsinghua University, and Zhejiang University present Mixture of Block Attention (MOBA), an approach that applies Mixture of Experts (MoE) principles to the attention mechanism. By splitting the input into manageable “blocks” and using a trainable gating system to determine which blocks are relevant to each query token, MOBA addresses the inefficiency that arises when a model must compare every token with every other token. Unlike approaches that rigidly enforce local or windowed attention, MOBA allows the model to learn where to focus. This design is guided by the principle of “less structure”: the architecture does not predefine exactly which tokens interact. Instead, it delegates those decisions to a learned gating network.

An important feature of MOBA is its ability to function seamlessly with existing transformer-based models. Rather than discarding the standard self-attention interface, MOBA works as a “plug-in” replacement for it. It keeps the same number of parameters, so the architecture is not bloated, and it preserves causal masking to ensure correct autoregressive generation. In real deployments, MOBA can switch between sparse and full attention, allowing the model to benefit from speedups when tackling very long inputs.

Technical details and benefits

MOBA works by splitting the context into blocks, each spanning a range of consecutive tokens. The gating mechanism computes an “affinity” score between the query token and each block, typically by comparing the query with a pooled representation of the block’s keys. It then selects the top-scoring blocks. As a result, only tokens within the most relevant blocks contribute to the final attention distribution. The block containing the query itself is always included, so local context remains accessible. At the same time, a causal mask is enforced to prevent tokens from attending to future positions, preserving the left-to-right autoregressive property.
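The block-selection step described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the authors' implementation: the function name `select_blocks`, the use of mean pooling, the dot-product score, and the parameters `block_size` and `top_k` are all choices made here for clarity.

```python
def select_blocks(query, keys, query_pos, block_size, top_k):
    """Return the sorted indices of the key blocks one query attends to.

    Assumptions (not taken from the paper): keys are pooled by a plain mean,
    affinity is a dot product, and top_k counts the query's own block.
    """
    current = query_pos // block_size  # block that contains the query itself

    # Score every strictly earlier block against the query.
    scores = {}
    for b in range(current):
        block = keys[b * block_size:(b + 1) * block_size]
        pooled = [sum(col) / len(block) for col in zip(*block)]
        scores[b] = sum(q * p for q, p in zip(query, pooled))

    # The current block is always selected; the remaining top_k - 1 slots go
    # to the highest-scoring earlier blocks. Future blocks are never
    # candidates, so the selection is causal at block granularity.
    best = sorted(scores, key=scores.get, reverse=True)[:max(top_k - 1, 0)]
    return sorted(best + [current])


# Toy example: 8 tokens, 2-dimensional keys, blocks of 2 tokens.
keys = [[1.0, 0.0], [1.0, 0.0],    # block 0
        [0.0, 1.0], [0.0, 1.0],    # block 1
        [-1.0, 0.0], [-1.0, 0.0],  # block 2
        [0.0, 0.0], [0.0, 0.0]]    # block 3 (contains the query)
print(select_blocks([0.0, 1.0], keys, query_pos=7, block_size=2, top_k=2))  # [1, 3]
```

Within the query's own block, an ordinary token-level causal mask would still be needed so that the query does not see later tokens in that block; the sketch covers only the choice of blocks.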

Because of this procedure, MOBA’s attention matrix is much sparser than that of the original transformer. However, it remains flexible enough to let queries attend to distant information when necessary. For example, if a question raised near the end of the text can only be answered by referencing details near the beginning, the gating mechanism can learn to assign high scores to the relevant earlier blocks. Technically, this block-based approach reduces the number of token comparisons from every query-key pair to only the pairs inside the selected blocks. The resulting efficiency gain becomes particularly apparent as the context length grows to hundreds of thousands or millions of tokens.
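To make the reduction in token comparisons concrete, the following sketch counts query-key pairs for causal full attention and gives an upper bound for block-sparse attention. The function names and the example values of `block_size` and `top_k` are illustrative assumptions, not settings reported by the authors, and the count ignores the extra cost of scoring blocks in the gate.

```python
def full_attention_pairs(n):
    """Causal full attention: token i is compared with tokens 0..i."""
    return n * (n + 1) // 2


def sparse_attention_pairs_bound(n, block_size, top_k):
    """Upper bound when each query sees at most top_k blocks of block_size keys.

    Equivalent to sum(min(i + 1, top_k * block_size) for i in range(n)).
    """
    budget = top_k * block_size
    if n <= budget:
        return full_attention_pairs(n)
    return budget * (budget + 1) // 2 + (n - budget) * budget


# Hypothetical settings chosen only to show how the gap widens with length.
for n in (8_192, 131_072, 1_000_000):
    full = full_attention_pairs(n)
    sparse = sparse_attention_pairs_bound(n, block_size=512, top_k=3)
    print(f"n={n}: full={full}, sparse<={sparse}, ratio={full / sparse:.1f}")
```

The pair count is only a proxy for compute. Measured speedups depend on kernel efficiency and memory access patterns, so the ratio printed here should not be read as a wall-clock prediction.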

Another attractive aspect of MOBA is its compatibility with modern accelerators and specialized kernels. In particular, the authors combine MOBA with FlashAttention, a high-performance library for fast, memory-efficient exact attention. By carefully grouping query and key-value operations according to which blocks have been selected, the computation can be streamlined. The authors report that at one million tokens, MOBA is about six times faster than traditional full attention, highlighting its practicality in real use cases.
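The grouping idea can be pictured as inverting the gate's output: instead of listing the blocks chosen by each query, list the queries that chose each block, so that each block's keys and values can be processed in one dense batch. The sketch below shows only that bookkeeping step. It is an assumption about how such grouping might be organized, it does not call FlashAttention, and the name `group_queries_by_block` is hypothetical.

```python
from collections import defaultdict


def group_queries_by_block(selections):
    """Invert per-query block choices into per-block query lists.

    selections[q] is the list of block indices chosen for query q.
    Returns {block index: [query indices that selected it]}.
    """
    groups = defaultdict(list)
    for q, blocks in enumerate(selections):
        for b in blocks:
            groups[b].append(q)
    return dict(groups)


# Six queries, each with the blocks its gate selected (toy values).
selections = [[0], [0], [0, 1], [0, 1], [1, 2], [0, 2]]
print(group_queries_by_block(selections))
# {0: [0, 1, 2, 3, 5], 1: [2, 3, 4], 2: [4, 5]}
```

Each group could then be handed to a dense attention kernel together with that block's keys and values, with the per-block partial results combined afterward for every query.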

Results and insights

According to the technical report, MOBA performs on par with full attention across a variety of tasks while providing significant computational savings on long sequences. Tests on language modeling data show that MOBA’s perplexity remains close to that of a full-attention transformer at sequence lengths of 8,192 or 32,768 tokens. Importantly, as the researchers gradually extend the context length beyond 128,000 tokens, MOBA retains robust long-context understanding. The authors present a “trailing token” evaluation that focuses on the model’s ability to predict tokens near the end of a long prompt, an area that typically exposes the weaknesses of methods relying on heavy approximations. MOBA handles these trailing positions effectively, without a dramatic loss in prediction quality.
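One simple way to picture an evaluation that focuses on the end of a long prompt is to average the negative log-likelihood over only the last few positions. The sketch below is an assumption about what such a metric could look like, not the authors' exact protocol; `trailing_token_loss` and `num_trailing` are hypothetical names.

```python
import math


def trailing_token_loss(token_probs, num_trailing):
    """Mean negative log-likelihood over the last num_trailing tokens.

    token_probs[i] is the probability the model assigned to the correct
    token at position i.
    """
    if not 1 <= num_trailing <= len(token_probs):
        raise ValueError("num_trailing must be between 1 and len(token_probs)")
    tail = token_probs[-num_trailing:]
    return -sum(math.log(p) for p in tail) / len(tail)


# Toy probabilities: the model is less certain near the end of the sequence.
probs = [0.9, 0.8, 0.5, 0.25, 0.25]
print(trailing_token_loss(probs, num_trailing=2))
```

Comparing this value between a sparse model and a full-attention baseline at the same positions would show whether quality degrades specifically where the most context is available.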

The authors also explore the approach’s sensitivity to block size and gating strategy. In some experiments, finer granularity (that is, using smaller blocks but selecting more of them) helps the model focus its attention more precisely. Even in settings where MOBA excludes most of the context, adaptive gating can identify the blocks that truly matter to a query. Meanwhile, a “hybrid” regime offers a balanced approach: most layers continue to use MOBA for speed, while a small number of layers revert to full attention. This hybrid approach is particularly beneficial during supervised fine-tuning, where certain positions in the input may be masked out of the training objective. By keeping full attention in some upper layers, the model retains broad context coverage, which benefits tasks that require a more global perspective.
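A hybrid configuration of this kind can be expressed as a per-layer plan. The sketch below is a minimal illustration, assuming the full-attention layers sit at the top of the stack as the paragraph describes; the function name, the string labels, and the layer counts in the example are hypothetical.

```python
def hybrid_layer_plan(num_layers, num_full_layers):
    """Label each layer 'moba' or 'full', placing full attention in the top layers."""
    if not 0 <= num_full_layers <= num_layers:
        raise ValueError("num_full_layers must be between 0 and num_layers")
    num_sparse = num_layers - num_full_layers
    return ["moba"] * num_sparse + ["full"] * num_full_layers


# Example: a 6-layer stack whose last 2 layers use full attention.
print(hybrid_layer_plan(6, 2))
# ['moba', 'moba', 'moba', 'moba', 'full', 'full']
```

Because the article notes that MOBA keeps the standard self-attention interface and parameter count, a plan like this could in principle be changed between training stages without altering the model's weights.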

Overall, these findings suggest that MOBA is well suited to tasks that involve extensive context, such as reading comprehension over long documents, large-scale code completion, or multi-turn dialogue systems where the entire conversation history is essential. Its practical efficiency gains and minimal performance trade-offs position MOBA as an attractive way to make large language models more efficient.

Conclusion

In conclusion, Mixture of Block Attention (MOBA) provides a pathway to more efficient long-context processing in large language models without a massive overhaul of the transformer architecture or a drop in performance. By applying Mixture of Experts ideas within the attention module, MOBA offers a learnable yet sparse way to concentrate on the relevant parts of a very long input. The adaptability inherent in its design, in particular the seamless switching between sparse and full attention, makes it especially appealing for ongoing or future training pipelines. Researchers can tune how aggressively to sparsify attention patterns, or fall back to full attention for tasks that require thorough coverage.

While much of the attention on MOBA focuses on textual contexts, the underlying mechanism could also be promising for other data modalities. Wherever sequences are long enough to raise computation or memory concerns, the idea of routing queries to block “experts” may reduce bottlenecks while preserving the ability to handle essential global dependencies. As sequence lengths in language applications continue to grow, approaches like MOBA may play an important role in improving the scalability and cost-effectiveness of neural language modeling.

Please see the paper and the GitHub page. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of MarkTechPost, an artificial intelligence media platform that stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easy for a wide audience to understand. The platform has over 2 million views each month, which indicates its popularity among readers.