If you’re sharing photos or music online, you’ve almost certainly worked with JPG or MP3 files. Both formats shrink files by discarding details the eyes and ears are unlikely to notice. In contrast, ZIP compression packs redundant information more compactly, so files can be restored to their original size and quality when unzipped.

Because it is “lossless,” ZIP is often used to archive documents. Until now, it was not considered well suited to compressing AI models, with their billions of seemingly random numerical weights. But when researchers looked closely at model weights, they found patterns that could be exploited to reduce both model size and bandwidth demands.

In collaboration with Boston University, Dartmouth, MIT, and Tel Aviv University, IBM researchers created ZipNN, an open-source library that automatically chooses the best compression method for a given model based on its properties. ZipNN can shrink popular LLM formats by a third while compressing and decompressing models 1.5 times faster than the best competing technique. The team reports its findings in a new study to be presented at the upcoming 2025 IEEE Cloud Conference.

“If you unzip the file, it will return to its original state. You will not lose anything,” said Moshik Hershcovitch, an IBM researcher focusing on AI and cloud infrastructure.

Compression with zero quality loss

There are several ways to slim down an AI model to reduce its size and the cost of sending it over a network. The model can be pruned to trim extraneous weights. Its numerical precision can be lowered through quantization. Or it can be distilled into a more compact version of itself through a teacher-student training process. All three methods can speed up AI inference, but quality can suffer because each removes information from the model.

In contrast, lossless compression removes redundancy only temporarily: decompression restores the original data bit for bit. LZ compression replaces repeated sequences with short pointers back to earlier occurrences, while entropy encoding gives frequently occurring symbols shorter codes.
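To make the LZ idea concrete, here is a toy LZ77-style coder in Python. It is a minimal, illustrative sketch, not ZipNN's code or any production codec: the function names `lz77_compress` and `lz77_decompress`, the 255-byte window, and the 3-byte minimum match length are all assumptions chosen for readability. Repeated runs become `("ref", distance, length)` pointers back into data already seen, and decompression copies them forward to restore the input exactly.

```python
def lz77_compress(data: bytes, window: int = 255, min_match: int = 3) -> list:
    """Toy LZ77 (hypothetical helper): emit ('lit', byte) or ('ref', distance, length)."""
    tokens = []
    i = 0
    while i < len(data):
        best_len, best_dist = 0, 0
        # Brute-force search of the sliding window for the longest earlier match.
        for j in range(max(0, i - window), i):
            length = 0
            # Matches may overlap the current position (j + length can pass i).
            while i + length < len(data) and data[j + length] == data[i + length]:
                length += 1
            if length > best_len:
                best_len, best_dist = length, i - j
        if best_len >= min_match:
            tokens.append(("ref", best_dist, best_len))  # pointer replaces the repeat
            i += best_len
        else:
            tokens.append(("lit", data[i]))  # no useful match: store the raw byte
            i += 1
    return tokens


def lz77_decompress(tokens: list) -> bytes:
    out = bytearray()
    for tok in tokens:
        if tok[0] == "lit":
            out.append(tok[1])
        else:
            _, dist, length = tok
            for _ in range(length):
                out.append(out[-dist])  # byte-by-byte copy handles overlapping matches
    return bytes(out)


text = b"abcabcabcabcXYZabcabc"
tokens = lz77_compress(text)
assert lz77_decompress(tokens) == text  # lossless round trip
print(tokens)
```

The quadratic match search is only suitable for small examples; practical LZ implementations use hash tables to find candidate matches quickly.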

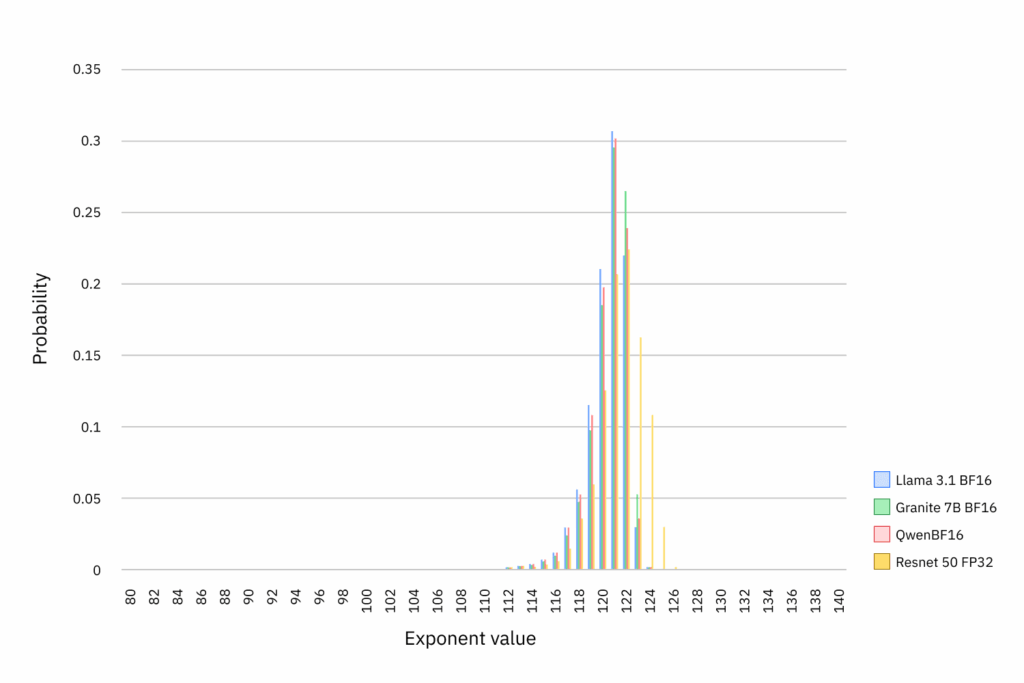

Information in AI models is typically stored as numerical weights, expressed as floating-point numbers that mostly fall between -1 and 1. Each number has three parts: sign, exponent, and fraction. Although the signs and fractions are usually random, IBM researchers recently discovered that the exponent is highly skewed. Of its 256 possible values, the same 12 appear 99.9% of the time.
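The three fields can be inspected directly. The sketch below splits IEEE 754 float32 values into sign, exponent, and fraction with Python's `struct` module and counts how many distinct exponent values cover 99.9% of the weights. It assumes synthetic weights drawn from a normal distribution with standard deviation 0.02, a stand-in rather than real model weights, and the helper names `fp32_fields` and `bf16_fields` are hypothetical.

```python
import random
import struct
from collections import Counter


def fp32_fields(x: float) -> tuple[int, int, int]:
    """Split an IEEE 754 float32 into (sign, exponent, fraction) bit fields."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    sign = bits >> 31               # 1 sign bit
    exponent = (bits >> 23) & 0xFF  # 8 exponent bits (biased by 127)
    fraction = bits & 0x7FFFFF      # 23 fraction bits
    return sign, exponent, fraction


def bf16_fields(x: float) -> tuple[int, int, int]:
    """BF16 is the top 16 bits of a float32: 1 sign, 8 exponent, 7 fraction bits.
    Truncates instead of rounding, which is enough for inspecting the fields."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0] >> 16
    return bits >> 15, (bits >> 7) & 0xFF, bits & 0x7F


# Synthetic stand-in for model weights (assumption: normal, std 0.02).
random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(100_000)]
exp_counts = Counter(fp32_fields(w)[1] for w in weights)

# Count how many of the 256 possible exponent values cover 99.9% of weights.
covered = needed = 0
for _, count in exp_counts.most_common():
    covered += count
    needed += 1
    if covered >= 0.999 * len(weights):
        break
print(f"{len(exp_counts)} distinct exponents; the top {needed} cover 99.9%")
```

Because BF16 keeps the upper half of a float32, both formats use the same 8-bit exponent field, so the same skew shows up in either format.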

The team behind ZipNN realized they could exploit this imbalance with a form of entropy encoding. They separated the exponent of each floating-point number from its near-random sign and fraction, then compressed the exponents using Huffman coding.
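A minimal sketch of the exponent-stream idea follows, assuming float32 input and synthetic normally distributed weights (not data from the study). The hypothetical `split_streams` pulls the 8-bit exponents into their own stream, and a textbook Huffman construction built with `heapq` assigns short codes to the common exponents. This is not ZipNN's implementation, which the article does not detail; `huffman_code` and `huffman_decode` are illustrative names.

```python
import heapq
import random
import struct
from collections import Counter


def split_streams(weights):
    """Separate float32 weights into an exponent stream and a sign+fraction stream."""
    exponents, sign_fraction = [], []
    for w in weights:
        bits = struct.unpack("<I", struct.pack("<f", w))[0]
        exponents.append((bits >> 23) & 0xFF)   # skewed: worth entropy coding
        sign_fraction.append(bits & 0x807FFFFF)  # sign bit + 23 fraction bits
    return exponents, sign_fraction


def huffman_code(symbols):
    """Return {symbol: bitstring}; frequent symbols get shorter codes."""
    freq = Counter(symbols)
    if len(freq) == 1:  # degenerate case: only one distinct symbol
        return {next(iter(freq)): "0"}
    # Heap entries: (count, tiebreak, {symbol: code_so_far}); the tiebreak
    # keeps heapq from ever comparing the dictionaries.
    heap = [(n, i, {s: ""}) for i, (s, n) in enumerate(freq.items())]
    heapq.heapify(heap)
    tiebreak = len(heap)
    while len(heap) > 1:
        n1, _, c1 = heapq.heappop(heap)  # two least frequent subtrees
        n2, _, c2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in c1.items()}
        merged.update({s: "1" + c for s, c in c2.items()})
        heapq.heappush(heap, (n1 + n2, tiebreak, merged))
        tiebreak += 1
    return heap[0][2]


def huffman_decode(bits, code):
    inverse = {c: s for s, c in code.items()}
    out, current = [], ""
    for b in bits:
        current += b
        if current in inverse:  # prefix-free codes make this unambiguous
            out.append(inverse[current])
            current = ""
    return out


random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(50_000)]
exponents, _ = split_streams(weights)
code = huffman_code(exponents)
encoded = "".join(code[e] for e in exponents)
assert huffman_decode(encoded, code) == exponents  # lossless round trip
print(f"{len(encoded) / len(exponents):.2f} bits per exponent instead of 8")
```

Storing codes as Python strings keeps the example readable; a real encoder would pack the bits into bytes and store the code table (or canonical code lengths) alongside the data.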

How much ZipNN can shrink an AI model depends on the model’s number format and how much of each weight the exponent takes up. The exponent accounts for half the bits in newer models stored in BF16 format, but only a quarter of the bits in older models stored in FP32 format.
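The format dependence comes down to simple arithmetic. Assuming the sign and fraction bits are stored as-is and only the 8-bit exponent is entropy-coded, the fraction of space saved is (8 - coded exponent bits) / total bits per weight. The sketch below uses an assumed average of 2.7 bits per coded exponent, an illustrative value rather than a figure from the study, and `estimated_savings` is a hypothetical helper.

```python
def estimated_savings(total_bits: int, exponent_bits: int,
                      coded_exponent_bits: float) -> float:
    """Fraction of size saved if only the exponent is entropy-coded and the
    sign and fraction bits are stored unchanged (assumed incompressible)."""
    return (exponent_bits - coded_exponent_bits) / total_bits


# Assumption: Huffman coding brings each 8-bit exponent to ~2.7 bits on average.
for name, total in [("BF16", 16), ("FP32", 32)]:
    print(f"{name}: exponent is {8 / total:.0%} of each weight; "
          f"savings about {estimated_savings(total, 8, 2.7):.0%}")
```

Whatever the coded exponent size, the same number of saved bits is spread over twice as many bits in FP32, so under these assumptions FP32 models gain roughly half as much as BF16 models.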

Not surprisingly, ZipNN worked best on BF16 models in the Meta Llama, IBM Granite, and Mistral model families, reducing their size by 33%, an 11% improvement over Meta’s Zstandard (ZSTD). Compression and decompression speeds also improved, with Llama 3.1 models showing an average gain of 62% over ZSTD.

Models in FP32 format saw smaller but still meaningful savings: ZipNN reduced their size by 17%, 8% better than ZSTD, and also improved transfer speeds.

The researchers found that they could achieve even higher compression on popular models that had not been fine-tuned by exploiting redundancy in the fraction of each weight. By splitting each fraction into three streams, called “byte groups,” they reduced the size of Meta’s XLM-RoBERTa model by more than half.
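Byte grouping can be sketched by splitting each little-endian float32 into four streams by byte position, so bytes with similar statistics sit next to each other before a general-purpose compressor sees them. This is an assumption about how such grouping might work, not ZipNN's code: `byte_group`, `ungroup`, and `grouped_size` are hypothetical names, zlib stands in for whatever backend codec is actually used, and the weights are synthetic.

```python
import random
import struct
import zlib


def byte_group(weights):
    """Split little-endian float32 weights into 4 streams, one per byte position.
    Stream 3 holds the sign + 7 exponent bits; stream 2 the last exponent bit
    + 7 fraction bits; streams 1 and 0 hold the remaining 16 fraction bits."""
    raw = struct.pack(f"<{len(weights)}f", *weights)
    return [raw[i::4] for i in range(4)]


def ungroup(streams):
    """Interleave the byte streams back into the original float32 buffer."""
    raw = bytearray(4 * len(streams[0]))
    for i, s in enumerate(streams):
        raw[i::4] = s
    return raw


def grouped_size(weights):
    """Total size after compressing each byte group separately with zlib."""
    return sum(len(zlib.compress(s, 9)) for s in byte_group(weights))


random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(20_000)]
raw = struct.pack(f"<{len(weights)}f", *weights)
assert bytes(ungroup(byte_group(weights))) == raw  # grouping is lossless
print("interleaved:", len(zlib.compress(raw, 9)), "grouped:", grouped_size(weights))
```

For float32, three of the four byte positions carry fraction bits, which matches the three fraction streams described in the text; the fourth holds the sign and most of the exponent.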

Hugging Face recently implemented byte grouping in its new storage backend. In the comments on a blog post announcing the move in February, one of the authors said that using “ZipNN-inspired byte grouping” saved about 20% in storage costs.

ZipNN can save time and money for companies and other organizations that train, store, or serve AI models at scale. If fully implemented at Hugging Face, which serves more than one million models per day, ZipNN could save petabytes of storage and exabytes of network traffic, the researchers estimate. It could also reduce the time users spend uploading models to and downloading them from the site.

Storing model “checkpoints” is another potential use case. Developers save hundreds to thousands of intermediate versions of each AI model they train. Compressing even some of these rough drafts could lead to significant cost savings. The team behind IBM Granite will soon use ZipNN to compress its model checkpoints.

The researchers behind ZipNN are also exploring ways to integrate the library with vLLM, an open-source platform for efficient AI inference.

Figure 2: Information in an AI model is saved as numerical weights, usually expressed as floating-point numbers that mostly fall between -1 and 1. Each number has three parts: sign, exponent, and fraction. Although the signs and fractions are usually random, researchers recently discovered that the exponent is highly skewed: of its 256 possible values, 12 appear 99.9% of the time.