Recently, the AI community has seen a significant upsurge in the development of larger, more performant language models such as Falcon 40B, LLaMa-2 70B, and MPT 30B, while the imaging domain has seen models such as SD2.1 and SDXL. These advances have undoubtedly pushed the boundaries of what AI can achieve, enabling highly versatile, state-of-the-art image generation and language understanding. But as we marvel at the power and complexity of these models, it is essential to recognize the growing need to make AI models smaller, more efficient, and more accessible, particularly by open-sourcing them.

At Segmind, we have been working on ways to make generative AI models faster and cheaper. Last year, we open-sourced voltaML, an accelerated SD-WebUI library. It is an AITemplate/TensorRT-based inference acceleration library that delivers a 4-6x improvement in inference speed. To continue toward the goal of making generative models faster, smaller, and cheaper, we are open-sourcing the weights and training code of our compressed SD models: SD-Small and SD-Tiny. The pre-trained checkpoints are available on Hugging Face 🤗

Knowledge distillation

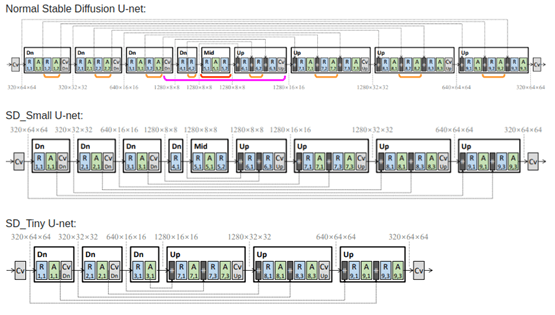

Our new compressed models are trained with knowledge distillation (KD) techniques, and the work is largely based on this paper. The authors describe a block-removal knowledge distillation method in which some of the UNet layers are removed and the weights of the student model are then trained. Using the KD method described in the paper, we were able to train two compressed models with the 🧨 diffusers library. Small and Tiny have 35% and 55% fewer parameters than the base model, respectively, while achieving image fidelity comparable to the base model. We have open-sourced our distillation code in this repository, and the pre-trained checkpoints are on Hugging Face 🤗.

Knowledge distillation training of a neural network is similar to a teacher guiding a student step by step. A large teacher model is pre-trained on a large amount of data, and then a smaller student model is trained on a smaller dataset to imitate the outputs of the larger model, alongside classical training on the dataset.

In this particular type of knowledge distillation, the student model is trained to do the usual diffusion task of recovering an image from pure noise, but at the same time it is made to match the output of the larger teacher model. Output matching is done at every block of the UNet, so the quality of the model is largely preserved. Using the previous analogy, during this kind of distillation the student learns not only from the questions and answers, but also from the teacher's answers and the step-by-step method of arriving at them. To accomplish this, the loss function has three components. The first is the classical loss between the latents of the target image and the latents of the generated image. The second is the loss between the latents of the image generated by the teacher and the latents of the image generated by the student. The third, and most important, is the feature-level loss: the loss between the outputs of each block of the teacher and the student.
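The three-part loss described above can be sketched in a few lines. This is a minimal, framework-free illustration and not the code from the distillation repository: the names `mse` and `kd_loss`, the use of mean squared error for every term, the summing of the per-block losses, and the weights `w_out` and `w_feat` are all assumptions made for the example. In real training the inputs would be tensors rather than plain lists.

```python
from typing import Sequence

Vector = Sequence[float]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two equally sized flat vectors."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def kd_loss(
    target: Vector,
    student_out: Vector,
    teacher_out: Vector,
    student_feats: Sequence[Vector],
    teacher_feats: Sequence[Vector],
    w_out: float = 1.0,   # assumed weight, not taken from the post
    w_feat: float = 1.0,  # assumed weight, not taken from the post
) -> float:
    """Combine the three loss components into a single scalar."""
    # 1. Classical task loss: student output vs. the training target.
    task = mse(student_out, target)
    # 2. Output-level distillation: student output vs. teacher output.
    out_kd = mse(student_out, teacher_out)
    # 3. Feature-level distillation: one term per matched block pair, summed.
    feat_kd = sum(mse(s, t) for s, t in zip(student_feats, teacher_feats))
    return task + w_out * out_kd + w_feat * feat_kd


# Toy example with one matched block.
loss = kd_loss(
    target=[1.0, 0.0],
    student_out=[0.0, 0.0],
    teacher_out=[1.0, 0.0],
    student_feats=[[0.0, 0.0]],
    teacher_feats=[[2.0, 0.0]],
)
print(loss)  # 0.5 (task) + 0.5 (output KD) + 2.0 (feature KD) = 3.0
```

Keeping the three terms separate makes it easy to log each one during training and to see whether the student is drifting from the data, from the teacher's final output, or from the teacher's intermediate blocks.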

Together, these three components make up knowledge distillation training. Below is the architecture of the block-removed UNet used in KD, as described in the paper.

Image taken from the paper “On Architectural Compression of Text-to-Image Diffusion Models” by Shinkook et al.

We took Realistic-Vision 4.0 as our base teacher model and trained on the LAION Art Aesthetic dataset, keeping images with scores above 7.5 because of their high-quality image descriptions. Unlike the paper, we chose to train the two models on 1M images, for 100K steps for Small and 125K steps for Tiny. The code for distillation training can be found here.

How to use the model

The model can be used with the DiffusionPipeline from 🧨 diffusers:

    from diffusers import DiffusionPipeline
    import torch

    # Load the distilled checkpoint in half precision.
    pipeline = DiffusionPipeline.from_pretrained("segmind/small-sd", torch_dtype=torch.float16)
    # Half precision is intended for GPU inference; this line assumes a CUDA device is available.
    pipeline = pipeline.to("cuda")

    prompt = "Portrait of a cute girl"
    negative_prompt = "(deformed iris, deformed pupil, semi-realistic, CGI, 3D, rendering, sketch, manga, drawing, anime:1.4), text, close-up, cropped, out of frame, worst quality, low quality, JPEG artifact, ugly, duplicate, morbid, amputated, extra finger, mutated hand, poorly drawn hand, poorly drawn face, mutation, deformed, blurred, dehydrated, bad anatomy, bad proportion, extra limb, cloned face, ugly, overall proportion, malformed limb, missing arm, missing leg, extra arm, extra leg, fused fingers, too many fingers, long neck"

    # Generate one image and save it to disk.
    image = pipeline(prompt, negative_prompt=negative_prompt).images[0]
    image.save("my_image.png")

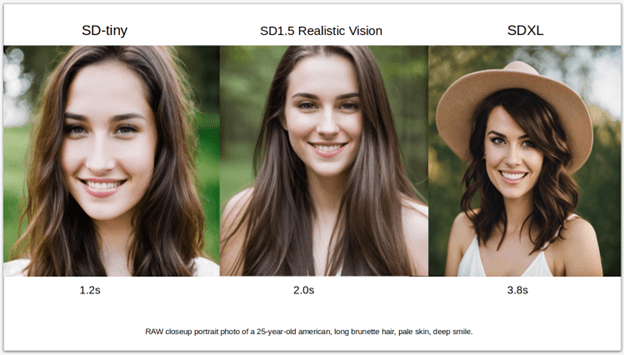

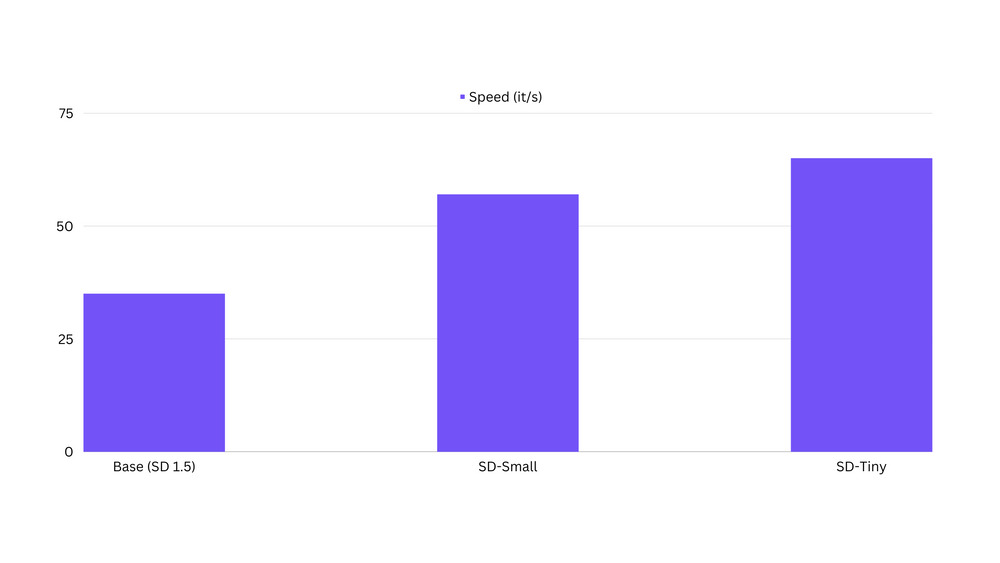

Speed in terms of inference latency

The distilled model is found to be up to 100% faster than the original base model. Benchmark code can be found here.
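To make the "up to 100% faster" figure concrete, here is a minimal timing sketch. It is not the linked benchmark code: `mean_latency` and `percent_faster` are hypothetical helpers, and reading "100% faster" as "half the latency per image" is an assumption. In practice `fn` would wrap a pipeline call such as `lambda: pipeline(prompt)`, and GPU timings would also need device synchronization.

```python
import time
from typing import Callable


def mean_latency(fn: Callable[[], object], runs: int = 5, warmup: int = 1) -> float:
    """Average wall-clock seconds per call, after a few untimed warm-up calls."""
    for _ in range(warmup):
        fn()
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs


def percent_faster(base_latency: float, distilled_latency: float) -> float:
    """Speedup of the distilled model relative to the base model, in percent."""
    return (base_latency / distilled_latency - 1.0) * 100.0


# If the base model takes 2.0 s per image and the distilled model 1.0 s,
# the distilled model is 100% faster under this definition.
print(percent_faster(2.0, 1.0))  # 100.0
```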

Potential limitations

The distilled models are at an early stage, and their outputs may not yet be of production quality. They may not be the best general-purpose models. They are best used when fine-tuned or LoRA-trained on a specific concept or style. Distilled models are not yet good at composability or multi-concept prompts.

Fine-tuning the SD-tiny model on a portrait dataset

We fine-tuned our sd-tiny model on portrait images generated with the Realistic Vision v4.0 model. Below are the fine-tuning parameters used.

- Steps: 131000
- Learning rate: 1e-4
- Batch size: 32
- Gradient accumulation steps: 4
- Image resolution: 768
- Dataset size: 7k images
- Mixed precision: fp16
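The parameters above can be captured in a small config object, which also makes the effective batch size explicit. This is only an illustrative sketch: `FineTuneConfig` is a hypothetical name rather than part of the fine-tuning code, the dataset size is rounded to 7,000 from "7k", and the effective batch size assumes a single device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuneConfig:
    """Fine-tuning parameters listed in the post (hypothetical container)."""
    steps: int = 131_000
    learning_rate: float = 1e-4
    batch_size: int = 32
    gradient_accumulation_steps: int = 4
    resolution: int = 768
    dataset_size: int = 7_000  # "7k images", rounded
    mixed_precision: str = "fp16"

    @property
    def effective_batch_size(self) -> int:
        # Samples contributing to each optimizer update on one device.
        return self.batch_size * self.gradient_accumulation_steps


config = FineTuneConfig()
print(config.effective_batch_size)  # 128
```

With gradient accumulation, gradients from 4 batches of 32 images are summed before each weight update, so each update sees 128 images while only 32 need to fit in memory at once.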

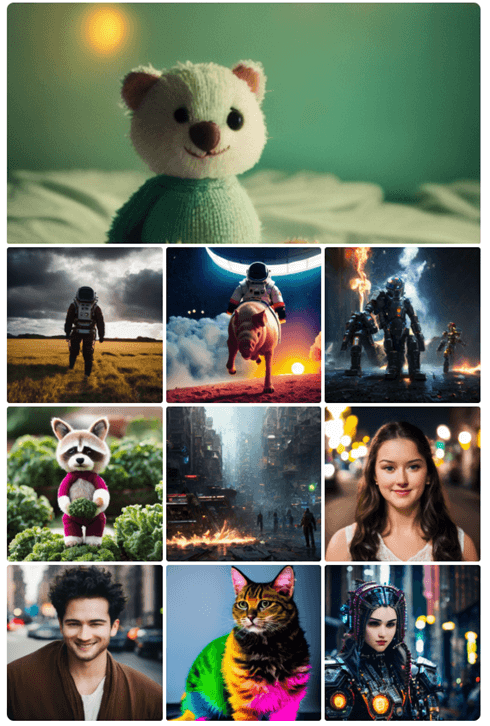

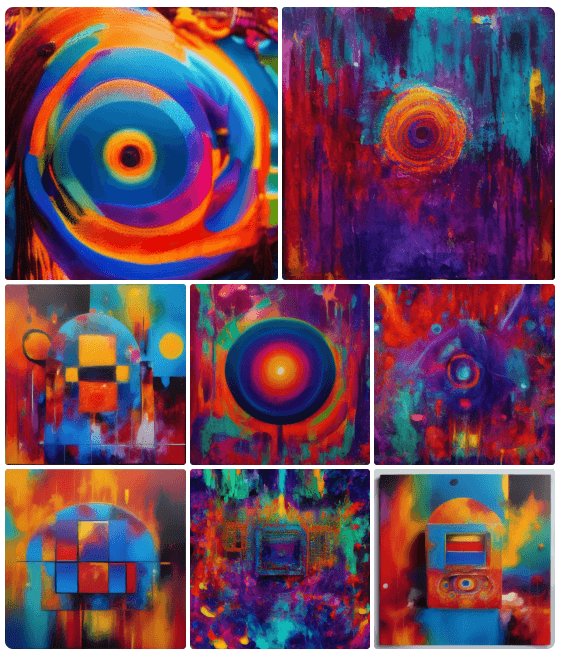

With almost 40% fewer parameters, the fine-tuned model produced image quality close to that of the original model. The sample results speak for themselves.

The code for fine-tuning the base models can be found here.

LoRA training

One of the benefits of LoRA training on distilled models is faster training. Below are some images from the first LoRA we trained on a distilled model, based on some abstract concepts. The code for LoRA training can be found here.

Conclusion

We invite the open-source community to help us improve these distilled SD models and drive their wider adoption. Users can join our Discord server, where we will announce the latest updates to these models and release more checkpoints and some exciting new LoRAs. If you like our work, please give us a star on GitHub.