Today we’re excited to welcome TII’s Falcon 180B to HuggingFace. The Falcon 180B introduces new cutting-edge technology to the open model. It is the largest openly available language model with 180 billion parameters and was trained on a massive 3.5 trillion tokens using TII’s RefinedWeb dataset. This represents the longest single-epoch pretraining of an open model.

You can find models in the Hugging Face Hub (base and chat models) and interact with them in the Falcon chat demo space.

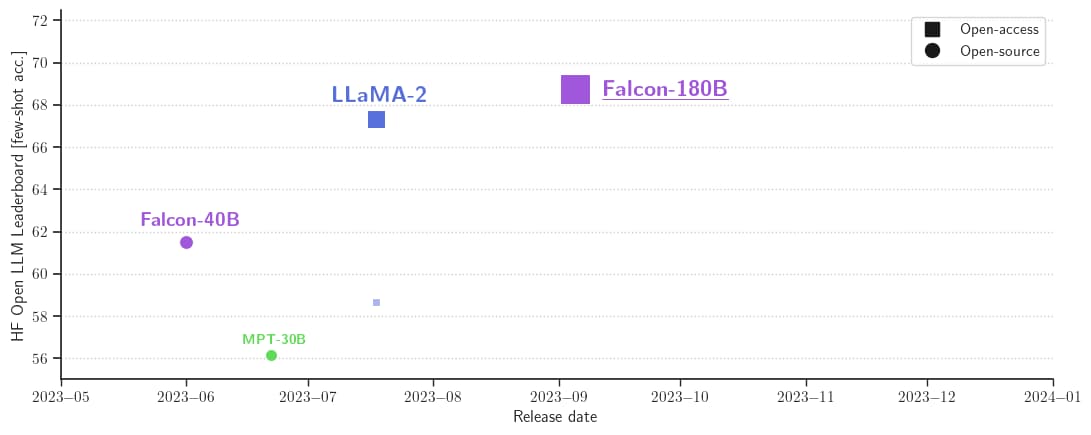

In terms of functionality, Falcon 180B achieves state-of-the-art results across natural language tasks. It is (at the time of release) at the top of the leaderboard of (pre-trained) open access models and comparable to proprietary models like PaLM-2. Although still difficult to rank definitively, it is considered on par with the PaLM-2 Large, and the Falcon 180B is widely regarded as one of the most capable LLMs.

In this blog post, we look at some evaluation results, explore what makes the Falcon 180B great, and show you how to use this model.

What is Falcon-180B?

The Falcon 180B is a model released by TII following previous releases in the Falcon family.

Architecturally, the Falcon 180B is a scaled-up version of the Falcon 40B, built on innovations such as multi-query attention for improved scalability. We recommend checking out the first blog post introducing Falcon to understand the architecture in more detail. Falcon 180B was trained with 3.5 trillion tokens on up to 4,096 GPUs simultaneously using Amazon SageMaker for a total of approximately 7,000,000 GPU hours. This means that Falcon 180B is 2.5 times larger than Llama 2 and trained with 4 times more compute.

The Falcon 180B dataset primarily consists of web data (approximately 85%) from RefinedWeb. Additionally, it is trained on a combination of carefully selected data, including conversations, technical papers, and small portions (up to 3%) of code. This pre-training dataset is large enough that even 3.5 trillion tokens constitute less than one epoch.

The released chat model has been fine-tuned based on a chat and instruction dataset that combines several large conversation datasets.

‼ ️ Commercial Use: The Falcon 180b is available for commercial use, but only under very limited conditions, with the exception of “hosting use.” If you are interested in commercial use, we recommend that you review the license and consult your legal team.

How good is Falcon 180B?

Falcon 180B is the best publicly released LLM at the time of release, outperforming Llama 2 70B and OpenAI’s GPT-3.5 in MMLU, and Google’s PaLM 2-Large in HellaSwag, LAMBADA, WebQuestions, Winogrande, PIQA, ARC, BoolQ, CB, COPA, RTE, WiC, WSC, and ReCoRD. showed similar performance. The Falcon 180B typically sits somewhere between GPT 3.5 and GPT4 depending on evaluation benchmarks, and it will be very interesting to follow further tweaks by the community now that it’s publicly released.

With a score of 68.74 on the Hugging Face Leaderboard at the time of release, Falcon 180B received the highest score of any publicly released pre-trained LLM, surpassing Meta’s Llama 2. *

Model Size Leaderboard Score Commercial Use or License Pre-Training Length Falcon 180B 67.85 🟠 3,500B Llama 2 70B 67.87 🟠 2,000B LLaMA 65B 61.19 🔴 1,400B Falcon 40B 58.07 🟢 1,000B MPT 30B 52.77 🟢 1,000B

Open LLM Leaderboard added two new benchmarks in November 2023 and updated the table above to reflect the latest score (67.85). According to the new methodology, Falcon is equivalent to Llama 2 70B.

The quantized Falcon model maintains similar metrics across benchmarks. Results were similar when evaluating torch.float16, 8-bit, and 4-bit. Check your results on the Open LLM Leaderboard.

How to use Falcon 180B?

Falcon 180B is available in the Hugging Face ecosystem starting with Transformers version 4.33.

demo

You can easily try out the Big Falcon model (180 billion parameters!) in this space or in the playground embedded below.

Hardware requirements

I ran some tests on the required hardware to run the model in different use cases. These are not the minimum numbers, but the minimum number of configurations that I was able to access.

Type Type Memory Example Falcon 180B Training Full Fine Tuning 5120GB 8x 8x A100 80GB Falcon 180B Training LoRA with ZeRO-3 1280GB 2x 8x A100 80GB Falcon 180B Training QLoRA 160GB 2x A100 80GB Falcon 180B Inference BF16/FP16 640GB 8x A100 80GB Falcon 180B Inference GPTQ/int4 320GB 8x A100 40GB

prompt format

The basic model has no prompt format. This is not a conversational model, nor was it trained according to your instructions, so don’t expect it to produce conversational responses. A pre-trained model is a good platform for further fine-tuning, but you probably shouldn’t use it as is. The conversation structure of the chat model is very simple.

System: Add optional system prompts here. User: This is user input. Falcon: This is what the model generates. User: This may be a second turn input. Falcon: etc.

transformers

With the release of Transformers 4.33, you can now use your Falcon 180B to take advantage of all the tools in the HF ecosystem, including:

Training and inference scripts and samples Secure file format (safe tensor) Integration with tools such as bit-sandbytes (4-bit quantization), PEFT (parameter efficient fine-tuning), and GPTQ-assisted generation (also known as “speculative decoding”) Support for RoPE scaling for larger context lengths Rich and powerful generation parameters

To use the model, you must agree to its license and terms of use. Please make sure you are logged into your Hugging Face account and that you are using the latest version of the transformer.

pip install –upgrade transformer, huggingface-cli login

bfloat16

This is how to use the basic model with bfloat16. Since the Falcon 180B is a large model, please consider the hardware requirements summarized in the table above.

from transformer import AutoTokenizer, AutoModelForCausalLM

import transformer

import Torch model ID = “Tihuae/Falcon-180B”

tokenizer = AutoTokenizer.from_pretrained(model_id) model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16, device_map=“Auto”) prompt = “My name is Pedro and I live here.”

inputs = tokenizer(prompt, return_tensors=“pt”). To (“Cuda”) output = model.generate( input_ids=inputs(“input_ids”), attention_mask=input(“Caution mask”), do_sample=truthtemperature =0.6top_p=0.9max_new_tokens=50) output = output(0). To (“CPU”)

print(tokenizer.decode(output))

This can produce output similar to the following:

My name is Pedro, I’m 25 years old and live in Portugal. I’m a graphic designer, but I also have a passion for photography and video. I love to travel and am always looking for new adventures. I love meeting new people and exploring new places.

8-bit and 4-bit using bit sand bite

The 8-bit and 4-bit quantized versions of the Falcon 180B have very little difference in evaluation from the bfloat16 reference. This is very good news for inference, as we can confidently use the quantized version to reduce hardware requirements. However, keep in mind that 8-bit inference is much faster than running the model on 4-bit.

To use quantization, simply install the bitsandbytes library and enable the corresponding flag when loading your model.

model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16, load_in_8bit=truthdevice map =“Auto”,)

chat model

As mentioned earlier, the version of the model that was fine-tuned to follow conversations used a very simple training template. To perform chat-style reasoning, you should follow the same pattern. For reference, let’s take a look at the format_prompt function in the Chat demo. This will look like this:

surely format prompt(messages, history, system_prompt): prompt = “”

if system_prompt: prompt += f “System: {system prompt}\n”

for User prompts, bot responses in History: Prompt += f “User: {user prompt}\n”

prompt += f”Falcon: {bot_response}\n”

prompt += f “User: {message}\nFalcon:”

return prompt

As you can see, interactions from the user and responses by the model are preceded by the User: and Falcon: delimiters. Concatenate them to form a prompt containing the entire history of the conversation. You can provide system prompts to adjust the generation style.

additional resources

Acknowledgment

Releasing a model like this with support and evaluation within the ecosystem would not be possible without the contributions of many community members, including Clémentine and Eleuther evaluation harnesses for LLM evaluation. Loubna and BigCode for code evaluation. Reasoning support by Nicolas. Lysandre, Matt, Daniel, Amy, Joan, and Arthur for integrating the Falcon into Transformers. Thanks to Baptiste and Patrick for providing the open source demo. Thanks to Thom, Lewis, TheBloke, Nouamane, and Tim Dettmers for multiple contributions to solving this problem. Finally, we would like to thank HF Cluster for enabling us to perform the LLM evaluation and providing a free open-source inferencing demo of the model.