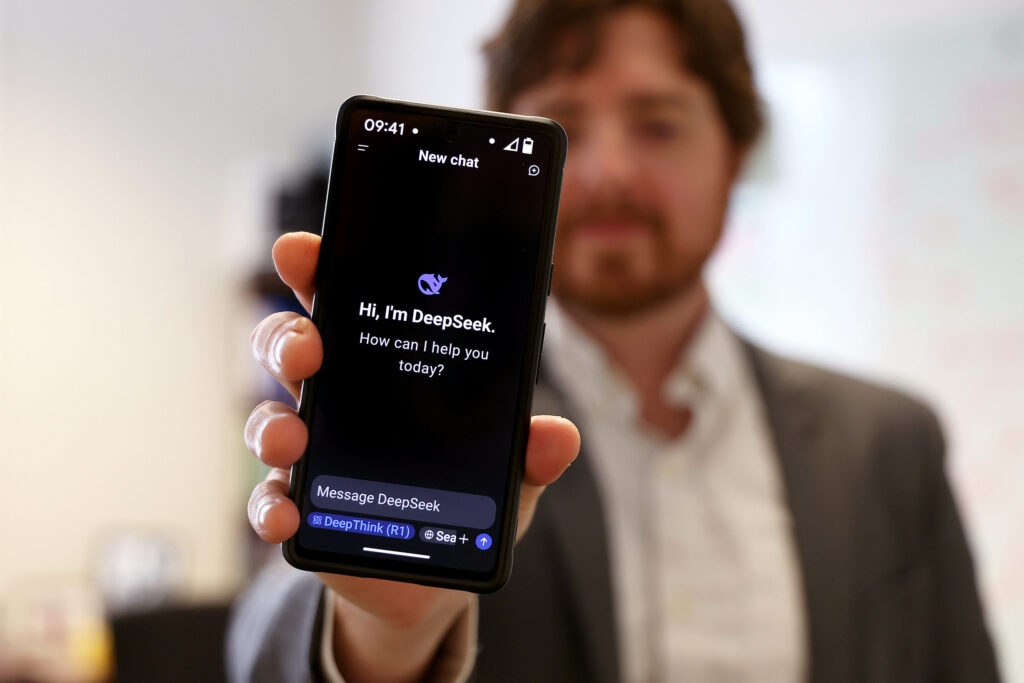

About a week ago, a little-known Chinese technology company called DeepSeek quietly debuted an artificial intelligence app. What happened next was not quiet.

U.S. technology stocks reeled, losing billions of dollars in value. Why? DeepSeek’s AI was developed and trained on the cheap, for pennies on the dollar compared with the vast sums U.S. AI companies have poured into research and development. Experts say DeepSeek appears to be just as good as household names such as ChatGPT and Microsoft Copilot.

Is this a technological fluke? A shot across the computing bow? An AI anomaly?

UVA Today chatted with Michael Albert, an AI and computing expert at the University of Virginia’s Darden School of Business.

Q. First, what is DeepSeek?

A. DeepSeek is a Chinese AI lab founded by a hedge fund in China. Perhaps uniquely among commercial labs other than Meta, DeepSeek has primarily released its models as open source. Unlike Meta's models, they are truly open source and can be used by anyone for commercial purposes. The lab has released several families of models, each named DeepSeek followed by a version number.

The recent excitement is about the release of a new model called DeepSeek-R1. DeepSeek-R1 is a modified version of the DeepSeek-V3 model that has been trained to reason using “chain-of-thought.” In simple terms, this approach teaches a model to show its work by explicitly reasoning about the prompt before answering. The same approach powers OpenAI's GPT o1, currently the best model for mathematics, science, and programming questions. DeepSeek-R1 is such an exciting model because it is fully open source and compares quite favorably with GPT o1.

Q. Why did so many people in the tech world take notice of the company?

A. There are two reasons for the excitement around DeepSeek-R1 this week. First, the fact that a Chinese company working with a much smaller compute budget (compared with $100 million for OpenAI's GPT-4) was able to achieve a state-of-the-art model is seen as a potential threat to U.S. dominance in AI.

However, the purported training efficiency seems to have come more from applying good model engineering practices than from fundamental advances in AI technology. There do not seem to be any major new insights that led to the more efficient training.

The second cause of excitement is that this model is open source. In other words, if it is deployed efficiently on your own hardware, the cost of use is much lower than using GPT o1 directly from OpenAI. This opens up new uses for these models that were not possible with closed-weight models, such as OpenAI's, because of terms of use or generation costs.

Q. Investors have been a bit cautious about U.S.-based AI because of the enormous cost of chips and computing power. Is DeepSeek’s AI model mostly hype or a game changer?

A. DeepSeek-R1 is not a fundamental advance in AI technology. It is an interesting, incremental advance in training efficiency. However, it was always going to be more efficient to recreate something like GPT o1 than to train it for the first time. In reality, the main cost of these models is incurred when they are generating new text for users, not during training. DeepSeek-R1 seems to be only a small advance as far as generation efficiency goes. The real seismic shift is that this model is fully open source.

This does not mean that China will automatically dominate the United States in AI technology. In December 2023, a French company named Mistral AI released an open-source model, Mixtral 8x7B. However, closed-source models adopted many of the insights from Mixtral 8x7B and improved. Since then, Mistral AI has been a relatively minor player in the foundation model space.

Q. The United States is trying to control AI by restricting the availability of powerful computing chips to countries like China. If AI can be done cheaply and without expensive chips, what does that mean for American dominance in the technology?

A. I don’t think DeepSeek-R1 means that AI can be trained cheaply and without expensive chips. What it has demonstrated is that previous training methods were somewhat inefficient. This means that the next round of models from U.S. companies will be trained more efficiently and will achieve even better performance, rather than stagnating.