Published March 12, 2025

Author: Carolina Parada

Introducing Gemini Robotics, our Gemini 2.0-based model designed for robotics

At Google DeepMind, we’ve been making progress in how our Gemini models solve complex problems through multimodal reasoning across text, images, audio and video. So far, however, those abilities have been largely confined to the digital realm. For AI to be useful and helpful to people in the physical realm, it has to demonstrate “embodied” reasoning: the humanlike ability to comprehend and react to the world around us, and to take action safely to get things done.

Today, we are introducing two new AI models, based on Gemini 2.0, which lay the foundation for a new generation of helpful robots.

The first is Gemini Robotics, an advanced vision-language-action (VLA) model built on Gemini 2.0 with the addition of physical actions as a new output modality for directly controlling robots. The second is Gemini Robotics-ER, a Gemini model with advanced spatial understanding, enabling roboticists to run their own programs using Gemini’s embodied reasoning (ER) abilities.

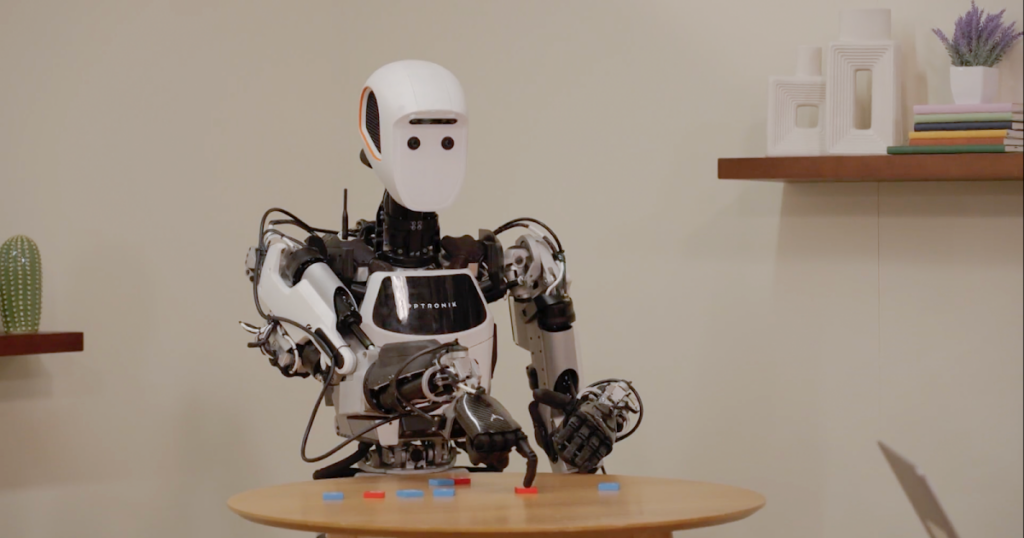

Both of these models enable a variety of robots to perform a wider range of real-world tasks than ever before. As part of our efforts, we’re partnering with Apptronik to build the next generation of humanoid robots with Gemini 2.0. We’re also working with a select number of trusted testers to guide the future of Gemini Robotics-ER.

We look forward to exploring our models’ capabilities and continuing to develop them on the path to real-world applications.

Gemini Robotics: Our Most Advanced Vision-Language-Action Model

To be useful and helpful to people, AI models for robotics need three principal qualities. They have to be general, meaning they can adapt to different situations. They have to be interactive, meaning they can understand and respond quickly to instructions or to changes in their environment. And they have to be dexterous, meaning they can do the kinds of things people generally do with their hands and fingers, such as carefully manipulating objects.

While our previous work demonstrated progress in these areas, Gemini Robotics represents a substantial step in performance on all three axes, getting us closer to truly general-purpose robots.

Generality

Gemini Robotics leverages Gemini’s world understanding to generalize to novel situations and solve a wide variety of tasks out of the box, including tasks it has never seen in training. Gemini Robotics is also adept at dealing with new objects, diverse instructions, and new environments. In our technical report, we show that Gemini Robotics more than doubles performance on a comprehensive generalization benchmark compared to other state-of-the-art vision-language-action models.

A demonstration of Gemini Robotics’ world understanding.

Interactivity

To operate in our dynamic, physical world, robots must be able to interact seamlessly with people and their surrounding environment, and adapt to changes on the fly.

Because it’s built on a foundation of Gemini 2.0, Gemini Robotics is intuitively interactive. It taps into Gemini’s advanced language understanding to understand and respond to commands phrased in everyday, conversational language and in many different languages.

It can understand and respond to a much broader set of natural language instructions than our previous models, adapting its behavior to your input. It also continuously monitors its surroundings, detects changes to its environment or instructions, and adjusts its actions accordingly. This kind of control, or “steerability,” can help people collaborate with robot assistants in a range of settings, from the home to the workplace.

If an object slips from its grasp, or someone moves an item around, Gemini Robotics quickly replans and carries on.

Dexterity

The third key pillar of building helpful robots is acting with dexterity. Many everyday tasks that humans perform effortlessly require surprisingly fine motor skills and are still too difficult for robots. By contrast, Gemini Robotics can tackle extremely complex, multi-step tasks that require precise manipulation, such as folding origami or packing a snack into a Ziploc bag.

Gemini Robotics displays advanced levels of dexterity

Multiple Embodiments

Finally, because robots come in all shapes and sizes, Gemini Robotics was designed to adapt easily to different robot types. We trained the model primarily on data from the bi-arm robotic platform ALOHA 2, but we also demonstrated that it could control a bi-arm platform based on the Franka arms used in many academic labs. Gemini Robotics can even be specialized for more complex embodiments, such as the humanoid Apollo robot developed by Apptronik.

Gemini Robotics works with different types of robots

Enhancing Gemini’s World Understanding

Alongside Gemini Robotics, we’re introducing an advanced vision-language model called Gemini Robotics-ER (short for “embodied reasoning”). This model enhances Gemini’s understanding of the world in the ways robotics requires, focusing especially on spatial reasoning, and allows roboticists to connect it with their existing low-level controllers.

Gemini Robotics-ER improves Gemini 2.0’s existing abilities, such as pointing and 3D detection, by a large margin. By combining spatial reasoning with Gemini’s coding abilities, Gemini Robotics-ER can instantiate entirely new capabilities on the fly. For example, when shown a coffee mug, the model can intuit an appropriate two-finger grasp for picking it up by the handle and a safe trajectory for approaching it.
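To connect a pointing output to a low-level controller, a 2D point usually has to be lifted into 3D robot coordinates. The sketch below illustrates one common way to do that with the standard pinhole camera model, assuming the model has returned a pixel on the mug handle and the robot has a calibrated depth camera. The `Intrinsics` values, the pixel, and the depth are hypothetical, and this is a generic geometry step, not a description of how Gemini Robotics-ER works internally.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics in pixels (hypothetical calibration values)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def backproject(u: float, v: float, depth_m: float, k: Intrinsics) -> tuple[float, float, float]:
    """Lift pixel (u, v) with metric depth into a 3D point in the camera frame.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, Z = depth.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


if __name__ == "__main__":
    cam = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    # Hypothetical "pointed" pixel on a mug handle, 0.5 m from the camera.
    print(backproject(440.0, 300.0, 0.5, cam))
```

The resulting camera-frame point would still need a camera-to-robot transform before a grasp planner could use it; that transform depends on each embodiment's calibration.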

Gemini Robotics-ER can perform all the steps necessary to control a robot right out of the box, including perception, state estimation, spatial understanding, planning, and code generation. In such an end-to-end setting, the model achieves a 2x-3x higher success rate than Gemini 2.0. And where code generation is not sufficient, Gemini Robotics-ER can even tap into the power of in-context learning, following the patterns of a handful of human demonstrations to provide a solution.

Gemini Robotics-ER excels at embodied reasoning capabilities, including detecting objects, pointing at object parts, finding corresponding points, and detecting objects in 3D.

Responsibly Advancing AI and Robotics

As we explore the continuing potential of AI and robotics, we’re taking a layered, holistic approach to addressing safety in our research, from low-level motor control to high-level semantic understanding.

The physical safety of robots and the people around them is a longstanding, foundational concern in the science of robotics. That’s why roboticists rely on classic safety measures such as avoiding collisions, limiting the magnitude of contact forces, and ensuring the dynamic stability of mobile robots. Gemini Robotics-ER can be interfaced with these “low-level” safety-critical controllers, which are specific to each particular embodiment. Building on Gemini’s core safety features, we enable Gemini Robotics-ER models to understand whether a potential action is safe to perform in a given context, and to generate appropriate responses.
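One of the classic low-level measures named above, limiting the magnitude of contact forces, can be pictured as a filter that sits between any high-level policy and the actuators. The following is a minimal, generic sketch rather than DeepMind’s controller; the function name `clamp_force` and the 20 N limit are hypothetical. It scales a commanded 3D force vector down to a maximum norm while preserving its direction, so the limit holds no matter what a higher layer requests.

```python
import math

MAX_CONTACT_FORCE_N = 20.0  # hypothetical per-embodiment limit, in newtons


def clamp_force(
    force: tuple[float, float, float],
    max_norm: float = MAX_CONTACT_FORCE_N,
) -> tuple[float, float, float]:
    """Scale a commanded force vector so its Euclidean norm never exceeds max_norm.

    Direction is preserved; commands already within the limit pass through unchanged.
    """
    if max_norm <= 0:
        raise ValueError("max_norm must be positive")
    norm = math.hypot(*force)  # Euclidean norm of the 3D vector
    if norm <= max_norm:
        return force
    scale = max_norm / norm
    return (force[0] * scale, force[1] * scale, force[2] * scale)


if __name__ == "__main__":
    # A 50 N request along (3, 4, 0) is scaled down to the 20 N limit, same direction.
    print(clamp_force((30.0, 40.0, 0.0)))
```

Keeping the limit in a small, embodiment-specific layer like this means it does not depend on the high-level model behaving correctly, which is the point of a layered approach.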

To advance robotics safety research across academia and industry, we are also releasing a new dataset to evaluate and improve semantic safety in embodied AI and robotics. In previous work, we showed how a Robot Constitution inspired by Isaac Asimov’s Three Laws of Robotics could help prompt an LLM to select safer tasks for robots. We have since developed a framework for automatically generating data-driven constitutions (rules expressed directly in natural language) to steer a robot’s behavior. This framework allows people to create, modify, and apply constitutions to develop robots that are safer and more aligned with human values. Finally, the new ASIMOV dataset will help researchers rigorously measure the safety implications of robotic actions in real-world scenarios.

To further assess the societal implications of our work, we collaborate with experts in our Responsible Development and Innovation team, as well as our Responsibility and Safety Council, an internal review group committed to ensuring we develop AI applications responsibly. We also consult with external specialists on the particular challenges and opportunities presented by embodied AI in robotics applications.

In addition to our partnership with Apptronik, our Gemini Robotics-ER model is also available to trusted testers including Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools. We look forward to exploring our models’ capabilities and continuing to develop AI for the next generation of more helpful robots.

Acknowledgments

This work was developed by the Gemini Robotics team. For a complete list of authors and acknowledgements, see our technical report.